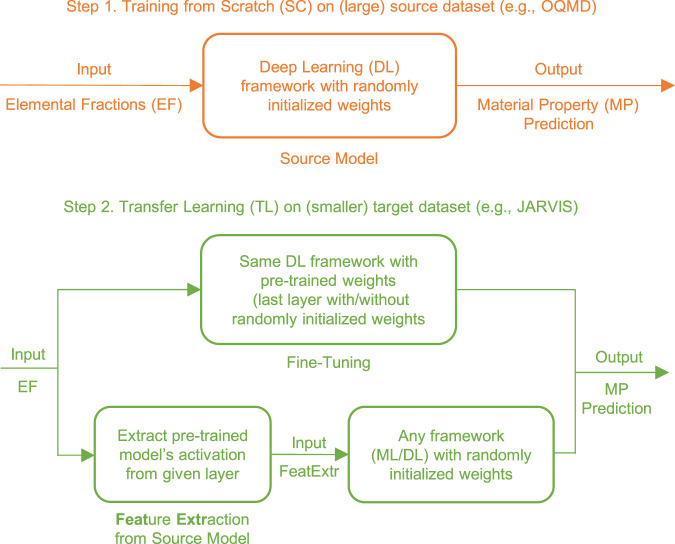

Fig. 1. The proposed cross-property deep-transfer-learning approach.

First, a deep neural network framework (e.g., ElemNet) is trained from scratch, with randomly initialized network weights, on a large DFT-computed source dataset (e.g., OQMD) using elemental fractions as input. We refer to the model trained on the source dataset as the source model. Next, the same architecture (ElemNet) is trained on smaller target datasets (e.g., JARVIS) with different properties using cross-property transfer learning, which can be done in two ways: (1) the model parameters are initialized with those of the source model and then fine-tuned on the corresponding target dataset; or (2) the source model is used to extract features for the target dataset, in the form of activations from each layer of the source model, which serve as input for building new ML and DL models (also called the freezing method if the features are extracted only from the last layer).
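The two transfer routes described above can be illustrated with a minimal, dependency-free sketch. Everything in it is an assumption made for illustration: `TinyMLP` is a toy stand-in rather than ElemNet, `make_data` generates synthetic "elemental fractions" and linear properties rather than OQMD or JARVIS data, and the layer sizes, learning rate, and epoch counts are arbitrary. Concatenating the hidden-layer activations is one possible reading of "activations from each layer".

```python
import copy
import random


class TinyMLP:
    """Toy stand-in for ElemNet (hypothetical): ReLU hidden layers, linear output."""

    def __init__(self, sizes, seed=0):
        rng = random.Random(seed)
        # One (weights, biases) pair per layer, randomly initialized.
        self.layers = [
            ([[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])
        ]

    def activations(self, x):
        """Return the activations of every layer; the last entry is the output."""
        acts, h = [], x
        last = len(self.layers) - 1
        for i, (W, b) in enumerate(self.layers):
            z = [sum(w * v for w, v in zip(row, h)) + bi for row, bi in zip(W, b)]
            h = z if i == last else [max(0.0, v) for v in z]
            acts.append(h)
        return acts

    def predict(self, x):
        return self.activations(x)[-1][0]

    def train(self, X, y, epochs, lr=0.01):
        """Plain per-sample SGD on squared error."""
        for _ in range(epochs):
            for x, t in zip(X, y):
                acts = self.activations(x)
                inputs = [x] + acts[:-1]
                grad = [acts[-1][0] - t]  # gradient w.r.t. the output
                for i in reversed(range(len(self.layers))):
                    W, b = self.layers[i]
                    # Gradient w.r.t. this layer's input, taken before the update.
                    grad_in = [sum(W[j][k] * grad[j] for j in range(len(W)))
                               for k in range(len(inputs[i]))]
                    for j, g in enumerate(grad):
                        for k, v in enumerate(inputs[i]):
                            W[j][k] -= lr * g * v
                        b[j] -= lr * g
                    if i > 0:  # back through the previous layer's ReLU
                        grad = [g if a > 0 else 0.0
                                for g, a in zip(grad_in, inputs[i])]


def make_data(n, weights, seed):
    """Synthetic data (assumption): random fractions summing to 1, linear property."""
    rng = random.Random(seed)
    X = []
    for _ in range(n):
        raw = [rng.random() for _ in weights]
        total = sum(raw)
        X.append([r / total for r in raw])
    y = [sum(w * f for w, f in zip(weights, x)) for x in X]
    return X, y


def mse(model, X, y):
    return sum((model.predict(x) - t) ** 2 for x, t in zip(X, y)) / len(X)


def extract_features(model, x):
    """Concatenate the hidden-layer activations of the (unchanged) source model."""
    hidden = model.activations(x)[:-1]
    return [a for layer in hidden for a in layer]


# Large source dataset and small target dataset with a different property.
X_src, y_src = make_data(100, [1.0, -2.0, 0.5, 3.0], seed=1)
X_tgt, y_tgt = make_data(20, [0.5, -1.0, 2.0, 1.0], seed=2)

# Source model: trained from scratch with randomly initialized weights.
source = TinyMLP([4, 8, 8, 1], seed=0)
source.train(X_src, y_src, epochs=30)

# Route 1: initialize from the source model's parameters, then fine-tune.
finetuned = copy.deepcopy(source)
finetuned.train(X_tgt, y_tgt, epochs=50)

# Route 2: use source-model activations as input features for a new model
# (here a single linear layer; any ML or DL model could take its place).
F_tgt = [extract_features(source, x) for x in X_tgt]
head = TinyMLP([len(F_tgt[0]), 1], seed=1)
head.train(F_tgt, y_tgt, epochs=50)

print("fine-tuned target MSE:", mse(finetuned, X_tgt, y_tgt))
print("feature-extraction target MSE:", mse(head, F_tgt, y_tgt))
```

In route 1 every weight of the copied network remains trainable, whereas in route 2 the source model is only queried for activations and never updated. Restricting `extract_features` to the last hidden layer would correspond to the freezing variant mentioned above.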