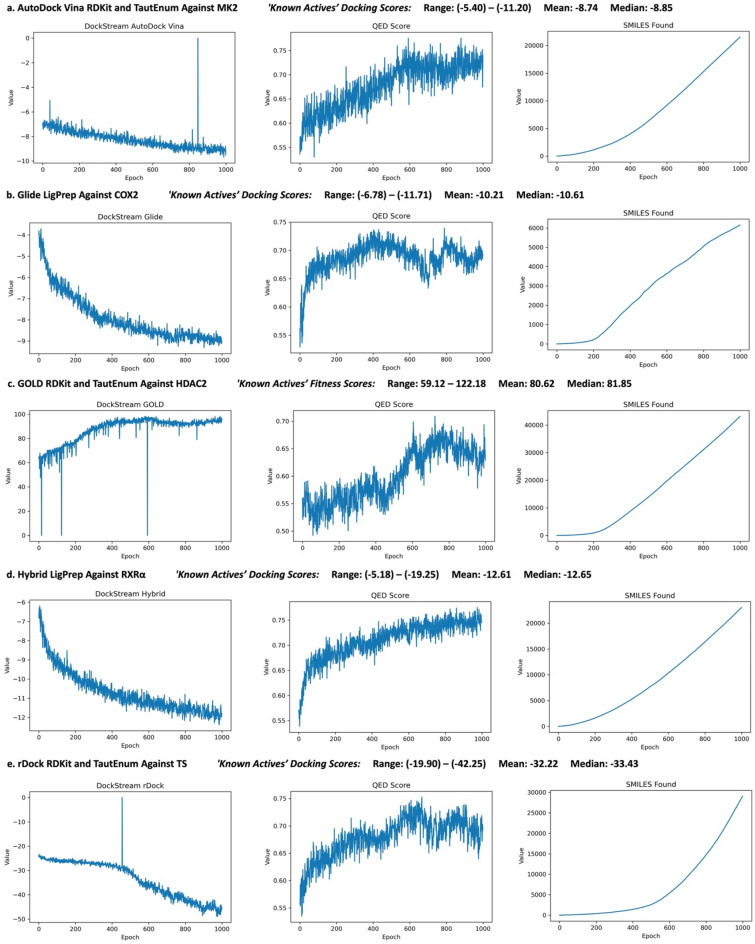

Fig. 4.

REINVENT-DockStream agent reinforcement learning training progress for selected experiments: a AutoDock Vina with RDKit and TautEnum against MK2 (PDB ID: 3KC3), b Glide with LigPrep against COX2 (PDB ID: 1CX2), c GOLD with RDKit and TautEnum against HDAC2 (PDB ID: 3MAX), d Hybrid with LigPrep against RXRα (PDB ID: 2P1RT), and e rDock with RDKit and TautEnum against TS (PDB ID: 1I00) (see Additional file 1: Figs. S16–33 for training plots of all experiments). Docking scores (fitness scores for GOLD) of the ‘Known Actives’ from the DEKOIS 2.0 dataset are shown [26]. The optimization of the docking and QED scores, and the number of SMILES found, are also shown. Each epoch proposes one batch of 128 compounds. Lower docking scores are better for AutoDock Vina, Glide, Hybrid, and rDock, whereas higher fitness scores are better for GOLD; the direction of each docking score optimization reflects this difference. ‘SMILES found’ is the cumulative number of unique compounds proposed that pass a total score threshold. If every epoch generates only unique, valid, and favourable compounds, the plot is linear.
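To make the ‘SMILES found’ metric concrete, here is a minimal sketch of how such a cumulative count could be computed. It assumes each epoch yields (SMILES, total score) pairs, that invalid molecules appear as `None`, that a higher total score is better, and that "passing" means `total_score >= threshold`. The function name, the threshold value, and the placeholder strings are hypothetical and are not taken from REINVENT or DockStream.

```python
from typing import Iterable, List, Optional, Tuple

# One epoch = one batch of (SMILES or None if invalid, total score) pairs.
Batch = List[Tuple[Optional[str], float]]


def cumulative_smiles_found(epochs: Iterable[Batch], threshold: float) -> List[int]:
    """Hypothetical helper: after each epoch, return how many unique valid
    SMILES have so far reached a total score >= threshold (assumed rule)."""
    seen = set()  # unique SMILES that have passed the threshold so far
    counts = []
    for batch in epochs:
        for smiles, total_score in batch:
            # Skip invalid molecules; duplicates are absorbed by the set.
            if smiles is not None and total_score >= threshold:
                seen.add(smiles)
        counts.append(len(seen))
    return counts


BATCH_SIZE = 128  # batch size stated in the caption

# Ideal case: every compound in every epoch is unique, valid, and favourable.
# The "mol_e_i" strings are placeholders standing in for real SMILES.
ideal = [
    [(f"mol_{e}_{i}", 0.9) for i in range(BATCH_SIZE)]
    for e in range(3)
]

print(cumulative_smiles_found(ideal, threshold=0.5))  # [128, 256, 384]
```

In the ideal case the count grows by exactly one batch size per epoch, which is why the curve is linear; repeated, invalid, or below-threshold compounds make the curve flatten.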