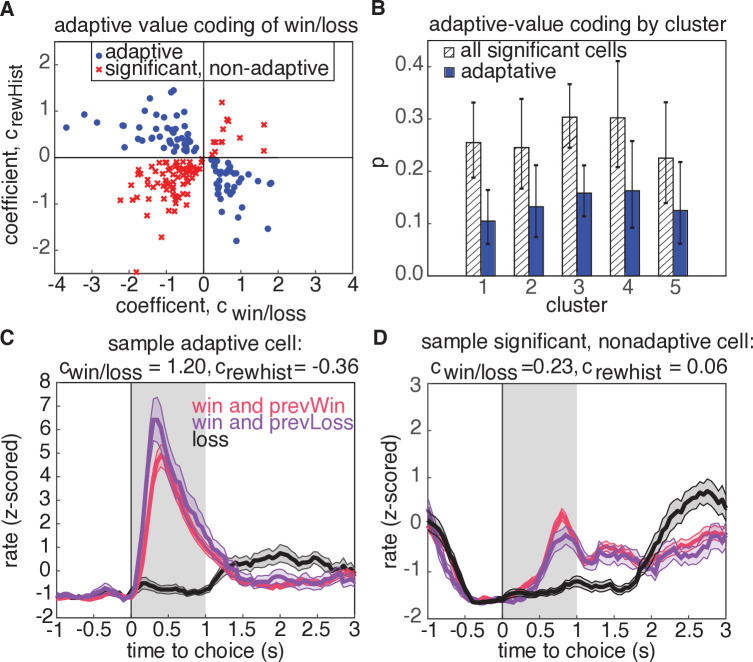

Figure 6. Adaptive value coding analysis: linear regression of firing rates in the 1 s period following reward delivery against current and previous reward outcomes.

(A) Regression coefficients for the current reward outcome (parameterized as win = 1 and loss = -1) and for the previous trial outcome. 180 neurons had significant coefficients for both regressors (t-test), of which 81 had coefficients with opposite signs, consistent with adaptive value coding (blue dots). The remaining neurons respond differentially to reward history, but in a manner inconsistent with adaptive value coding (red crosses). (B) Probability that a model with significant regressors for both current and past reward outcomes would come from a given cluster. Shaded regions denote all models from panel A; blue bars show the probability for adaptive neurons only. Error bars are the 95% confidence interval of the mean for a binomial distribution with observed counts from each cluster. (C) Example cell demonstrating adaptive value coding. The shaded gray region denotes the time window used to compute the mean firing rate for the regression. (D) Example cell showing significant modulation by reward history, but with a relationship inconsistent with adaptive value coding.
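
The per-neuron regression described in panel A can be sketched roughly as follows. This is a minimal illustration rather than the authors' analysis code: the function names (`fit_reward_history`, `classify`), the inclusion of an intercept, the significance threshold `alpha = 0.05`, the coding of the previous outcome as +1/-1, and the simulated data are all assumptions made for the example.

```python
import numpy as np
from scipy import stats


def fit_reward_history(rates, outcomes):
    """OLS fit of rate[t] = b0 + b_cur * outcome[t] + b_prev * outcome[t-1].

    rates:    mean firing rate per trial in the post-reward window
    outcomes: +1 for a win, -1 for a loss, one entry per trial
    Returns the coefficients and their two-sided t-test p-values.
    """
    rates = np.asarray(rates, dtype=float)
    outcomes = np.asarray(outcomes, dtype=float)

    y = rates[1:]  # the first trial has no previous outcome, so drop it
    X = np.column_stack([np.ones(len(y)), outcomes[1:], outcomes[:-1]])

    beta, _, _, _ = np.linalg.lstsq(X, y, rcond=None)

    # Standard errors from the residual variance and (X'X)^-1.
    resid = y - X @ beta
    dof = len(y) - X.shape[1]
    sigma2 = resid @ resid / dof
    se = np.sqrt(sigma2 * np.diag(np.linalg.inv(X.T @ X)))

    t_stat = beta / se
    p_values = 2.0 * stats.t.sf(np.abs(t_stat), dof)
    return beta, p_values


def classify(beta, p_values, alpha=0.05):
    """Label a neuron from its current (index 1) and previous (index 2) terms.

    alpha is an assumed threshold, not a value taken from the caption.
    """
    if not (p_values[1] < alpha and p_values[2] < alpha):
        return "not significant for both"
    # Opposite signs are the pattern the caption calls adaptive value coding.
    return "adaptive" if beta[1] * beta[2] < 0 else "non-adaptive"


# Simulated neuron (hypothetical data): fires more after a win, and less
# when the previous trial was a win.
rng = np.random.default_rng(0)
outcomes = rng.choice([-1, 1], size=400)
previous = np.concatenate([[0], outcomes[:-1]])
rates = 10.0 + 3.0 * outcomes - 1.5 * previous + rng.normal(0.0, 1.0, size=400)

beta, p_values = fit_reward_history(rates, outcomes)
label = classify(beta, p_values)
```

Under these assumptions, a neuron is labeled adaptive only when both coefficients are significant and their product is negative, which mirrors the blue-dot versus red-cross split in panel A.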