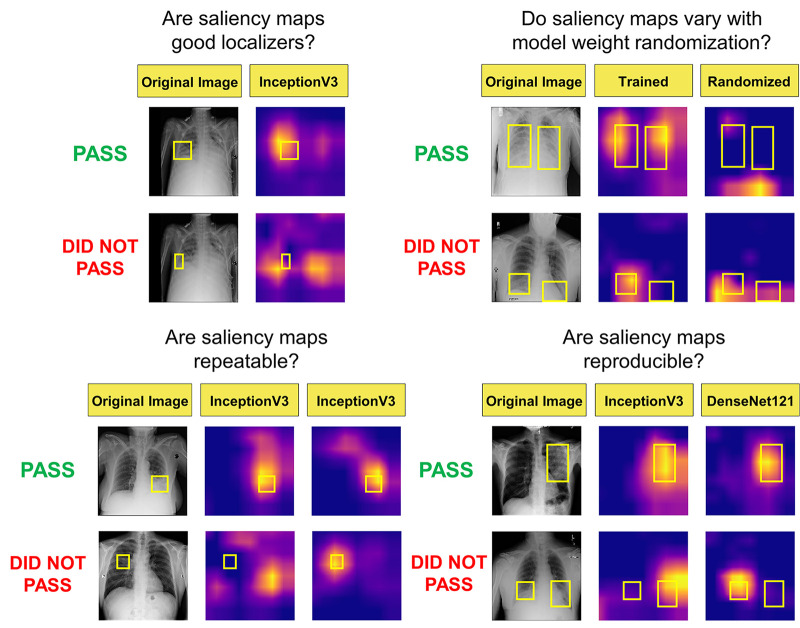

Figure 1:

Visualization of the questions addressed in this work. The top rows of images and saliency maps show ideal, less commonly observed, high-performing examples (“pass”), while the bottom rows show realistic, more commonly observed, poor-performing examples (“did not pass”). First, we examined whether saliency maps are good localizers, as measured by how much the maps overlap with pixel-level segmentations or ground-truth bounding boxes. Next, we evaluated whether saliency maps changed when the trained model weights were randomized, which indicates how closely the maps reflect model training. Then, we generated saliency maps from separately trained InceptionV3 models to assess their repeatability. Finally, we assessed reproducibility by calculating the similarity of saliency maps generated from different models (InceptionV3 and DenseNet-121) trained on the same data.
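As a rough illustration of the two kinds of comparison described in the caption, the sketch below scores the overlap between a saliency map and a ground-truth mask, and the similarity between two saliency maps. It is a minimal sketch under stated assumptions: the caption does not name the metrics used, so intersection-over-union and Pearson correlation are stand-ins chosen for simplicity, and the function names `saliency_iou` and `map_correlation` and the threshold of 0.5 are hypothetical.

```python
import numpy as np


def saliency_iou(saliency, mask, threshold=0.5):
    """IoU between a binarized saliency map and a ground-truth mask.

    Assumption: the map is min-max normalized to [0, 1] and binarized at
    `threshold`; this is an illustrative choice, not the paper's metric.
    """
    saliency = np.asarray(saliency, dtype=float)
    mask = np.asarray(mask, dtype=bool)
    value_range = saliency.max() - saliency.min()
    if value_range == 0:
        # A constant map carries no localization information.
        return 0.0
    normalized = (saliency - saliency.min()) / value_range
    binarized = normalized >= threshold
    union = np.logical_or(binarized, mask).sum()
    if union == 0:
        return 0.0
    intersection = np.logical_and(binarized, mask).sum()
    return float(intersection / union)


def map_correlation(map_a, map_b):
    """Pearson correlation between two flattened saliency maps.

    Assumption: used here as a simple stand-in similarity score for the
    randomization, repeatability, and reproducibility comparisons.
    """
    a = np.asarray(map_a, dtype=float).ravel()
    b = np.asarray(map_b, dtype=float).ravel()
    if a.std() == 0 or b.std() == 0:
        # Correlation is undefined for a constant map; report no similarity.
        return 0.0
    return float(np.corrcoef(a, b)[0, 1])


# Toy example: a 4x4 map that highlights the central 2x2 block.
saliency = np.zeros((4, 4))
saliency[1:3, 1:3] = 1.0
centered_mask = saliency.astype(bool)
corner_mask = np.zeros((4, 4), dtype=bool)
corner_mask[0:2, 0:2] = True
```

In this toy setup, `saliency_iou(saliency, centered_mask)` scores a perfectly localized map and `saliency_iou(saliency, corner_mask)` a mostly misplaced one. The same `map_correlation` call could compare maps before and after weight randomization, across separately trained copies of one architecture, or across two architectures.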