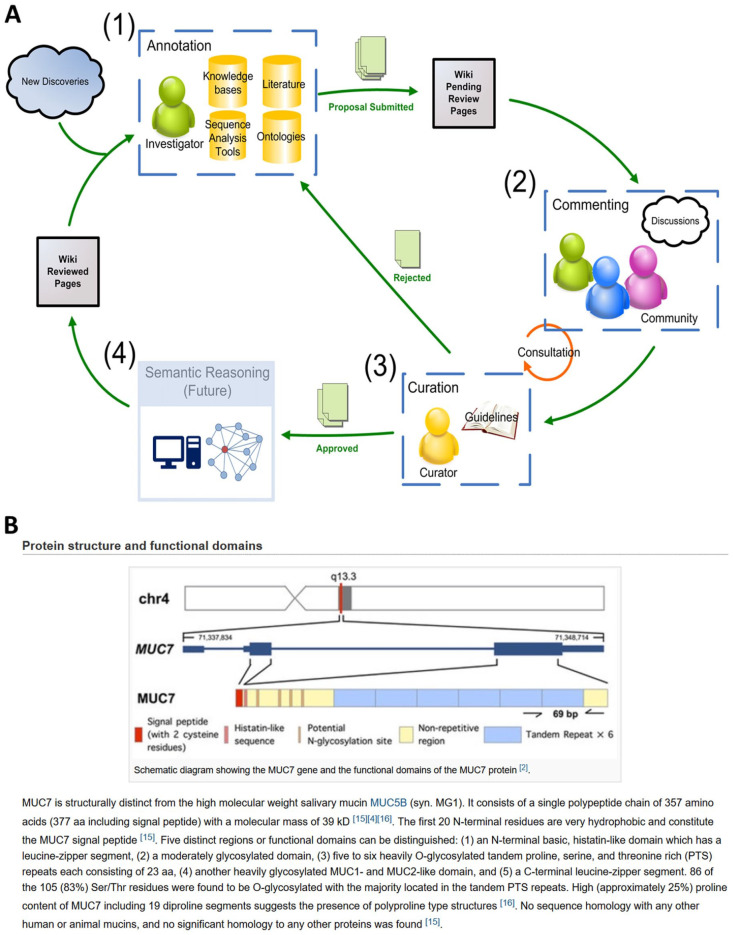

Figure 3.

Crowdsourcing opportunities available in the Human Salivary Proteome (HSP) Wiki. (A) Workflow for the annotation and curation process in the HSP Wiki, which leverages the collective expertise of the research community. (1) A researcher who has just finished a study adds the findings to the Wiki as a proposal. (2) After the proposal is submitted, community members have the opportunity to register their feedback. (3) A curator with expert knowledge of the subject matter reviews the proposal, taking into consideration the feedback gathered from the community. The curator may approve or reject all or parts of the proposal. If changes are rejected, the researcher can revise and resubmit them. If approved, the new content is incorporated into the Wiki. (4) As an optional additional step, the annotations in the Wiki can be fed to machine learning tools to discover implicit relationships among entities. A curator must examine the output of these tools to ensure that the machine-generated knowledge is accurate before it is incorporated into the Wiki. (B) As demonstrated in this screenshot of a sample expert commentary page, users with recognized expertise on certain proteins are invited to provide descriptions, including unique properties of these proteins in saliva.