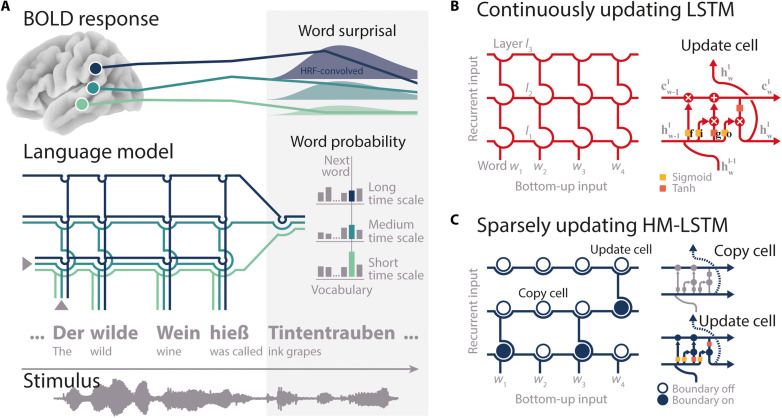

Fig. 1. Modeling neural speech prediction with artificial neural networks.

(A) Bottom: Participants listened to a story (gray waveform) during fMRI. Middle: On the basis of the preceding semantic context (“The wild wine was called”), a language model assigned each word in a large vocabulary a probability of being the next word (gray bars). The probability of the actual next word (“ink grapes”; colored bars) was read out from each layer of the model separately, with higher layers (darker blue colors) accumulating information across longer semantic time scales. Top: Word probabilities were transformed to surprisal, convolved with the hemodynamic response function (HRF; bell shapes), and mapped onto temporoparietal BOLD time series (colored lines). (B) Two language models were trained. With each new word-level input, the “continuously updating” LSTM (45) combines “old” recurrent long-term and short-term memory states with “new” bottom-up linguistic input at each layer l, so that information flows continuously to higher layers with each incoming word. f, forget gate; i, input gate; g, candidate state; o, output gate. (C) The “sparsely updating” HM-LSTM (42) was designed to learn the hierarchical structure of text. An upper layer keeps its representation of context unchanged (copy mechanism) until a boundary marks the end of an event on the lower layer, at which point information is passed to the upper layer (update mechanism). Networks are shown unrolled over the sequence of words for illustration only.
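The top row of panel (A) can be illustrated with a minimal sketch of how word probabilities might be turned into a BOLD-like regressor. This is not the authors' pipeline: the log base (bits), the double-gamma HRF shape parameters (6 and 16, ratio 1/6), the sampling grid, and the function names `surprisal`, `double_gamma_hrf`, and `surprisal_regressor` are all assumptions made for illustration.

```python
import math
import numpy as np

def surprisal(probs):
    """Surprisal in bits, -log2 p(word | context). Log base is an assumption."""
    p = np.asarray(probs, dtype=float)
    return -np.log2(p)

def double_gamma_hrf(t, peak=6.0, undershoot=16.0, ratio=1.0 / 6.0):
    """Double-gamma HRF at times t (seconds); shape parameters are assumed."""
    t = np.asarray(t, dtype=float)

    def gamma_pdf(x, shape):
        # Gamma density with unit scale
        return x ** (shape - 1) * np.exp(-x) / math.gamma(shape)

    h = gamma_pdf(t, peak) - ratio * gamma_pdf(t, undershoot)
    return h / h.max()  # normalize so the peak equals 1

def surprisal_regressor(onsets, probs, n_scans, tr=1.0, dt=0.1):
    """Place surprisal impulses at word onsets, convolve with the HRF,
    and sample the result once per scan (every `tr` seconds)."""
    n_fine = int(round(n_scans * tr / dt))
    stick = np.zeros(n_fine)
    idx = np.round(np.asarray(onsets, dtype=float) / dt).astype(int)
    keep = idx < n_fine  # drop words that start after the last scan
    np.add.at(stick, idx[keep], surprisal(probs)[keep])
    hrf = double_gamma_hrf(np.arange(0, 32, dt))
    bold = np.convolve(stick, hrf)[:n_fine]
    step = int(round(tr / dt))
    return bold[::step][:n_scans]

# Hypothetical example: three words with made-up onsets and probabilities
onsets = [0.0, 0.4, 0.9]     # seconds
probs = [0.20, 0.05, 0.50]   # p(actual next word | context) from one layer
regressor = surprisal_regressor(onsets, probs, n_scans=30)
```

Computing one such regressor per model layer would give layer-specific predictors that can then be related to the measured BOLD time series, which is the mapping step the caption describes.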