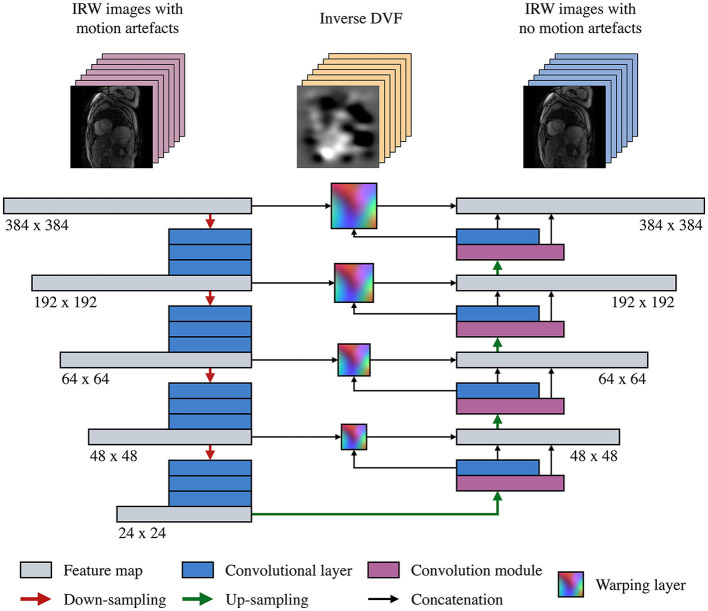

Figure 2.

Structure of the proposed motion correction convolutional neural network (MOCOnet). A stack of seven inversion recovery-weighted (IRW) images is fed into the encoder-decoder structure on a per-channel basis. At each scale, the warping layers estimate the optical flow from all channels, proceeding from coarse to fine. The last warping layer generates the inverse distance vector field (DVF), i.e., the deformation required to correct the motion artefacts, in a groupwise manner.
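
To make the role of the DVF concrete, the sketch below shows one common way a dense displacement field can be applied to an image stack: backward warping with bilinear interpolation. It is an illustrative assumption, not the MOCOnet implementation; the function names `warp_bilinear` and `warp_stack`, the `(dy, dx)` displacement convention, and the border clamping are all hypothetical choices that the caption does not specify.

```python
import math


def warp_bilinear(image, dvf):
    """Warp a 2-D image (list of rows) with a dense displacement field.

    Assumed convention (not taken from the paper): dvf[y][x] = (dy, dx),
    and output pixel (y, x) samples the input at (y + dy, x + dx).
    Sampling positions outside the image are clamped to the border.
    """
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dy, dx = dvf[y][x]
            # Clamp the sampling position to the valid image range.
            sy = min(max(y + dy, 0.0), h - 1.0)
            sx = min(max(x + dx, 0.0), w - 1.0)
            # Integer neighbours and fractional offsets for interpolation.
            y0, x0 = int(math.floor(sy)), int(math.floor(sx))
            y1, x1 = min(y0 + 1, h - 1), min(x0 + 1, w - 1)
            fy, fx = sy - y0, sx - x0
            top = (1 - fx) * image[y0][x0] + fx * image[y0][x1]
            bottom = (1 - fx) * image[y1][x0] + fx * image[y1][x1]
            out[y][x] = (1 - fy) * top + fy * bottom
    return out


def warp_stack(stack, dvfs):
    """Apply one displacement field per image of a stack (e.g. seven IRW images)."""
    return [warp_bilinear(img, dvf) for img, dvf in zip(stack, dvfs)]
```

In this sketch a zero field leaves each image unchanged, and a field of `(0, 1)` everywhere makes every output pixel take the value of its right-hand neighbour in the input. A trained network would instead predict the field and typically use a differentiable sampler so that gradients can flow through the warp.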