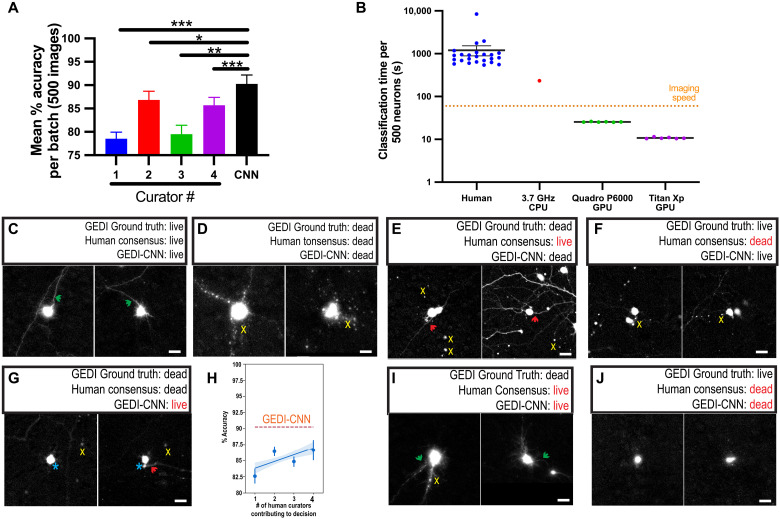

Fig. 3. GEDI-CNN has superhuman accuracy and speed at live/dead classification.

(A) Mean classification accuracy for GEDI-CNN and four human curators across four batches of 500 images, using GEDI biosensor data as ground truth [±SEM, analysis of variance (ANOVA) with Dunnett's multiple comparison test, ***P < 0.0001, **P < 0.01, and *P < 0.05]. (B) Speed of image curation by human curators versus GEDI-CNN running on a central processing unit (CPU) or graphics processing unit (GPU). Dotted line indicates average imaging speed (±SEM). (C and D) Representative cropped EGFP morphology images in which GEDI biosensor ground truth, a consensus of human curators, and GEDI-CNN all classify neurons as live (C) or dead (D). Green arrows point to neurites from the central neuron; yellow x's mark peripheral debris near the central neuron. (E to G, I, and J) Examples of neurons that elicited different classifications from the GEDI ground truth, the GEDI-CNN, and/or the human curator consensus. Green arrows point to neurites from the central neuron, and red arrows point to neurites that may belong to a neuron other than the central neuron. Yellow x's indicate peripheral debris near the central neuron. Turquoise asterisk indicates blurry fluorescence from the central neuron. (H) Number of humans needed for their consensus decisions to reach GEDI-CNN accuracy. Human ensembles are constructed by recording the modal decision across bootstrapped samples of curators, which can reduce the decision noise exhibited by individual curators. The blue line represents a linear fit to the human ensembles, and the shaded area depicts a 95% confidence interval.
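The ensemble analysis in panel H can be illustrated with a short sketch. It assumes binary live/dead labels per image for each curator, ensembles built by resampling curators with replacement and taking the per-image modal vote, and a least-squares line over ensemble size that is extrapolated to a target accuracy (such as GEDI-CNN's). The function names, the ensemble sizes, the bootstrap count, and the random tie-breaking rule are hypothetical choices for illustration, not the authors' published procedure, and the 95% confidence band is not computed here.

```python
import random
from collections import Counter


def modal_vote(labels, rng):
    """Return the most common label; ties are broken at random (an assumption)."""
    counts = Counter(labels).most_common()
    top = counts[0][1]
    tied = [label for label, c in counts if c == top]
    return rng.choice(tied)


def ensemble_accuracy(decisions, truth, k, n_boot=1000, seed=0):
    """Mean accuracy of the modal decision of k curators drawn with replacement.

    decisions: list of per-curator label lists, each aligned with `truth`.
    """
    rng = random.Random(seed)
    accs = []
    for _ in range(n_boot):
        # Bootstrap step: resample an ensemble of k curators with replacement.
        panel = [rng.choice(decisions) for _ in range(k)]
        correct = 0
        for i, true_label in enumerate(truth):
            vote = modal_vote([curator[i] for curator in panel], rng)
            if vote == true_label:
                correct += 1
        accs.append(correct / len(truth))
    return sum(accs) / len(accs)


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx


def humans_needed(decisions, truth, target_acc, sizes=(1, 3, 5, 7, 9)):
    """Extrapolate the ensemble size at which the fitted line reaches target_acc."""
    accs = [ensemble_accuracy(decisions, truth, k) for k in sizes]
    slope, intercept = fit_line(list(sizes), accs)
    if slope <= 0:
        # Adding curators does not improve accuracy, so the target is never reached.
        return float("inf")
    return (target_acc - intercept) / slope
```

Odd ensemble sizes are used by default so that binary votes cannot tie; the random tie-break only matters if even sizes are passed in.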