Abstract

We survey and reflect on how learning (in the form of individual learning and/or culture) can augment evolutionary approaches to the joint optimization of the body and control of a robot. We focus on a class of applications where the goal is to evolve the body and brain of a single robot to optimize performance on a specified task. The review is grounded in a general framework for evolution which permits the interaction of artificial evolution acting on a population with individual and cultural learning mechanisms. We discuss examples of variations of the general scheme of ‘evolution plus learning’ from a broad range of robotic systems, and reflect on how the interaction of the two paradigms influences diversity, performance and rate of improvement. Finally, we suggest a number of avenues for future work as a result of the insights that arise from the review.

This article is part of a discussion meeting issue ‘The emergence of collective knowledge and cumulative culture in animals, humans and machines’.

Keywords: evolution, individual learning, cultural learning

1. Introduction

Given the abundance of intelligent life forms on Earth that have arisen through evolution, it is unsurprising that the basic concepts of evolution have appealed to computer scientists for as long as they have had access to digital technologies. The fundamental ideas of evolution (population, variation and selection) have been ‘borrowed’ to solve problems in diverse fields since as far back as the 1950s. For example, Turing described an evolution-inspired method to solve control problems [1]; in the 1960s, a group of engineers in Germany invented the first ‘Evolution Strategy’1 in order to optimize the design parameters of a physical hinged plate and later of a dual-phase gas nozzle [2], while Holland’s seminal book on genetic algorithms [3] was first published in 1975.

From the 1990s onwards there was a huge explosion in the development of improved evolutionary algorithms (EAs), in the range of domains to which they were applied, and in the theoretical understanding of their processes. EAs found success in solving many hard combinatorial optimization problems in domains such as logistics and scheduling, as well as in continuous domains such as function or parameter optimization. A natural follow-on from this was to apply artificial evolution to robotics and artificial life (Alife) in order to evolve new designs and controllers, giving rise to the now vibrant field of evolutionary robotics (ER). The field encompasses the use of EAs to design morphology [4], control [5] or a combination of the two, for both simulated and physical robots [6–9]. Furthermore, it has progressed to cover evolution with a wide range of materials: from soft, flexible materials that deform easily under pressure to provide adaptation [10], through modular approaches that evolve configurations of pre-existing components [11], to approaches that exploit the ability to rapidly print hard-plastic components in a diverse array of forms [12].

(a) Embodied artificial evolution

The move to applying EAs in robotics introduces an important conceptual shift with respect to previous work that applied EAs to combinatorial or function optimization. There is a fundamental difference between evaluating a genome that encodes a string of numbers representing (say) a solution to a function optimization problem and evaluating a genome in which the string of numbers represents the control parameters of a robot: in the latter case, the controller exists in a physical body that interacts with the environment.2 This notion is captured by the now popular term ‘embodied intelligence’, which describes the design and behaviour of physical objects situated in the real world, and which was first introduced by Brooks in 1991 [13]. Pfeifer and Bongard’s seminal text ‘How the body shapes the way we think’ [14] expanded on the idea that intelligent control is not only dependent on the brain, but is at the same time both constrained and enabled by the body. The text provides numerous examples of how intelligence might arise from the interplay of morphology, materials, interaction with the environment and control.

(b) Co-evolution of morphology and control

For the evolutionary roboticist, this clearly indicates that to properly exploit the power of evolution to discover appropriate robots for a given task, one must consider the co-evolution of body and control, rather than use EAs either to search for controllers for fixed (hand-designed) body-plans [15] or, vice versa, to optimize morphology only. This has the obvious advantage of allowing evolution to discover for itself the appropriate balance between morphological and neural complexity in response to the particular environment and task under consideration. The co-evolution of body and control has given rise to a thriving field more generally known as morphogenetic robotics [16]: broadly defined, this includes approaches to ‘the design of the body or body parts, including sensors and actuators, and/or, design of the neural network-based controller of robots’ [16, p. 146]. The term also covers research dubbed ‘evo-devo’, which considers robots that can change their morphology over the course of their lifetime [17]. In this article, we only discuss work that falls into the former category: artificial evolution produces a robot body whose controller can adapt over a lifetime owing to individual or cultural learning mechanisms, but whose body remains fixed during this period.

To achieve this in practice, evolution should act on a population whose individuals have a genome that encodes the information required to generate both morphology and control. However, this raises a challenge for artificial evolutionary methods in the design of the genome encoding. Assuming no prior knowledge of what type of robot is required for a task, a single population should contain a diverse set of genotypes that encode morphologically diverse body-plans. Here, an artificial evolutionary system diverges significantly from its biological counterpart: in the latter, evolution takes place within distinct populations consisting of a single species. By contrast, in the artificial case we essentially have multiple species interbreeding within a single population: that is, if a species is defined by a particular set of morphological features, then we can have (for example) a single population that contains wheeled land-based robots, swimming robots and flying robots. As a result, offspring might bear little resemblance to either parent. In such cases, an inherited neural controller (with inputs corresponding to sensory apparatus and outputs to actuator control) is likely to be incompatible with the new body [18,19]. The problem is exacerbated if the morphological space to which evolution is applied is rich.

One way of addressing the mis-match issue is to use a morphology-independent encoding for the controller, i.e. one in which the genome specifies a mechanism for generating the controller rather than directly specifying it. Such designs are referred to as generative encodings. However, even controllers inherited via a morphology-independent mechanism are likely to require fine-tuning to specialize them to the nuances of the new body. This motivates the requirement for learning to occur in addition to evolution, either to refine an inherited controller to its body or to create a new one if the inherited controller is unsuitable.

(c) If it evolves it needs to learn

Eiben et al. proposed an evolutionary framework for robotic evolution called the Triangle of Life (TOL) [20]. The three sides of the triangle represent three phases: (i) morphogenesis, in which the body of a robot is created3 from the specification encoded on a genome; (ii) learning, in which the new robot undergoes training to improve its inherited controller, perhaps following a syllabus of increasingly complex skills in a restricted or simplified environment; and (iii) life, in which the robot is released into the intended real environment and its fitness is measured as its ability to accomplish a task. Deviating from much of the previous work in ER, the TOL adds a learning phase to the evolutionary cycle in which individual learning occurs after every new robot is ‘born’. Hale et al. [8] describe a physical implementation of the TOL in which robots are three-dimensionally printed and then enter a simplified replica of the real environment, dubbed the ‘nursery’, where a robot refines its skills before being released into the real world. Furthermore, robots that fail to attain a defined level of learning are removed from the system and do not participate further in the evolutionary cycle.

Eiben and Hart further expand on the role of the learning phase in [21], arguing that it is in fact essential, particularly when evolving directly in hardware. In the extreme case where there is a complete mis-match between the number and type of sensors and actuators of the parents and those of their offspring, an inherited neural controller is unlikely to be usable, as the network will have an incorrect structure, i.e. an incorrect number of inputs and outputs. In this case, a new control neural network must be generated that matches the offspring body, and the weights of the network learned from scratch [9]. On the other hand, if a representation is used that permits inheritance of a controller with an appropriate structure for the child, the learning process can act as a form of adaptation over the course of an individual’s lifetime, adjusting the weights of the neural network to refine its behaviour. Having expended computational effort on learning, one can then consider whether a Lamarckian [22] system might be used. Although largely dismissed as a theory of biological evolution, Lamarck proposed that adaptations acquired or learned by an individual during its lifetime can be passed on to its offspring. In robotics, this is realized, for example, by writing a controller learned during a robot’s lifetime back to its genome, so that it can be inherited by future offspring. An alternative hypothesis is the Baldwin effect: the ability to learn during a lifetime can influence reproductive success, i.e. evolution selects for organisms that are more capable of learning. This effect can also be studied within an artificially evolving robot population.

(d) Cultural learning

An alternative form of learning that might be considered in the context of ER is cultural learning [23]. Cultural algorithms introduce the notion of dual inheritance, allowing information to be transmitted at both the individual level and the group level. At the individual level, information is transferred directly from parents to offspring. In addition, useful information can be extracted from the population at any point during evolution and stored in an external repository (commonly known in the literature as a belief space), which is expanded and updated over time. This can contain (for example) domain-specific knowledge, examples of successful solutions, or temporal knowledge regarding the history of the evolutionary process. Thus, the belief space stores and manipulates the knowledge acquired from the experience of individuals in the population space. Information can be transmitted from the belief space directly to offspring, hence enabling a dual form of inheritance. This has been shown to considerably speed up an evolutionary process [19,24].

An additional form of learning, social learning, can also be applied in the context of ER (e.g. [25,26]). In contrast to cultural learning, in which information discovered over evolutionary generations is stored in an external repository and can be accessed by future generations, social learning relies on direct robot–robot interactions within a single generation. That is, it assumes a swarm of robots, each of which can interact with others during a generation and can modify its behaviour according to information observed or collected via these interactions [27,28]. Thus, social learning exploits information captured in the current generation, while cultural learning exploits information gathered over multiple previous generations. Here we restrict our discussion to the subset of evolutionary robotics that does not rely on a swarm, i.e. that is focused on the emergence of a single robot appropriate for a specified task and does not rely on the existence of multiple robots at any moment in time.

(e) Overview of remainder of article

The remainder of the paper provides a survey of the field of ER with respect to the three axes identified above (i.e. evolution, individual learning and cultural learning). The review is restricted to evolutionary joint optimization of body and controllers in the context of a particular task with a defined objective function to be maximized. That is, we do not consider open-ended evolutionary systems in which there is no specific objective other than to survive and reproduce in an environment.

To provide a foundation on which to discuss learning, we first review evolution-only approaches to the joint optimization of body and control. We then consider the role of individual learning mechanisms in improving newly generated offspring, and the influence of the learning process on the main evolutionary process. Finally, we describe recent work that introduces a form of learning that can loosely be described as cultural learning: this enables the learning process to exploit a structured knowledge store that captures historical knowledge collected from individuals evaluated across multiple generations.

2. Frameworks for joint evolution of body and control

Before diving into an in-depth discussion of interacting evolutionary and learning processes, a brief primer on artificial evolution is provided. In a typical EA, we assume the existence of a population, which consists of a set of individuals, each of which is specified by an artificial genome. In the robotics applications considered, each individual typically contains information that can be interpreted to define the morphology and control information for a single robot. The EA begins by initializing a population at random. The fitness of each resulting robot is then calculated by observing its performance on some task of interest. The resulting fitness values are used by a selection mechanism that determines which robots are chosen to reproduce each generation. Reproduction operators combine the information stored in two individuals to create offspring. Typically, an entire generation is replaced with its offspring, after which the cycle just described repeats until a given termination condition is reached.
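The generational loop just described can be sketched in a few lines. This is a minimal illustration under assumptions: a genome is a plain list of real numbers, `evaluate` is a hypothetical stand-in for building a robot and measuring its performance on the task, and binary tournament selection, uniform crossover and Gaussian mutation are illustrative operator choices rather than ones prescribed by the text.

```python
import random
from typing import Callable, List

Genome = List[float]

def evolve(evaluate: Callable[[Genome], float],
           genome_length: int = 8,
           pop_size: int = 20,
           generations: int = 50,
           mutation_sd: float = 0.1,
           seed: int = 0) -> Genome:
    """Generational EA: random init, evaluate, select, recombine, mutate, replace."""
    rng = random.Random(seed)
    # Initialize the population at random.
    population = [[rng.uniform(-1, 1) for _ in range(genome_length)]
                  for _ in range(pop_size)]
    best = max(population, key=evaluate)
    for _ in range(generations):
        fitness = [evaluate(g) for g in population]

        def tournament() -> Genome:
            # Binary tournament: the fitter of two random individuals is selected.
            a, b = rng.sample(range(pop_size), 2)
            return population[a] if fitness[a] >= fitness[b] else population[b]

        offspring = []
        for _ in range(pop_size):
            p1, p2 = tournament(), tournament()
            # Uniform crossover combines the information stored in two parents...
            child = [x if rng.random() < 0.5 else y for x, y in zip(p1, p2)]
            # ...followed by Gaussian mutation of every gene.
            child = [x + rng.gauss(0, mutation_sd) for x in child]
            offspring.append(child)
        # The entire generation is replaced by its offspring.
        population = offspring
        # Track the best genome seen so far (for reporting only).
        best = max(population + [best], key=evaluate)
    return best
```

For example, `evolve(lambda g: -sum(x * x for x in g))` drives the genes towards zero; in a robotics setting the lambda would be replaced by a (much more expensive) simulated or physical evaluation.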

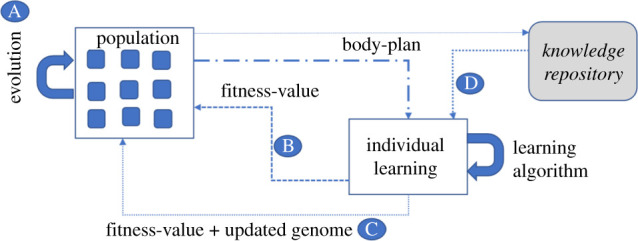

Although the generic EA just described provides the backbone of the large majority of applications of artificial evolution to problem solving, the robotics domain brings additional complexity when trying to jointly optimize both morphology and control. Hence it is common to augment the standard EA with a learning mechanism, usually applied to refine the robot’s control mechanism. Figure 1 presents a general framework that augments the generic evolutionary process with individual and/or cultural learning mechanisms, and frames the review that follows. The diagram depicts four possible architectures in which robot bodies and controllers can be jointly optimized: those that use only evolutionary mechanisms (i.e. at the population level, figure 1a), those that augment evolution at the population level with individual learning (figure 1b,c), and finally those that also add cultural learning (figure 1d). The general framework encapsulates a dual loop in which evolution and learning processes can interact in various ways, with the relative balance of computational effort between the two loops introducing an additional variable into the process. The four mechanisms are considered in turn below, followed by a general discussion.

Figure 1.

Four different processes for joint optimization of body and control are shown. A. Robots are designed via an evolutionary process that acts on a population of genomes that define body-plans and controllers. B. Each new robot generated via evolution also refines its controller via individual learning over its lifetime: learning either starts tabula rasa or starts from the inherited controller. The fitness evaluated on the task/environment is returned to the evolutionary process. C. As in B; however, the learned controller is written back to the genome, i.e. the process is Lamarckian. D. The learning algorithm is initialized from a knowledge repository that contains good controllers learned in previous generations, i.e. this represents a form of cultural evolution.

(a) Joint optimization via evolution only

We first discuss the process denoted in figure 1a, in which robots are designed only via an evolutionary process that acts on a population of genomes that define both body-plans and controllers, i.e. there is a single evolutionary loop with no learning mechanism. In this scenario, collective knowledge is gradually accumulated in the genomes of a population through reproductive operators (crossover and mutation) that mix the genetic material of robots over generations. This necessitates that both the morphology and the controller of offspring are inherited from the parent robots. As noted above, this constrains the representation of the controller on the genome: it must be capable of producing a controller that matches the new body, in terms of taking input from its sensors and sending output to its actuators.

The first evolutionary approach to joint optimization can be traced back to the pioneering work of Karl Sims [29] in 1994, who proposed a method to evolve autonomous three-dimensional virtual creatures that exhibit diverse competitive strategies in physically simulated worlds. A directed-graph representation is used to describe morphology and control, with control graphs nested inside each morphological node. Nearly 30 years later, similar representations are still in use: for example, Veenstra et al. [30] evolve a blueprint that specifies both the body and controller of a modular robot, i.e. one that is built from a library of ‘modules’ that can connect together at multiple sites on each module. Their blueprint takes the form of a single directed tree instead of a graph, but as in Sims’s work it defines both body and control, thereby capturing their tightly coupled nature. The tree can be encoded on the genome directly; however, it is also possible instead to encode a mechanism for generating the tree, e.g. a parametrized L-system [31], in which case a set of production rules specifying how a tree can be built is evolved. Working directly in hardware (i.e. bypassing simulation), Brodbeck et al. [7] use a ‘mother robot’ to automatically assemble offspring from a set of cubic active and passive modules. Their robots are specified by a genome that contains n genes, one per module required. Each gene contains information about the module type to be used (active or passive), construction parameters and, finally, two parameters that specify the motor control of the module (the phase and amplitude of a sinusoidal controller).
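To make a per-module encoding of this kind concrete, the sketch below assumes a hypothetical `ModuleGene` holding a module type, two placeholder construction parameters and the two motor parameters (amplitude and phase) mentioned in the text; every active module's joint is driven by a sinusoid. The field names and the shared 1 Hz frequency are illustrative assumptions, not details taken from Brodbeck et al.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class ModuleGene:
    """One gene per module (hypothetical field names)."""
    active: bool        # module type: active (motorized) or passive
    attach_face: int    # placeholder construction parameter
    rotation: int       # placeholder construction parameter
    amplitude: float    # motor control: sinusoid amplitude
    phase: float        # motor control: sinusoid phase (radians)

def motor_commands(genome: List[ModuleGene], t: float,
                   frequency_hz: float = 1.0) -> List[Tuple[int, float]]:
    """Return (module index, target angle) for every active module at time t."""
    commands = []
    for i, gene in enumerate(genome):
        if gene.active:
            angle = gene.amplitude * math.sin(2 * math.pi * frequency_hz * t + gene.phase)
            commands.append((i, angle))
    return commands

genome = [ModuleGene(True, 0, 0, 0.5, 0.0),
          ModuleGene(False, 2, 1, 0.0, 0.0),
          ModuleGene(True, 1, 0, 0.3, math.pi / 2)]
```

Because each module's body parameters and motor parameters sit in the same gene in this sketch, any operator that copies a gene to a child carries the module and its controller together, which is one simple way of keeping body and control coupled.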

While the approaches above use the same encoding to specify both morphology and control, other authors separate the specification of each component into two separate encodings, represented on the same genome. Although this separation in some sense undermines the prevailing view that body and control are tightly coupled, it isolates each component and therefore enables tailored changes to be made to either. For example, this approach is adopted in a line of work by Cheney et al. [32,33], who consider the evolution of soft robots composed of regular cubic voxels that can be realized with either active material (analogous to muscle) or passive material (analogous to tissue). The genome specifies two neural networks: one provides the outputs required to define the morphology of a robot, while the second describes the controller, producing outputs that determine the actuation of each voxel. Evolution acts on each network independently. However, their experiments reveal that joint optimization can lead to premature convergence of the morphology, and therefore restrict ultimate performance. To address this, they propose a mechanism known as ‘morphological innovation protection’ [32]: the goal is to allow time for a controller to adapt to a new body via evolutionary mutations, by temporarily reducing selection pressure on individuals whose new morphological features were introduced via mutation.
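One way such protection could be implemented is sketched below; it is an illustration under assumptions and may differ from Cheney et al.'s implementation. Each individual is assumed to carry a 'morphological age' (generations since its body last changed) that is reset by a body mutation, and survivors are chosen front by front from the Pareto ordering of maximizing fitness and minimizing morphological age, so a newly mutated body is not immediately eliminated by older, better-tuned ones. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class Individual:
    name: str
    fitness: float   # task performance, higher is better
    morph_age: int   # generations since the body last changed, lower is newer

def update_age(ind: Individual, body_mutated: bool) -> None:
    """Reset the morphological age after a body mutation, otherwise let it grow."""
    ind.morph_age = 0 if body_mutated else ind.morph_age + 1

def dominates(a: Individual, b: Individual) -> bool:
    """a dominates b: no worse on both objectives and strictly better on at least one."""
    no_worse = a.fitness >= b.fitness and a.morph_age <= b.morph_age
    better = a.fitness > b.fitness or a.morph_age < b.morph_age
    return no_worse and better

def pareto_front(pop: List[Individual]) -> List[Individual]:
    return [a for a in pop if not any(dominates(b, a) for b in pop if b is not a)]

def select_survivors(pop: List[Individual], n: int) -> List[Individual]:
    """Fill the survivor set front by front, preferring higher fitness within a front."""
    remaining, survivors = list(pop), []
    while remaining and len(survivors) < n:
        front = sorted(pareto_front(remaining), key=lambda ind: -ind.fitness)
        survivors.extend(front[: n - len(survivors)])
        front_ids = {id(ind) for ind in front}
        remaining = [ind for ind in remaining if id(ind) not in front_ids]
    return survivors

pop = [Individual("old_good", 10.0, 20),
       Individual("old_bad", 5.0, 20),
       Individual("new_body", 3.0, 0)]
```

With two survivor slots, truncation on fitness alone would keep `old_good` and `old_bad`; with the age objective, `new_body` survives alongside `old_good`, giving its controller time to adapt.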

While all of the methods above are shown to be capable of evolving robots that exhibit high performance with respect to the tasks they are evolved for, it is clear that applying a learning mechanism over the lifetime of an individual has the potential to improve an inherited controller. Methods to implement individual learning are described in the next section.

(b) Joint optimization with individual learning

In these schemes, each time a new robot is generated via evolution it is given the opportunity to refine its controller by running an individual learning algorithm: this enables it either to improve its inherited controller over some fixed period of time or, in some cases, to learn a controller from scratch. In both cases, the fitness achieved through learning is associated with the robot at the end of the learning process and used by the evolutionary process to drive selection (figure 1b). Furthermore, depending on the representation used to encode the controller, the learned controller can be written back to the genome before further evolution takes place, i.e. a Lamarckian process can be followed (figure 1c). Examples of these approaches are now discussed.
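The difference between figure 1b and figure 1c comes down to what is written back after learning. The sketch below assumes a genome that directly stores controller weights, learning that starts from the inherited controller, and a hypothetical `learn` routine (a simple (1+1) hill climber) standing in for whichever learner is actually used. In both schemes the learned fitness drives selection; only the Lamarckian variant overwrites the inherited weights.

```python
import random
from dataclasses import dataclass
from typing import Callable, List, Tuple

Weights = List[float]

@dataclass
class Robot:
    body: str         # placeholder for a decoded morphology
    weights: Weights  # controller weights stored on the genome

def learn(weights: Weights, task: Callable[[Weights], float],
          budget: int, rng: random.Random) -> Tuple[Weights, float]:
    """Hypothetical learner: (1+1) hill climbing on the controller weights."""
    best, best_fit = list(weights), task(weights)
    for _ in range(budget):
        candidate = [w + rng.gauss(0, 0.1) for w in best]
        fit = task(candidate)
        if fit > best_fit:
            best, best_fit = candidate, fit
    return best, best_fit

def evaluate(robot: Robot, task: Callable[[Weights], float], budget: int,
             lamarckian: bool, rng: random.Random) -> float:
    """Run the learning phase and return the learned fitness used for selection."""
    learned, fitness = learn(robot.weights, task, budget, rng)
    if lamarckian:
        robot.weights = learned  # figure 1c: write the learned controller back
    # figure 1b: the genome is left untouched; only the fitness is returned
    return fitness
```

Given the same random seed, both variants return the same fitness; they differ only in what the robot can pass on to its offspring.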

(i) Individual learning applied to inherited controllers

For representations that genetically encode a mechanism to generate a controller with a suitable topology, the controller that a child robot inherits can be refined during a ‘learning period’ of fixed duration. For example, Jelisavcic et al. [22] tackle the problem of co-optimization using a modular robotic framework in which the body-plan consists of an arrangement of pre-designed modules and control is realized via a central pattern generator.4 They use an EA as the learner: each robot genome encodes a population of controller-generating algorithms, and each parent passes a randomly chosen 50% of these algorithms to its child. An EA is then applied in the learning phase to evolve the population inherited by the child, and the best fitness found is assigned to the child. This process is Lamarckian, as the child retains the evolved population and is able to pass it on to its own descendants. Their results show, perhaps unsurprisingly, that the Lamarckian approach considerably reduces the time required to reach a given performance level; hence it is particularly important when the available learning budget is small. Additionally, they observe that applying learning to offspring of two morphologically similar parents results in a greater increase in fitness than applying it to offspring of dissimilar parents.
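The inheritance step described above can be sketched as follows. Each genome is assumed to hold a list of controller-generating 'algorithms', represented here by opaque parameter vectors; the child receives a random half from each parent, a toy steady-state EA (an assumption, not the authors' learner) refines that inherited population in place, and because the refined population stays on the child's genome it is what the child later passes on. All names are hypothetical.

```python
import random
from typing import Callable, List

Generator = List[float]  # opaque stand-in for one controller-generating algorithm

def inherit(parent_a: List[Generator], parent_b: List[Generator],
            rng: random.Random) -> List[Generator]:
    """The child receives a randomly chosen 50% of each parent's generators (copied)."""
    half_a = rng.sample(parent_a, len(parent_a) // 2)
    half_b = rng.sample(parent_b, len(parent_b) // 2)
    return [list(g) for g in half_a + half_b]

def learn_inherited_population(population: List[Generator],
                               score: Callable[[Generator], float],
                               generations: int, rng: random.Random) -> float:
    """Toy steady-state EA on the inherited population, modified in place (Lamarckian)."""
    for _ in range(generations):
        parent = max(rng.sample(population, 2), key=score)
        child = [x + rng.gauss(0, 0.1) for x in parent]
        worst = min(range(len(population)), key=lambda i: score(population[i]))
        if score(child) > score(population[worst]):
            population[worst] = child
    # The best fitness found is assigned to the child robot.
    return max(score(g) for g in population)
```

Since the population is mutated in place, passing the same list to the child's own offspring via `inherit` is what makes the scheme Lamarckian in this sketch.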

Miras et al. [35] conduct a similar study, but in their work only a single controller-generator is inherited by a child. A new evolutionary algorithm is then launched to optimize the weights of the generated controller. In this case, the process is not Lamarckian, as the EA is applied to the network generated by the controller-generating algorithm rather than to the genetically encoded algorithm itself. Running experiments with and without learning, they find that robots created with learning have significantly different morphologies from those created without. They postulate that, in contrast to the common view that ‘the body shapes the brain’ [14], they in fact observe ‘the brain shaping the body’. Furthermore, they observe that the learning delta (the difference between the fitness of the inherited controller and that of the learned controller) increases over time, suggesting that adding learning directs the evolving population towards morphologies that are more capable of learning.
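The learning delta is straightforward to compute once both fitness values are logged for each robot. The sketch assumes a hypothetical per-generation record of (inherited fitness, learned fitness) pairs and reports the mean delta per generation; a rising series corresponds to the trend described above. The data values are made up for illustration.

```python
from statistics import mean
from typing import List, Tuple

def learning_delta(inherited: float, learned: float) -> float:
    """Fitness gained by learning, relative to the inherited controller."""
    return learned - inherited

def mean_delta_per_generation(history: List[List[Tuple[float, float]]]) -> List[float]:
    """history[g] holds (inherited_fitness, learned_fitness) pairs for generation g."""
    return [mean(learning_delta(inh, lrn) for inh, lrn in generation)
            for generation in history]

# Illustrative (made-up) records for three generations of two robots each.
history = [[(1.0, 1.2), (0.8, 1.0)],
           [(1.1, 1.6), (0.9, 1.3)],
           [(1.2, 2.0), (1.0, 1.7)]]
```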

(ii) Individual learning from scratch

The methods just described use indirect encodings of the controller to address the mis-match between the structure of an inherited controller and the potentially modified morphology of a child robot. Although this elegantly solves the mis-match issue, there are some downsides: with an indirect encoding, the fitness landscape can be discontinuous, as small changes in the genotype can lead to major changes in the phenotype (and, conversely, a major genotypic change can lead to a small phenotypic change). Several authors have also noted that evolutionary progress using an indirect encoding is slower than with a direct one (e.g. [36]), which is of particular concern if one hopes to conduct fitness evaluations in hardware. To address these concerns, an alternative approach to individual learning is simply to create a neural controller with a suitable structure (i.e. appropriate inputs and outputs) once the morphology of a child has been decoded from the genome, and then learn its weights from scratch using an individual learning algorithm that operates directly on a vector that explicitly represents the controller weights. This (i) removes the need to design a morphology-agnostic controller representation, and (ii) allows many types of learning algorithm to be applied to learn the controller (for example, reinforcement learning, evolution or stochastic gradient descent).
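A minimal sketch of this approach, assuming a single-layer tanh network whose input count equals the number of sensors and whose output count equals the number of actuators of the decoded body. The learner only ever sees a flat weight vector whose length is fixed by the morphology, so any vector-based optimizer could be plugged in. The `Morphology` fields and the network shape are illustrative assumptions.

```python
import numpy as np
from dataclasses import dataclass

@dataclass
class Morphology:
    n_sensors: int
    n_actuators: int

def n_weights(body: Morphology) -> int:
    # One weight per sensor/actuator pair plus one bias per actuator.
    return (body.n_sensors + 1) * body.n_actuators

def act(body: Morphology, weights: np.ndarray, sensors: np.ndarray) -> np.ndarray:
    """Map sensor readings to actuator commands in [-1, 1]."""
    w = weights.reshape(body.n_actuators, body.n_sensors + 1)
    x = np.append(sensors, 1.0)  # append a constant bias input
    return np.tanh(w @ x)

def random_controller(body: Morphology, rng: np.random.Generator) -> np.ndarray:
    """Tabula-rasa starting point for the learner: a random flat weight vector."""
    return rng.normal(0.0, 0.5, size=n_weights(body))
```

A child with 3 sensors and 2 actuators thus gets an 8-dimensional weight vector regardless of what its parents looked like, which is what removes the mis-match problem in this scheme.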

For example, Gupta et al. [9] use a reinforcement learning (RL) algorithm to optimize the controllers of robots whose morphologies are evolved using an EA. Each time a new morphology is produced via evolution, the RL algorithm is applied from scratch to optimize a policy to control the robot. Interestingly, they show that the coupled dynamics of evolution over generations and learning over an individual lifetime leads to evidence of a morphological Baldwin effect long postulated by biologists [37], demonstrated experimentally by a rapid reduction in the learning time required to achieve a pre-defined level of fitness over multiple generations. Hence, evolution selects for morphologies that learn faster, enabling behaviours learned by early ancestors to be expressed early in the lifetime of their descendants. They go on to suggest a mechanistic basis for both the Baldwin effect and the emergence of morphological intelligence, based on evidence that the coupled process tends to produce morphologies that are more physically stable and energy efficient, and can therefore facilitate learning and control.
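The Baldwinian signature described here can be measured from learning curves alone. Assuming each robot's learning run is logged as a list of fitness values, one per learning step (a hypothetical logging format), the sketch below records the first step at which a fixed threshold is reached and averages it per generation; a falling series across generations is the kind of evidence described above. The example curves are made up.

```python
from statistics import mean
from typing import List, Optional

def steps_to_threshold(curve: List[float], threshold: float) -> Optional[int]:
    """Index of the first learning step whose fitness reaches the threshold, or None."""
    for step, fitness in enumerate(curve):
        if fitness >= threshold:
            return step
    return None

def mean_steps_per_generation(curves_by_gen: List[List[List[float]]],
                              threshold: float) -> List[Optional[float]]:
    """Average steps-to-threshold per generation, ignoring robots that never reach it."""
    result: List[Optional[float]] = []
    for curves in curves_by_gen:
        hits = [s for s in (steps_to_threshold(c, threshold) for c in curves)
                if s is not None]
        result.append(mean(hits) if hits else None)
    return result

# Illustrative (made-up) learning curves for two generations of two robots each.
curves_by_gen = [[[0.1, 0.3, 0.5, 0.9], [0.2, 0.4, 0.8]],
                 [[0.5, 0.9], [0.85]]]
```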

Le Goff et al. are motivated by the goal of applying artificial evolution and learning to physical populations of robots [4,8,19]. Because experiments in hardware can be time-consuming, they seek a learning algorithm that is as efficient as possible. They again use an EA as the learner (applied from scratch to a randomly initialized population), but propose two modifications to increase efficiency [38]: (i) the EA is initialized with a very small population and, whenever evolution stagnates, is restarted with an increased population size; and (ii) a novelty mechanism [39] is added to maintain diversity. A comparison with another type of learner, a Bayesian optimizer (BO), shows that although both methods can achieve similar performance, the computational running time of their method is significantly shorter than that of BO, making it preferable in the context of physical robotics.
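The restart idea in (i) can be sketched as below. This is an illustration under assumptions, not Le Goff et al.'s algorithm: a plain Gaussian-mutation loop stands in for whatever evolution strategy they use, stagnation is declared when the best fitness of the current run has not improved for `patience` generations, the population size is multiplied by `growth` on each restart, and the novelty term in (ii) is omitted for brevity. All parameter names and defaults are hypothetical.

```python
import random
from typing import Callable, List, Tuple

Vector = List[float]

def restart_es(f: Callable[[Vector], float], dim: int, budget: int,
               init_pop: int = 4, patience: int = 5, growth: int = 2,
               sigma: float = 0.3, seed: int = 0) -> Tuple[Vector, float, int]:
    """Maximize f, restarting with a larger population whenever progress stalls."""
    rng = random.Random(seed)
    best: Vector = []
    best_fit = float("-inf")
    pop_size, evals, restarts = init_pop, 0, 0
    while evals < budget:
        # (Re)start: a fresh random population of the current size.
        pop = [[rng.uniform(-1, 1) for _ in range(dim)] for _ in range(pop_size)]
        run_best, stall = float("-inf"), 0
        while evals < budget and stall < patience:
            scored = sorted(((f(x), x) for x in pop), key=lambda t: t[0], reverse=True)
            evals += len(pop)
            top_fit, top_x = scored[0]
            if top_fit > run_best:
                run_best, stall = top_fit, 0
            else:
                stall += 1
            if top_fit > best_fit:
                best_fit, best = top_fit, top_x
            # Keep the top half as parents and refill the population with Gaussian mutants.
            parents = [x for _, x in scored[: max(1, pop_size // 2)]]
            pop = [[v + rng.gauss(0, sigma) for v in rng.choice(parents)]
                   for _ in range(pop_size)]
        if evals < budget:
            # Stagnation: grow the population and start again.
            pop_size *= growth
            restarts += 1
    return best, best_fit, restarts
```

Starting small keeps early evaluations cheap, which matters when each evaluation is a physical trial; the population only grows when the small run has demonstrably stalled.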

(c) Joint optimization with individual and cultural learning

While the previous section focused on individual learning mechanisms, in which an individual robot improves its controller based only on information gathered during its own lifetime, we now turn our attention to mechanisms that enable the individual learning phase described in the TOL [20] to be influenced by cultural information.

Cultural algorithms were first introduced into the field of artificial evolution in the 1990s [23,40]. They are based on the notion that in advanced societies, culture accumulates in the form of knowledge repositories that capture information acquired by multiple individuals over years of experience. If a new individual has access to such a repository, it is able to learn things even when it has not experienced them directly. Cultural algorithms are prevalent in the field of swarm robotics [27,28], in which robots learn to adapt to complex environments by learning from each other. However, here we restrict the discussion to methods in which the individual learning process of a single robot, as depicted in the TOL, can be influenced by external repositories of information built up over multiple generations. The repository effectively stores the results of previous individual learning trials, which can be drawn upon by future generations.

Le Goff et al. [18,19] describe a method for bootstrapping the individual learning phase by drawing on such a repository, which can be used when learning is by default tabula rasa owing to the difficulty of inheriting an appropriate controller (e.g. as in [9]). They categorize robots by ‘type’ according to a coarse-grained description of their morphology. Specifically, type is defined by a tuple (sensors, wheels, joints) that denotes the number of each component present in a newly produced robot. An external repository stores the single best controller found through individual learning for each potential type. When a new robot is created via the evolutionary cycle, the learning phase is initiated by first selecting from the repository the controller of a robot with matching type (assuming one exists). A learning algorithm then attempts to improve this controller in the context of the new body. The repository is updated whenever an improved controller is found for the type. The authors demonstrate that the use of the repository results in a significant increase in performance over robots that learn from scratch [18,19]. In addition, they find that using the archive leads to a rapid reduction over generations in the number of evaluations required to reach a performance threshold, owing to the ability to ‘start’ from a better solution selected from the archive. However, they also observe a premature convergence of morphology similar to that reported by Cheney et al. [33]. We return to this aspect in the discussion section.
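A minimal sketch of such a repository, assuming a robot's type is the (sensors, wheels, joints) count tuple from the text and that a controller is a flat list of weights. The `learn` callable is a hypothetical stand-in for the individual learner, the weight count used for a fresh controller is an illustrative assumption, and only the single best controller per type is kept.

```python
import random
from typing import Callable, Dict, List, Optional, Tuple

RobotType = Tuple[int, int, int]  # (sensors, wheels, joints)
Controller = List[float]

class ControllerRepository:
    """Keeps the single best learned controller, with its fitness, for each robot type."""

    def __init__(self) -> None:
        self._store: Dict[RobotType, Tuple[Controller, float]] = {}

    def seed_for(self, robot_type: RobotType) -> Optional[Controller]:
        """Return a copy of the stored controller for this type, or None."""
        entry = self._store.get(robot_type)
        return list(entry[0]) if entry is not None else None

    def update(self, robot_type: RobotType, controller: Controller, fitness: float) -> None:
        """Replace the stored controller only if the new one is better."""
        current = self._store.get(robot_type)
        if current is None or fitness > current[1]:
            self._store[robot_type] = (list(controller), fitness)

def learning_phase(robot_type: RobotType, repo: ControllerRepository,
                   learn: Callable[[Controller], Tuple[Controller, float]],
                   rng: random.Random) -> float:
    """Bootstrap learning from the repository when a matching type exists."""
    start = repo.seed_for(robot_type)
    if start is None:
        # Hypothetical weight count: one weight per sensor per actuator (wheels + joints).
        sensors, wheels, joints = robot_type
        start = [rng.gauss(0, 1) for _ in range(sensors * (wheels + joints))]
    controller, fitness = learn(start)
    repo.update(robot_type, controller, fitness)
    return fitness
```

The second robot of a given type starts from the first one's learned controller rather than from random weights, which is the source of the speed-up described above.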

Although not strictly speaking an evolutionary approach, the work of Liao et al. [41] is also worth mentioning. They employ a dual optimization process to find the best morphology and controller for a walking micro-robot, optimizing morphology and control in separate loops and making use of information learned across generations. Here, BO is used to learn a controller for each new morphology, taking advantage of previous information to initialize the optimizer, hence aligning with scheme D in figure 1. They use a form of BO known as contextual Bayesian optimization (cBO) [42], which optimizes a Gaussian process (GP) model to define the controller policy. By encoding the morphologies as contexts, cBO takes advantage of the similarities between different morphologies and can therefore generalize faster to good policies for unseen designs. In essence, rather than learning a new GP model for each new robot evaluated, the models are shared, such that learning for robot b can start from a previously learned model for robot a if they have similar morphology. Conceptually, therefore, this is very similar to the approach of Le Goff et al. just described; in this case, however, the repository consists of GP models rather than controller weights.
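The key idea of sharing one surrogate across bodies, with the morphology as an extra input, can be sketched with a plain GP. This follows a common formulation of contextual BO rather than Liao et al.'s exact method: a zero-mean GP with a squared-exponential kernel models task reward over concatenated (morphology context, controller parameters) inputs, and the next parameters for a given morphology are chosen by maximizing an upper confidence bound over random candidates. The kernel length scale, `beta` and the candidate count are illustrative assumptions.

```python
import numpy as np

def rbf(a: np.ndarray, b: np.ndarray, length_scale: float = 0.5) -> np.ndarray:
    """Squared-exponential kernel between the rows of a and b."""
    d2 = ((a[:, None, :] - b[None, :, :]) ** 2).sum(-1)
    return np.exp(-0.5 * d2 / length_scale ** 2)

def gp_posterior(X: np.ndarray, y: np.ndarray, Xq: np.ndarray, noise: float = 1e-4):
    """Zero-mean GP posterior mean and standard deviation at the query points Xq."""
    K = rbf(X, X) + noise * np.eye(len(X))
    Ks = rbf(Xq, X)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))  # alpha = K^{-1} y
    mean = Ks @ alpha
    v = np.linalg.solve(L, Ks.T)
    var = np.clip(1.0 - (v ** 2).sum(0), 1e-12, None)  # prior variance of the kernel is 1
    return mean, np.sqrt(var)

def propose(X: np.ndarray, y: np.ndarray, context: np.ndarray, n_params: int,
            rng: np.random.Generator, beta: float = 2.0,
            n_candidates: int = 500) -> np.ndarray:
    """Choose controller parameters for a given morphology context via UCB."""
    cand = rng.uniform(-1, 1, size=(n_candidates, n_params))
    Xq = np.hstack([np.tile(context, (n_candidates, 1)), cand])
    mu, sd = gp_posterior(X, y, Xq)
    return cand[np.argmax(mu + beta * sd)]
```

Because the context is an input to the kernel, observations made on one morphology shift the predicted reward for a similar one, which is the sense in which learning for robot b can start from what was learned for robot a.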

Finally, we remark that there is an increasing body of work that uses novelty search [43] to jointly optimize body and control. Although this is rarely cast as cultural evolution in the literature, it in fact makes use of an external archive of information that is updated over the generations based on past experience and is used to guide evolution. Hence, it might be considered a form of cultural evolution in the sense described above: the evolution of individuals is guided by the experiences of other robots, and individuals have access to information that does not exist in the current population.

The notion of novelty search was first introduced by Lehman & Stanley [43]: the idea is to replace objective-driven evolution, in which selection is driven by a performance (fitness)-related quantity, with a selection mechanism that is driven instead by novelty. Novelty is defined with respect to a user-defined descriptor capturing information about either the morphology or the behaviour of the robot. An external archive, which expands over time, stores examples of descriptors discovered over the generations. The novelty score of an individual in the current population is obtained by calculating the average distance between its descriptor and those of its k nearest neighbours in the superset formed by the current population and the archive; the resulting novelty score then influences the probability of selection. At the end of a generation, the archive is updated with (for example) a small sample of the new descriptors derived from the offspring. As in the work of Le Goff et al. described above, the information stored in the archive (the descriptor) typically describes the morphology of the robot; for example, it can consist of a vector containing the number of sensors, actuators, etc., derived from the genetic description of the robot [44]. However, rather than bootstrapping learning as in [19], in novelty search the external archive influences the direction of the evolutionary process by driving the population towards areas of the search space that have not yet been explored. There is increasing evidence of the success of this approach in producing robots whose morphology and controllers exhibit high performance, particularly in the soft robotics community [45–48].
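The novelty computation and archive update described above can be sketched in a few lines. This is a minimal illustration under stated assumptions: descriptors are numeric tuples (for instance morphological counts such as (sensors, actuators)), distance is Euclidean, an individual is excluded from its own neighbourhood, and a size-2 tournament on novelty is used as one possible way for the score to "influence the probability of selection". All function names are hypothetical.

```python
import math
import random
from typing import List, Sequence

Descriptor = Sequence[float]


def novelty(d: Descriptor, others: List[Descriptor], k: int) -> float:
    """Mean Euclidean distance from d to its k nearest neighbours in `others`."""
    nearest = sorted(math.dist(d, o) for o in others)[:k]
    return sum(nearest) / len(nearest) if nearest else 0.0


def novelty_scores(population: List[Descriptor], archive: List[Descriptor],
                   k: int = 3) -> List[float]:
    """Score each individual against the rest of the population plus the archive."""
    scores = []
    for i, d in enumerate(population):
        others = [o for j, o in enumerate(population) if j != i] + list(archive)
        scores.append(novelty(d, others, k))
    return scores


def tournament(population: List[Descriptor], scores: List[float],
               rng: random.Random, size: int = 2) -> int:
    """Return the index of the most novel of `size` randomly drawn individuals."""
    picks = rng.sample(range(len(population)), size)
    return max(picks, key=lambda i: scores[i])


def update_archive(archive: List[Descriptor], offspring: List[Descriptor],
                   n_add: int, rng: random.Random) -> None:
    """Append a small random sample of the offspring descriptors to the archive."""
    archive.extend(rng.sample(list(offspring), min(n_add, len(offspring))))
```

Note that an individual which is far from the current population can still score low if the archive already holds a nearby descriptor; this is how the accumulated archive pushes the search away from regions explored in earlier generations.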

3. Discussion and future directions

We have described a number of ways in which artificial evolution can be augmented with learning mechanisms to jointly evolve the morphology and controller of robots. These processes are described in the context of a general framework that defines how individual or cultural learning interacts with the evolutionary process. Within this framework, collective knowledge can emerge and improve the performance of a robot in three different ways: (i) knowledge accumulates purely through evolutionary processes acting on a population, and is transmitted over generations via the reproductive processes of crossover and mutation; (ii) evolution is augmented with an individual learning process in which knowledge is learned over an individual lifetime, and optionally can be transmitted back to the genome; and (iii) evolution interacts with a cultural learning mechanism which provides direct access to collective knowledge stored in a repository that is external to the population or learner. The literature survey indicates that all three methods have a role to play in accumulating and transferring knowledge in an artificial evolutionary system for designing robots.

The studies surveyed show that the use of learned knowledge, whether individual or cultural, has a positive impact on the evolutionary process [19,35]. In the case of individual learning, Gupta et al. [9] observed a morphological Baldwin effect that reduces the number of generations needed to reach a defined threshold, while Miras et al. [35] provide some evidence that ‘the brain can shape the body’, showing that adding learning results in different types of morphologies from those obtained without learning. A variety of learning methods have been deployed (EA [35], RL [9], BO [41]), suggesting that including a learning loop is necessary but that the mechanism by which the learning occurs is less important. We also considered whether learned information could be propagated through a population using Lamarckian evolution, in which the genome is directly modified to reflect the results of learning. An observed result of this interaction between learning and evolution is a noticeable change in morphology, with a tendency to produce larger and more symmetrical structures. However, Jelisavcic et al. [22] also note that the benefits to the offspring of using a Lamarckian system are greater if the parents are more similar.
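The distinction between Baldwinian and Lamarckian inheritance discussed above comes down to which vector is passed to offspring, as the toy loop below illustrates. It is a minimal sketch, not the setup of [9], [22] or [35]: the genome is an abstract parameter vector with no body modelled, lifetime learning is assumed to be a (1+1) hill climber, and reproduction uses truncation selection with Gaussian mutation. All names are hypothetical.

```python
import random
from typing import Callable, List

Vector = List[float]


def learn(params: Vector, evaluate: Callable[[Vector], float], steps: int,
          rng: random.Random, sigma: float = 0.1):
    """Lifetime learning stand-in: (1+1) hill climbing from the inherited parameters."""
    best, best_f = list(params), evaluate(params)
    for _ in range(steps):
        candidate = [x + rng.gauss(0.0, sigma) for x in best]
        f = evaluate(candidate)
        if f > best_f:
            best, best_f = candidate, f
    return best, best_f


def evolve(evaluate: Callable[[Vector], float], dim: int, generations: int,
           pop_size: int, learn_steps: int, lamarckian: bool, seed: int = 0) -> float:
    """Truncation-selection EA with lifetime learning; returns the best learned fitness seen."""
    rng = random.Random(seed)
    pop = [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(pop_size)]
    best_f = float("-inf")
    for _ in range(generations):
        scored = []
        for genome in pop:
            learned, f = learn(genome, evaluate, learn_steps, rng)
            # Both schemes select on the fitness reached after learning.
            # Lamarckian: the learned parameters are written back into the genome.
            # Baldwinian: offspring inherit the genome the individual was born with.
            scored.append((learned if lamarckian else genome, f))
            best_f = max(best_f, f)
        scored.sort(key=lambda gf: gf[1], reverse=True)
        parents = [g for g, _ in scored[: max(1, pop_size // 2)]]
        pop = [[x + rng.gauss(0.0, 0.05) for x in rng.choice(parents)]
               for _ in range(pop_size)]
    return best_f
```

In the Baldwinian mode learning still shapes evolution, because selection acts on post-learning fitness, but the learned weights themselves are discarded at the end of each lifetime.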

(a) Cultural learning

From the perspective of cultural learning, we have described two approaches that make use of an external archive of knowledge that accumulates over generations from multiple individuals. This knowledge can then be exploited to guide the evolutionary process. The approaches described do not rely on social interactions between robots; instead, they require knowledge learned by an individual to be extracted and stored in a repository to which new individuals have access. A key aspect of cultural learning is determining what information to store in the external repository (belief space): in general, it should store abstracted representations of individuals from an evolving population. In the context of evolutionary robotics, this information would be expected to capture either the morphology or the behaviour of the robot.

Reynolds & Peng [49] define five possible types of knowledge that can be captured in a belief space: normative (describing promising variable ranges that provide guidelines for adjustment); situational (exemplary cases useful for specific situations); domain (useful information regarding the problem domain); temporal (important events in the search); and topographical (reasoning about the search landscape). The work described above makes use of situational knowledge, storing exemplars that can bootstrap learning [19] or diversify evolution [45–48]. Clearly, there is scope to extend the information stored in these knowledge archives with other types. Another relevant aspect to consider is the granularity of the knowledge stored. For example, Le Goff et al. [19] deliberately use a high-level abstraction of robot ‘type’ to store knowledge (see §2(c)). This makes finding a matching type more straightforward and therefore increases the chance of being able to bootstrap from the repository. However, robots of the same type may in fact have very different skeletons (and therefore sizes) and different layouts of sensors and actuators, and hence even an inherited controller may not perform well. Finer-grained definitions of type that accounted for these differences could be introduced, but they would reduce the probability of a match (one way to mitigate this would be to store several controllers per type in conjunction with an informed heuristic for selecting among them). Again, there are clear opportunities for future work to better understand how cultural archives can best be used to influence the joint optimization of morphology and control via evolutionary methods.
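The mitigation suggested above (several controllers per coarse type, plus an informed selection heuristic) could take the following form. This is a hypothetical sketch, not a method from [19]: it assumes each stored controller is tagged with a finer-grained morphological descriptor of fixed length within a type (for example normalized limb lengths), keeps the `capacity` fittest entries per type, and selects the entry whose descriptor is nearest to the new robot's, breaking ties by fitness.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence, Tuple

# Coarse type as in the text: (number of sensors, wheels, joints).
CoarseType = Tuple[int, int, int]


@dataclass
class Entry:
    fine: Tuple[float, ...]  # hypothetical fine-grained morphology descriptor
    controller: List[float]
    fitness: float


@dataclass
class MultiControllerArchive:
    """Situational knowledge: up to `capacity` exemplar controllers per coarse type."""
    capacity: int = 3
    slots: Dict[CoarseType, List[Entry]] = field(default_factory=dict)

    def add(self, ctype: CoarseType, fine: Sequence[float],
            controller: List[float], fitness: float) -> None:
        entries = self.slots.setdefault(ctype, [])
        entries.append(Entry(tuple(fine), list(controller), fitness))
        entries.sort(key=lambda e: e.fitness, reverse=True)
        del entries[self.capacity:]  # evict the least fit entries

    def select(self, ctype: CoarseType, fine: Sequence[float]) -> Optional[List[float]]:
        """Return the stored controller whose body is most similar to the new one."""
        entries = self.slots.get(ctype)
        if not entries:
            return None
        best = min(entries, key=lambda e: (math.dist(e.fine, fine), -e.fitness))
        return list(best.controller)
```

A coarse type still guarantees a match whenever any robot of that type has been seen, while the fine descriptor only ranks candidates within the type, so the probability of a match is not reduced.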

In the above survey, we have largely ignored the role that the environment can play in enabling the discovery of useful knowledge that can later be exploited by learning. It is well recognized that environmental complexity fosters the evolution of morphological intelligence [14]. Hence, although we have described a general framework that elucidates the mechanisms by which evolution and learning can interact, the environment in which this occurs is also crucial. For example, Gupta et al. [9] showed that exposing robots to a variety of physical environments (e.g. varying the friction or angle of the surface over which the robots move) and tasks (the objectives to be optimized) during evolution with learning leads to robots that learn faster. This is another obvious direction for future work, to gain insight into what form of knowledge is most useful and what environments are required to facilitate the generation of useful knowledge.

(b) Limitations

We note that this article restricts the discussion to the joint optimization of morphology and control to realize the design of a robot optimized with respect to a specific goal. Although there are studies in swarm robotics that employ social and cultural learning methods (e.g. Thenius et al. [27]), they tend to focus only on learning control mechanisms for robots with fixed morphology. However, there is an emerging body of work that takes morphological change into account when adapting behaviour: Cully et al. [50] considered the role of learning in adapting controllers in response to morphological change that occurs during a lifetime, while Walker et al. [51] study a form of learning in which the morphology of a soft robot can deliberately adapt during an individual lifetime (for example, growing an additional ‘body part’), mediated by environmental signals. A deeper study of both individual and cultural learning mechanisms is likely to prove fruitful here too.

To conclude, the take-away message is that knowledge in a variety of forms, obtained through multiple learning mechanisms, plays a key role in augmenting evolution in evolutionary robotics, where robots have both adaptable form and behaviour, and many lines of research remain open.

Endnotes

The modern version of this method, the covariance matrix adaptation evolution strategy (CMA-ES), is to date one of the most commonly used methods in continuous optimization.

The same is true whether in simulation or with physical robots, given that most modern simulators use sophisticated and realistic physics engines to model environmental interactions.

Either in simulation or in reality.

A central pattern generator is a form of neural circuitry, typically found in vertebrates, that outputs cyclic patterns [34].

Data accessibility

This article has no additional data.

Authors' contributions

E.H.: conceptualization, funding acquisition, writing—original draft; L.L.G.: conceptualization, writing—review and editing.

Competing interests

We declare we have no competing interests.

Funding

This work is partially supported by EPSRC EP/R035733/1.

References

1. Turing A. 1950. Computing machinery and intelligence. Mind 59, 433-460. (doi:10.1093/mind/LIX.236.433)

2. Rechenberg I. 1973. Evolutionsstrategie: Optimierung technischer Systeme nach Prinzipien der biologischen Evolution. PhD thesis, Technical University of Berlin, Berlin, Germany. Reprinted by Frommann-Holzboog.

3. Holland JH et al. 1992. Adaptation in natural and artificial systems: an introductory analysis with applications to biology, control, and artificial intelligence. Cambridge, MA: MIT Press.

4. Buchanan E et al. 2020. Bootstrapping artificial evolution to design robots for autonomous fabrication. Robotics 9, 106. (doi:10.3390/robotics9040106)

5. Doncieux S, Bredeche N, Mouret JB, Eiben AEG. 2015. Evolutionary robotics: what, why, and where to. Front. Rob. AI 2, 4.

6. Lipson H, Pollack JB. 2000. Automatic design and manufacture of robotic lifeforms. Nature 406, 974-978. (doi:10.1038/35023115)

7. Brodbeck L, Hauser S, Iida F. 2015. Morphological evolution of physical robots through model-free phenotype development. PLoS ONE 10, e0128444. (doi:10.1371/journal.pone.0128444)

8. Hale MF et al. 2019. The ARE robot fabricator: how to (re)produce robots that can evolve in the real world. In The 2019 Conf. on Artificial Life: A Hybrid of the European Conf. on Artificial Life (ECAL) and the Int. Conf. on the Synthesis and Simulation of Living Systems (ALIFE), pp. 95-102. Cambridge, MA: MIT Press.

9. Gupta A et al. 2021. Embodied intelligence via learning and evolution. Nat. Commun. 12, 5721. (doi:10.1038/s41467-021-25874-z)

10. Hiller J, Lipson H. 2011. Automatic design and manufacture of soft robots. IEEE Trans. Rob. 28, 457-466. (doi:10.1109/TRO.2011.2172702)

11. Jelisavcic M, De Carlo M, Hupkes E, Eustratiadis P, Orlowski J, Haasdijk E, Auerbach JE, Eiben AE. 2017. Real-world evolution of robot morphologies: a proof of concept. Artif. Life 23, 206-235. (doi:10.1162/ARTL_a_00231)

12. Hale MF et al. 2020. Hardware design for autonomous robot evolution. In 2020 IEEE Symp. Ser. on Computational Intelligence (SSCI), pp. 2140-2147. New York, NY: IEEE.

13. Brooks RA. 1991. Intelligence without reason. In Proc. of the 12th Int. Joint Conf. on Artificial Intelligence, IJCAI’91, vol. 1, pp. 569-595. San Francisco, CA, USA: Morgan Kaufmann Publishers Inc.

14. Pfeifer R, Bongard J. 2006. How the body shapes the way we think: a new view of intelligence. Cambridge, MA: MIT Press.

15. Bongard J. 2011. Morphological change in machines accelerates the evolution of robust behavior. Proc. Natl Acad. Sci. USA 108, 1234-1239. (doi:10.1073/pnas.1015390108)

16. Jin Y, Meng Y. 2010. Morphogenetic robotics: an emerging new field in developmental robotics. IEEE Trans. Syst. Man Cybern. C (Appl. Rev.) 41, 145-160. (doi:10.1109/TSMCC.2010.2057424)

17. Vujovic V, Rosendo A, Brodbeck L, Iida F. 2017. Evolutionary developmental robotics: improving morphology and control of physical robots. Artif. Life 23, 169-185. (doi:10.1162/ARTL_a_00228)

18. Le Goff L, Hart E. 2021. On the challenges of jointly optimising robot morphology and control using a hierarchical optimisation scheme. In 2021 Genetic and Evolutionary Computation Conf. Companion, Lille, France. New York, NY: ACM.

19. Le Goff LK et al. 2021. Morpho-evolution with learning using a controller archive as an inheritance mechanism. arXiv. (https://arxiv.org/abs/2104.04269)

20. Eiben A, Bredeche N, Hoogendoorn M, Stradner J, Timmis J, Tyrrell A, Winfield A. 2013. The triangle of life: evolving robots in real-time and real-space. In Proc. of the 12th Eur. Conf. on the Synthesis and Simulation of Living Systems (ECAL 2013), Taormina, Italy (eds Lio P, Miglino O, Nicosia G, Nolfi S, Pavone M), pp. 1056-1063. Cambridge, MA: MIT Press.

21. Eiben A, Hart E. 2020. If it evolves it needs to learn. In Proc. of the 2020 Genetic and Evolutionary Computation Conf. Companion, Cancun, Mexico, pp. 1383-1384. New York, NY: ACM.

22. Jelisavcic M, Glette K, Haasdijk E, Eiben A. 2019. Lamarckian evolution of simulated modular robots. Front. Rob. AI 6, 9. (doi:10.3389/frobt.2019.00009)

23. Reynolds RG. 1999. Cultural algorithms: theory and applications. In New ideas in optimization (eds Corne D, Glover F, Dorigo M), pp. 367-378. London, UK: McGraw Hill.

24. Coello Coello CA, Becerra RL. 2004. Efficient evolutionary optimization through the use of a cultural algorithm. Eng. Optim. 36, 219-236. (doi:10.1080/03052150410001647966)

25. Bredeche N, Fontbonne N. 2021. Social learning in swarm robotics. Phil. Trans. R. Soc. B 377, 20200309. (doi:10.1098/rstb.2020.0309)

26. Winfield AFT, Blackmore S. 2021. Experiments in artificial culture: from noisy imitation to storytelling robots. Phil. Trans. R. Soc. B 377, 20200323. (doi:10.1098/rstb.2020.0323)

27. Thenius R et al. 2016. subCULTron: cultural development as a tool in underwater robotics. In Artificial life and intelligent agents symposium, pp. 27-41. Berlin, Germany: Springer.

28. Ali MZ, Daoud MI, Alazrai R, Reynolds RG. 2020. Evolving emergent team strategies in robotic soccer using enhanced cultural algorithms. In Cultural algorithms: tools to model complex dynamic social systems, pp. 119-142. Hoboken, NJ: Wiley.

29. Sims K. 1994. Evolving 3D morphology and behavior by competition. Artif. Life 1, 353-372. (doi:10.1162/artl.1994.1.4.353)

30. Veenstra F, Glette K. 2020. How different encodings affect performance and diversification when evolving the morphology and control of 2D virtual creatures. In Artificial life Conf. Proc., Vermont, USA, pp. 592-601. Cambridge, MA: MIT Press.

31. Lindenmayer A, Jürgensen H. 1992. Grammars of development: discrete-state models for growth, differentiation, and gene expression in modular organisms. In Lindenmayer systems (eds Rozenberg G, Salomaa A), pp. 3-21. Berlin, Germany: Springer.

32. Lipson H, Sunspiral V, Bongard J, Cheney N. 2016. On the difficulty of co-optimizing morphology and control in evolved virtual creatures. In Artificial life Conf. Proc., Cancun, Mexico, vol. 13, pp. 226-233. Cambridge, MA: MIT Press.

33. Cheney N, Bongard J, SunSpiral V, Lipson H. 2018. Scalable co-optimization of morphology and control in embodied machines. J. R. Soc. Interface 15, 20170937. (doi:10.1098/rsif.2017.0937)

34. Ijspeert AJ. 2008. Central pattern generators for locomotion control in animals and robots: a review. Neural Netw. 21, 642-653. (doi:10.1016/j.neunet.2008.03.014)

35. Miras K, De Carlo M, Akhatou S, Eiben A. 2020. Evolving-controllers versus learning-controllers for morphologically evolvable robots. In Int. Conf. on the Applications of Evolutionary Computation (Part of EvoStar), Seville, Spain, pp. 86-99. Berlin, Germany: Springer.

36. Le Goff LK, Hart E, Coninx A, Doncieux S. 2020. On pros and cons of evolving topologies with novelty search. In Artificial life Conf. Proc., Vermont, USA, pp. 423-431. Cambridge, MA: MIT Press.

37. Waddington CH. 1942. Canalization of development and the inheritance of acquired characters. Nature 150, 563-565. (doi:10.1038/150563a0)

38. Le Goff LK et al. 2020. Sample and time efficient policy learning with CMA-ES and Bayesian optimisation. In Artificial life Conf. Proc., Vermont, USA, pp. 432-440. Cambridge, MA: MIT Press.

39. Lehman J, Stanley KO. 2011. Novelty search and the problem with objectives. In Genetic programming theory and practice IX (eds Riolo R, Vladislavleva E, Moore J), pp. 37-56. Berlin: Springer.

40. Reynolds RG. 1994. An introduction to cultural algorithms. In Proc. Third Annual Conf. on Evolutionary Programming, vol. 24, pp. 131-139. Singapore: World Scientific.

41. Liao T, Wang G, Yang B, Lee R, Pister K, Levine S, Calandra R. 2019. Data-efficient learning of morphology and controller for a microrobot. In 2019 Int. Conf. on Robotics and Automation (ICRA), pp. 2488-2494. New York, NY: IEEE.

42. Metzen JH, Fabisch A, Hansen J. 2015. Bayesian optimization for contextual policy search. In Proc. second machine learning in planning and control of robot motion workshop. Hamburg, Germany: IROS.

43. Lehman J, Stanley KO. 2011. Abandoning objectives: evolution through the search for novelty alone. Evol. Comput. 19, 189-223. (doi:10.1162/EVCO_a_00025)

44. Lehman J, Stanley KO. 2011. Evolving a diversity of virtual creatures through novelty search and local competition. In Proc. 13th Ann. Conf. on Genetic and Evolutionary Computation, Dublin, Ireland, pp. 211-218. New York, NY: ACM.

45. Gravina D, Liapis A, Yannakakis GN. 2018. Fusing novelty and surprise for evolving robot morphologies. In Proc. Genetic and Evolutionary Computation Conference, Kyoto, Japan, pp. 93-100. New York, NY: ACM.

46. Joachimczak M, Suzuki R, Arita T. 2015. Improving evolvability of morphologies and controllers of developmental soft-bodied robots with novelty search. Front. Rob. AI 2, 33.

47. Krčah P. 2012. Solving deceptive tasks in robot body-brain co-evolution by searching for behavioral novelty. In Advances in robotics and virtual reality, pp. 167-186. Berlin, Germany: Springer.

48. Methenitis G, Hennes D, Izzo D, Visser A. 2015. Novelty search for soft robotic space exploration. In Proc. 2015 Ann. Conf. on Genetic and Evolutionary Computation, Madrid, Spain, pp. 193-200. New York, NY: ACM.

49. Reynolds R, Peng B. 2004. Cultural algorithms: modeling of how cultures learn to solve problems. In 16th IEEE Int. Conf. on Tools with Artificial Intelligence, Boca Raton, FL, pp. 166-172. New York, NY: IEEE.

50. Cully A, Clune J, Tarapore D, Mouret JB. 2015. Robots that can adapt like animals. Nature 521, 503-507. (doi:10.1038/nature14422)

51. Walker K, Hauser H, Risi S. 2021. Growing simulated robots with environmental feedback: an eco-evo-devo approach. In Proc. Genetic and Evolutionary Computation Conf. Companion, Lille, France, pp. 113-114. New York, NY: ACM.
