Abstract

Introduction:

High-quality epidemic forecasting and prediction are critical to supporting the response to local, regional, and global infectious disease threats. Other fields of biomedical research use consensus reporting guidelines to ensure standardization and quality of research practice, and to provide a framework for end-users to interpret the validity of study results. The purpose of this study was to determine whether guidelines exist specifically for epidemic forecasting and prediction publications.

Methods:

We undertook a formal systematic review to identify and evaluate any published infectious disease epidemic forecasting and prediction reporting guidelines. This review leveraged a team of 18 investigators from US Government and academic sectors.

Results:

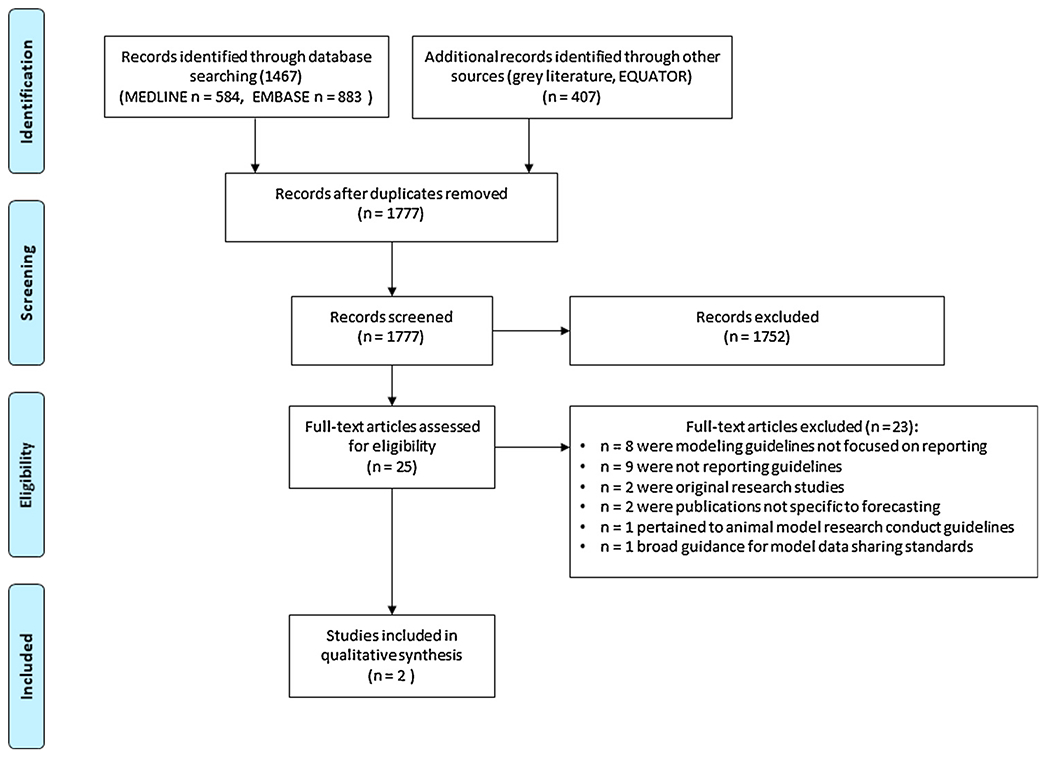

A literature database search through May 26, 2019, identified 1467 publications (MEDLINE n = 584, EMBASE n = 883), and a grey-literature review identified a further 407 publications, yielding a total of 1777 unique publications after removal of duplicates. A paired-reviewer system screened in 25 potentially eligible publications, of which two were ultimately deemed eligible. A qualitative review of these two published reporting guidelines indicated that neither was specific to epidemic forecasting and prediction, although both described reporting items that may be relevant to epidemic forecasting and prediction studies.

Conclusions:

This systematic review confirms that no specific guidelines have been published to standardize the reporting of epidemic forecasting and prediction studies. These findings underscore the need to develop such reporting guidelines in order to improve the transparency, quality and implementation of epidemic forecasting and prediction research in operational public health.

Keywords: Epidemic, Outbreak, Forecasting, Prediction, Modeling, Reporting guidelines

1. Introduction

Epidemic forecasting and prediction form a critical biomedical research enterprise with major public and global health relevance (Rivers et al., 2019; Polonsky et al., 2019). Forecasting and prediction of epidemiological phenomena have offered critical insights into recent outbreaks, including those caused by Ebola virus, Zika virus, chikungunya virus, and pandemic influenza viruses (Worden et al., 2019; Perkins et al., 2016; Del Valle et al., 2018; Kobres et al., 2019; Keegan et al., 2017; Nsoesie et al., 2014). During recent Ebola outbreaks, for instance, modeling research has predicted short- and longer-term case count trajectories (Worden et al., 2019), estimated the impact of violence on outbreak growth and control (Wannier et al., 2019), predicted the effectiveness of non-pharmaceutical and vaccine countermeasures (Merler et al., 2016), and quantified the risk of international spread (Gomes et al., 2014).

While the terms ‘forecasting’ and ‘prediction’ are often conflated and heterogeneously defined, forecasting research typically offers quantitative statements about an event, outcome, or trend that has not yet been observed, conditional on data that have been observed. In the context of infectious disease epidemics, this often refers to short- to mid-term projections of disease incidence and related targets, such as the timing of peak incidence. Such forecasts can predict epidemic growth, spatial spread, peak and total case burden, mortality, and morbidity in ways relevant to resource management (Rivers et al., 2019; Kobres et al., 2019). The term ‘prediction’ is used more broadly and loosely in epidemiological research, and may refer to models that examine the mechanistic drivers of epidemiological characteristics, such as human mobility, population immunity, contact patterns, public health interventions, and climatic factors (Perkins et al., 2016; Ewing et al., 2017), as well as to studies that estimate epidemiological characteristics with inherent forecasting value, such as R0 (Kobres et al., 2019). Forecasting research often uses data on these and other covariates. Epidemic forecasting and prediction is not limited to pandemics; the approaches also enhance routine preparedness for seasonal communicable diseases such as non-pandemic influenza and dengue (Reich et al., 2019; Spreco et al., 2018; Debellut et al., 2018; Lauer et al., 2018; Lowe et al., 2018, 2017). In this manuscript, we refer to this collective body of research as “epidemic forecasting and prediction research”.
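As a minimal illustration of a forecast made conditional on observed data, the Python sketch below fits a log-linear (exponential) growth rate to a short series of case counts by least squares and projects it forward. The counts are hypothetical, and exponential growth is a deliberately simple assumption chosen for illustration; it is not a method used by the studies cited above.

```python
import math


def fit_log_linear_growth(counts):
    """Least-squares fit of log(count) = a + r * t for t = 0, 1, ..., n - 1.

    Returns the intercept a and the per-period growth rate r.
    Requires at least two strictly positive counts.
    """
    n = len(counts)
    if n < 2 or any(c <= 0 for c in counts):
        raise ValueError("need at least two positive counts")
    ts = range(n)
    ys = [math.log(c) for c in counts]
    t_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    sxx = sum((t - t_mean) ** 2 for t in ts)
    sxy = sum((t - t_mean) * (y - y_mean) for t, y in zip(ts, ys))
    r = sxy / sxx
    a = y_mean - r * t_mean
    return a, r


def forecast(counts, horizon):
    """Point forecasts for the `horizon` periods after the last observation,
    conditional only on the observed `counts` (no uncertainty interval)."""
    a, r = fit_log_linear_growth(counts)
    n = len(counts)
    return [math.exp(a + r * (n + h)) for h in range(horizon)]


# Hypothetical weekly case counts (illustration only) that double each week.
observed = [10, 20, 40, 80]
print([round(x) for x in forecast(observed, 2)])  # [160, 320]
```

The sketch returns point forecasts only; it says nothing about forecast uncertainty.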

Many fields of biomedical research use consensus reporting guidelines to promote standardization and improve the quality of research practice. These reporting guidelines also provide a framework for end-users to interpret the validity of research approaches and findings. The Enhancing the Quality and Transparency of Health Research (EQUATOR) network describes biomedical research reporting guidelines as “simple, structured tools for health researchers to use while writing manuscripts” (Anon, 2019a). Rather than providing guidance on how to perform research, they enumerate what should be reported in publications to “ensure a manuscript can be, for example, understood by a reader or replicated by a researcher” (Anon, 2019b). In the case of epidemic forecasting, such readers may include those in operational public health (such as government health officials), epidemic model developers who may seek to reproduce or leverage the modeling methods presented in other studies, and the mainstream media (reporting to the general public on an epidemic). The EQUATOR network further defines a reporting guideline as “a checklist, flow diagram, or structured text to guide authors in reporting a specific type of research, developed using explicit methodology” (Anon, 2019b). This emphasis on explicit methodology requires a structured, reproducible consensus process that is described a priori (Moher et al., 2010). Prominent reporting guidelines include the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement (Anon, 2019c), the Consolidated Standards of Reporting Trials (CONSORT) statement (Anon, 2019d), the Standards for Reporting of Diagnostic Accuracy Studies (STARD) guidelines (Cohen et al., 2016), and the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) guidelines (Anon, 2019e).
There is evidence that reporting guidelines can improve the quality and clarity of academic publications. For example, the reporting of clinical trial abstracts in high-impact medical journals improved after journal editors implemented the CONSORT guidelines (Hopewell et al., 2012).

To our knowledge, no current reporting guideline exists for epidemic forecasting and prediction research. Development of a comprehensive epidemic forecasting and prediction research reporting guideline may ultimately lead to improvements in: i) the consistency of reporting, ii) the reproducibility of results, iii) the quality of practice, and iv) the transparency of research. Underscoring the need for such reporting standardization, a recent evaluation of Zika epidemic forecasting and prediction studies found that there was substantial heterogeneity in the reporting of study methods, uncertainties (assessed through reporting of uncertainty intervals), data, and other critical information (e.g. clear and accurate display of model output) which would be needed to completely understand and replicate the work (Kobres et al., 2019).

An essential first step in developing epidemic forecasting reporting guidelines is a systematic review of existing guidelines, in keeping with best practice for developing health research reporting guidelines (Moher et al., 2010; Anon, 2019c). We therefore undertook a systematic review to (i) identify all published epidemic forecasting or prediction reporting guidelines; and (ii) qualitatively evaluate the strengths, limitations, and suitability of any guidelines found for the field of epidemic forecasting and prediction research.

2. Materials and methods

We followed the PRISMA statement to conduct this systematic review (Table S1) (Anon, 2019c). Before the review commenced, an a priori systematic review protocol was developed and agreed upon by the entire review team (n = 18), made up of members from the U.S. Government and academia. Our protocol was not registered in the International Prospective Register of Systematic Reviews (PROSPERO) (Anon, 2019g), but is publicly available at https://github.com/cmrivers?tab=repositories.

2.1. Search strategy

MEDLINE and EMBASE electronic databases were searched through May 26, 2019, using the following search string: “[epidemic OR outbreak OR influenza OR Ebola OR Zika OR SARS OR Chikungunya OR MERS OR pathogen OR pandemic OR virus OR viruses] AND [forecasting OR prediction OR modeling OR modelling] AND guidelines”. The search was not restricted to the title or abstract fields. The pathogen-specific terms (e.g., “Zika”, “influenza”) were included to capture recent major outbreaks and epidemics; because they were combined by Boolean OR operators with generic terms such as “epidemic”, “outbreak”, “pathogen”, and “virus”, they did not restrict the search to these pathogens or pathogen categories.
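The structure of this search (terms joined by OR within each bracketed group, and groups joined by AND) can be sketched in Python. The toy matcher below is a hypothetical simplification that checks whole-word matches in a single text field; it does not reproduce how MEDLINE or EMBASE actually index and search records. It shows that a record must contain at least one term from every group, and that the generic terms in the first group admit records about pathogens not named in the search.

```python
import re

# Term groups copied from the search terms above (OR within a group, AND across groups).
CONTEXT_TERMS = ["epidemic", "outbreak", "influenza", "Ebola", "Zika", "SARS",
                 "Chikungunya", "MERS", "pathogen", "pandemic", "virus", "viruses"]
METHOD_TERMS = ["forecasting", "prediction", "modeling", "modelling"]
GUIDELINE_TERMS = ["guidelines"]


def build_query(*groups):
    """Render term groups as a Boolean string: (t1 OR t2 ...) AND (...)."""
    rendered = [f"({' OR '.join(g)})" if len(g) > 1 else g[0] for g in groups]
    return " AND ".join(rendered)


def toy_match(text, *groups):
    """Hypothetical matcher: True if `text` contains at least one whole-word
    term from every group. Case-insensitive exact word matching only; no
    truncation, subject-heading mapping, or field restriction is modeled."""
    words = set(re.findall(r"[a-z0-9]+", text.lower()))
    return all(any(t.lower() in words for t in g) for g in groups)


groups = (CONTEXT_TERMS, METHOD_TERMS, GUIDELINE_TERMS)
print(build_query(*groups))
# A record about an unnamed pathogen still matches via the generic term "virus":
print(toy_match("Guidelines for modeling dengue virus transmission", *groups))  # True
# ...but a record with no term from the first group does not:
print(toy_match("Forecasting guidelines for dengue", *groups))  # False
```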

To identify relevant grey literature, we (i) contacted leading experts in the field of epidemic modeling and forecasting through an epidemic model implementation working group, and (ii) reviewed the EQUATOR website for any published epidemic forecasting guidelines (Anon, 2019a).

2.2. Eligibility criteria

Our inclusion criterion was any publication proposing a set of reporting guidelines for epidemic forecasting or prediction research. Our exclusion criteria were as follows:

- Non-communicable disease modeling reporting guidelines
- Publications which proposed how to perform epidemic modeling studies (rather than how to report them)
- Modeling reporting guidelines which were not specific to epidemic forecasting and prediction
- Narrative review articles
- Perspective pieces
- Editorials
- Duplicate studies
- Descriptive or analytic epidemiological publications
- Clinical management or diagnostic guidelines

2.3. Study screening and eligibility determination

Literature review results were divided among 10 unique reviewer pairs drawn from the 18 investigators. Both reviewers in each pair independently screened titles and abstracts for potential eligibility using citation management software. If the two reviewers' lists of screened-in articles differed, they discussed the discrepancies to reach consensus; a third-party adjudicator decided when a pair could not agree. For articles screened in at this first round, the reviewer pair repeated the screening and consensus process using the full text to determine eligibility, and documented the reasons for excluding any article. A third independent reviewer adjudicated when reviewers could not reach consensus on the final eligibility of any study.
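The screening and adjudication steps described above can be expressed as a small decision procedure. The sketch below is hypothetical: the function name, the callable interface, and the example records are illustrative assumptions, not the software or data used in this review. The same procedure would apply to both the title/abstract round and the full-text round.

```python
def paired_screen(records, reviewer_a, reviewer_b, discuss, adjudicate):
    """Paired screening with consensus discussion and third-party adjudication.

    reviewer_a, reviewer_b: record -> bool, independent include decisions.
    discuss: record -> bool or None; the pair's consensus decision, or None
             if they cannot agree.
    adjudicate: record -> bool; the third reviewer's decision.
    Returns (included records, how each decision was reached).
    """
    included, route = [], {}
    for rec in records:
        a, b = reviewer_a(rec), reviewer_b(rec)
        if a == b:
            decision = a
            route[rec] = "agreement"
        else:
            consensus = discuss(rec)
            if consensus is not None:
                decision = consensus
                route[rec] = "consensus"
            else:
                decision = adjudicate(rec)
                route[rec] = "adjudication"
        if decision:
            included.append(rec)
    return included, route


# Hypothetical decisions for five records (illustration only).
a_says = {"r1": True, "r2": True, "r3": True, "r4": False, "r5": False}
b_says = {"r1": True, "r2": False, "r3": False, "r4": True, "r5": False}
pair_consensus = {"r2": True, "r3": None, "r4": False}
third_reviewer = {"r3": True}

inc, how = paired_screen(list(a_says), a_says.get, b_says.get,
                         pair_consensus.get, third_reviewer.get)
print(inc)  # ['r1', 'r2', 'r3']
```

Recording the route for each record also gives a simple audit trail, which parallels the documentation of exclusion reasons described above.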

2.4. Data collection process and data items

Eligible articles were qualitatively described by the reviewer pair in conjunction with a third reviewer.

3. Results

Our literature database search identified 1467 publications (MEDLINE n = 584, EMBASE n = 883). A search of the EQUATOR website and discussions with experts identified a further 405 and 2 publications, respectively (Fig. 1). After removal of duplicates, 1777 unique publications remained; of these, 25 were screened in for further consideration through the first-round review of titles and abstracts (Fig. 1). Two of those publications were ultimately deemed eligible by the paired-reviewer consensus process through full-text review (Eddy et al., 2012; Field et al., 2014). Our further qualitative review of these two publications found that both were limited in their specificity to, and applicability for, epidemic forecasting and prediction reporting (Eddy et al., 2012; Field et al., 2014).
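The counts above can be checked for internal consistency. The sketch below recomputes the flow-diagram totals from the reported numbers; the number of duplicates removed is not stated in the text and is inferred here by subtraction, assuming the gap between records identified and unique publications reflects de-duplication.

```python
def prisma_counts(medline, embase, equator, experts, unique, screened_in, eligible):
    """Derive flow-diagram totals from the counts reported in the text."""
    database_total = medline + embase
    grey_total = equator + experts
    identified = database_total + grey_total
    return {
        "database_total": database_total,
        "grey_total": grey_total,
        "identified": identified,
        # Inferred, not reported: records identified minus unique records.
        "duplicates_removed": identified - unique,
        "excluded_title_abstract": unique - screened_in,
        "excluded_full_text": screened_in - eligible,
    }


counts = prisma_counts(medline=584, embase=883, equator=405, experts=2,
                       unique=1777, screened_in=25, eligible=2)
print(counts)
```

With the reported numbers this gives 1467 database records, 407 grey-literature records, 1874 records in total, an implied 97 duplicates, 1752 exclusions at title and abstract review, and 23 exclusions at full-text review.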

Fig. 1.

PRISMA flow chart.

The first, published by Eddy et al. in 2012, sets out recommendations for the transparency of medical decision-making models (Eddy et al., 2012). The major rationale the authors offer for these recommendations is that “trust and confidence are critical to the success of health care models” and can be achieved through transparency and validation. The authors derived the recommendations through an iterative, structured process in which individuals (model users and model developers) voted on draft recommendations, which were then made available for further comment. However, these recommendations stop short of formal guidelines, were designed to apply to the broad category of medical decision-making models rather than being tailored to epidemic forecasting, and do not encompass all of the uses relevant to epidemics (such as guidance on reporting prospective forecasts and their method of validation, or documenting the source of epidemic case count data). Further, the recommendations cover both model reporting and the conduct of model validation.

Nevertheless, several aspects of these recommendations were found to be potentially relevant to epidemic forecasting and prediction research. The authors call for modeling results to be transparent, with sufficient non-technical documentation to make them accessible to any interested reader. Items suggested for this non-technical documentation included the purpose of the model, the model data sources, study funding sources, a graphical representation of the model components, model inputs, model outputs, the effects of uncertainty, the potential applications of the model, and the limitations of its intended applications (Eddy et al., 2012).
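One way to make such a list of reporting items operational is to encode it as a checklist and report which items a manuscript omits. The sketch below is a hypothetical illustration: the item wording paraphrases the list above, and the checklist is not one published by Eddy et al.

```python
# Hypothetical encoding of the non-technical documentation items listed by
# Eddy et al. (2012), as summarized in the text; wording is paraphrased.
NON_TECHNICAL_ITEMS = (
    "purpose of the model",
    "data sources",
    "funding sources",
    "graphical representation of model components",
    "model inputs",
    "model outputs",
    "effects of uncertainty",
    "potential applications",
    "limitations of intended applications",
)


def missing_items(reported, checklist=NON_TECHNICAL_ITEMS):
    """Return checklist items absent from `reported`, in checklist order."""
    reported = set(reported)
    return [item for item in checklist if item not in reported]


# A hypothetical manuscript that documents everything except the last two items.
manuscript = NON_TECHNICAL_ITEMS[:7]
print(missing_items(manuscript))
# ['potential applications', 'limitations of intended applications']
```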

Eddy et al. also call for extended technical model documentation to allow full replication by others with sufficient modeling expertise. Yet they highlight the challenges of providing such technical documentation in full, including intellectual property concerns, dynamic changes to the model components over time, and the need for appropriate expertise to interpret technical documentation (particularly model code) (Eddy et al., 2012). The authors suggest work-around solutions such as running code and providing model output to others upon request, and/or making full technical model documentation available upon private request rather than providing complete code in the public domain (Eddy et al., 2012).

The second candidate guideline, by Field et al., is an extension of the STROBE statement for molecular epidemiology and seeks to “improve the reporting of studies and, in turn, to assist interpretation of the data and increase understanding of what was actually done by researchers” (Field et al., 2014). This guideline, named Strengthening the Reporting of Molecular Epidemiology for Infectious Diseases (STROME-ID), is not explicitly aimed at epidemic forecasting and prediction research. Rather, it applies to a wide range of molecular epidemiology studies, from descriptive clonal typing to advanced phylodynamics (Field et al., 2014). Nevertheless, these recommendations also offer several reporting items of potential relevance to epidemic forecasting. These include (i) explicit description of case definitions, (ii) documentation of sampling methods, (iii) documentation of the study time frame, (iv) description of data sources and related laboratory diagnostic methods, (v) description of missing data, (vi) documentation of relevant ethics approvals, (vii) evaluation of the consistency of findings between different lines of evidence, (viii) description of the study objectives, and (ix) acknowledgement of case ascertainment bias and non-independence, if present. The development of STROME-ID also followed a structured, iterative process: consecutive versions of the guidelines were circulated to reach consensus on content, incorporating a range of stakeholders and complementary expertise from multiple countries, fields, and sectors (Field et al., 2014).

In addition to these two eligible articles, our screening process identified several publications that sought to standardize good practice in the conduct of biomedical modeling more broadly than forecasting and prediction. In 2011, the International Society for Pharmacoeconomics and Outcomes Research and Society for Medical Decision Making (ISPOR-SMDM) Modeling Good Research Practices Task Force generated a set of “optimal practices that all models should strive toward” (Caro et al., 2012). This task force derived a series of good practice recommendations covering model conceptualization, event simulation, dynamic transmission modeling, model parameter estimation, and uncertainty analysis (Caro et al., 2012). These were not eligible for our review because they pertain to modeling practice rather than model reporting. However, they may be of general interest to the epidemic modeling community, and we cite them here (Caro et al., 2012; Briggs et al., 2012; Pitman et al., 2012). Similar modeling practice guidelines for health technology assessment (Dahabreh et al., 2008) and disaster response modeling (Brandeau et al., 2009) were also noted.

4. Conclusions

While our systematic review identified two eligible publications that used consensus processes to highlight important features of modeling studies (Eddy et al., 2012; Field et al., 2014), neither described reporting guidelines specific to epidemic forecasting and prediction. This systematic review therefore confirms that no published recommendations exist to standardize the reporting of epidemic forecasting and prediction studies. This contrasts with multiple other biomedical research fields that have clear standards for study reporting, many of which are endorsed and enforced by biomedical journals (Anon, 2019a, h).

One potential limitation of this systematic review is that, while it included two databases (MEDLINE and EMBASE), it did not include others such as Web of Science or SciELO. Including SciELO in particular might have mitigated a second limitation, our use of English search terms only, although we did not restrict the MEDLINE and EMBASE searches by language, and MEDLINE does identify the English-translated abstracts that often accompany non-English articles (Anon, 2020). A final limitation is that we did not explicitly search for reporting guidelines for non-medical forecasting. Such guidelines may exist in weather forecasting, for example, a field to which infectious disease forecasters have often looked for lessons on how best to implement their research (Viboud and Vespignani, 2019).

To redress the lack of appropriate reporting standards for epidemic forecasting and prediction (Kobres et al., 2019), we have launched the Epidemic Forecasting and Reporting Guidelines (EPIFORGE) initiative (Anon, 2019f). The EPIFORGE initiative aims to develop guidance on how to report epidemic forecasting and prediction studies (not how to perform such studies) (Anon, 2019f). Broadly, EPIFORGE aims to improve the consistency of epidemic forecasting reporting and, thereby, forecasting reproducibility, quality, and transparency. The systematic review presented here was an important step in the EPIFORGE process because it confirms the lack of a suitable existing guideline to meet the needs of this field (Moher et al., 2010). The results of this effort are expected in Spring 2020; so far, the EPIFORGE reporting checklist development process has examined items within the domains of reproducibility, transparency, validity, interpretability, funding, and sponsorship.

Further, this systematic review has provided valuable reference material for the EPIFORGE guideline development process (Anon, 2019f). Although not specific to epidemic forecasting, the model reporting recommendations by Eddy et al. and Field et al. have prompted consideration of case definitions, laboratory methods, code sharing, study time frames, missing data, model applications, funding sources, model structure, and bias in the evolving EPIFORGE guidelines (Eddy et al., 2012; Field et al., 2014). The guideline development principles used by Eddy et al. and Field et al. have also been adopted into the EPIFORGE methods, which use a structured, iterative consensus process (a three-round Delphi process) across a range of model developers and model users from multiple sectors and countries. These methods follow best-practice guidance for developing health research reporting guidelines (Anon, 2019b; Moher et al., 2010). Such an approach is critical to maximizing the quality, acceptability, and eventual implementation of the final EPIFORGE recommendations, and to improving the quality, transparency, and reproducibility of forecasting and prediction practice (Eddy et al., 2012; Field et al., 2014).
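The consensus rules used in the EPIFORGE Delphi rounds are not specified here. As an illustration only, the sketch below tallies a three-round Delphi process by percentage agreement, a kind of rule frequently used in Delphi studies; the 70% threshold, the candidate items, and the votes are hypothetical assumptions, and this is not the EPIFORGE protocol.

```python
def tally_delphi(items, rounds, threshold_pct=70):
    """Tally a multi-round Delphi process by percentage agreement (hypothetical rule).

    rounds: one dict per round mapping each still-pending item to a list of
            panelist votes (True = include the item).
    An item is retained once at least `threshold_pct`% vote to include it,
    dropped once at least `threshold_pct`% vote to exclude it, and otherwise
    carried into the next round. Integer arithmetic avoids float edge cases.
    """
    pending, retained, dropped = list(items), [], []
    for votes in rounds:
        carried = []
        for item in pending:
            ballot = votes[item]
            yes = sum(ballot)
            no = len(ballot) - yes
            if yes * 100 >= threshold_pct * len(ballot):
                retained.append(item)
            elif no * 100 >= threshold_pct * len(ballot):
                dropped.append(item)
            else:
                carried.append(item)
        pending = carried
    # Items still pending after the final round reached no consensus.
    return retained, dropped, pending


def ballot(yes, total=10):
    """Hypothetical ballot: `yes` include votes out of `total` panelists."""
    return [True] * yes + [False] * (total - yes)


rounds = [
    {"A": ballot(8), "B": ballot(5), "C": ballot(2), "D": ballot(5)},
    {"B": ballot(7), "D": ballot(6)},
    {"D": ballot(5)},
]
print(tally_delphi(["A", "B", "C", "D"], rounds))
# (['A', 'B'], ['C'], ['D'])
```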

Acknowledgments

The authors are grateful to the Outbreak Science and Model Implementation Working Group for hosting this activity.

Funding statement

NGR reports funding by NIGMS grant R35GM119582. BMA is supported by the Bill and Melinda Gates Foundation through the Global Good Fund. SP and IMB were funded by the Armed Forces Health Surveillance Branch (GEIS: P0116_19_WR_03.11).

Footnotes


Publisher's Disclaimer: The content is solely the responsibility of the authors and does not necessarily represent the official views of NIGMS or the National Institutes of Health. Material has been reviewed by the Walter Reed Army Institute of Research. There is no objection to its presentation and/or publication. The opinions or assertions contained herein are the private views of the author, and are not to be construed as official, or as reflecting true views of the Department of the Army or the Department of Defense. The views expressed here are those of the authors and do not necessarily reflect the official policy of the Department of Defense, Department of the Army, U.S. Army Medical Department or the U.S. Government. The findings and conclusions in this report are those of the authors and do not necessarily represent the official position of the Centers for Disease Control and Prevention.

CRediT authorship contribution statement

Simon Pollett: Conceptualization, Methodology, Formal analysis, Investigation, Data curation, Writing - original draft, Writing - review & editing, Supervision, Project administration. Michael Johansson: Investigation, Writing - review & editing, Supervision. Matthew Biggerstaff: Investigation, Writing - review & editing, Supervision. Lindsay C. Morton: Investigation, Writing - review & editing, Supervision. Sara L. Bazaco: Investigation, Writing - review & editing, Supervision. David M. Brett-Major: Investigation, Writing - review & editing, Supervision. Anna M. Stewart-Ibarra: Investigation, Writing - review & editing, Supervision. Julie A. Pavlin: Investigation, Writing - review & editing, Supervision. Suzanne Mate: Investigation, Writing - review & editing, Supervision. Rachel Sippy: Investigation, Writing - review & editing, Supervision. Laurie J. Hartman: Investigation, Writing - review & editing, Supervision. Nicholas G. Reich: Investigation, Writing - review & editing, Supervision. Irina Maljkovic Berry: Investigation, Writing - review & editing, Supervision. Jean-Paul Chretien: Investigation, Writing - review & editing, Supervision. Benjamin M. Althouse: Investigation, Writing - review & editing, Supervision. Diane Myer: Investigation, Writing - review & editing, Supervision. Cecile Viboud: Investigation, Writing - review & editing, Supervision. Caitlin Rivers: Conceptualization, Investigation, Writing - original draft, Writing - review & editing, Supervision, Project administration.

Declaration of Competing Interest

The authors declare no conflicts of interest.

References

- Anon, 2019a. www.equator-network.org, accessed October 4, 2019.

- Anon, 2019b. https://www.equator-network.org/toolkits/developing-a-reporting-guideline/, accessed October 4, 2019.

- Anon, 2019c. www.prisma-statement.org, accessed October 4, 2019.

- Anon, 2019d. www.consort-statement.org, accessed October 4, 2019.

- Anon, 2019e. https://strobe-statement.org, accessed October 4, 2019.

- Anon, 2019f. http://www.equator-network.org/library/reporting-guidelines-under-development/reporting-guidelines-under-development-for-observational-studies/#EPI-FORGE, accessed October 4, 2019.

- Anon, 2019g. https://www.crd.york.ac.uk/PROSPERO/, accessed October 6, 2019.

- Anon, 2019h. http://www.consort-statement.org/about-consort/uptake-by-journals, accessed October 6, 2019.

- Anon, 2020. https://pubmed.ncbi.nlm.nih.gov/help/, accessed March 22, 2020.

- Brandeau ML, McCoy JH, Hupert N, Holty J-E, Bravata DM, 2009. Recommendations for modeling disaster responses in public health and medicine: a position paper of the society for medical decision making. Med. Decis. Mak 29, 438–460.

- Briggs AH, Weinstein MC, Fenwick EAL, Karnon J, Sculpher MJ, Paltiel AD, 2012. Model parameter estimation and uncertainty: a report of the ISPOR-SMDM modeling good research practices task force–6. Value Health 15, 835–842.

- Caro JJ, Briggs AH, Siebert U, Kuntz KM, 2012. Modeling good research practices–overview: a report of the ISPOR-SMDM modeling good research practices task force-1. Med. Decis. Mak. 32, 667–677.

- Cohen JF, Korevaar DA, Altman DG, Bruns DE, Gatsonis CA, Hooft L, et al., 2016. STARD 2015 guidelines for reporting diagnostic accuracy studies: explanation and elaboration. BMJ Open 6, e012799.

- Dahabreh IJ, Trikalinos TA, Balk EM, Wong JB, 2008. Guidance for the Conduct and Reporting of Modeling and Simulation Studies in the Context of Health Technology Assessment.

- Debellut F, Hendrix N, Ortiz JR, Lambach P, Neuzil KM, Bhat N, et al., 2018. Forecasting demand for maternal influenza immunization in low- and lower-middle-income countries. PLoS One 13, e0199470.

- Del Valle SY, McMahon BH, Asher J, Hatchett R, Lega JC, Brown HE, et al., 2018. Summary results of the 2014-2015 DARPA Chikungunya challenge. BMC Infect. Dis 18, 245.

- Eddy DM, Hollingworth W, Caro JJ, Tsevat J, McDonald KM, Wong JB, 2012. Model transparency and validation: a report of the ISPOR-SMDM modeling good research practices task force-7. Med. Decis. Mak 32, 733–743.

- Ewing A, Lee EC, Viboud C, Bansal S, 2017. Contact, travel, and transmission: the impact of winter holidays on influenza dynamics in the United States. J. Infect. Dis 215, 732–739.

- Field N, Cohen T, Struelens MJ, Palm D, Cookson B, Glynn JR, et al., 2014. Strengthening the reporting of molecular epidemiology for infectious diseases (STROME-ID): an extension of the STROBE statement. Lancet Infect. Dis 14, 341–352.

- Gomes MFC, Pastore y Piontti A, Rossi L, Chao D, Longini I, Halloran ME, et al., 2014. Assessing the international spreading risk associated with the 2014 west African ebola outbreak. PLoS Curr. 6.

- Hopewell S, Ravaud P, Baron G, Boutron I, 2012. Effect of editors’ implementation of CONSORT guidelines on the reporting of abstracts in high impact medical journals: interrupted time series analysis. BMJ (Clin. Res. Ed.) 344, e4178.

- Keegan LT, Lessler J, Johansson MA, 2017. Quantifying Zika: Advancing the Epidemiology of Zika With Quantitative Models. J. Infect. Dis 216, S884–S900.

- Kobres P-Y, Chretien J-P, Johansson MA, Morgan JJ, Whung P-Y, Mukundan H, et al., 2019. A systematic review and evaluation of Zika virus forecasting and prediction research during a public health emergency of international concern. PLoS Negl. Trop. Dis 13, e0007451.

- Lauer SA, Sakrejda K, Ray EL, Keegan LT, Bi Q, Suangtho P, et al., 2018. Prospective forecasts of annual dengue hemorrhagic fever incidence in Thailand, 2010-2014. Proc. Natl. Acad. Sci. U. S. A 115, E2175–E2182.

- Lowe R, Stewart-Ibarra AM, Petrova D, Garcia-Diez M, Borbor-Cordova MJ, Mejia R, et al., 2017. Climate services for health: predicting the evolution of the 2016 dengue season in Machala, Ecuador. Lancet Planet. Health 1, e142–e151.

- Lowe R, Gasparrini A, Van Meerbeeck CJ, Lippi CA, Mahon R, Trotman AR, et al., 2018. Nonlinear and delayed impacts of climate on dengue risk in Barbados: a modelling study. PLoS Med. 15, e1002613.

- Merler S, Ajelli M, Fumanelli L, Parlamento S, Pastore y Piontti A, Dean NE, et al., 2016. Containing Ebola at the source with ring vaccination. PLoS Negl. Trop. Dis 10, e0005093.

- Moher D, Schulz KF, Simera I, Altman DG, 2010. Guidance for developers of health research reporting guidelines. PLoS Med. 7, e1000217.

- Nsoesie EO, Brownstein JS, Ramakrishnan N, Marathe MV, 2014. A systematic review of studies on forecasting the dynamics of influenza outbreaks. Influenza Other Respir. Viruses 8, 309–316.

- Perkins TA, Siraj AS, Ruktanonchai CW, Kraemer MUG, Tatem AJ, 2016. Model-based projections of Zika virus infections in childbearing women in the Americas. Nat. Microbiol 1, 16126.

- Pitman R, Fisman D, Zaric GS, Postma M, Kretzschmar M, Edmunds J, et al., 2012. Dynamic transmission modeling: a report of the ISPOR-SMDM modeling good research practices task force working group-5. Med. Decis. Mak 32, 712–721.

- Polonsky JA, Baidjoe A, Kamvar ZN, Cori A, Durski K, Edmunds WJ, et al., 2019. Outbreak analytics: a developing data science for informing the response to emerging pathogens. Philos. Trans. R. Soc. Lond., B, Biol. Sci 374, 20180276.

- Reich NG, Brooks LC, Fox SJ, Kandula S, McGowan CJ, Moore E, et al., 2019. A collaborative multiyear, multimodel assessment of seasonal influenza forecasting in the United States. Proc. Natl. Acad. Sci. U. S. A 116, 3146–3154.

- Rivers C, Chretien J-P, Riley S, Pavlin JA, Woodward A, Brett-Major D, et al., 2019. Using “outbreak science” to strengthen the use of models during epidemics. Nat. Commun 10, 3102.

- Spreco A, Eriksson O, Dahlstrom O, Cowling BJ, Timpka T, 2018. Evaluation of nowcasting for detecting and predicting local influenza epidemics, Sweden, 2009-2014. Emerging Infect. Dis 24, 1868–1873.

- Viboud C, Vespignani A, 2019. The future of influenza forecasts. Proc. Natl. Acad. Sci. U. S. A 116, 2802–2804.

- Wannier SR, Worden L, Hoff NA, Amezcua E, Selo B, Sinai C, et al., 2019. Estimating the impact of violent events on transmission in Ebola virus disease outbreak, Democratic Republic of the Congo, 2018–2019. Epidemics 28, 100353.

- Worden L, Wannier R, Hoff NA, Musene K, Selo B, Mossoko M, et al., 2019. Projections of epidemic transmission and estimation of vaccination impact during an ongoing Ebola virus disease outbreak in Northeastern Democratic Republic of Congo, as of Feb. 25, 2019. PLoS Negl. Trop. Dis 13, e0007512.