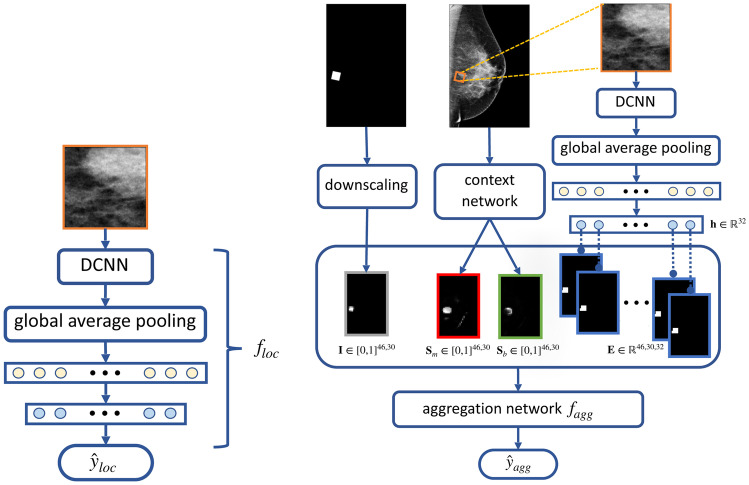

Fig. 4. Illustration of the proposed method. Left: a deep convolutional neural network that takes 256 × 256-pixel image patches as inputs. Right: the aggregation network, which takes as input the concatenation of three types of maps: 1) a location indicator map (gray), generated by downscaling the binary mask that indicates the cropping window's location; 2) two saliency maps (red and green), generated by the context network, which is based on the Globally Aware Multiple Instance Classifier [16]; 3) an embedding map (blue), formed by the representation produced by
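The input assembly for the aggregation network can be sketched in numpy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation. All function names, the `(top, left, height, width)` window format, and the image and map sizes are hypothetical. Downscaling by block averaging is an assumed choice. The caption's surviving text does not say how the embedding map is built from the patch representation, so placing that vector over the map cells covered by the window is also an assumption.

```python
import numpy as np

def location_indicator_map(image_hw, window, out_hw):
    """Binary mask of the crop window, downscaled to out_hw by block averaging.

    Hypothetical helper: window is (top, left, height, width) in full-image pixels.
    Each output cell holds the fraction of its pixels covered by the window.
    """
    H, W = image_hw
    oh, ow = out_hw
    assert H % oh == 0 and W % ow == 0, "assumes integer downscaling factors"
    mask = np.zeros((H, W), dtype=np.float32)
    top, left, h, w = window
    mask[top:top + h, left:left + w] = 1.0
    fh, fw = H // oh, W // ow
    # Split each axis into (cells, pixels-per-cell) and average within cells.
    return mask.reshape(oh, fh, ow, fw).mean(axis=(1, 3))

def embedding_map(vector, loc_map):
    # Assumption: broadcast the patch representation onto the window's cells,
    # weighted by coverage; result has shape (C, oh, ow).
    return loc_map[None, :, :] * vector[:, None, None]

def aggregation_input(loc_map, saliency_a, saliency_b, emb_map):
    # Stack along the channel axis: 1 (location) + 2 (saliency) + C (embedding).
    return np.concatenate(
        [loc_map[None], saliency_a[None], saliency_b[None], emb_map], axis=0
    )

# Illustrative usage with made-up sizes: a 1024 x 512 image, a 32 x 16 map grid,
# and a 256 x 256 crop window.
rng = np.random.default_rng(0)
loc = location_indicator_map((1024, 512), (256, 128, 256, 256), (32, 16))
sal_a = rng.random((32, 16)).astype(np.float32)  # stand-ins for context-network outputs
sal_b = rng.random((32, 16)).astype(np.float32)
emb = embedding_map(rng.random(4).astype(np.float32), loc)  # 4-dim representation
x = aggregation_input(loc, sal_a, sal_b, emb)
print(x.shape)
```

Concatenating along a leading channel axis keeps all three map types spatially aligned. Any convolutional aggregation network can therefore treat them as ordinary input channels.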