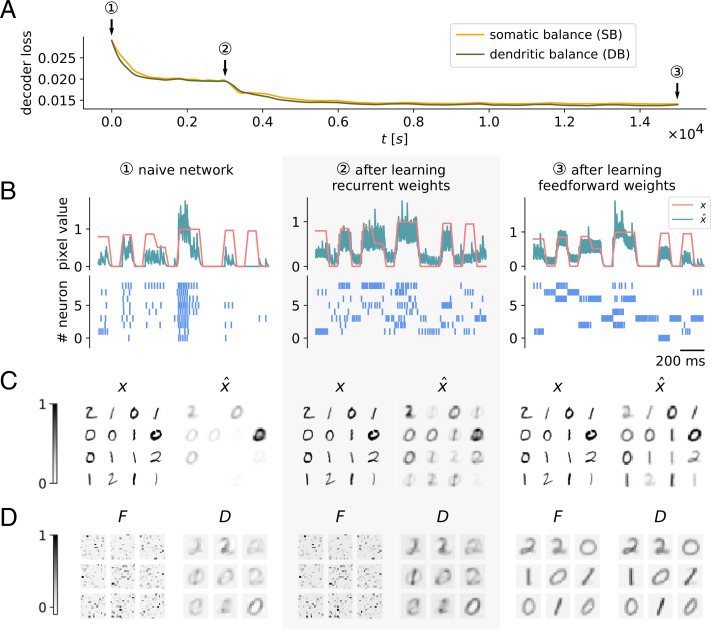

Fig. 3.

Learning an efficient encoding with recurrent and feedforward synaptic plasticity. In this simulation experiment, networks consisting of nine coding neurons encoded 16 × 16 images of digits 0, 1, and 2 from the MNIST dataset. (A) Decoder loss decreases with neural plasticity for both models, using either SB or DB. A naive network with random feedforward and zero recurrent weights shows a large decoder loss (1). Learning recurrent connections results in a drop in decoder loss (2). Later, feedforward plasticity is turned on, which further improves performance (3). Final performances and encodings of SB and DB are very similar. (B–D) Results of the DB network at different moments during learning. (B) Input signal x_i and decoded signal for a single pixel i in the center of the image. MNIST digits were presented as constant input signals for 70 ms and faded for 30 ms to avoid discontinuities. After learning, the decoded signal tracks the input reasonably well given the very limited capacity of the network. Below are the spike trains of all neurons in the network in response to the input signal. Learning recurrent weights decorrelates neural responses; learning feedforward weights makes neural responses more specific to certain inputs. (C) Sample of input images x from the MNIST dataset and reconstructions of the input images. The reconstructions presented here are calculated by averaging the decoded signal over the 70 ms of input signal presentation. (D) Feedforward weights F and the optimal decoder D. Weights F are first initialized randomly; after learning, every neuron becomes specific for a certain prototypical digit. Learning also causes feedforward and decoder weights to align.
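
The stimulus protocol in (B) and the reconstruction rule in (C) can be illustrated with a minimal NumPy sketch. It is not the authors' code. The caption gives only the 70 ms presentation and 30 ms fade durations, so the linear cross-fade into the next image, the 1 ms time step, the wrap-around from the last image to the first, and the function names `build_input` and `reconstruct` are all assumptions made for illustration.

```python
import numpy as np

def build_input(images, dt=1.0, hold_ms=70.0, fade_ms=30.0):
    """Build a (T, n_pixels) input signal from a stack of flattened images.

    Each image is held constant for hold_ms, then cross-faded into the next
    image over fade_ms. The linear fade shape is an assumption; the caption
    only states that inputs were faded to avoid discontinuities.
    """
    images = np.asarray(images, dtype=float)
    n_hold = int(round(hold_ms / dt))
    n_fade = int(round(fade_ms / dt))
    segments = []
    for k, img in enumerate(images):
        nxt = images[(k + 1) % len(images)]  # assumption: wrap to first image
        segments.append(np.tile(img, (n_hold, 1)))
        # Fade weights strictly between 0 and 1, as a column for broadcasting.
        w = (np.arange(1, n_fade + 1) / (n_fade + 1))[:, None]
        segments.append((1.0 - w) * img + w * nxt)
    return np.concatenate(segments, axis=0)

def reconstruct(decoded, n_images, dt=1.0, hold_ms=70.0, fade_ms=30.0):
    """Average a decoded signal over each presentation window, as in (C).

    decoded has shape (T, n_pixels); the fade samples are excluded.
    """
    n_hold = int(round(hold_ms / dt))
    period = n_hold + int(round(fade_ms / dt))
    trials = decoded[: n_images * period].reshape(n_images, period, -1)
    return trials[:, :n_hold].mean(axis=1)

# Stand-in data: three random 16 x 16 "images", flattened to 256 pixels.
rng = np.random.default_rng(0)
images = rng.random((3, 256))
x = build_input(images)
# With a perfect decoder the decoded signal equals the input, so the
# window average recovers each image exactly.
recon = reconstruct(x, n_images=3)
```

In the actual experiment the decoded signal would come from the network readout rather than from the input itself; the input is reused here only to check that the windowing is consistent.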