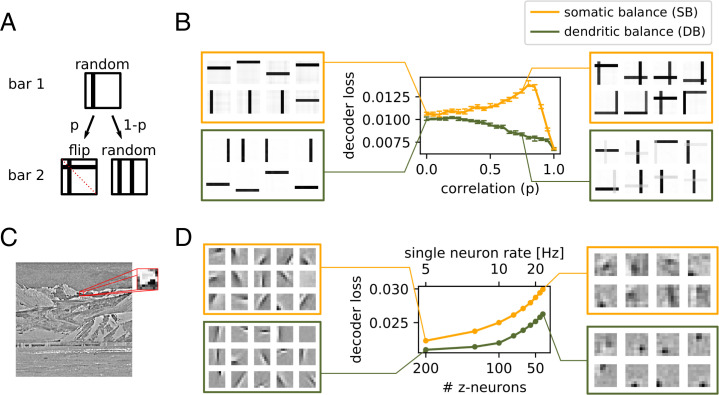

Fig. 4.

Dendritic balance improves learning for complex correlations in the input signal. (A and B) In one simulation experiment, 16 neurons code for images containing 2 of 16 possible bars, chosen at random. Thus, optimally, every neuron codes for a single bar. (A) Creation of input images with correlations between recurring patterns. Two bars are selected in succession and added to the image. With probability p, the two bars are symmetric about the diagonal axis running from top left to bottom right; with probability 1 − p, the two bars are chosen randomly. (B) Decoder loss after learning for different correlation strengths p, for networks with SB and DB. Shown is the median decoder loss over 50 different realizations for each data point; error bars denote 95% bootstrap confidence intervals. On the sides, 8 of the 16 converged feedforward weights are shown for representative networks. When correlations between bars are present, the representations learned by SB overlap, while DB still learns efficient single-bar representations. (C and D) Similarly, for complex natural stimuli, DB finds better representations when coding neurons are correlated. (C) We extracted 16 × 16-pixel images from a set of whitened pictures of natural scenes (3), scaled them down to 8 × 8 pixels, and applied a nonlinearity (SI Appendix, section D). (D) Decoder loss after learning for SB and DB networks with varying numbers of coding neurons, while the population rate is kept constant at 1,000 Hz. On the sides, example converged feedforward weights are shown. For a large number of coding neurons (Left), both learning schemes yield similar representations, but performance is slightly better for DB. For a small number of neurons (Right), DB learns more refined representations with substantially lower decoder loss than SB. This is because with few neurons the learned representations are more correlated and are therefore harder to disentangle.
Notably, different numbers of neurons result in different coding strategies.
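The input generation of panel A can be sketched as follows. This is a minimal illustration, not the authors' code, and it rests on several assumptions: the image is 8 × 8 pixels (8 horizontal plus 8 vertical bars give the 16 bars); when the pair is not forced to be symmetric, the second bar is drawn uniformly from the remaining 15 bars so that every image contains exactly 2 bars; and the two bars are combined by pixel-wise addition, so the crossing pixel of a horizontal and a vertical bar has value 2. The helper names `bar`, `mirror`, and `correlated_bars_image` are hypothetical. Reflection across the top-left-to-bottom-right diagonal is a matrix transpose, which maps the horizontal bar in row i onto the vertical bar in column i.

```python
import numpy as np

SIZE = 8           # assumed image side length: 8 horizontal + 8 vertical bars
N_BARS = 2 * SIZE  # 16 possible bars


def bar(index: int, size: int = SIZE) -> np.ndarray:
    """Return a size x size image containing a single bar.

    Hypothetical indexing: 0..size-1 are horizontal bars (rows),
    size..2*size-1 are vertical bars (columns).
    """
    img = np.zeros((size, size))
    if index < size:
        img[index, :] = 1.0
    else:
        img[:, index - size] = 1.0
    return img


def mirror(index: int, size: int = SIZE) -> int:
    """Index of the bar reflected across the main diagonal (a transpose).

    Row i (index i) maps to column i (index size + i), and vice versa.
    """
    return (index + size) % (2 * size)


def correlated_bars_image(p: float, rng: np.random.Generator,
                          size: int = SIZE) -> np.ndarray:
    """Draw two bars in succession and add them into one image.

    With probability p the second bar is the mirror image of the first,
    making the image symmetric about the main diagonal; otherwise it is
    drawn uniformly from the other bars (assumption: no repeats).
    """
    first = int(rng.integers(2 * size))
    if rng.random() < p:
        second = mirror(first, size)
    else:
        others = [i for i in range(2 * size) if i != first]
        second = int(rng.choice(others))
    # Assumption: bars are combined by addition, as "added to the image" suggests.
    return bar(first, size) + bar(second, size)
```

For example, `correlated_bars_image(1.0, np.random.default_rng(0))` always returns an image equal to its own transpose, whereas at p = 0 symmetric pairs occur only by chance (1 in 15 draws under the assumptions above).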
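Panel B reports, for each data point, the median decoder loss over 50 realizations with a 95% bootstrap confidence interval. The caption does not say which bootstrap variant was used; the sketch below assumes the simple percentile bootstrap of the median, and the function name `median_with_bootstrap_ci` and its defaults (10,000 resamples, fixed seed) are hypothetical choices for illustration.

```python
import numpy as np


def median_with_bootstrap_ci(values, n_boot: int = 10_000,
                             level: float = 0.95, seed: int = 0):
    """Median of `values` and a percentile-bootstrap confidence interval.

    Assumption: the percentile method; other variants (e.g. BCa) differ.
    """
    values = np.asarray(values, dtype=float)
    rng = np.random.default_rng(seed)
    # Resample with replacement: each row is one bootstrap sample.
    idx = rng.integers(0, len(values), size=(n_boot, len(values)))
    boot_medians = np.median(values[idx], axis=1)
    # Central interval covering `level` of the bootstrap distribution.
    alpha = (1.0 - level) / 2.0
    lo, hi = np.quantile(boot_medians, [alpha, 1.0 - alpha])
    return float(np.median(values)), float(lo), float(hi)
```

Applied to the 50 decoder-loss values of one data point, the first returned value would give the plotted median and the other two the error-bar limits.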