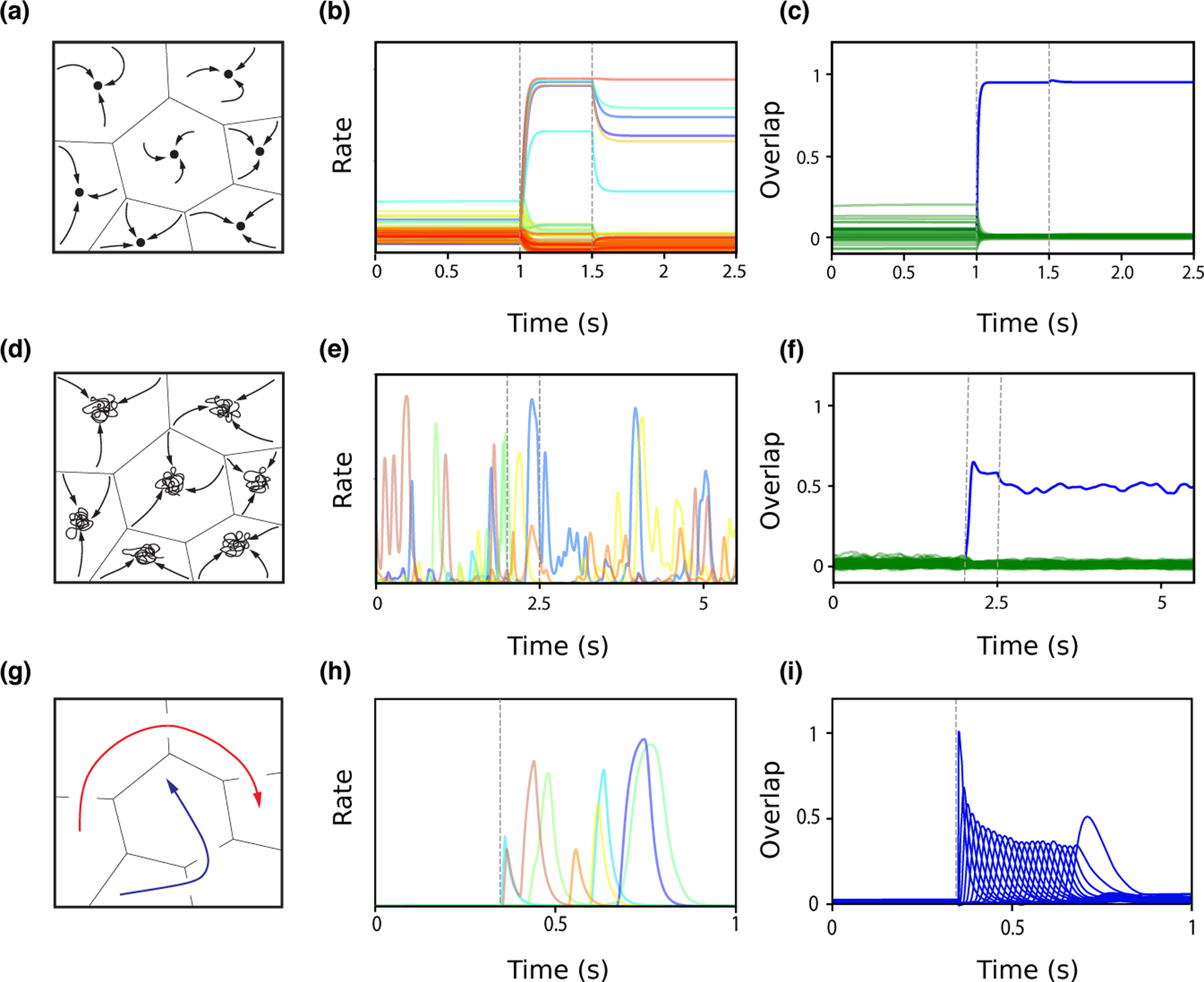

Figure 3:

Network dynamics following learning. A. Schematic of fixed point attractor retrieval states occupying discrete subspaces. The space of network states is divided into basins of attraction, within which network activity converges to a fixed point (black circle) representing a learned item. B. Single unit firing rates during background (0–1s), presentation of a pattern stored in memory (between dashed lines, 1–1.5s), and the ‘delay’ period following stimulus presentation (1.5–2.5s), in a network in which the distributions of stored patterns and the learning rule have been inferred from data [28•]. In the background state, single neurons fire with a wide (approximately log-normal) distribution of rates. During stimulus presentation, a small fraction of neurons are driven to high rates, while most neurons are suppressed. After the stimulus is removed, the network converges to a selective attractor state, in which a small fraction of neurons exhibit persistent activity. C. The overlap between the network state and the presented pattern (blue) increases during stimulus presentation and remains high following removal of the external input, while overlaps between the network state and other stored patterns (green) remain small. D. Schematic of chaotic attractor retrieval states. Network activity is confined to a subspace, but does not converge to a stable fixed point. E–F. Same as panels B–C, except that the network has stronger recurrent connections and the stimulus is presented between 2 and 2.5s [28•]. The network is in a chaotic state before stimulus presentation, with all single neuron firing rates fluctuating widely. After stimulus presentation, the network switches to a different chaotic state that is strongly correlated with the stored pattern (see blue overlap in F). G. Schematic of sequential pattern retrieval for two separate stored sequences (red and blue). Activity transitions from one subspace to the next. H–I. Same as panels B–C, except that the network stores sequences and is initialized close to the first stored pattern in a sequence of 24 patterns at 0.35s [29•]. Single neurons are transiently activated at different times, and the network successively visits the neighborhoods of the different patterns composing the sequence, as shown by the transient overlap activations in I.
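The overlap measure plotted in panels C, F and I can be illustrated with a toy example. The sketch below is not the network of [28•] or [29•]: it swaps in a classic binary Hopfield network with a Hebbian outer-product rule and synchronous updates, and every name and parameter value (`overlap`, `simulate`, `n_units`, `flip_frac`, and so on) is an assumption chosen for illustration. It shows only the qualitative behavior of fixed point retrieval: starting from a corrupted cue, the overlap with the cued pattern rises toward 1 while overlaps with the other stored patterns stay small.

```python
import numpy as np


def overlap(state, pattern):
    """Normalized dot product between a network state and a stored pattern."""
    return float(state @ pattern / (np.linalg.norm(state) * np.linalg.norm(pattern)))


def simulate(n_units=500, n_patterns=5, n_steps=20, flip_frac=0.2, seed=0):
    """Toy fixed point retrieval in a binary Hopfield network (illustrative only).

    Returns an array of shape (n_steps + 1, n_patterns) holding the overlap of
    the network state with each stored pattern at every time step.
    """
    rng = np.random.default_rng(seed)

    # Random +/-1 patterns (an assumption; the cited models use patterns inferred from data).
    patterns = rng.choice([-1.0, 1.0], size=(n_patterns, n_units))

    # Hebbian outer-product connectivity with no self-connections.
    weights = patterns.T @ patterns / n_units
    np.fill_diagonal(weights, 0.0)

    # Cue: pattern 0 with a fraction of its units flipped.
    state = patterns[0].copy()
    flipped = rng.choice(n_units, size=int(flip_frac * n_units), replace=False)
    state[flipped] *= -1.0

    history = []
    for _ in range(n_steps):
        history.append([overlap(state, p) for p in patterns])
        # Synchronous sign update of all units.
        state = np.where(weights @ state >= 0, 1.0, -1.0)
    history.append([overlap(state, p) for p in patterns])
    return np.array(history)


if __name__ == "__main__":
    overlaps = simulate()
    print("initial overlaps:", np.round(overlaps[0], 2))
    print("final overlaps:  ", np.round(overlaps[-1], 2))
```

Plotting column 0 of the returned array against the remaining columns gives a rough analogue of the blue and green traces in panel C, under the simplifying assumptions stated above.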