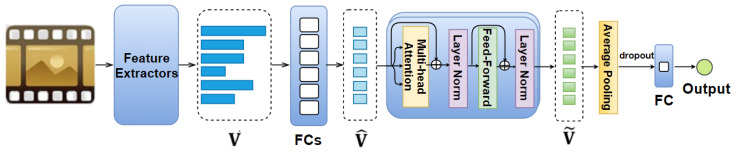

Figure 2.

Our proposed Feature AttendAffectNet. For dimension reduction, the set of feature vectors is fed to fully connected layers with eight neurons each, yielding a set of dimension-reduced feature vectors. These are then passed through N identical layers, each consisting of a multi-head self-attention sub-layer followed by a feed-forward layer. The output of this stack is a set of encoded feature vectors Ṽ. These vectors are fed to an average pooling layer, dropout, and a fully connected layer with a single neuron to obtain the predicted arousal/valence values.
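The data flow in the caption can be sketched as a NumPy forward pass. This is a minimal illustration under several assumptions that the caption does not specify. The example uses one linear 8-neuron layer per input feature vector with no activation, 2 attention heads, and a 16-unit ReLU feed-forward layer. It uses residual connections with a parameter-free layer norm in the standard Transformer-encoder style, and no positional encoding because the inputs form a set. Weights are random, so nothing is trained. The names `EncoderLayer` and `FeatureAttendAffectNetSketch` are hypothetical.

```python
import numpy as np

D_MODEL = 8  # eight neurons per dimension-reduction FC layer (from the caption)


def layer_norm(x, eps=1e-5):
    # Simplified layer norm (no learnable gain/bias), applied per token vector.
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


class EncoderLayer:
    """One of the N identical layers: multi-head self-attention + feed-forward.
    Residual connections, layer norm, head count and d_ff are assumptions."""

    def __init__(self, rng, d_model=D_MODEL, num_heads=2, d_ff=16):
        assert d_model % num_heads == 0
        self.h, self.d_k = num_heads, d_model // num_heads
        s = 1.0 / np.sqrt(d_model)
        self.wq, self.wk, self.wv, self.wo = (
            rng.normal(0, s, (d_model, d_model)) for _ in range(4))
        self.w1 = rng.normal(0, s, (d_model, d_ff))
        self.w2 = rng.normal(0, 1.0 / np.sqrt(d_ff), (d_ff, d_model))

    def __call__(self, x):
        # x: (num_features, d_model); each reduced feature vector acts as one token.
        n = x.shape[0]

        def split(t):  # (n, d_model) -> (heads, n, d_k)
            return t.reshape(n, self.h, self.d_k).transpose(1, 0, 2)

        q, k, v = split(x @ self.wq), split(x @ self.wk), split(x @ self.wv)
        attn = softmax(q @ k.transpose(0, 2, 1) / np.sqrt(self.d_k))  # (heads, n, n)
        heads = (attn @ v).transpose(1, 0, 2).reshape(n, -1)  # concatenate heads
        x = layer_norm(x + heads @ self.wo)
        ff = np.maximum(0.0, x @ self.w1) @ self.w2  # ReLU feed-forward layer
        return layer_norm(x + ff)


class FeatureAttendAffectNetSketch:
    def __init__(self, feature_dims, num_layers=2, seed=0):
        rng = np.random.default_rng(seed)
        # One linear FC layer with 8 neurons per input feature vector.
        self.reduce = [(rng.normal(0, 1.0 / np.sqrt(d), (d, D_MODEL)), np.zeros(D_MODEL))
                       for d in feature_dims]
        self.layers = [EncoderLayer(rng) for _ in range(num_layers)]  # the N layers
        self.w_out = rng.normal(0, 1.0 / np.sqrt(D_MODEL), (D_MODEL, 1))  # one neuron
        self.b_out = np.zeros(1)

    def __call__(self, features, train=False, p_drop=0.5, rng=None):
        # Dimension reduction: list of 1-D vectors -> (num_features, 8).
        encoded = np.stack([f @ w + b for f, (w, b) in zip(features, self.reduce)])
        for layer in self.layers:
            encoded = layer(encoded)  # set of encoded feature vectors (V-tilde)
        pooled = encoded.mean(axis=0)  # average pooling over the set
        if train:  # inverted dropout; identity at inference time
            mask = (rng or np.random.default_rng()).random(pooled.shape) >= p_drop
            pooled = pooled * mask / (1.0 - p_drop)
        return float((pooled @ self.w_out + self.b_out)[0])  # scalar prediction
```

For example, `FeatureAttendAffectNetSketch([512, 128, 64])` would accept three hypothetical feature vectors of those sizes and return one scalar. Because the output layer has a single neuron, arousal and valence would presumably each be predicted by a separately trained model.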