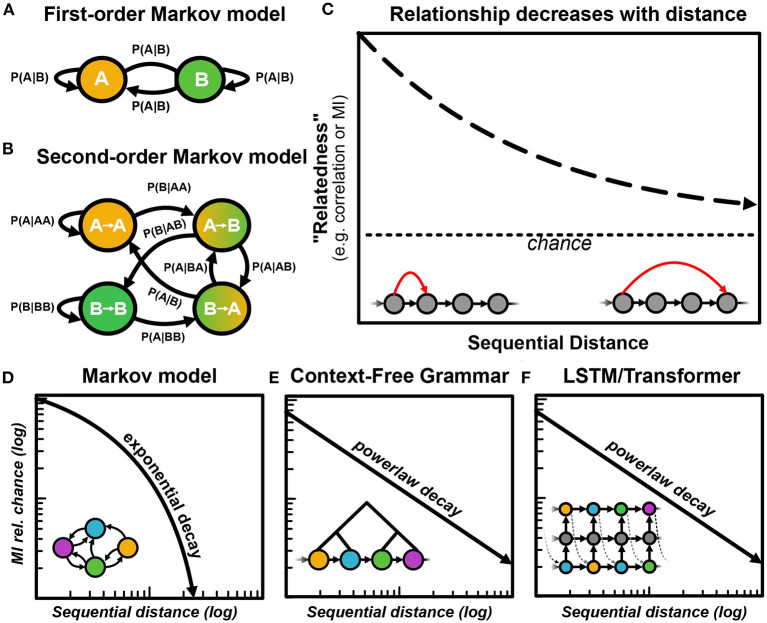

Figure 5.

Capturing long- and short-range sequential organization with different models. (A) An example of a 2-state Markov model, capturing 2² = 4 transition probabilities between states. (B) An example second-order Markov model, capturing 2³ = 8 transition probabilities between states. (C) A visualization of the general principle that as sequential distance increases, the relatedness between elements (measured through mutual information or correlation functions) decays toward chance. (D) In sequences generated by Markov models, relatedness decays exponentially toward chance. (E) In sequences produced by context-free grammars, relatedness decays following a power law. (F) Certain neural network models, such as LSTM RNNs and Transformer models, produce sequences in which relatedness also decays following a power law.
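As a rough illustration of panels (A), (B), and (D), the sketch below counts the transition probabilities of an order-k Markov model and computes the mutual information between two elements of a 2-state first-order Markov chain as a function of their distance. The transition matrix `P` and the helper names (`transition_count`, `stationary_distribution`, `mutual_information_at_lag`) are assumptions chosen for illustration, not values or code from the figure.

```python
import numpy as np


def transition_count(n_states, order):
    """Number of transition probabilities in an order-k Markov model:
    one probability per (context of `order` states, next state) pair."""
    return n_states ** (order + 1)


def stationary_distribution(P):
    """Stationary distribution of a row-stochastic matrix P,
    taken as the left eigenvector with eigenvalue closest to 1."""
    eigvals, eigvecs = np.linalg.eig(P.T)
    v = np.real(eigvecs[:, np.argmin(np.abs(eigvals - 1.0))])
    return v / v.sum()


def mutual_information_at_lag(P, d):
    """Mutual information (in bits) between X_t and X_{t+d}
    for a stationary first-order Markov chain with transition matrix P."""
    pi = stationary_distribution(P)
    Pd = np.linalg.matrix_power(P, d)      # d-step transition probabilities
    joint = pi[:, None] * Pd               # p(X_t = i, X_{t+d} = j)
    indep = pi[:, None] * pi[None, :]      # product of the marginals
    mask = joint > 0                       # skip zero-probability pairs
    return float(np.sum(joint[mask] * np.log2(joint[mask] / indep[mask])))


# Hypothetical 2-state transition matrix (assumed for illustration only).
P = np.array([[0.9, 0.1],
              [0.2, 0.8]])

lags = range(1, 11)
mi = [mutual_information_at_lag(P, d) for d in lags]

for d, value in zip(lags, mi):
    print(f"distance {d:2d}: MI = {value:.6f} bits")
```

On a logarithmic y-axis these values should fall close to a straight line, which is the signature of exponential decay. For this assumed matrix the ratio between successive values approaches the square of the second eigenvalue of `P`. A power-law decay, as in panels (E) and (F), would instead appear straight on log-log axes.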