Abstract

The coronavirus (COVID-19) pandemic has been adversely affecting people's health globally. To diminish the effect of this widespread pandemic, it is essential to detect COVID-19 cases as quickly as possible. Compared with CT, chest radiography is a less expensive and more widely available imaging modality for detecting chest pathology. Chest radiographs play a vital role in early prediction and in developing treatment plans for patients with suspected or confirmed COVID-19 chest infection. In this paper, a novel shape-dependent Fibonacci-p patterns-based feature descriptor combined with a machine learning approach is proposed. Computer simulations show that the presented system (1) increases the effectiveness of differentiating COVID-19, viral pneumonia, and normal conditions, (2) is effective on small datasets, and (3) has faster inference time than deep learning methods with comparable performance. Computer simulations are performed on two publicly available datasets: (a) the Kaggle dataset and (b) the COVIDGR dataset. To assess the performance of the presented system, evaluation parameters such as accuracy, recall, specificity, precision, and F1-score are used. Nearly 100% differentiation between normal and COVID-19 radiographs is observed for the three-class classification scheme using the lung area-specific Kaggle radiographs, while a recall of 72.65 ± 6.83 and a specificity of 77.72 ± 8.06 are observed for the COVIDGR dataset.

Keywords: COVID-19 detection, Fibonacci-p patterns, X-ray images, artificial intelligence, biomedical imaging, machine learning

I. Introduction

On March 11, 2020, the World Health Organization (WHO) declared coronavirus (COVID-19) a pandemic because of its far-reaching severity throughout the world [1], [2]. As of July 27, 2020, over 16,000,000 cases and more than 600,000 deaths had been recorded worldwide, with more than 250,000 cases and 5,400 deaths reported in the preceding 24 hours [3]. In the United States, the Centers for Disease Control and Prevention (CDC) had recorded around 4,000,000 cases and more than 100,000 deaths due to coronavirus as of July 27, 2020 [4]. A real-time reverse transcriptase-polymerase chain reaction (RT-PCR) test is currently employed to detect COVID-19 cases. However, the test suffers from false negatives and false positives, with sensitivity as low as 60-70% [5]–[7]. Additionally, test kits are still in short supply worldwide. Moreover, the test process is labor-intensive and time-consuming, so reports take a long time to produce [8], [9]. This creates a need for other diagnostic approaches, such as clinical investigation, epidemiological history, pathogenic analysis, computed tomography (CT), or x-ray imaging, to detect COVID-19 more quickly and effectively.

Severe COVID-19 infections exhibit clinical characteristics similar to those of bronchopneumonia, such as fever, cough, and dyspnea [10]–[13]. Therefore, clinical investigation alone may not be sufficient for COVID-19 detection. Radiology imaging, such as CT or chest x-ray, is another primary tool for diagnosing COVID-19. The majority of COVID-19 radiology images exhibit features such as bilateral, multi-focal, ground-glass opacities with a limited or posterior distribution [12], [14]–[18]. In recent studies, CT imaging has been widely used to study and detect COVID-19 cases [16], [19]. However, besides exposing the patient to a higher radiation dose, CT imaging is also more expensive [19].

In contrast, x-ray imaging is cheaper and more widely available in most hospitals, making it radiologists' first-line tool for detecting COVID-19 cases [11], [19]. However, differentiating COVID-19 from other lung infections such as viral pneumonia can be very difficult for radiologists. This lack of specificity could delay treatment and endanger both the patient and the health care providers [20]–[23]. Thus, an automated computerized system that more accurately and effectively distinguishes COVID-19 from viral pneumonia and normal chest radiographs would be invaluable.

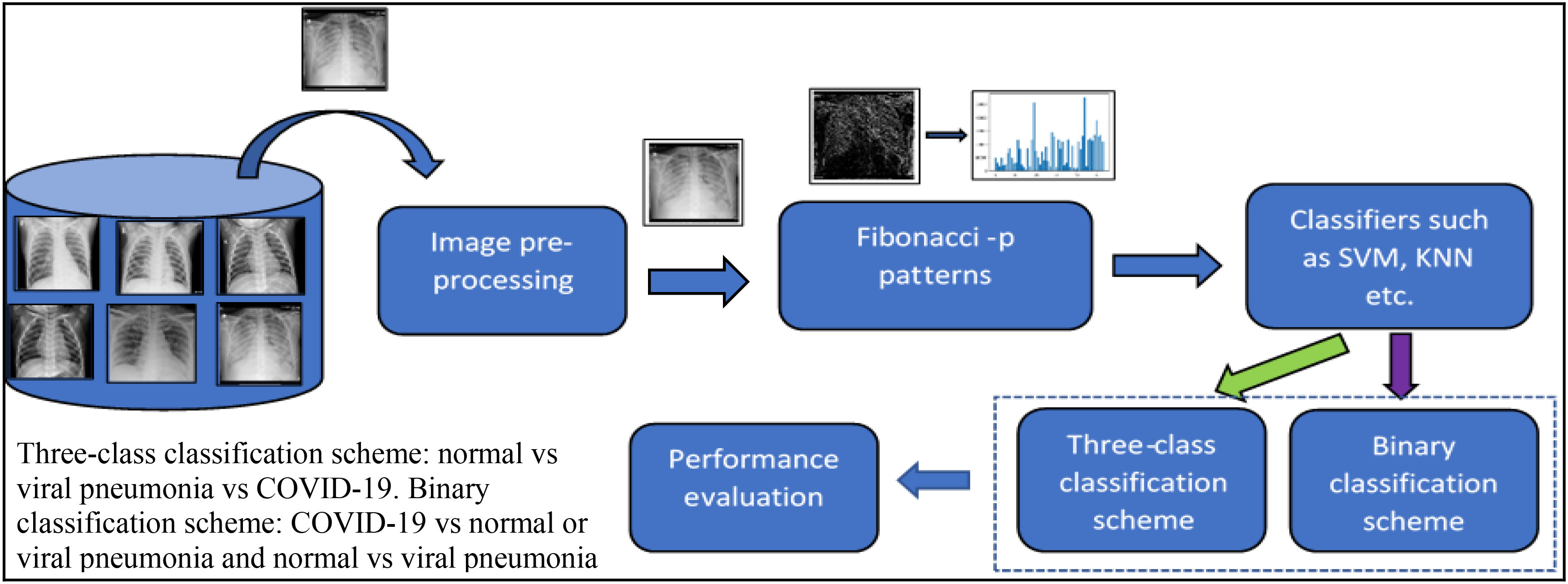

Several deep learning (DL) architectures have recently been proposed to improve the accuracy of distinguishing COVID-19 from viral pneumonia and normal radiographs. However, these methods are sophisticated and require substantial computation time and resources, including specialized hardware such as GPUs, to train the models. DL models usually require large amounts of training data to obtain a stable model, and given the nature of the pandemic, it is difficult to obtain an extensive database. Comparatively, machine learning models are simple, fast, and easy to train and deploy. Moreover, machine learning models do not require large datasets to obtain stable models. Furthermore, ground-glass opacities are one of the important features seen in COVID-19 radiographs; thus, extracting textural information should help achieve an accurate diagnosis. Therefore, in this paper, an artificial intelligence (AI)-based approach using shape-dependent Fibonacci-p patterns and machine learning models is proposed to effectively capture the radiographs' textural information and accurately diagnose COVID-19 (Fig. 1).

Fig. 1.

Proposed AI-based Fibonacci-p patterns-based classification system. An input image is read from the image directory and normalized in the image pre-processing step. A Fibonacci image is generated using the shape-dependent Fibonacci-p pattern extractor, from which a histogram is extracted and sent to the classifier for training and testing. Classification is performed according to the chosen scheme, namely binary or three-class. Performance is evaluated on the generated confusion matrix.

The following are the contributions of this paper:

a) A novel shape-dependent Fibonacci-p patterns-based feature descriptor that extracts the underlying distinctive textural patterns and is computationally inexpensive and tolerant to illumination changes and noise.

b) A new tool for automated detection and classification that separates COVID-19 cases from non-COVID-19 cases with higher accuracy using a small dataset of chest radiographs.

c) Result evaluation on the full radiographs and lung area-specific radiographs of the Kaggle dataset and on the lung area-specific radiographs of the COVIDGR dataset, using evaluation metrics such as classification accuracy, precision, recall, specificity, F1 score, and the confusion matrix.

The rest of the paper is organized as follows: Section II reviews existing work on COVID-19 detection, along with its advantages and disadvantages; Section III describes the proposed feature extractor; Section IV describes the datasets, classification models, and evaluation parameters used, along with the results obtained from computer simulations; Section V concludes the paper and outlines future work.

II. Related Work

Presently, several DL architectures based on convolutional neural networks (CNNs), namely COVID-Net [24], DarkNet [25], CovidX-Net [26], CheXNet [27], COVID-SDnet [52], and pre-trained CNNs [2], [9], [28]–[30], have been implemented to detect COVID-19 from viral pneumonia and normal chest radiographs. The performance of these architectures on their corresponding datasets is summarized in Table I. Even though these methods achieve good detection accuracies, there is still room to improve the effectiveness of distinguishing COVID-19 from normal and viral pneumonia radiographs. Being deep-learning models, these methods usually require several hours of training and computational hardware such as GPUs, and they are typically data-hungry and complex. To overcome these shortcomings, a machine learning approach is proposed here: such models are lightweight, which makes them easier to deploy, and they require less training time and no specialized hardware. Textural information plays a critical role in analyzing chest radiographs [31]. Thus, in this paper, a novel shape-dependent Fibonacci-p patterns-based texture descriptor, combined with machine learning classification models, is proposed to distinguish COVID-19, viral pneumonia, and normal chest radiographs. Additionally, the proposed method is compared with existing DL models on the full radiograph Kaggle dataset for the COVID-19 vs normal and normal vs viral pneumonia vs COVID-19 classification schemes. Furthermore, the proposed method's performance is evaluated on the lung area-specific radiographs of the Kaggle and COVIDGR datasets.

TABLE I. Literature review for the chest x-ray COVID-19 detection.

| Author | Dataset | Method | Classification scheme | Evaluation Results |
|---|---|---|---|---|
| Linda Wang et al. [24] | COVIDX dataset: 16756 chest x-ray images from open repositories | COVID-Net | Normal vs Non-COVID vs COVID-19 | Accuracy=93.3% |
| Hafeez Abdul et al. [30] | | Pre-trained ResNet50 model | | Accuracy=90% |
| Ozturk et al. [25] | 142 COVID-19, 500 normal, and 500 viral pneumonia images | DarkNet Model | COVID-19 vs non-finding | Accuracy=98.08% |
| | | | COVID-19 vs non-finding vs viral pneumonia | Accuracy=87.02% |
| Hemdan et al. [26] | 25 Normal and COVID-19 images | CovidX-Net | Normal vs COVID-19 | Accuracy=90%; F1-score=0.89 and 0.91 for normal and COVID-19, respectively, using DenseNet201 |
| Narin et al. [28] | 50 COVID-19 images from GitHub dataset and 50 normal from Kaggle dataset | Pre-trained ResNet50 | Normal vs Viral Pneumonia vs COVID-19 | Accuracy=97% using Inception V3 model and 87% for Inception-ResNet V2 model |
| Ioannis et al. [29] | Dataset 1: 224 COVID-19, 700 bacterial pneumonia, and 504 normal x-ray images; Dataset 2: 224 COVID-19, 712 bacterial and viral pneumonia, and 504 normal x-ray images | Different fine-tuned DL models | Normal vs. COVID-19 vs. Viral Pneumonia | Accuracy=96.7%, Sensitivity=98.66%, Specificity=96.46% |
| Asif et al. [9] | 864 COVID-19, 1341 normal, and 1345 viral pneumonia x-ray images | Pre-trained Inception V3 model | Normal vs. COVID-19 vs. Viral Pneumonia | Accuracy=96% |
| Chowdhury et al. [2] | Kaggle Dataset: 219 COVID-19, 1341 Normal, and 1345 Viral Pneumonia | Pre-trained CNN models | Normal vs COVID-19 | Accuracy=98.3% |
| | | | Normal vs COVID-19 vs. Viral Pneumonia | Accuracy=98.3% |
| Bassi et al. [27] | | CheXNet | Normal vs COVID-19 vs Viral Pneumonia | Accuracy=97.8% |
| Tabik et al. [52] | COVIDGR dataset: 852 images (426 non-COVID-19 and 426 COVID-19) | COVID-SDnet | Non-COVID vs COVID | Accuracy= |

III. Shape-Dependent Fibonacci-p Patterns

Local Binary Patterns (LBP) is a texture descriptor that uses the center pixel and its neighboring pixels to encode the structural and statistical texture information present in an image [32]. Herein, an image is first divided into overlapping m×n neighborhood windows, and in each window the center pixel is compared with each of its neighboring pixels. If a neighboring pixel is greater than or equal to the center pixel, it is binarized as ‘one’; otherwise, it is binarized as ‘zero.’ The binary pattern obtained by combining these bits is then converted into a decimal value by weighting each bit with the corresponding power of two and summing the results, thus encoding the textural information present in the window [33], [34]; repeating this for every window yields an LBP image. The advantages of classical LBP are that (a) it is simple, fast, and easy to compute, and (b) it is insensitive to illumination changes. However, it is sensitive to noise and computationally expensive because of its high feature-vector dimensionality. To overcome these shortcomings, a Fibonacci-p patterns-based descriptor is proposed.
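The LBP encoding just described can be illustrated for a 3×3 window with 8 neighbors. The following is a minimal plain-Python sketch, not the implementation used in this paper; the clockwise neighbor ordering starting at the top-left corner is an assumption (any fixed order yields a valid LBP variant), and the function names are hypothetical.

```python
# ASSUMED convention: clockwise neighbor order around the center of a 3x3
# window, starting at the top-left corner.
NEIGHBOR_OFFSETS = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]


def lbp_code(window):
    """Classical LBP code of a 3x3 window given as a list of three rows."""
    center = window[1][1]
    code = 0
    for i, (r, c) in enumerate(NEIGHBOR_OFFSETS):
        if window[r][c] >= center:  # binarize: neighbor >= center -> 'one'
            code += 2 ** i          # binary (power-of-two) weight of bit i
    return code


def lbp_image(img):
    """LBP image of a 2-D list; border pixels without a full window are skipped."""
    h, w = len(img), len(img[0])
    return [
        [lbp_code([img[y + dy][x - 1:x + 2] for dy in (-1, 0, 1)])
         for x in range(1, w - 1)]
        for y in range(1, h - 1)
    ]


window = [[10, 20, 30],
          [40, 25, 5],
          [25, 15, 50]]
print(lbp_code(window))    # 212 = 4 + 16 + 64 + 128
brighter = [[v + 30 for v in row] for row in window]
print(lbp_code(brighter))  # 212: a uniform brightness shift leaves the code unchanged
```

The second call illustrates the illumination insensitivity mentioned above: only the sign of each neighbor-minus-center comparison matters, so adding a constant to every pixel does not change the code.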

Fibonacci-p patterns are textural feature descriptors that work much like LBP; i.e., they also encode the textural pattern information surrounding every pixel in an image, but by assigning appropriate Fibonacci weights [35]. The difference between LBP and Fibonacci-p patterns is that the latter uses a set threshold value to binarize the m×n neighborhood. If the difference between the center pixel and a neighboring pixel is greater than or equal to the set threshold value, the neighboring pixel is binarized as ‘one’; otherwise, it is binarized as ‘zero’. To generate the decimal value, Fibonacci weights are assigned to the obtained binary pattern and summed, thus generating a Fibonacci image. Fibonacci-p patterns are computed as follows [36]–[38]:

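$$
FP_{P,R}^{\,p} = \sum_{i=0}^{P-1} s\left(g_i - g_c\right) F_p(i) \tag{1}
$$

$$
s(x) = \begin{cases} 1, & x \ge T \\ 0, & x < T \end{cases} \tag{2}
$$

$$
F_p(i) = \begin{cases} 1, & 0 \le i \le p \\ F_p(i-1) + F_p(i-p-1), & i > p \end{cases} \tag{3}
$$

where $P$ = number of neighbors, $R$ = radius, $p$ = pattern value, $F_p(i)$ = Fibonacci weights, $T$ = set threshold, and $g_c$ and $g_i$ denote the intensities of the center pixel and of its $i$-th neighbor, respectively. Table II shows the Fibonacci weights computed using (3) for different p values.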

TABLE II. Fibonacci weights computed for different p values [36].

| p \ i | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | … |
|---|---|---|---|---|---|---|---|---|---|---|
| 0 | 1 | 2 | 4 | 8 | 16 | 32 | 64 | 128 | 256 | … |
| 1 | 1 | 1 | 2 | 3 | 5 | 8 | 13 | 21 | 34 | … |
| 2 | 1 | 1 | 1 | 2 | 3 | 4 | 6 | 9 | 13 | … |
| 3 | 1 | 1 | 1 | 1 | 2 | 3 | 4 | 5 | 7 | … |
| 4 | 1 | 1 | 1 | 1 | 1 | 2 | 3 | 4 | 5 | … |
| ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | ⋮ | … |
| ∞ | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |
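Every row of Table II follows the Fibonacci p-number recurrence: F_p(i) = 1 for 0 ≤ i ≤ p, and F_p(i) = F_p(i−1) + F_p(i−p−1) for i > p. The short sketch below (the function name is hypothetical) regenerates the table rows, which makes it easy to confirm that p = 0 gives the powers of two used by LBP and p = 1 gives the classical Fibonacci sequence.

```python
def fibonacci_p_weights(p, n_terms):
    """First n_terms Fibonacci p-numbers F_p(0), ..., F_p(n_terms - 1)."""
    if p < 0 or n_terms < 0:
        raise ValueError("p and n_terms must be non-negative")
    weights = []
    for i in range(n_terms):
        if i <= p:
            weights.append(1)  # the first p + 1 terms are all 1
        else:
            weights.append(weights[i - 1] + weights[i - p - 1])
    return weights


for p in range(5):
    print(p, fibonacci_p_weights(p, 9))
# 0 [1, 2, 4, 8, 16, 32, 64, 128, 256]
# 1 [1, 1, 2, 3, 5, 8, 13, 21, 34]
# 2 [1, 1, 1, 2, 3, 4, 6, 9, 13]
# 3 [1, 1, 1, 1, 2, 3, 4, 5, 7]
# 4 [1, 1, 1, 1, 1, 2, 3, 4, 5]
```

As p grows, the weights grow more slowly; in the limit p → ∞ every weight is 1, matching the last row of the table.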

Thresholding plays an integral role in computing Fibonacci-p patterns because it helps eliminate noise while extracting textural patterns from the images. The threshold value determines how much information is incorporated in the patterns: the higher the threshold value, the less information is incorporated, and vice versa [39]. Fibonacci-p patterns offer the following advantages: (a) when p = 0, the weights obtained are the same as those of LBP (Table II), so the descriptor behaves as an LBP operator, which is another textural descriptor; (b) it is insensitive to illumination changes; (c) it is insensitive to noise because a threshold value is used in the binarization process; (d) it is computationally inexpensive owing to the reduced feature-vector dimensionality for p > 0 (Table II); and (e) it has the flexibility to incorporate more information owing to the lower feature-vector dimensionality for p > 0 [35].
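The thresholded encoding and the dimensionality argument can be made concrete with a small sketch of the classical Fibonacci-p operator on a 3×3 window (P = 8, R = 1). It assumes a neighbor is binarized as ‘one’ when neighbor minus center is at least T, and it reuses an assumed clockwise neighbor order; all names are hypothetical. With p = 0 and T = 0 it reproduces the LBP code, and because codes range from 0 to the sum of the weights, the histogram length shrinks as p grows.

```python
def fibonacci_p_weights(p, n_terms):
    """Fibonacci p-numbers F_p(0), ..., F_p(n_terms - 1) (rows of Table II)."""
    weights = []
    for i in range(n_terms):
        weights.append(1 if i <= p else weights[i - 1] + weights[i - p - 1])
    return weights


# ASSUMED convention: clockwise neighbor order (R = 1, P = 8) from the top-left.
NEIGHBOR_OFFSETS = [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0), (1, 0)]


def fibonacci_p_code(window, p, T):
    """Classical Fibonacci-p code of a 3x3 window.

    ASSUMPTION: a neighbor contributes its weight when (neighbor - center) >= T.
    """
    center = window[1][1]
    weights = fibonacci_p_weights(p, len(NEIGHBOR_OFFSETS))
    return sum(w for (r, c), w in zip(NEIGHBOR_OFFSETS, weights)
               if window[r][c] - center >= T)


def n_histogram_bins(p, n_neighbors=8):
    """Codes range over 0..sum(weights), so a histogram needs sum(weights) + 1 bins."""
    return sum(fibonacci_p_weights(p, n_neighbors)) + 1


window = [[10, 20, 30],
          [40, 25, 5],
          [25, 15, 50]]
print(fibonacci_p_code(window, p=0, T=0))       # 212, identical to the LBP code
print(fibonacci_p_code(window, p=1, T=0))       # 41 = 2 + 5 + 13 + 21
print(fibonacci_p_code(window, p=0, T=10))      # 144: small differences are suppressed
print([n_histogram_bins(p) for p in range(4)])  # [256, 55, 28, 19]
```

Note the trade-off behind the smaller histograms: for p ≥ 1 several weights repeat (e.g., F_1(0) = F_1(1) = 1), so distinct binary patterns can map to the same code.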

However, for a window size larger than  , not all pixels in a given window are included when computing the Fibonacci-p patterns. For example, for a  window using k neighbors, only k pixels are encoded in the pattern, and the information in the remaining pixels, which could be important, is missed. Moreover, the classical Fibonacci-p pattern fails to encode patterns of any shape other than circular. To solve this problem, a shape-dependent Fibonacci-p patterns-based feature descriptor is proposed.

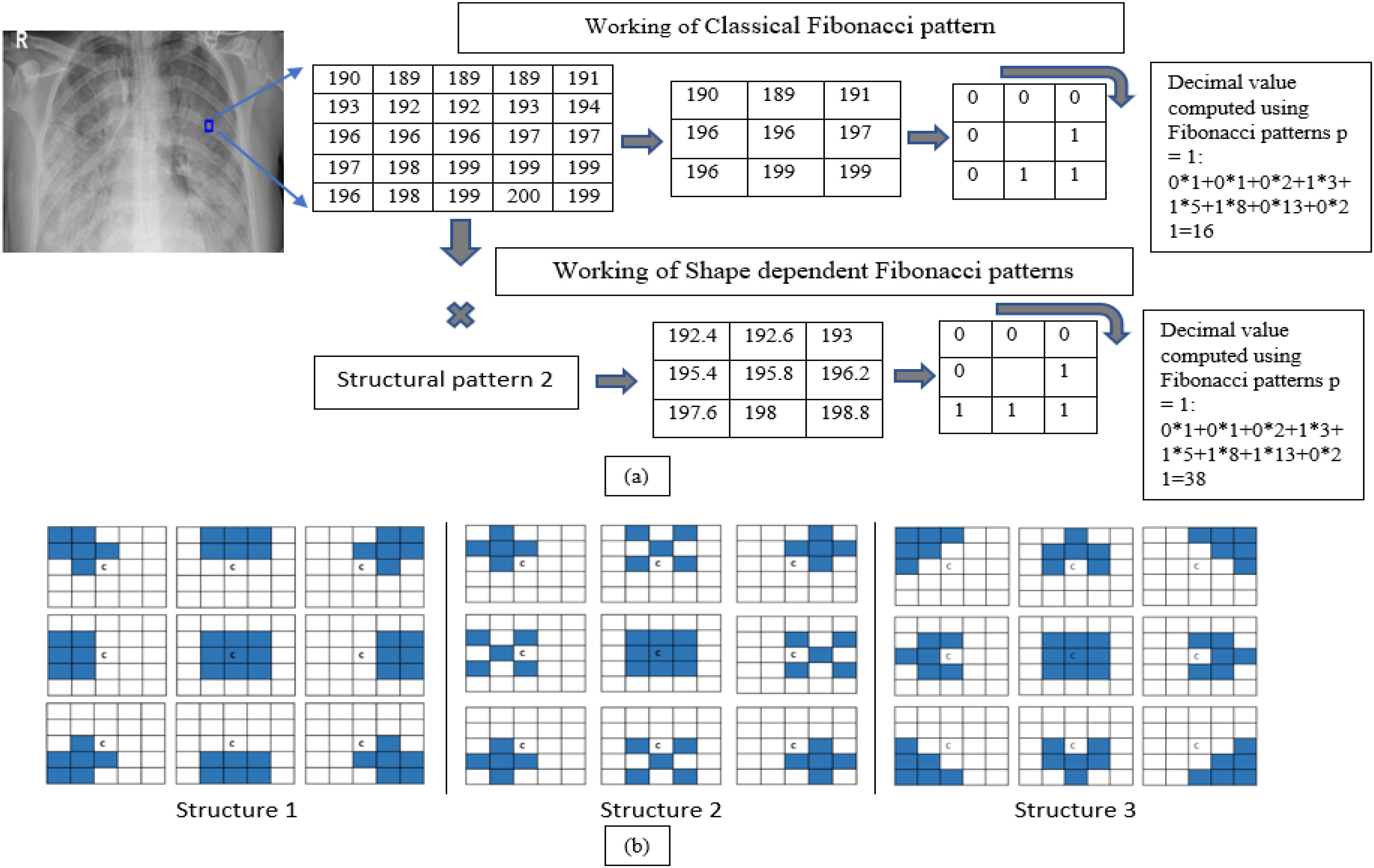

Shape-dependent Fibonacci-p patterns use the different shapes given in the structural pattern (Fig. 2(b)) to encode the textural information present in an image. To compute shape-dependent Fibonacci-p patterns, the image is first divided into m×n neighborhood windows. From each window, information is extracted according to the highlighted areas of the structural pattern's nine shapes. The arithmetic mean of each shape's pixels is computed, and these means are arranged in the same layout as the structural pattern. Fibonacci-p patterns are then computed using equations (1), (2), and (3). This ensures that most of the information present in the m×n neighborhood is taken into account. Fig. 2(a) illustrates the working of the classical Fibonacci-p patterns and of the shape-dependent Fibonacci-p patterns on a  neighborhood, and Fig. 2(b) shows the three structural patterns evaluated in this paper. In the classical Fibonacci case, only the 8 neighbors that lie on the circumference of the circle of radius  are encoded. However, it is unknown whether an encoded point is a random noise point or a textural pattern point. To mitigate this, the shape-dependent Fibonacci-p patterns encode localized area information instead, because the average values of the texture data extracted using the structural pattern's shapes are used for encoding.

Fig. 2.

Schematic representation of the working of Fibonacci-p patterns and shape-dependent Fibonacci-p patterns using  , and 8 neighbors. The highlighted areas have the value ‘one,’ and the rest are ‘zeros’.

Since the shape of the disease pattern to be encoded can be arbitrary, points lying on a circle may not be enough to highlight all the edges, curves, and edge ends. Therefore, shape-dependent Fibonacci-p patterns should be more beneficial, as the information is extracted using different shapes. Furthermore, in contrast to the classical Fibonacci case, the center pixel information is also encoded here.
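The mean-then-encode procedure can be sketched as follows. The three structural patterns of Fig. 2(b) are not specified in the text, so this sketch uses a HYPOTHETICAL stand-in: nine equal square blocks tiling a window whose side is a multiple of 3. It also assumes the same thresholding direction (mean of a surrounding shape minus mean of the central shape at least T) and a clockwise shape order; the names are illustrative only.

```python
def fibonacci_p_weights(p, n_terms):
    """Fibonacci p-numbers F_p(0), ..., F_p(n_terms - 1)."""
    weights = []
    for i in range(n_terms):
        weights.append(1 if i <= p else weights[i - 1] + weights[i - p - 1])
    return weights


def block_masks(size):
    """HYPOTHETICAL structural pattern: nine equal square blocks tiling a
    size x size window, listed row by row (index 4 is the central block)."""
    if size % 3:
        raise ValueError("size must be a multiple of 3")
    b = size // 3
    return [[(br * b + r, bc * b + c) for r in range(b) for c in range(b)]
            for br in range(3) for bc in range(3)]


# ASSUMED clockwise order of the eight surrounding shapes, starting top-left.
RING = [0, 1, 2, 5, 8, 7, 6, 3]


def shape_dependent_code(window, masks, p, T):
    """Shape-dependent Fibonacci-p code of one window (list of rows)."""
    # Arithmetic mean inside each shape; the averaging acts like a mean filter.
    means = [sum(window[r][c] for r, c in m) / len(m) for m in masks]
    center = means[4]  # the central shape contains the center pixel
    weights = fibonacci_p_weights(p, len(RING))
    return sum(w for idx, w in zip(RING, weights) if means[idx] - center >= T)


small = [[10, 20, 30], [40, 25, 5], [25, 15, 50]]
print(shape_dependent_code(small, block_masks(3), p=0, T=0))  # 212
# Upsample each pixel into a 2x2 block: every block mean equals the original pixel.
big = [[small[r // 2][c // 2] for c in range(6)] for r in range(6)]
print(shape_dependent_code(big, block_masks(6), p=0, T=0))    # 212
noisy = [row[:] for row in big]
noisy[0][0] = 40  # one outlier pixel in the top-left block (mean becomes 17.5)
print(shape_dependent_code(noisy, block_masks(6), p=0, T=0))  # still 212
```

With a 3×3 window each shape is a single pixel, so the code reduces to the classical case. In the last call, a single pixel raised from 10 to 40 leaves the code at 212 because the block mean (17.5) stays below the center mean of 25; had that pixel been the lone top-left neighbor in a 3×3 window, its bit would have flipped.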

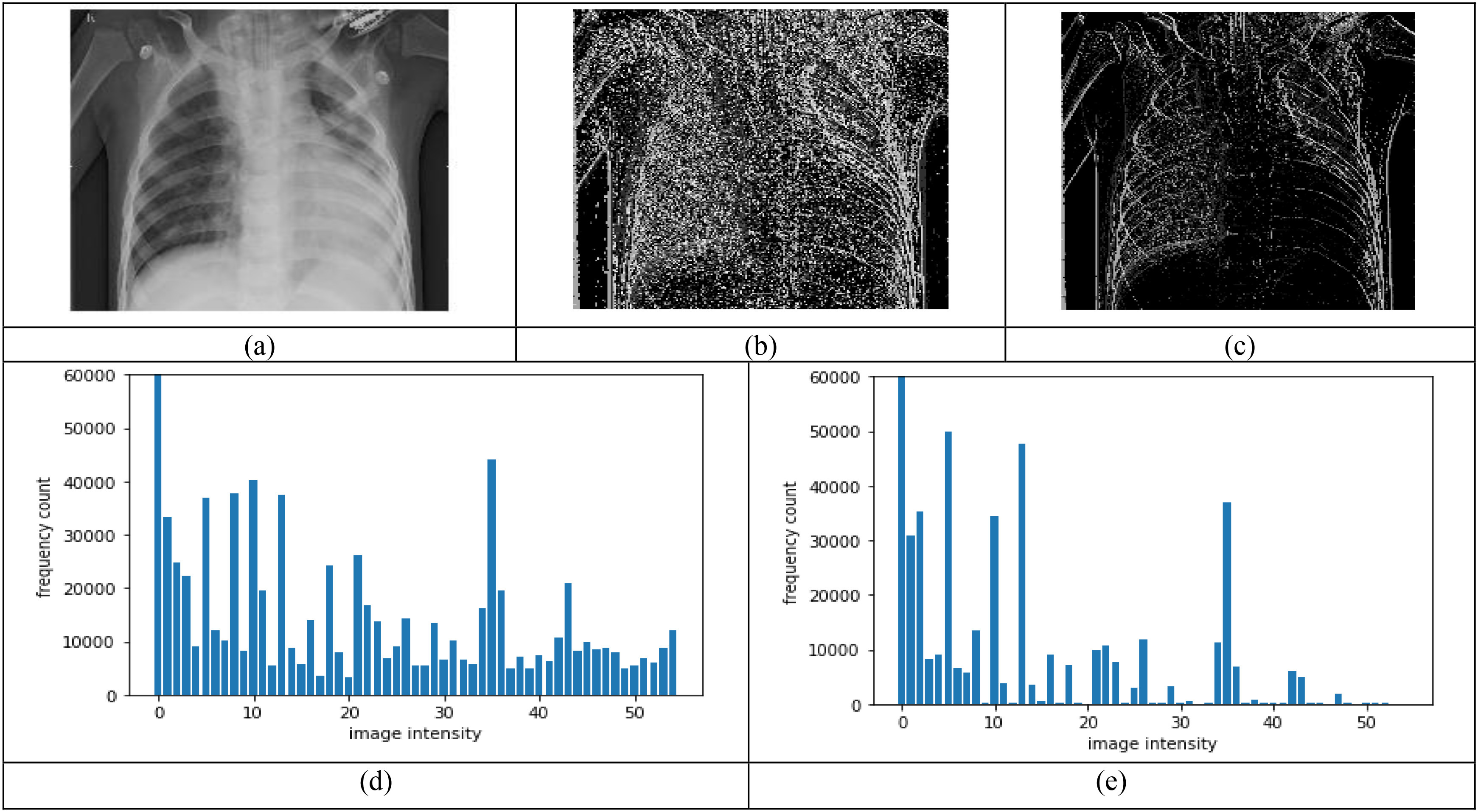

The main advantage of shape-dependent Fibonacci-p patterns is that textural patterns aligned in different directions and shapes in the image are encoded in a single operation. In addition, the arithmetic-mean computation behaves like mean filtering, inherently suppressing noise present in the image. Another advantage of shape-dependent Fibonacci-p patterns is that they can detect different textures and discontinuities, such as spots, flat areas, edges and edge ends, and curves. Thus, the key benefit of the shape-dependent Fibonacci pattern concept is that it captures more textural information than the classical Fibonacci case, making it a more data-adaptive and context-aware image descriptor. Fig. 3 compares the classical Fibonacci operator and the shape-dependent Fibonacci operator on COVID-19 radiographs.

Fig. 3.

Comparison between classical Fibonacci and shape-dependent Fibonacci-p patterns, using a set window size of  , and  . (a) Original image, (b) classical Fibonacci image, (c) shape-dependent Fibonacci-p image using structural pattern 2, and (d) and (e) histograms obtained from the images in (b) and (c), respectively.

It can be observed that for a set window size of  , threshold of 2, and

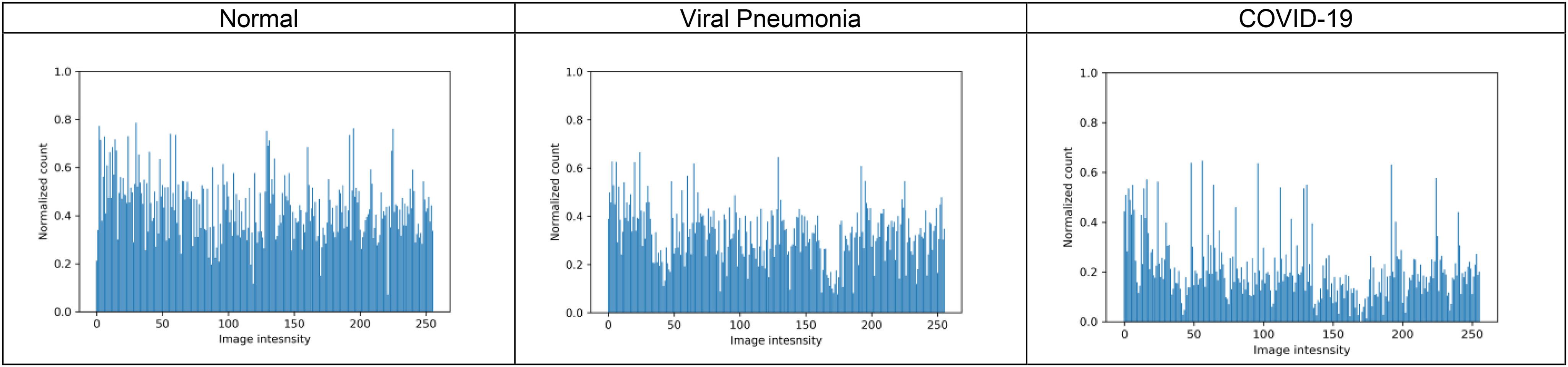

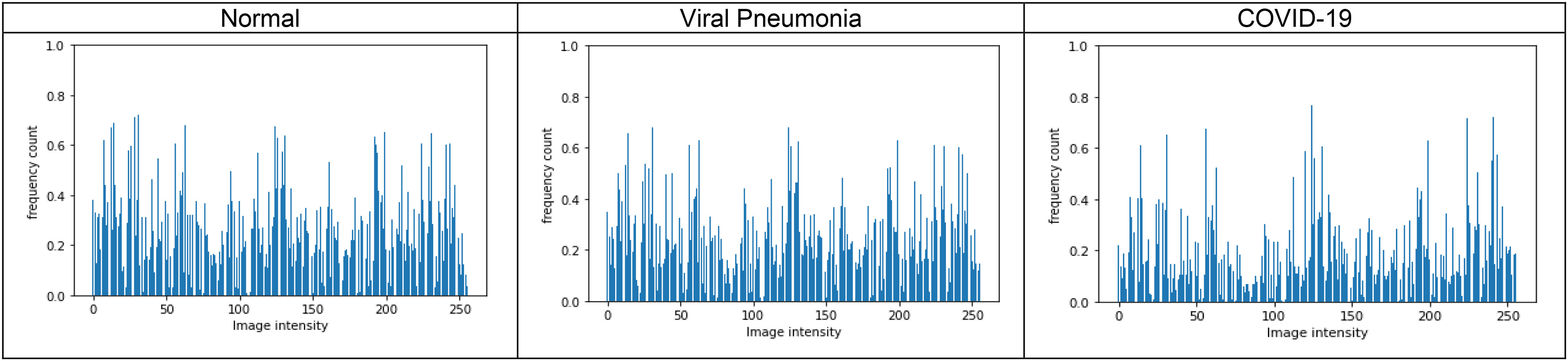

, threshold of 2, and  , the Shape-dependent Fibonacci operator has less noise encoded compared to the classical case, which reflects on the histogram feature extracted from the Fibonacci images. The histogram feature obtained from the shape-dependent Fibonacci images of all three classes is then scaled between 0 and 1 and sent to the classifier for training and testing purposes. Fig. 4 illustrates the histograms computed from the full normal, viral pneumonia and COVID-19 radiographs present in the Kaggle dataset. Fig. 5 illustrates the histograms computed from the normal, viral pneumonia and COVID-19 radiographs from the lung area-specific radiograph Kaggle dataset. The histograms shown is computed over 20 images and averaged for each class.

, the Shape-dependent Fibonacci operator has less noise encoded compared to the classical case, which reflects on the histogram feature extracted from the Fibonacci images. The histogram feature obtained from the shape-dependent Fibonacci images of all three classes is then scaled between 0 and 1 and sent to the classifier for training and testing purposes. Fig. 4 illustrates the histograms computed from the full normal, viral pneumonia and COVID-19 radiographs present in the Kaggle dataset. Fig. 5 illustrates the histograms computed from the normal, viral pneumonia and COVID-19 radiographs from the lung area-specific radiograph Kaggle dataset. The histograms shown is computed over 20 images and averaged for each class.

Fig. 4.

Illustration of histograms of normal, viral pneumonia, and COVID-19 radiographs from the full radiograph Kaggle dataset for pattern value  , window size  , and  .

Fig. 5.

Illustration of histograms of normal, viral pneumonia, and COVID-19 radiographs from the lung area-specific Kaggle dataset for pattern value p = 0, window size  , and  .
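The histogram feature described above (a count of each code value in the Fibonacci image, scaled to [0, 1], and averaged per class for Figs. 4 and 5) can be sketched as below. The text does not say how the scaling is done; this sketch ASSUMES per-vector min-max scaling, whereas scaling each feature across the whole dataset would be an equally plausible alternative. The function names are hypothetical.

```python
def fibonacci_histogram(code_image, n_bins):
    """Histogram of code values in a Fibonacci image (2-D list of ints)."""
    hist = [0] * n_bins
    for row in code_image:
        for code in row:
            hist[code] += 1
    return hist


def minmax_scale(features):
    """ASSUMED scaling: map one feature vector to [0, 1]; a constant vector maps to zeros."""
    lo, hi = min(features), max(features)
    if hi == lo:
        return [0.0] * len(features)
    return [(v - lo) / (hi - lo) for v in features]


def mean_histogram(histograms):
    """Element-wise average of equal-length histograms (e.g., over images of one class)."""
    n = len(histograms)
    return [sum(col) / n for col in zip(*histograms)]


codes = [[0, 1], [1, 3]]
hist = fibonacci_histogram(codes, n_bins=4)
print(hist)                # [1, 2, 0, 1]
print(minmax_scale(hist))  # [0.5, 1.0, 0.0, 0.5]
```

In practice, n_bins would be the number of possible codes for the chosen p and number of neighbors (the sum of the Fibonacci weights plus one).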

IV. Computer Simulations and Experimental Results

A. Dataset Description

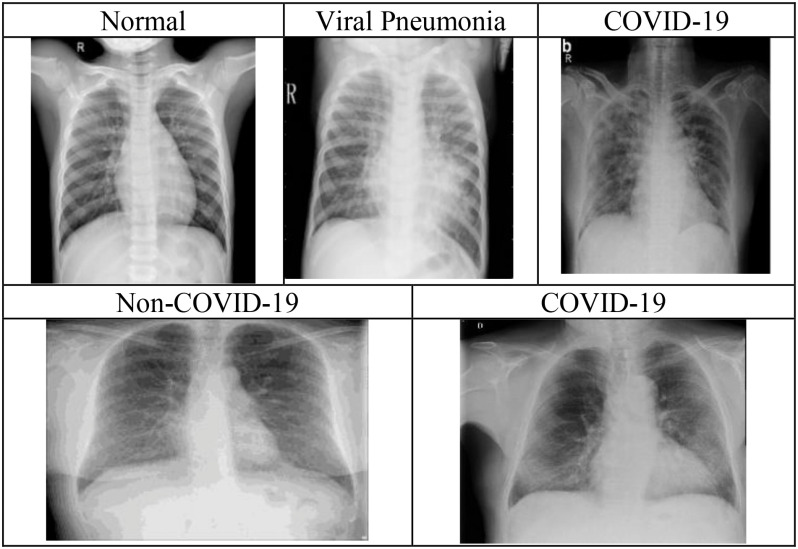

The first dataset (Kaggle dataset) comprises 219 COVID-19, 1345 viral pneumonia, and 1341 normal chest radiographs, obtained from the publicly available Kaggle website [2]. The authors of [2] collected the COVID-19 chest radiographs from different sources [40], [41] and from published articles related to coronavirus. Similarly, the viral pneumonia and normal chest radiographs in the dataset were collected from a publicly available dataset on the Kaggle website [42]. Each radiograph in the database has a size of  . The second dataset (COVIDGR dataset) comprises 852 chest radiographs, with the positive (COVID-19) and negative (non-COVID-19) classes each containing 426 chest radiographs [52]. All the images in this dataset were acquired from the same X-ray machine, and a chest radiograph was labeled as COVID-19 only when both the RT-PCR test and the radiologist confirmed the result within a day [52]. Image normalization is performed here so that all images lie in the same contrast range and the effectiveness of the classification system is not affected. Fig. 6 shows sample radiographs from the Kaggle and COVIDGR datasets.

Fig. 6.

Illustration of chest radiographs present in the Kaggle dataset (top row) and COVIDGR dataset (bottom row).

B. Feature Extraction and Training

The images are read sequentially from the dataset's image directories and normalized. Shape-dependent Fibonacci features are extracted from these normalized images, and the resulting feature matrix and its corresponding labels are randomly shuffled and split into training, testing, and validation sets. Six machine learning classifiers, namely SVM [43], KNN [44], Random Forest [45], AdaBoost [46], Gradient Tree Boosting [47], and Decision Tree [48], are used for training. For each of these classifiers, automated hyper-parameter tuning with cross-validation is performed, and the classifier model giving the best result is selected automatically. For the Kaggle dataset, the feature matrix and its labels are randomly shuffled and split into 70% training and 30% testing sets, with 10% of the training data used as a validation set; hyper-parameter tuning uses 10-fold cross-validation. For the COVIDGR dataset, the feature matrix and its labels are randomly shuffled and split into 90% training and 10% testing sets, with 10% of the training data used for validation; hyper-parameter tuning uses 5-fold cross-validation.
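The split and cross-validation protocol above can be sketched with the standard library alone. This is an illustrative sketch, not the paper's code: the function names are hypothetical, the seed is arbitrary, and rounding of fractional split sizes is an assumption. In practice, scikit-learn's `train_test_split`, `KFold`, and `GridSearchCV` (in `sklearn.model_selection`) provide these steps directly.

```python
import random


def split_indices(n, test_frac, val_frac, seed=0):
    """Shuffle 0..n-1, hold out test_frac for testing, then val_frac of the rest for validation."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_frac)
    test, train = idx[:n_test], idx[n_test:]
    n_val = round(len(train) * val_frac)
    val, train = train[:n_val], train[n_val:]
    return train, val, test


def kfold_indices(n, k):
    """Yield (train, held_out) index lists for k contiguous folds of 0..n-1."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        held_out = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, held_out
        start += size


# Kaggle-style protocol: 70/30 split, then 10% of the training part for validation.
train, val, test = split_indices(100, test_frac=0.3, val_frac=0.1)
print(len(train), len(val), len(test))  # 63 7 30
```

Because the indices are already shuffled by split_indices, taking contiguous folds over the training positions still gives random folds; for imbalanced classes, a stratified variant would keep the class ratios equal across folds.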

C. Performance Evaluation

The performance of the selected classifier model is evaluated using accuracy, sensitivity (recall), specificity, precision, and F1-score, computed as follows [49], [50]:

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
$$

$$
\text{Recall (Sensitivity)} = \frac{TP}{TP + FN}
$$

$$
\text{Specificity} = \frac{TN}{TN + FP}
$$

$$
\text{Precision} = \frac{TP}{TP + FP}
$$

$$
\text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}
$$

where $TP$ and $TN$ are the numbers of images correctly classified as positive and negative, respectively, and $FP$ and $FN$ are the numbers of images falsely classified as positive and negative, respectively.
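The evaluation parameters listed above follow their standard definitions in terms of TP, TN, FP, and FN. A minimal sketch is shown below; the function name is hypothetical, and returning 0.0 when a denominator is zero is an assumed convention. The example counts are made up for illustration and are not results from this paper.

```python
def binary_metrics(tp, tn, fp, fn):
    """Accuracy, recall, specificity, precision, and F1-score from confusion counts."""
    total = tp + tn + fp + fn
    accuracy = (tp + tn) / total if total else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0            # sensitivity
    specificity = tn / (tn + fp) if tn + fp else 0.0
    precision = tp / (tp + fp) if tp + fp else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"accuracy": accuracy, "recall": recall, "specificity": specificity,
            "precision": precision, "f1": f1}


# Made-up counts: 30 true positives, 60 true negatives, 0 false positives, 10 false negatives.
m = binary_metrics(tp=30, tn=60, fp=0, fn=10)
print({k: round(v, 4) for k, v in m.items()})
# {'accuracy': 0.9, 'recall': 0.75, 'specificity': 1.0, 'precision': 1.0, 'f1': 0.8571}
```

The example also shows why accuracy alone can mislead on imbalanced data: accuracy is 0.9 even though a quarter of the positive cases are missed.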

D. Results

For the Kaggle dataset, four classification schemes are implemented in this paper, namely COVID-19 vs viral pneumonia, COVID-19 vs normal, normal vs viral pneumonia, and normal vs viral pneumonia vs COVID-19 chest radiographs. Since the dataset used here is imbalanced, accuracy alone is not enough to measure the effectiveness of the feature extractor. How well the model distinguishes COVID-19, viral pneumonia, and normal chest radiographs from each other is also a critical factor to measure; thus, parameters such as recall, specificity, precision, and F1-score are more significant. To select the optimal pattern value (p) and threshold value (T) for these classification schemes, both are varied from 0 to 3, and the values giving the best precision-recall performance are chosen. Similarly, the optimal structural pattern is selected by evaluating the precision-recall performance of all three proposed structural patterns.
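The parameter selection above amounts to a small grid search. The sketch below searches p and T over 0 to 3 and the three structural patterns jointly, which is a simplification (the text selects the structural pattern in a separate step). The scoring function is a HYPOTHETICAL placeholder for a validation metric such as average precision; the toy scorer is made up purely to exercise the search and does not reflect the paper's results.

```python
from itertools import product


def select_parameters(score_fn, p_values=range(4), t_values=range(4), patterns=(1, 2, 3)):
    """Return ((p, T, pattern), score) maximizing score_fn(p, T, pattern)."""
    best_params, best_score = None, float("-inf")
    for p, t, pattern in product(p_values, t_values, patterns):
        score = score_fn(p, t, pattern)
        if score > best_score:  # strict '>' keeps the first of any tied settings
            best_params, best_score = (p, t, pattern), score
    return best_params, best_score


def toy_score(p, t, pattern):
    # HYPOTHETICAL stand-in for a validation precision-recall summary.
    return -(p - 1) ** 2 - (t - 2) ** 2 + (1 if pattern == 2 else 0)


params, score = select_parameters(toy_score)
print(params, score)  # (1, 2, 2) 1
```

Each call to score_fn would, in a real pipeline, extract features with the given settings, train the classifiers, and evaluate on the validation set, so the 48 combinations dominate the cost of the search.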

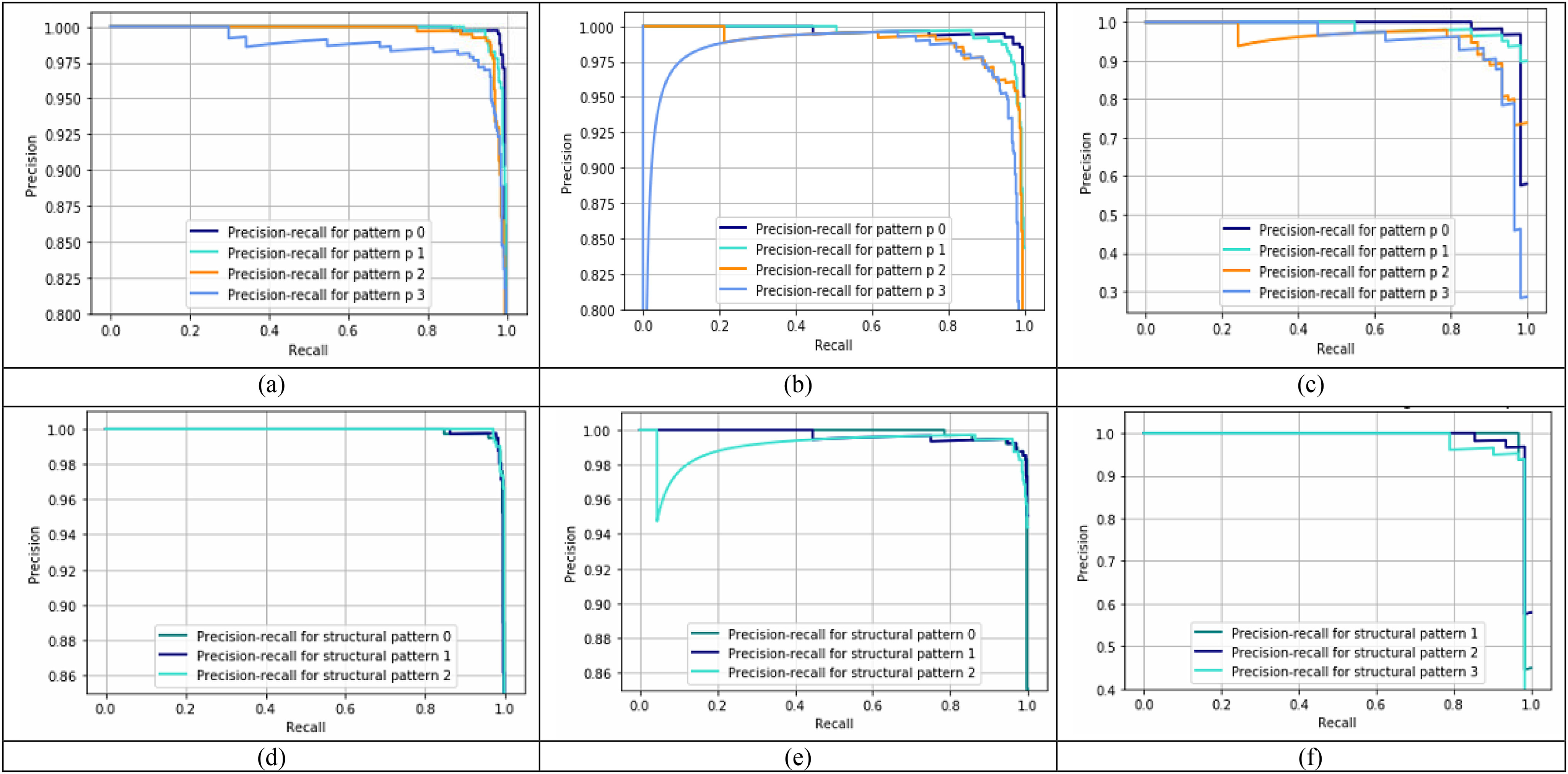

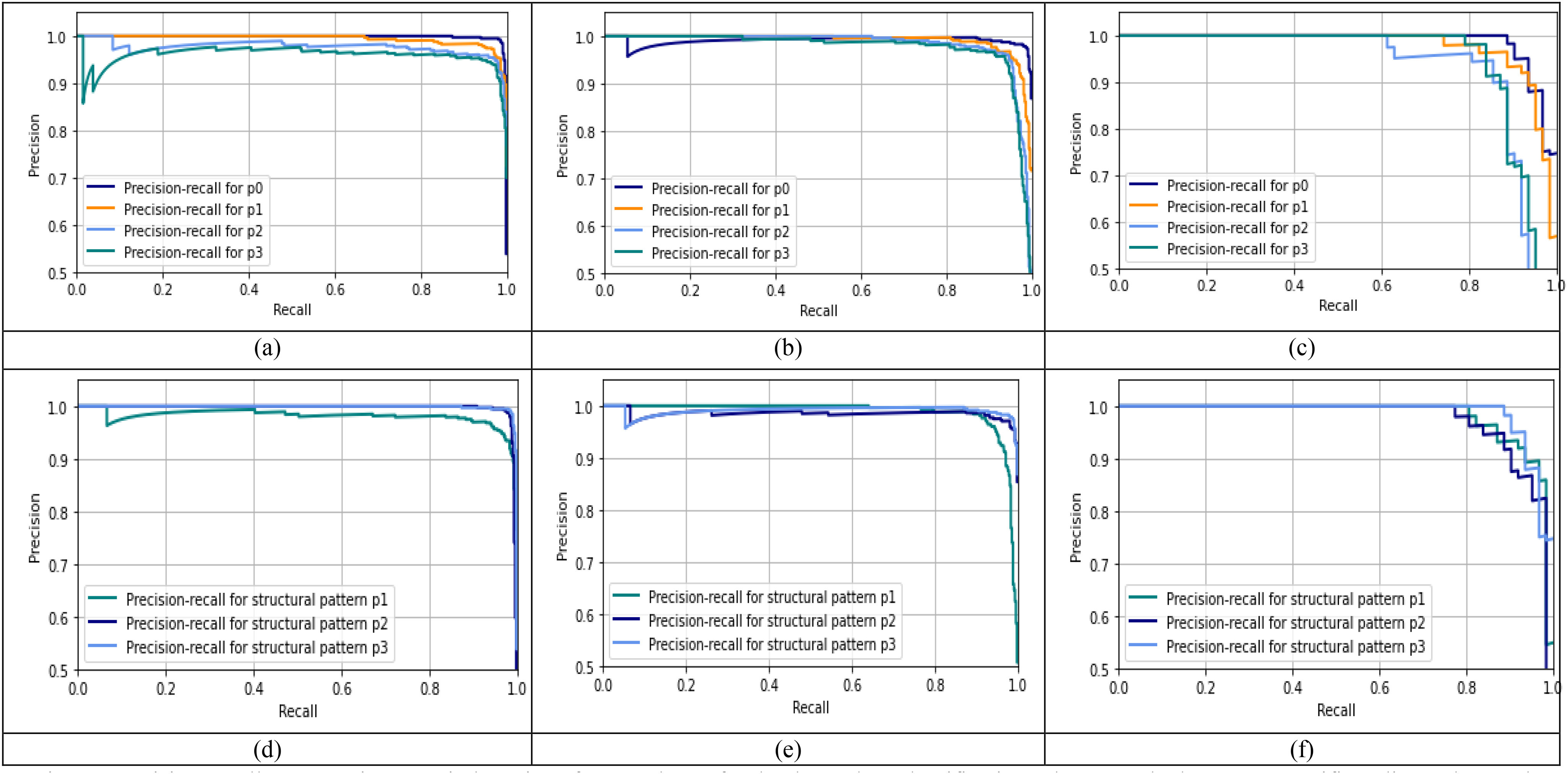

Fig. 7 illustrates the precision-recall curves plotted to assess the performance of different p values and structural patterns for the three-class classification scheme, i.e., normal vs viral pneumonia vs COVID-19. Fig. 7(a), (b), and (c) show the precision-recall curves for different p values for the normal, viral pneumonia, and COVID-19 cases, respectively. It can be observed that  gives the best performance for all three cases. To generate these curves, the window size and threshold value were set to  and 1, respectively, and structural pattern 2 was used. Likewise, Fig. 7(d), (e), and (f) show the precision-recall curves of the different structural patterns for the normal, viral pneumonia, and COVID-19 cases, respectively.

Fig. 7.

Precision-recall curves using a set window size of  and  for the three-class classification scheme on the full radiograph Kaggle dataset. (a), (b), and (c) show the precision-recall performance of different pattern (p) values on normal, viral pneumonia, and COVID-19 images, respectively, using structural pattern 2, and (d), (e), and (f) show the precision-recall performance of different structural patterns on normal, viral pneumonia, and COVID-19 images, respectively, using  .
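A precision-recall curve such as those in Fig. 7 is obtained by sweeping a decision threshold over a classifier's scores for one class (one-vs-rest in the three-class case). The sketch below computes the curve's points from scores and binary labels; the function name and the toy data are hypothetical. scikit-learn's `sklearn.metrics.precision_recall_curve` offers an equivalent, library-backed version.

```python
def precision_recall_points(scores, labels):
    """(threshold, precision, recall) at each distinct score, highest first.

    labels: 1 for the positive class (e.g., COVID-19 in one-vs-rest), else 0.
    """
    pairs = sorted(zip(scores, labels), key=lambda x: -x[0])
    n_pos = sum(labels)
    points, tp, fp, i = [], 0, 0, 0
    while i < len(pairs):
        thr = pairs[i][0]
        # Every sample scoring >= thr is predicted positive; consume ties together.
        while i < len(pairs) and pairs[i][0] == thr:
            if pairs[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        recall = tp / n_pos if n_pos else 0.0
        points.append((thr, tp / (tp + fp), recall))
    return points


for point in precision_recall_points([0.9, 0.8, 0.7, 0.6], [1, 0, 1, 0]):
    print(point)
# (0.9, 1.0, 0.5)
# (0.8, 0.5, 0.5)
# (0.7, 0.6666666666666666, 1.0)
# (0.6, 0.5, 1.0)
```

A curve that stays close to the top-right corner (high precision at high recall) indicates a better setting, which is how different p values and structural patterns can be compared.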

Similar performance is observed for all three structural patterns on normal and viral pneumonia images, but structural pattern 2 yields better performance on COVID-19 images. To generate these curves, the window size and threshold value were set to  and 1, respectively, and  was used. The optimal parameters for the other classification schemes were obtained similarly. Computer simulations show that for the COVID-19 vs viral pneumonia and normal vs viral pneumonia classification schemes,  , and structural pattern 2 give the best results, whereas for the COVID-19 vs normal scheme, both  and  , with structural pattern 2, yield the best outcome. Table III(A) compares the performance of the proposed feature extractor with that of the DL-based methods on the Kaggle dataset for the COVID-19 vs normal and normal vs viral pneumonia vs COVID-19 classification schemes.

TABLE III(A). Comparative analysis of the proposed method with DL based methods used on the full radiograph Kaggle dataset.

| Classification scheme | Author | Method | Accuracy | Recall | Specificity | Precision | F1-score |
|---|---|---|---|---|---|---|---|
| COVID-19 vs Normal | Chowdhury et al. [2] | AlexNet | 97.5% | 95% | 100% | 100% | 97% |
| | | ResNet18 | 96.7% | 93.3% | 100% | 100% | 96.5% |
| | | DenseNet201 | 97.5% | 95% | 100% | 100% | 97.4% |
| | | SqueezeNet | 98.3% | 96.7% | 100% | 100% | 98.3% |
| | Our work* | Classical Fibonacci-p patterns | 99.78% | 98.21% | 100% | 100% | 99.09% |
| | | Shape-dependent Fibonacci-p patterns | 99.78% | 100% | 99.76% | 98.25% | 99.12% |
| Normal vs viral pneumonia vs COVID-19 | Chowdhury et al. [2] | AlexNet | 95.4% | 93% | 95.8% | 100% | 95.5% |
| | | ResNet18 | 95% | 95% | 96% | 100% | 97.4% |
| | | DenseNet201 | 96.7% | 96% | 96% | 98.3% | 97.1% |
| | | SqueezeNet | 98.3% | 96.7% | 99% | 98% | 98.3% |
| | Bassi et al. [27] | CheXNet | 97.8% | 97.8% | - | 100% | 97.8% |
| | Our work* | Classical Fibonacci-p patterns | 98.74% | 97.73% | 99.27% | 98.17% | 97.95% |
| | | Shape-dependent Fibonacci-p patterns | 98.88% | 98.72% | 99.35% | 98.29% | 98.50% |

It can be observed that, for the COVID-19 vs normal classification scheme, the proposed method improves the detection of COVID-19 images (recall) by roughly 3–7 percentage points. High sensitivity, i.e., correctly identifying most of the COVID-19 images, is preferable in the current pandemic climate. Similarly, for the three-class classification scheme, the proposed method improves recall and specificity by approximately 1–6 and 0.4–3.6 percentage points, respectively, compared with the methods used by Chowdhury et al. [2] and Bassi et al. [27]. Likewise, compared with the classical Fibonacci-p pattern, the proposed shape-dependent Fibonacci-p pattern yields better recall for both classification schemes. This is because the conventional Fibonacci-p patterns fail to incorporate the alignment and shape of the textural patterns that are required to distinguish the three classes from each other more accurately.
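The improvement ranges discussed in the paragraph above can be checked by recomputing the percentage-point differences directly from Table III(A). The values below are transcribed from that table; the dictionary and function names are illustrative.

```python
# Values (%) transcribed from Table III(A); keys are the baseline methods.
covid_vs_normal_recall = {"AlexNet": 95.0, "ResNet18": 93.3, "DenseNet": 95.0, "SqueezeNet": 96.7}
three_class_recall = {"AlexNet": 93.0, "ResNet18": 95.0, "DenseNet": 96.0,
                      "SqueezeNet": 96.7, "CheXNet": 97.8}
# CheXNet reports no specificity, so it is omitted here.
three_class_specificity = {"AlexNet": 95.8, "ResNet18": 96.0, "DenseNet": 96.0, "SqueezeNet": 99.0}


def margins(proposed, baselines):
    """Percentage-point gain of the proposed method over each baseline."""
    return {name: round(proposed - value, 2) for name, value in baselines.items()}


binary = margins(100.0, covid_vs_normal_recall)       # shape-dependent recall, COVID-19 vs normal
recall3 = margins(98.72, three_class_recall)          # shape-dependent recall, three-class
spec3 = margins(99.35, three_class_specificity)       # shape-dependent specificity, three-class
for label, m in [("COVID-19 vs normal recall", binary),
                 ("three-class recall", recall3),
                 ("three-class specificity", spec3)]:
    print(f"{label}: {min(m.values())} to {max(m.values())}")
# COVID-19 vs normal recall: 3.3 to 6.7
# three-class recall: 0.92 to 5.72
# three-class specificity: 0.35 to 3.55
```

Rounding to two decimals removes floating-point noise (e.g., 100 − 93.3 evaluates to 6.700000000000003 before rounding).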

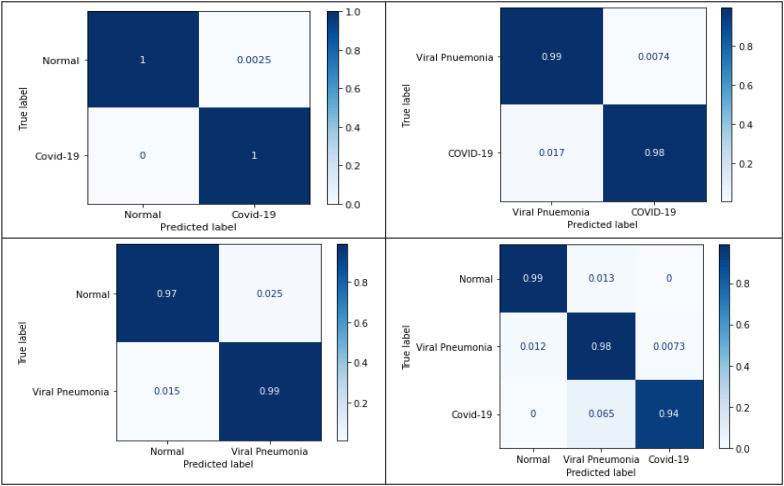

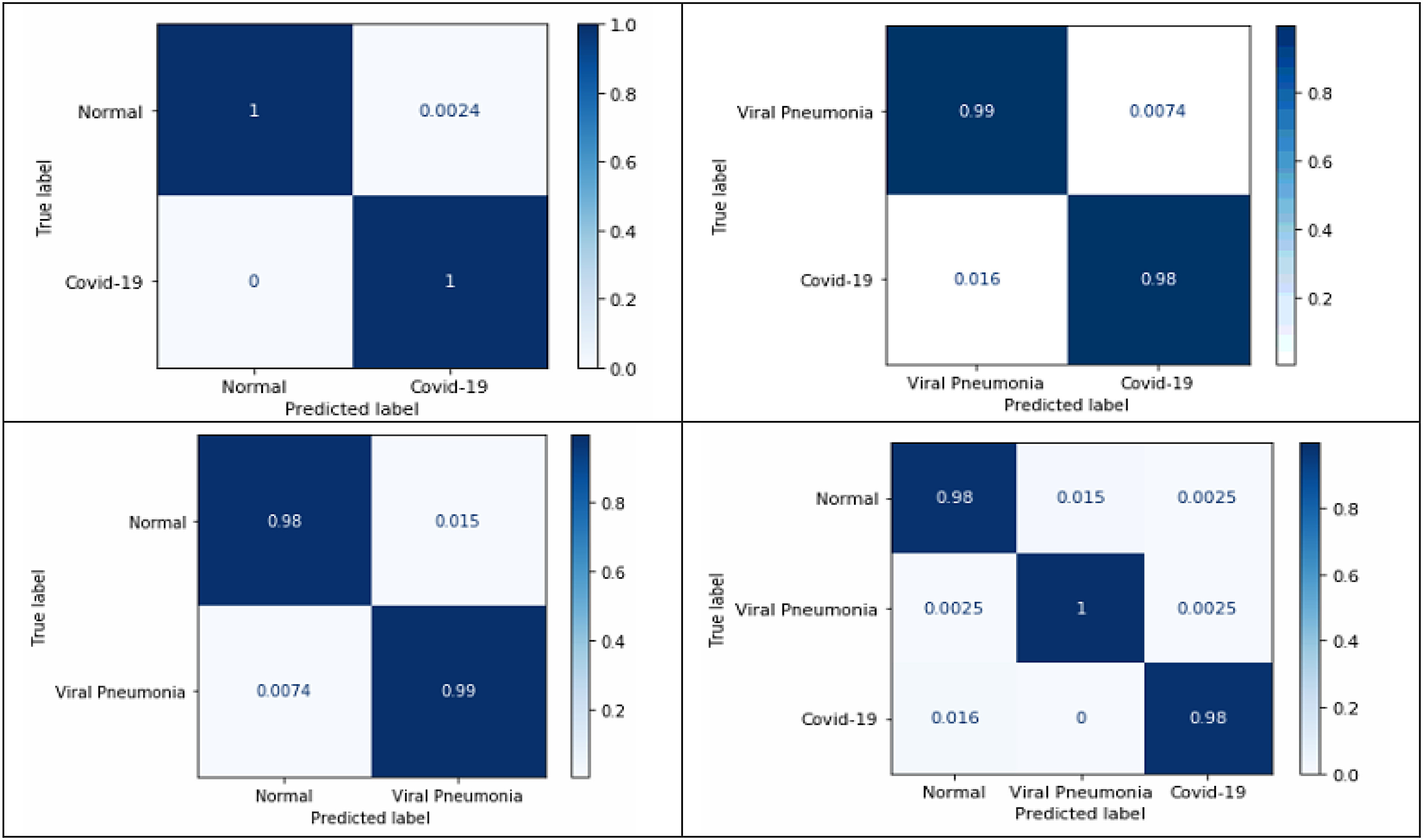

Table IV shows the performance of the proposed method for the COVID-19 vs viral pneumonia and normal vs viral pneumonia classification schemes; both achieve high recall and specificity. On the full radiograph Kaggle dataset, 98.44% of COVID-19 images are correctly distinguished from viral pneumonia images, and 98.50% of viral pneumonia images are correctly distinguished from normal images. Similarly, 99.26% of viral pneumonia images are correctly distinguished from COVID-19 images. Confusion matrices play a critical role in understanding how the classification models behave on the test data, and the evaluation parameters are computed from their entries. Fig. 10 shows the normalized confusion matrices for these classification schemes on the full radiograph Kaggle dataset; they are consistent with the results in the tables above.
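For reference, the evaluation parameters used throughout this section follow the standard definitions in terms of true/false positives and negatives. The Python sketch below computes them for a two-class scheme and shows one common way to obtain a normalized confusion matrix, dividing each row (true class) by its total. Whether Figs. 10 and 11 normalize by row in exactly this way is an assumption, the helper names are hypothetical, and the counts in the usage example are made-up numbers, not results from this paper.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class BinaryCounts:
    tp: int  # positive-class images predicted positive (e.g., COVID-19 -> COVID-19)
    fn: int  # positive-class images predicted negative
    fp: int  # negative-class images predicted positive
    tn: int  # negative-class images predicted negative


def _ratio(num: float, den: float) -> float:
    """Division that returns 0.0 instead of raising when the denominator is 0."""
    return num / den if den else 0.0


def evaluation_parameters(c: BinaryCounts) -> Dict[str, float]:
    """Accuracy, recall (sensitivity), specificity, precision, and F1-score."""
    recall = _ratio(c.tp, c.tp + c.fn)
    specificity = _ratio(c.tn, c.tn + c.fp)
    precision = _ratio(c.tp, c.tp + c.fp)
    accuracy = _ratio(c.tp + c.tn, c.tp + c.tn + c.fp + c.fn)
    f1 = _ratio(2 * precision * recall, precision + recall)
    return {"accuracy": accuracy, "recall": recall, "specificity": specificity,
            "precision": precision, "f1": f1}


def row_normalize(cm: List[List[int]]) -> List[List[float]]:
    """Normalize an n x n confusion matrix so each row (true class) sums to 1."""
    return [[_ratio(v, sum(row)) for v in row] for row in cm]


if __name__ == "__main__":
    # Hypothetical counts, for illustration only.
    params = evaluation_parameters(BinaryCounts(tp=45, fn=5, fp=10, tn=40))
    print({k: round(v, 3) for k, v in params.items()})
    # {'accuracy': 0.85, 'recall': 0.9, 'specificity': 0.8, 'precision': 0.818, 'f1': 0.857}
    print(row_normalize([[45, 5], [10, 40]]))  # [[0.9, 0.1], [0.2, 0.8]]
```

With row normalization, the diagonal of a two-class matrix holds recall and specificity directly, which is why the normalized matrices can be read against the tables.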

TABLE IV. Performance analysis of the COVID-19 vs viral pneumonia and normal vs viral pneumonia classification schemes on the full radiograph and lung area-specific radiograph Kaggle datasets.

| Metric | COVID-19 vs Viral Pneumonia (full radiograph) | Normal vs Viral Pneumonia (full radiograph) | COVID-19 vs Viral Pneumonia (lung area-specific) | Normal vs Viral Pneumonia (lung area-specific) |
|---|---|---|---|---|
| Accuracy | 99.14% | 98.88% | 99.13% | 98.01% |
| Recall | 98.44% | 98.50% | 98.28% | 98.52% |
| Specificity | 99.26% | 99.26% | 99.26% | 97.50% |
| Precision | 95.45% | 98.53% | 95.00% | 97.56% |
| F1-score | 96.92% | 98.90% | 96.61% | 98.04% |

Fig. 10.

Normalized confusion matrices for the proposed four classification schemes on the full radiograph Kaggle dataset.

E. Results Obtained for the Lung Area-Specific Radiograph Kaggle Dataset

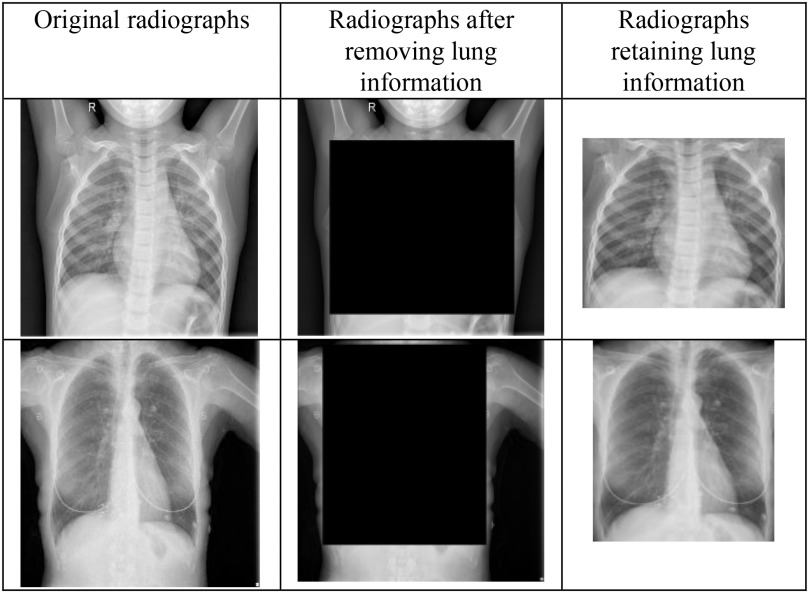

Cohen et al. [53] observed that, when chest radiograph prediction is generalized across multiple datasets, models may learn dataset-specific rather than disease-specific information. Currently, most COVID-19 detection and recognition papers combine the COVID-19 images from the dataset in [54] with existing non-COVID-19 datasets. In [51], the authors proposed a protocol to test whether a COVID-19 prediction model learns dataset-specific or disease-specific information when used across multiple datasets. In this protocol, the lung information is removed by blackening the center of chest radiographs obtained from different datasets, and AlexNet is trained to see whether it can still identify the source dataset of each image. When both the training and testing sets contained images from the same datasets, AlexNet distinguished the sources very accurately, which indicates that dataset-specific cues remain outside the lung region. The recommended remedies were to use datasets with similar features or to find a pre-processing method that removes dataset-specific information. Therefore, in this paper, the chest radiographs from the Kaggle and COVIDGR datasets are hand-cropped to retain only the lung region, i.e., the disease-specific information, generating the lung area-specific radiograph Kaggle and COVIDGR datasets. The proposed feature descriptor is tested on these datasets, and its performance is evaluated. Fig. 9 illustrates sample images from the lung area-specific Kaggle and COVIDGR datasets. This dataset is available on the Kaggle website (www.kaggle.com/dataset/ab84db1d9bab332bb7d6e2bd89a287c0b712144423f9f773e1924c62255099d4).
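The two pre-processing ideas discussed above can be illustrated with a small, dependency-free Python sketch: `blacken_center` mimics the idea behind the protocol in [51] by zeroing a centered rectangle, and `crop` keeps only a rectangular region, standing in for the lung crop. Both helper names, the centered-rectangle shape, and the `frac` parameter are hypothetical choices for illustration; the paper itself crops the lung region by hand, and [51] may define the blackened region differently.

```python
from typing import List

Image = List[List[int]]  # grayscale image stored as rows of pixel intensities


def blacken_center(image: Image, frac: float = 0.5) -> Image:
    """Return a copy with a centered rectangle covering `frac` of the height
    and width set to 0 (hypothetical stand-in for the protocol in [51])."""
    if not 0.0 <= frac <= 1.0:
        raise ValueError("frac must be in [0, 1]")
    h, w = len(image), len(image[0])
    bh, bw = int(h * frac), int(w * frac)
    top, left = (h - bh) // 2, (w - bw) // 2
    out = [row[:] for row in image]  # copy so the input is not modified
    for r in range(top, top + bh):
        for c in range(left, left + bw):
            out[r][c] = 0
    return out


def crop(image: Image, top: int, left: int, bottom: int, right: int) -> Image:
    """Keep only rows [top, bottom) and columns [left, right), e.g. a lung box."""
    return [row[left:right] for row in image[top:bottom]]


if __name__ == "__main__":
    img = [[1] * 4 for _ in range(4)]
    for row in blacken_center(img, 0.5):
        print(row)
    # [1, 1, 1, 1]
    # [1, 0, 0, 1]
    # [1, 0, 0, 1]
    # [1, 1, 1, 1]
    print(crop(img, 1, 1, 3, 3))  # [[1, 1], [1, 1]]
```

Blackening removes the disease-specific region to test for dataset leakage, whereas cropping does the opposite and keeps only that region, which is the direction taken in this paper.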

Fig. 9.

Illustration of full radiographs (left), radiographs after cropping lung information (middle), and radiographs having just the lung information (right) for the Kaggle dataset (top) and COVIDGR dataset (bottom).

Fig. 8 illustrates the precision-recall curves obtained for the three-class classification scheme on the lung area-specific radiograph Kaggle dataset. Fig. 8(a), (b), and (c) show the precision-recall curves for different p-values using a fixed structural pattern, and Fig. 8(d), (e), and (f) show the precision-recall curves for different structural patterns using a fixed p-value, for the normal, viral pneumonia, and COVID-19 classes, respectively. To generate these curves, a fixed threshold value of 0 and a fixed window size are used. For the three-class classification scheme, structural pattern 1 gives better recall for the COVID-19 radiographs but low recall for the viral pneumonia and normal classes, whereas structural pattern 3 performs better for all three classes. Comparing the precision-recall curves for the lung area-specific and full radiograph Kaggle datasets, performance is similar for the normal and viral pneumonia classes, with a slight decrease in COVID-19 detection. This results in a decrease of about 2% in the overall recall of the three-class classification scheme, with similar specificity, compared with the full radiograph Kaggle dataset, as can be seen from Table III(A) and Table III(B).
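Per-class precision-recall curves such as those in Fig. 8 are typically computed one-vs-rest: each class in turn is treated as positive, the classifier's score for that class is swept as a decision threshold, and precision and recall are recorded at each threshold. The Python sketch below assumes the classifier outputs a real-valued score per class (for example, an SVM decision value or a class probability); it is a generic illustration with hypothetical helper names, not the exact procedure used to draw Fig. 8.

```python
from typing import List, Sequence, Tuple


def one_vs_rest(labels: Sequence[str], positive: str) -> List[int]:
    """Binary labels: 1 for images of the `positive` class, 0 for all others."""
    return [1 if y == positive else 0 for y in labels]


def pr_curve(scores: Sequence[float], binary: Sequence[int]) -> List[Tuple[float, float]]:
    """(recall, precision) at every distinct score threshold, highest first.

    An image is predicted positive when its score is >= the current threshold.
    """
    total_pos = sum(binary)
    if total_pos == 0:
        raise ValueError("the positive class has no examples")
    ranked = sorted(zip(scores, binary), key=lambda pair: pair[0], reverse=True)
    points: List[Tuple[float, float]] = []
    tp = fp = i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Include every sample tied at this threshold before recording a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            tp += ranked[i][1]
            fp += 1 - ranked[i][1]
            i += 1
        points.append((tp / total_pos, tp / (tp + fp)))
    return points


if __name__ == "__main__":
    # Hypothetical three-class example: true labels and each image's COVID-19 score.
    labels = ["covid", "normal", "covid", "pneumonia"]
    covid_scores = [0.9, 0.8, 0.7, 0.6]
    print(pr_curve(covid_scores, one_vs_rest(labels, "covid")))
    # [(0.5, 1.0), (0.5, 0.5), (1.0, 0.6666666666666666), (1.0, 0.5)]
```

Repeating the call with the normal and viral pneumonia scores gives the other two per-class curves.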


Fig. 8.

Precision-recall curves using a fixed window size and threshold for the three-class classification scheme on the lung area-specific radiograph Kaggle dataset. (a), (b), and (c) show the precision-recall performance of different pattern (p) values on normal, viral pneumonia, and COVID-19 images, respectively, using structural pattern 3; (d), (e), and (f) show the precision-recall performance of different structural patterns on normal, viral pneumonia, and COVID-19 images, respectively, using a fixed p-value.

TABLE III(B). Performance analysis of the proposed method on the lung area-specific radiograph Kaggle dataset for the normal vs COVID-19 and three-class classification schemes.

| Classification scheme | Author | Method | Accuracy | Recall | Specificity | Precision | F1-score |
|---|---|---|---|---|---|---|---|
| Normal vs COVID-19 | Our work* | Classical Fibonacci-p patterns | 99.78% | 98.08% | 100% | 100% | 99.03% |
| | | Shape-dependent Fibonacci-p patterns | 99.78% | 100% | 99.75% | 98.27% | 99.13% |
| Normal vs viral pneumonia vs COVID-19 | Our work* | Classical Fibonacci-p patterns | 97.79% | 96.90% | 98.52% | 97.78% | 97.32% |
| | | Shape-dependent Fibonacci-p patterns | 98.03% | 96.76% | 98.86% | 97.20% | 96.69% |

For the two-class classification schemes COVID-19 vs normal and COVID-19 vs viral pneumonia, however, COVID-19 detection shows comparable recall and specificity on the lung area-specific and full radiograph Kaggle datasets, as can be seen from Tables III(A), III(B), and IV. For the normal vs viral pneumonia classification scheme, specificity decreases by around 2%, while recall remains similar. Computer simulations show that, with the selected p-value and window size, structural pattern 1 gives the best results for the COVID-19 vs viral pneumonia and normal vs COVID-19 classification schemes, whereas for the normal vs viral pneumonia classification scheme, structural pattern 2 with its own selected p-value and window size yields the best outcome.

Fig. 11 shows the normalized confusion matrices for these classification schemes on the lung area-specific radiograph Kaggle dataset; they are consistent with the results in the tables above. The confusion matrices show that, in the three-class classification scheme, the normal and COVID-19 classes are separated almost perfectly when only the disease-specific (lung) information in the radiographs is used.

Fig. 11.

Normalized confusion matrices for the proposed four classification schemes on the lung area-specific radiograph Kaggle dataset.

F. Performance Evaluation on COVIDGR Dataset

For the COVIDGR dataset, a two-class classification scheme, COVID-19 vs non-COVID-19, is considered in this paper. The optimal parameters for this dataset are selected with the same procedure as described for the Kaggle dataset. The performance of the proposed feature extractor is reported as the mean and standard deviation of each evaluation parameter over the five different executions of 5-fold cross-validation. Table V compares the performance of the proposed feature descriptor with that of the DL methods reported by the authors of [52]. Structural pattern 3 gives the best recall but low specificity, whereas structural pattern 2 yields a better balance between recall and specificity. Moreover, structural pattern 2 yields better recall and specificity than most of the DL methods, namely COVIDNet-CXR, COVID-CAPS, ResNet50 without segmentation, and FuCiTNet, while achieving results comparable to COVID-SDNet.
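The evaluation protocol described above (repeated executions, each scored on held-out folds and summarized as mean ± standard deviation) can be sketched in Python as follows. `stratified_folds` splits sample indices into k folds that preserve the class proportions, and `mean_std` summarizes one metric over several executions. Both names are hypothetical, and the round-robin fold assignment, the fixed random seed, and the use of the sample (rather than population) standard deviation are assumptions for illustration, since these details are not specified here.

```python
import random
import statistics
from collections import defaultdict
from typing import Dict, Hashable, List, Sequence, Tuple


def stratified_folds(labels: Sequence[Hashable], k: int = 5, seed: int = 0) -> List[List[int]]:
    """Split sample indices into k folds, dealing each class out round-robin
    after a seeded shuffle so every fold keeps roughly the same class mix."""
    by_class: Dict[Hashable, List[int]] = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)
    folds: List[List[int]] = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for j, idx in enumerate(indices):
            folds[j % k].append(idx)
    return folds


def mean_std(values: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation, for reporting as 'mean ± std'."""
    return statistics.mean(values), statistics.stdev(values)


if __name__ == "__main__":
    labels = ["COVID-19"] * 10 + ["non-COVID-19"] * 10
    for i, test_idx in enumerate(stratified_folds(labels, k=5)):
        n_covid = sum(labels[j] == "COVID-19" for j in test_idx)
        print(f"fold {i}: {len(test_idx)} test images, {n_covid} COVID-19")
    # Made-up per-execution recall values, only to show the summary format.
    m, s = mean_std([70.0, 80.0, 90.0])
    print(f"recall = {m:.2f} ± {s:.2f}")  # recall = 80.00 ± 10.00
```

Stratifying the folds keeps each test fold's COVID-19 proportion close to that of the whole dataset, so per-fold recall and specificity are less sensitive to how the split happens to fall.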

TABLE V. Comparative analysis of the proposed method with DL-based methods on the COVIDGR dataset.

| Author | Method | Accuracy | Non-COVID-19 F1-score | Non-COVID-19 Precision | Non-COVID-19 Specificity | COVID-19 F1-score | COVID-19 Precision | COVID-19 Recall |
|---|---|---|---|---|---|---|---|---|
| Tabik et al. [52] | COVIDNet-CXR | | | | | | | |
| | COVID-CAPS | | | | | | | |
| | ResNet50 without segmentation | | | | | | | |
| | ResNet50 with segmentation | | | | | | | |
| | FuCiTNet | | | | | | | |
| | COVID-SDNet | | | | | | | |
| Our work* | Structural pattern 1 | | | | | | | |
| | Structural pattern 2 | | | | | | | |
| | Structural pattern 3 | | | | | | | |

V. Conclusion

This paper proposes a machine learning-based approach using a novel textural feature descriptor, shape-dependent Fibonacci-p patterns, to distinguish COVID-19, viral pneumonia, and normal chest radiographs from each other. The key advantage of this descriptor is that it can encode textural patterns with different shapes, orientations, and discontinuities in one operation while inherently removing noise from the image. Computer simulations on the full radiograph Kaggle dataset show that the proposed method achieves better recall than the compared DL methods and the classical Fibonacci-p descriptor. COVID-19 recall of nearly 100% and 98.44% is achieved for the COVID-19 vs normal and COVID-19 vs viral pneumonia classification schemes, respectively. On the lung area-specific radiograph Kaggle dataset, similar COVID-19 detection performance is observed for the COVID-19 vs normal and COVID-19 vs viral pneumonia classification schemes. Likewise, on the COVIDGR dataset, the proposed feature descriptor performs better than most of the DL methods while achieving performance comparable to COVID-SDNet. Since the proposed approach is a machine learning model, it does not require specialized hardware, needs less training time, yields a stable model with good detection performance on small training datasets, is lightweight, and can be deployed quickly. Future efforts will focus on: (a) constructing a 3D feature descriptor for analyzing 3D medical images, such as 3D CT images, by extracting the volume information and depth of spread of the disease; (b) detecting COVID-19 symptoms using multi-view 3D shapes, where the input data are taken from different angles; (c) testing surfaces for coronavirus detection; and (d) studying the long-term effects of COVID-19 in patients who have recovered from the disease.

Acknowledgment

The authors thank the reviewers, whose suggestions helped improve the quality of the manuscript.

Contributor Information

Karen Panetta, Email: Karen@ece.tufts.edu.

Foram Sanghavi, Email: Foram.Sanghavi@tufts.edu.

Sos Agaian, Email: sos.agaian@csi.cuny.edu.

Neel Madan, Email: nmadan@tuftsmedicalcenter.org.

References

- [1]. “WHO director-general's opening remarks at the media briefing on COVID-19 - March 11 2020,” accessed Jun. 2, 2020. [Online]. Available: https://www.who.int/dg/speeches/detail/who-director-general-s-opening-remarks-at-the-media-briefing-on-covid-19

- [2]. Chowdhury M. E. et al., “Can AI help in screening viral and COVID-19 pneumonia?,” 2020, arXiv:2003.13145.

- [3]. “WHO coronavirus (COVID-19) situation report-189,” accessed Jul. 28, 2020. [Online]. Available: https://www.who.int/docs/default-source/coronaviruse/situation-reports/20200727-covid-19-sitrep-189.pdf?sfvrsn=b93a6913_2

- [4]. “Centers for Disease Control and Prevention,” accessed May 29, 2020. [Online]. Available: https://www.cdc.gov/coronavirus/2019-ncov/cases-updates/cases-in-us.html

- [5]. Kanne J. P., Little B. P., Chung J. H., Elicker B. M., and Ketai L. H., “Essentials for radiologists on COVID-19: An update – Radiology scientific expert panel,” Radiological Society of North America, 2020.

- [6]. Ai T. et al., “Correlation of chest CT and RT-PCR testing for coronavirus disease 2019 (COVID-19) in China: A report of 1014 cases,” Radiology, vol. 296, no. 2, pp. E32–E40, 2020.

- [7]. Fang Y. et al., “Sensitivity of chest CT for COVID-19: Comparison to RT-PCR,” Radiology, vol. 296, no. 2, pp. E115–E117, 2020.

- [8]. Wang W. et al., “Detection of SARS-CoV-2 in different types of clinical specimens,” JAMA, vol. 323, no. 18, pp. 1843–1844, 2020.

- [9]. Asif S. and Wenhui Y., “Automatic detection of COVID-19 using X-ray images with deep convolutional neural networks and machine learning,” medRxiv, 2020.

- [10]. Wang D. et al., “Clinical characteristics of 138 hospitalized patients with 2019 novel coronavirus–infected pneumonia in Wuhan, China,” JAMA, vol. 323, no. 11, pp. 1061–1069, 2020.

- [11]. Chen N. et al., “Epidemiological and clinical characteristics of 99 cases of 2019 novel coronavirus pneumonia in Wuhan, China: A descriptive study,” Lancet, vol. 395, no. 10223, pp. 507–513, 2020.

- [12]. Huang C. et al., “Clinical features of patients infected with 2019 novel coronavirus in Wuhan, China,” Lancet, vol. 395, no. 10223, pp. 497–506, 2020.

- [13]. Li Q. et al., “Early transmission dynamics in Wuhan, China, of novel coronavirus–infected pneumonia,” New England J. Med., 2020.

- [14]. Corman V. M. et al., “Detection of 2019 novel coronavirus (2019-nCoV) by real-time RT-PCR,” Eurosurveillance, vol. 25, no. 3, 2020, Art. no. 2000045.

- [15]. Chu D. K. et al., “Molecular diagnosis of a novel coronavirus (2019-nCoV) causing an outbreak of pneumonia,” Clin. Chem., vol. 66, no. 4, pp. 549–555, 2020.

- [16]. Chung M. et al., “CT imaging features of 2019 novel coronavirus (2019-nCoV),” Radiology, vol. 295, no. 1, pp. 202–207, 2020.

- [17]. Hosseiny M., Kooraki S., Gholamrezanezhad A., Reddy S., and Myers L., “Radiology perspective of coronavirus disease 2019 (COVID-19): Lessons from severe acute respiratory syndrome and middle east respiratory syndrome,” Amer. J. Roentgenol., vol. 214, no. 5, pp. 1078–1082, 2020.

- [18]. Salehi S., Abedi A., Balakrishnan S., and Gholamrezanezhad A., “Coronavirus disease 2019 (COVID-19): A systematic review of imaging findings in 919 patients,” Amer. J. Roentgenol., pp. 1–7, 2020.

- [19]. Ng M.-Y. et al., “Imaging profile of the COVID-19 infection: Radiologic findings and literature review,” Radiol.: Cardiothoracic Imag., vol. 2, no. 1, 2020, Paper e200034.

- [20]. Tahir A. M. et al., “A systematic approach to the design and characterization of a smart insole for detecting vertical ground reaction force (vGRF) in gait analysis,” Sensors, vol. 20, no. 4, p. 957, 2020.

- [21]. Chowdhury M. E. et al., “Real-time smart-digital stethoscope system for heart diseases monitoring,” Sensors, vol. 19, no. 12, 2019, Art. no. 2781.

- [22]. Chowdhury M. E. et al., “Wearable real-time heart attack detection and warning system to reduce road accidents,” Sensors, vol. 19, no. 12, 2019, Art. no. 2780.

- [23]. Kallianos K. et al., “How far have we come? Artificial intelligence for chest radiograph interpretation,” Clin. Radiol., 2019.

- [24]. Wang L. and Wong A., “COVID-Net: A tailored deep convolutional neural network design for detection of COVID-19 cases from chest radiography images,” 2020, arXiv:2003.09871.

- [25]. Ozturk T., Talo M., Yildirim E. A., Baloglu U. B., Yildirim O., and Rajendra Acharya U., “Automated detection of COVID-19 cases using deep neural networks with X-ray images,” Comput. Biol. Med., 2020, Art. no. 103792, doi: 10.1016/j.compbiomed.2020.103792.

- [26]. Hemdan E. E.-D., Shouman M. A., and Karar M. E., “COVIDX-Net: A framework of deep learning classifiers to diagnose COVID-19 in X-ray images,” 2020, arXiv:2003.11055.

- [27]. Bassi P. R. and Attux R., “A deep convolutional neural network for COVID-19 detection using chest X-rays,” 2020, arXiv:2005.01578.

- [28]. Narin A., Kaya C., and Pamuk Z., “Automatic detection of coronavirus disease (COVID-19) using X-ray images and deep convolutional neural networks,” 2020, arXiv:2003.10849.

- [29]. Apostolopoulos I. D. and Mpesiana T. A., “COVID-19: Automatic detection from X-ray images utilizing transfer learning with convolutional neural networks,” Phys. Eng. Sci. Med., p. 1, 2020.

- [30]. Farooq M. and Hafeez A., “COVID-ResNet: A deep learning framework for screening of COVID19 from radiographs,” 2020, arXiv:2003.14395.

- [31]. Pereira R. M., Bertolini D., Teixeira L. O., Silla C. N., Jr., and Costa Y. M. G., “COVID-19 identification in chest X-ray images on flat and hierarchical classification scenarios,” Comput. Methods Programs Biomed., vol. 194, 2020, Art. no. 105532.

- [32]. Li Y., Xu X., Li B., Ye F., and Dong Q., “Circular regional mean completed local binary pattern for texture classification,” J. Electron. Imag., vol. 27, no. 4, 2018, Paper 043024.

- [33]. Huang D., Shan C., Ardabilian M., Wang Y., and Chen L., “Local binary patterns and its application to facial image analysis: A survey,” IEEE Trans. Syst., Man, Cybern., Part C Appl. Rev., vol. 41, no. 6, pp. 765–781, Nov. 2011.

- [34]. Suhas B., “Automated system for skin cancer classification,” M.S. thesis, The University of Texas at San Antonio, 2015.

- [35]. Sanghavi F. and Agaian S., “Application of local binary pattern and human visual Fibonacci texture features for classification different medical images,” in Proc. Mobile Multimedia/Image Process., Secur., Appl., 2017, Paper 102210S.

- [36]. Agaian S., Astola J., Egiazarian K., and Kuosmanen P., “Decompositional methods for stack filtering using Fibonacci p-codes,” Signal Process., vol. 41, no. 1, pp. 101–110, 1995.

- [37]. Gevorkian D. Z., Egiazarian K. O., Agaian S. S., Astola J. T., and Vainio O., “Parallel algorithms and VLSI architectures for stack filtering using Fibonacci p-codes,” IEEE Trans. Signal Process., vol. 43, no. 1, pp. 286–295, Jan. 1995.

- [38]. Zhou Y., Agaian S., Joyner V. M., and Panetta K., “Two Fibonacci p-code based image scrambling algorithms,” in Proc. Image Processing: Algorithms and Systems VI, 2008, Art. no. 681215.

- [39]. Sultana M., Bhatti M. N. A., Javed S., and Jung S.-K., “Local binary pattern variants-based adaptive texture features analysis for posed and nonposed facial expression recognition,” J. Electron. Imag., vol. 26, no. 5, 2017, Art. no. 053017.

- [40]. Italian Society of Medical and Interventional Radiology (SIRM), “COVID-19 database,” [Online]. Available: https://www.sirm.org/category/senza-categoria/covid-19/

- [41]. Cohen J. P., Morrison P., and Dao L., “COVID-19 image data collection,” accessed May 25, 2020. [Online]. Available: https://github.com/ieee8023/covid-chestxray-dataset

- [42]. Mooney P., “Chest X-ray images (Pneumonia),” 2018. [Online]. Available: https://www.kaggle.com/paultimothymooney/chest-xray-pneumonia

- [43]. Boser B. E., Guyon I. M., and Vapnik V. N., “A training algorithm for optimal margin classifiers,” in Proc. 5th Annu. Workshop Comput. Learn. Theory, 1992, pp. 144–152.

- [44]. Peterson L. E., “K-nearest neighbor,” Scholarpedia, vol. 4, no. 2, 2009, Art. no. 1883.

- [45]. Pal M., “Random forest classifier for remote sensing classification,” Int. J. Remote Sens., vol. 26, no. 1, pp. 217–222, 2005.

- [46]. Hastie T., Rosset S., Zhu J., and Zou H., “Multi-class AdaBoost,” Statist. Its Interface, vol. 2, no. 3, pp. 349–360, 2009.

- [47]. Friedman J. H., “Stochastic gradient boosting,” Comput. Statist. Data Anal., vol. 38, no. 4, pp. 367–378, 2002.

- [48]. Safavian S. R. and Landgrebe D., “A survey of decision tree classifier methodology,” IEEE Trans. Syst., Man, Cybern., vol. 21, no. 3, pp. 660–674, May-Jun. 1991.

- [49]. Lalkhen A. G. and McCluskey A., “Clinical tests: Sensitivity and specificity,” Continuing Educ. Anaesth. Crit. Care Pain, vol. 8, no. 6, pp. 221–223, 2008.

- [50]. Hand D. J., “Assessing the performance of classification methods,” Int. Statist. Rev., vol. 80, no. 3, pp. 400–414, 2012.

- [51]. Maguolo G. and Nanni L., “A critic evaluation of methods for COVID-19 automatic detection from X-ray images,” 2020, arXiv:2004.12823.

- [52]. Tabik S. et al., “COVIDGR dataset and COVID-SDNet methodology for predicting COVID-19 based on chest X-ray images,” IEEE J. Biomed. Health Inform., vol. 24, no. 12, pp. 3595–3605, Dec. 2020.

- [53]. Cohen J. P., Hashir M., Brooks R., and Bertrand H., “On the limits of cross-domain generalization in automated X-ray prediction,” in Medical Imaging With Deep Learning. PMLR, 2020, pp. 136–155.

- [54]. Cohen J. P., Morrison P., and Dao L., “COVID-19 image data collection,” accessed May 25, 2020. [Online]. Available: https://github.com/ieee8023/covid-chestxray-dataset