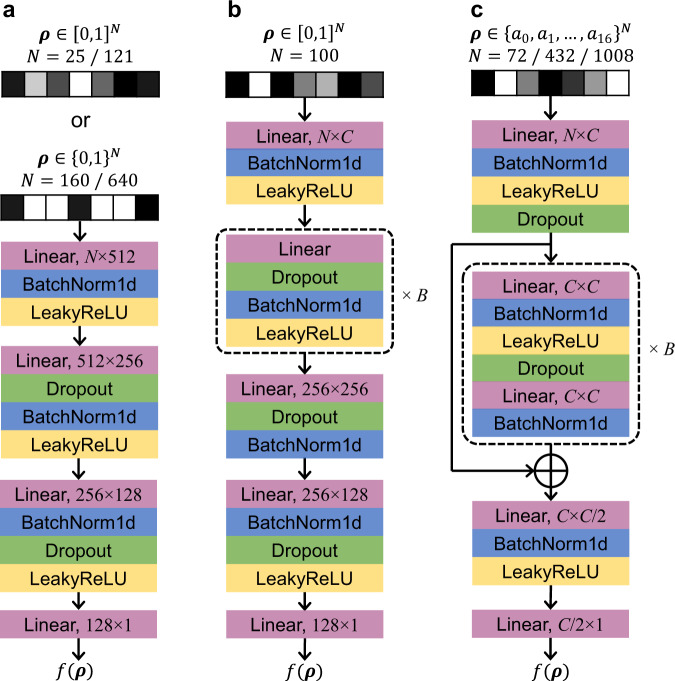

Fig. 8. Architectures of the DNNs.

The input is a design vector ρ and the output is the predicted objective function value f(ρ). “Linear” denotes a linear transformation, and “BatchNorm1d” denotes one-dimensional batch normalization, used to avoid internal covariate shift and gradient explosion for stable training (ref. 55). “LeakyReLU” is an activation function that extends ReLU by giving negative inputs a small nonzero slope instead of setting them to zero. “Dropout” is a regularization method that prevents overfitting by randomly masking nodes (ref. 68). (a) The DNN used in the compliance and fluid problems. (b) The DNN used in the heat problem, for which two architectures are used. Up to and including the 100th loop, B = 1, C = 512, and the Linear layer in the dashed box is 512 × 256. From the 101st loop onwards, B = 4, C = 512, and the four Linear layers are 256 × 512, 512 × 512, 512 × 512, and 512 × 256, respectively. (c) The DNN used in the truss optimization problems: B = 1, with C = 512 when N = 72 or 432, and C = 1024 when N = 1008.
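To make the building blocks named in the caption concrete, the following is a minimal pure-Python sketch, not the authors' implementation. It illustrates LeakyReLU and dropout on plain lists and records the Linear-layer sizes listed for the heat problem. The function names, the negative slope of 0.01, the dropout probability of 0.5, and the use of inverted dropout (rescaling the surviving entries) are illustrative assumptions; the caption does not state these values. The layer sizes are copied as written, and the caption does not say which number is the input width.

```python
import random


def leaky_relu(x, negative_slope=0.01):
    """LeakyReLU: identity for positive inputs, a small linear slope otherwise.

    The slope 0.01 is an assumed value, not taken from the caption.
    """
    return x if x > 0 else negative_slope * x


def dropout(values, p=0.5, training=True, rng=None):
    """Inverted dropout (assumed variant): zero each entry with probability p
    and rescale the surviving entries by 1 / (1 - p). Identity when not training.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    if not training or p == 0.0:
        return list(values)
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - p)
    return [v * scale if rng.random() >= p else 0.0 for v in values]


def heat_linear_shapes(loop):
    """Linear-layer sizes in the dashed box for the heat problem (panel b),
    copied from the caption; `loop` is the 1-based loop index (hypothetical name).
    """
    if loop <= 100:
        return [(512, 256)]  # B = 1
    return [(256, 512), (512, 512), (512, 512), (512, 256)]  # B = 4


if __name__ == "__main__":
    print(leaky_relu(3.0), leaky_relu(-3.0))
    print(dropout([1.0, 1.0, 1.0, 1.0], p=0.5, rng=random.Random(0)))
    print(heat_linear_shapes(100), heat_linear_shapes(101))
```

In a framework such as PyTorch, layers with the names used in the caption would replace these hand-written functions; the sketch only shows what each operation does to its inputs.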