Abstract

We propose a nonlinear, wavelet-based signal representation that is translation invariant and robust to both additive noise and random dilations. Motivated by the multi-reference alignment problem and generalizations thereof, we analyze the statistical properties of this representation given a large number of independent corruptions of a target signal. We prove the nonlinear wavelet-based representation uniquely defines the power spectrum but allows for an unbiasing procedure that cannot be directly applied to the power spectrum. After unbiasing the representation to remove the effects of the additive noise and random dilations, we recover an approximation of the power spectrum by solving a convex optimization problem, and thus reduce to a phase retrieval problem. Extensive numerical experiments demonstrate the statistical robustness of this approximation procedure.

Keywords: multi-reference alignment, method of invariants, wavelets, signal processing, wavelet scattering transform

1. Introduction

The goal in classic multi-reference alignment (MRA) is to recover a hidden signal from a collection of noisy measurements. Specifically, the following data model is assumed.

Model 1 (Classic MRA).

The classic MRA data model consists of M independent observations of a compactly supported, real-valued signal f:

$$y_j(x) = f(x - t_j) + \varepsilon_j(x), \qquad 1 \le j \le M, \tag{1.1}$$

where:

for 1 ⩽ j ⩽ M.

The translations tj are independent samples of a random variable t.

The noise terms εj are independent white noise processes with variance σ².

The signal is thus subjected to both random translation and additive noise. The MRA problem arises in numerous applications, including structural biology [32,64,65,70,71,79], single cell genomic sequencing [51], radar [43,85], crystalline simulations [76], image registration [18,40,69] and signal processing [85]. It is a simplified model relevant for cryo-electron microscopy (cryo-EM), an imaging technique for molecules that achieves near atomic resolution [11,14,75]. In this application one seeks to recover a three-dimensional reconstruction of the molecule from many noisy two-dimensional images/projections [41]. Although MRA ignores the tomographic projection of cryo-EM, investigation of the simplified model provides important insights. For example, [5,66] investigate the optimal sample complexity for MRA and demonstrate that M = Θ(σ⁶) is required to fully recover f in the low signal-to-noise regime when the translation distribution is periodic; this optimal sample complexity is the same for cryo-EM [7,82]. Recent work has established an improved sample complexity of M = Θ(σ⁴) for MRA when the translation distribution is aperiodic [1], and this rate has been shown to also hold in the more complicated setting of cryo-EM, if the viewing angles are non-uniformly distributed [72]. Problems closely related to Model 1 include the heterogeneous MRA problem, where the unknown signal f is replaced with a template of k unknown signals f1, . . . , fk [16,54,66,77], as well as multi-reference factor analysis, where the underlying (random) signal follows a low-rank factor model and one seeks to recover its covariance matrix [50].

Approaches for solving MRA generally fall into two categories: synchronization methods and methods that estimate the signal directly, i.e. without estimating nuisance parameters. Synchronization methods attempt to recover the signal by aligning the translations and then averaging. They include methods based on angular synchronization [8,15,24,67,73,84], where for each pair of signals the best pairwise shift is computed and then the translations are estimated from this pairwise information [6], and semi-definite programming [4,9,10,25], which approximates the quasi-maximum likelihood estimator of the shifts by relaxing a non-convex rank constraint. However, these methods fail in the low signal-to-noise regime. Methods that estimate the signal directly include both the method of moments [44,48,72] and expectation maximization, or EM-type, algorithms [1,30]; a number of EM-type algorithms have also been developed for the more complicated cryo-EM problem [33,68]. An important special case of the method of moments is the method of invariants, which seeks to recover f by computing translation invariant features, and thus avoids aligning the translations. However, the task is a difficult one, as a complete representation is needed to recover the signal, and yet the representation may be difficult to invert and corrupted by statistical bias. Generally, the signal is recovered from translation invariant moments, which are estimated in the Fourier domain [29,44]. Recent work [5,14] utilizes such Fourier invariants (mean, power spectrum and bispectrum) and recovers f by solving a non-convex optimization problem on the manifold of phases.

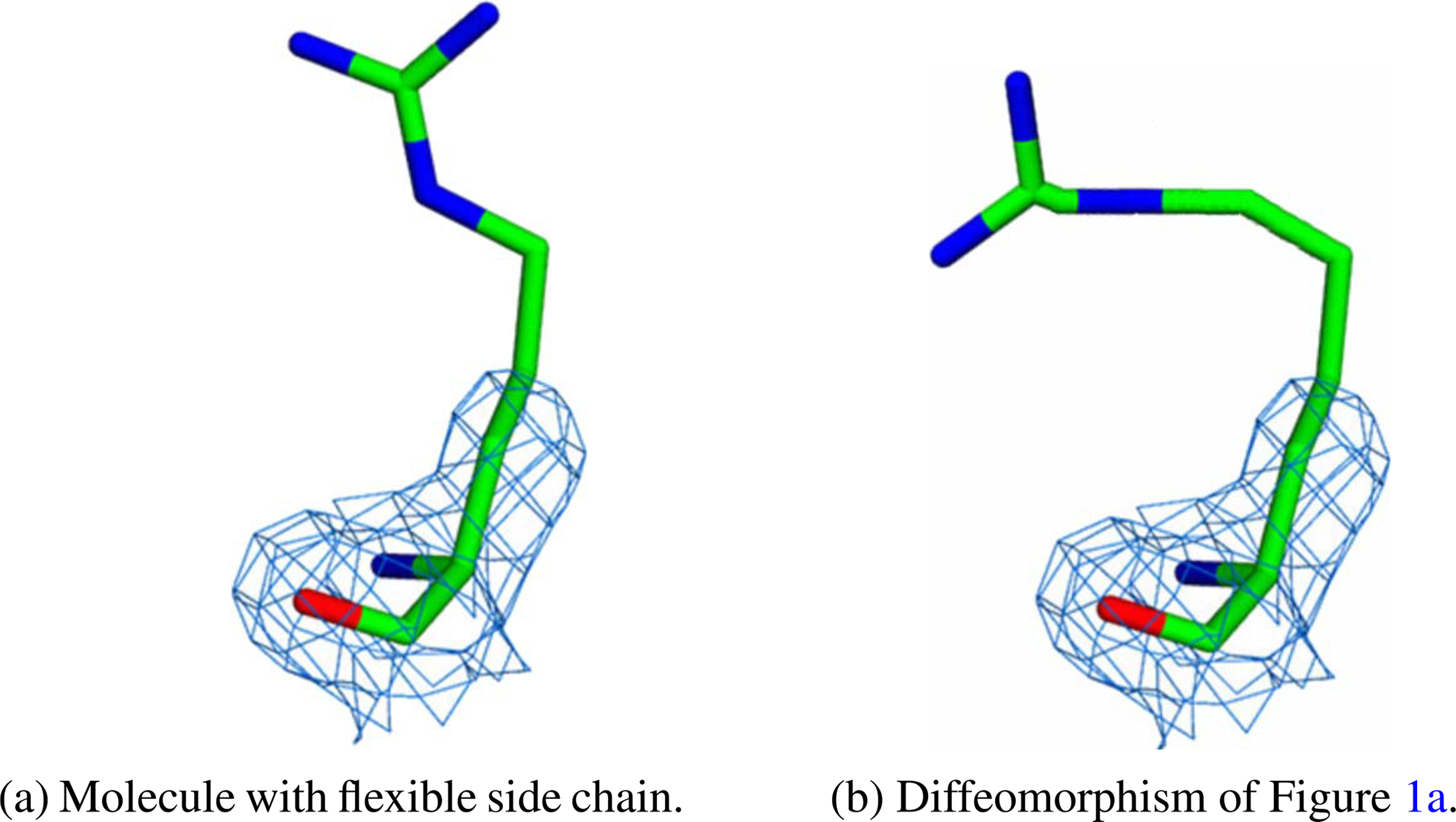

Classic MRA however fails to capture many of the biological phenomena arising in molecular imaging, such as the random rotations of the molecules and the tomographic projection associated with the imaging of three-dimensional objects. Another shortcoming is that the model fails to capture the dynamics that arise from flexible regions in macromolecular structures. These flexible regions are very important in structural biology, for example in understanding molecular interactions [36,39,52,53] and molecular recognition of epigenetic regulators of histone tails [17,31,58]. The large-scale dynamics of these regions makes imaging challenging [81], and thus sample preparation in cryo-EM generally seeks to minimize these dynamics by focusing on well-folded macromolecules frozen in vitreous ice [63]. However, this ‘may severely impact... the nature of the intrinsic dynamics and interactions displayed by macromolecules’ [63]. Although modern cryo-EM is making great strides in understanding flexible systems [3,37,38,59], formulating models that are more capable of capturing the motions associated with the flexible regions of macromolecules could open the door to applying cryo-EM more broadly, i.e. to less well-folded macromolecules. Mathematically, the motion of the flexible region can be modeled as a diffeomorphism. See Fig. 1, which shows a molecule with a flexible side chain (1(a)) and a diffeomorphism resulting from movement of the flexible region (1(b)). Figure 1(a) is taken from [63], and Fig. 1(b) was obtained by deforming it.

Fig. 1.

Dynamics arising from flexible regions in macromolecular structures [63].

This article thus generalizes the classic MRA problem to include a random diffeomorphism. Specifically, we consider recovering a hidden signal f from

$$y_j(x) = L_{\tau_j} f(x - t_j) + \varepsilon_j(x), \qquad 1 \le j \le M,$$

where Lτ is a dilation operator that dilates by a factor of (1−τ). The dilation operator Lτ is a simplified model for more general diffeomorphisms Lζ f(x) = f(ζ(x)), since in the simplest case when ζ(x) is affine, Lζ simply translates and dilates f (see Section 2.1). Dilations are also relevant for the analysis of time-warped audio signals, which can arise from the Doppler effect and in speech processing and bioacoustics. For example, [60–62] consider a stationary random signal f(x), which is time-warped, i.e. , and use a maximum likelihood approach to estimate ζ. In [27,28], a similar stochastic time warping model is analyzed using wavelet based techniques. The noisy dilation MRA model considered here corresponds to the simplest case of time-warping, when ζ is an affine function. This special case is in fact very important in imaging applications [22,23,46,57,69,80], where it is critical to compute features which are scale invariant, as objects are naturally dilated by the ‘zoom’ of an image.

A new approach is needed to solve this more general MRA problem, as Fourier invariants will fail, being unstable to the action of diffeomorphisms, including dilations. The instability occurs in the high frequencies, where even a small diffeomorphism can significantly alter the Fourier modes. We instead propose wavelet coefficient norms as invariants, using a continuous wavelet transform. This approach is inspired by the invariant scattering representation of [56], which is provably stable to the actions of small diffeomorphisms. However, here we replace local averages of the modulus of the wavelet coefficients with global averages (i.e. integrations) of the modulus squared, thus providing rigid invariants that can be statistically unbiased. Similar invariant coefficients have been utilized in a number of applications including predicting molecular properties [34,35] and quantum chemical energies [45], and in microcanonical ensemble models for texture synthesis [19]. Recent work [42] has also generalized such coefficients to graphs.

1.1. Notation

The Fourier transform of a signal is

We remind the reader that compactly supported functions are in . The power spectrum is the nonlinear transform that maps f to

$$(Pf)(\omega) = |\hat{f}(\omega)|^2.$$

We denote f(x) ⩽ Cg(x) for some absolute constant C by f(x) ≲ g(x). We also write f(x) = O(g(x)) if |f(x)| ⩽ Cg(x) for all x ⩾ x0 for some constants x0, C > 0; f(x) = o(g(x)) denotes f(x)/g(x) → 0 as x → ∞; f(x) = Θ(g(x)) denotes C1g(x) ⩽ |f(x)| ⩽ C2g(x) for all x ⩾ x0 for some constants x0, C1, C2 > 0. The minimum of a and b is denoted a ∧ b, and the maximum by a ∨ b.

2. MRA models and the method of invariants

Standard MRA models are generalized to models that include deformations of the underlying signal in Section 2.1. Section 2.2 reviews power spectrum invariants and introduces wavelet coefficient invariants. Theorem 2.4 proves wavelet coefficient invariants computed with a continuous wavelet transform and a suitable mother wavelet are equivalent to the power spectrum, showing there is no information loss in the transition from one representation to the other.

2.1. MRA data models

A standard MRA scenario considers the problem of recovering a signal in which one observes random translations of the signal, each of which is corrupted by additive noise. The problem is particularly difficult when the signal-to-noise ratio (SNR) is low, as registration methods become intractable. In [5,13,14,16,54,74] the authors propose a method using Fourier-based invariants, which are invariant to translations and thus eliminate the need to register signals.

A more general MRA scenario incorporates random deformations of the signal f, which could be used to model underlying physical variability that is not captured by rigid transformations and additive noise models. For example [4,7] consider a discrete signal f corrupted by an arbitrary group action, [47,85] consider random deformations arising in RADAR and [2] considers a generalization of MRA where signals are rescaled by random constants. Another natural mathematical model is small, random diffeomorphisms, which leads to observations of the form

$$y_j(x) = L_{\zeta_j} f(x - t_j) + \varepsilon_j(x), \qquad 1 \le j \le M, \tag{2.1}$$

where ζj is a random diffeomorphism, tj is a random translation and the signals εj(x) are independent white noise random processes. The transform Lζ is the action of the diffeomorphism ζ on f,

If , then one can verify .

One of the keys to the Fourier invariant approach of [5,13,14,16,54,74] is the authors can unbias the Fourier invariants of the noisy signals, thus allowing them to devise an unbiased estimator of the Fourier invariants of the signal f (or a mixture of signals in the heterogeneous MRA case). For the diffeomorphism model (2.1) this would require developing a procedure for unbiasing the (Fourier) invariants of against both additive noise and random diffeomorphisms.

In order to get a handle on the difficulties associated with the proposed diffeomorphism model, in this paper we consider random dilations of the signal f, which corresponds to restricting the diffeomorphism to be of the form

Specifically, we assume the following noisy dilation MRA model.

Model 2 (Noisy dilation MRA data model).

The noisy dilation MRA data model consists of M independent observations of a compactly supported, real-valued signal :

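$$y_j(x) = L_{\tau_j} f(x - t_j) + \varepsilon_j(x), \qquad 1 \le j \le M, \tag{2.2}$$

where Lτ is an L¹ normalized dilation operator,

$$L_\tau f(x) = (1-\tau)^{-1} f\big((1-\tau)^{-1} x\big).$$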

In addition, we assume the following:

for 1 ⩽ j ⩽ M.

The translations tj are independent samples of a random variable t.

The dilation parameters τj are independent samples of a bounded, symmetric random variable τ satisfying E(τ) = 0 and Var(τ) = η².

The noise terms εj are independent white noise processes with variance σ².

Remark 2.1

The interval is arbitrary and can be replaced with any interval of length 1. In addition, the spatial box size is arbitrary, i.e. , can be replaced with . All results still hold with replacing σ wherever it appears.

Thus, the hidden signal f is supported on an interval of length 1, and we observe M independent instances of the signal that have been randomly translated, randomly dilated and corrupted by additive white noise. We assume the hidden signal is real, but the proposed methods can also handle complex-valued signals with minor modifications. Recall ε(x) is a white noise process if ε(x) = dB_x, i.e. it is the derivative of a Brownian motion with variance σ².
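As an illustration of Model 2, the following is a minimal simulation sketch on a uniform grid. Several choices here are assumptions made for the example and are not specified by the model: the dilation parameter is drawn from a uniform distribution with variance η², the translations are uniform in a small window (`max_shift`), the noise is Gaussian, and `gabor` is a hypothetical test signal. The per-sample noise variance σ²/dx is one common way to discretize white noise.

```python
import numpy as np

def dilate(f, tau):
    """Return the L^1-normalized dilation x -> f(x / (1 - tau)) / (1 - tau)."""
    scale = 1.0 - tau
    return lambda x: f(x / scale) / scale

def simulate_noisy_dilation_mra(f, x, M, eta, sigma, max_shift, rng):
    """Draw M observations y_j(x) = (L_{tau_j} f)(x - t_j) + eps_j(x) on the grid x."""
    dx = x[1] - x[0]
    half_width = np.sqrt(3.0) * eta  # uniform on [-a, a] has variance a^2 / 3
    taus = rng.uniform(-half_width, half_width, size=M)
    shifts = rng.uniform(-max_shift, max_shift, size=M)
    clean = np.stack([dilate(f, tau)(x - t) for tau, t in zip(taus, shifts)])
    # Discretized white noise (assumption): per-sample variance sigma^2 / dx,
    # so Riemann sums against a test function phi have variance ~ sigma^2 ||phi||_2^2.
    noise = sigma / np.sqrt(dx) * rng.standard_normal(clean.shape)
    return clean + noise, taus, shifts

def gabor(x):
    """Hypothetical test signal: a Gaussian-windowed cosine."""
    return np.exp(-200.0 * x**2) * np.cos(40.0 * x)

rng = np.random.default_rng(0)
x = np.linspace(-0.5, 0.5, 512, endpoint=False)
y, taus, shifts = simulate_noisy_dilation_mra(
    gabor, x, M=50, eta=0.1, sigma=0.25, max_shift=0.1, rng=rng
)
```

With the L¹ normalization, the dilation preserves the integral of the signal, which gives a quick sanity check on `dilate`.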

While the noisy dilation MRA model does not capture the full richness of the diffeomorphism model, it already presents significant mathematical difficulties. Indeed, as we show in Section 5, Fourier invariants, specifically the power spectrum, cannot be used to form accurate estimators under the action of dilations and random additive noise. The reason is that Fourier measurements are not stable to the action of small dilations (measured here by |τ|), since the displacement of $\hat{f}((1-\tau)\omega)$ relative to $\hat{f}(\omega)$ depends on |ω|. Intuitively, high-frequency modes are unstable, and yet high frequencies are often critical; for example, removing high frequencies increases the sample complexity needed to distinguish between signals in a heterogeneous MRA model [5]. We thus replace Fourier-based invariants with wavelet coefficient invariants, which are defined in Section 2.2. As we show, the wavelet invariants of the signal f can be accurately estimated from wavelet invariants of the noisy signals yj, with no information loss relative to the power spectrum of f.

For future reference we also define the following dilation MRA model, which includes random translations and random dilations but no additive noise. Thus, Models 1 and 3 are both special cases of Model 2.

Model 3 (Dilation MRA data model).

The dilation MRA data model consists of M independent observations of a compactly supported, real-valued signal :

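$$y_j(x) = L_{\tau_j} f(x - t_j), \qquad 1 \le j \le M, \tag{2.3}$$

where Lτ is an L¹ normalized dilation operator,

$$L_\tau f(x) = (1-\tau)^{-1} f\big((1-\tau)^{-1} x\big).$$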

In addition, we assume (i)–(iii) of Model 2.

2.2. Method of invariants

We now discuss how invariant representations can be used to solve MRA data models and introduce the wavelet invariants used in this article.

2.2.1. Motivation and related work

Let Ttf(x) = f(x − t) denote the operator that translates by t acting on a signal f. Invariant measurement models seek a representation in a Banach space such that

$$\Phi(T_t f) = \Phi(f) \quad \text{for all } t \in \mathbb{R}. \tag{2.4}$$

In MRA problems, one additionally requires that

$$\Phi(f) = \Phi(g) \iff f = T_t g \ \text{ for some } t \in \mathbb{R}. \tag{2.5}$$

The first condition (2.4) removes the need to align random translations of the signal f, whereas the second condition (2.5) ensures that if one can estimate Φ(f) from the collection , then one can recover an estimate of f (up to translation) by solving

| (2.6) |

where is the Banach space norm.

When the observed signals are corrupted by more than just a random translation, though, as in Model 2, estimating Φ(f) from is not always straightforward. Indeed, one would like to compute

$$\frac{1}{M}\sum_{j=1}^{M} \Phi(y_j), \tag{2.7}$$

but the quantity is not always an unbiased estimator of Φ(f), meaning that . In order to circumvent this issue, one must select a representation Φ such that

| (2.8) |

where is a bias term depending on the choice of Φ, f, and the signal corruption model . If (2.8) holds and if we can compute a such that for , then one can amend (2.7) to reduce the bias

in which case

almost surely by the law of large numbers. The main difficulty therefore is twofold. On the one hand, one must design a representation Φ that satisfies (2.4), (2.5) and (2.8) with a bias b that can be estimated; on the other hand, the optimization (2.6) must be tractable. For random translation plus additive noise models (i.e., Model 1), the authors of [5,14] describe a representation Φ based on Fourier invariants that satisfies the outlined requirements and for which one can solve (2.6) despite the optimization being non-convex. The Fourier invariants include $\hat{f}(0)$ (i.e. the integral of f), the power spectrum of f and the bispectrum of f. Each invariant captures successively more information in f. While $\hat{f}(0)$ carries limited information, the power spectrum recovers the magnitude of the Fourier transform, namely it recovers the non-negative, real-valued function ρ(ω) such that $\hat{f}(\omega) = \rho(\omega)e^{i\Theta(\omega)}$, but the phase information Θ(ω) is lost. Since $|\widehat{T_t f}(\omega)| = |\hat{f}(\omega)|$, the power spectrum is invariant to translations as the Fourier modulus kills the phase factor induced by a translation t of f. However, it is in general not possible to recover a signal from its power spectrum, although in certain special cases the phase information can be resolved; results along these lines are in the field of phase retrieval [26,78]. The bispectrum is also translation invariant and invertible so long as $\hat{f}(\omega) \neq 0$ [66].
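The translation invariance of the power spectrum, and the loss of phase information, can be checked numerically. The sketch below uses a discrete, periodic stand-in for the continuum setting: the test signal, the grid, and the circular shift of 37 samples are all assumptions chosen for illustration.

```python
import numpy as np

def power_spectrum(f):
    """Discrete power spectrum |DFT(f)|^2 of a sampled signal."""
    return np.abs(np.fft.fft(f)) ** 2

x = np.linspace(-0.5, 0.5, 256, endpoint=False)
f = np.exp(-200.0 * x**2) * np.cos(40.0 * x)  # hypothetical test signal
g = np.roll(f, 37)                            # circular translation of f

# The translation only multiplies each Fourier coefficient by a unit-modulus
# phase factor, so the moduli agree while the coefficients themselves do not.
same_power = np.allclose(power_spectrum(f), power_spectrum(g))
same_fourier = np.allclose(np.fft.fft(f), np.fft.fft(g))
```

Here `same_power` holds while `same_fourier` does not, which is the discrete counterpart of the statement that the Fourier modulus removes the phase factor induced by a translation.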

In Section 5 we show that it is impossible to significantly reduce the power spectrum bias for Model 2, which includes translations, dilations and additive noise. We thus propose replacing the power spectrum with the norms of the wavelet coefficients of the signal f. These invariants satisfy (2.4) and (2.8) for Model 2 and yield a convex formulation of (2.6). They do not satisfy (2.5) for general f, but Theorem 2.4 in Section 2.2.2 shows that knowing the wavelet invariants of f is equivalent to knowing the power spectrum of f, which means that any phase retrieval setting in which recovery is possible will also be possible with the specified wavelet invariants. For example, if the signal lives in a spline or shift invariant space in addition to being real-valued, then it can be recovered from its phaseless measurements [26,78].

2.2.2. Wavelet invariants

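We now define the wavelet invariants used in this article. A wavelet is a waveform that is localized in both space and frequency and has zero average,

$$\int_{\mathbb{R}} \psi(x)\,dx = 0.$$

Note throughout this article ψ will always denote a wavelet with zero average, satisfying ‖ψ‖₂ = 1 as well as the classic admissibility condition $\int_{\mathbb{R}} |\hat{\psi}(\omega)|^2\,|\omega|^{-1}\,d\omega < \infty$. A dilation of the wavelet by a factor λ ∈ (0,∞) is denoted

$$\psi_\lambda(x) = \lambda^{1/2}\psi(\lambda x),$$

where the normalization guarantees that ‖ψλ‖₂ = ‖ψ‖₂ = 1. The continuous wavelet transform W computes

$$Wf = \big\{ (f * \psi_\lambda)(x) : \lambda \in (0,\infty),\ x \in \mathbb{R} \big\}.$$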

The parameter λ corresponds to a frequency variable. Indeed, if ξ0 is the central frequency of ψ, the wavelet coefficients f ∗ ψλ recover the frequencies of f in a band of size proportional to λ centered at λξ0. Thus, high frequencies are grouped into larger packets, which we shall use to obtain a stable, invariant representation of f.

The wavelet transform Wf is equivariant to translations but not invariant. Integrating the wavelet coefficients over x yields translation invariant coefficients, but they are trivial since $\int (f * \psi_\lambda)(x)\,dx = 0$ whenever ψ has zero average. We therefore compute norms in the x variable, yielding the following nonlinear wavelet invariants:

Definition 2.1 (Wavelet invariants).

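The L² wavelet invariants of a real-valued signal f are given by

$$(Sf)(\lambda) = \|f * \psi_\lambda\|_2^2, \qquad \lambda \in (0,\infty), \tag{2.9}$$

where $\psi_\lambda(x) = \lambda^{1/2}\psi(\lambda x)$ are dilations of a mother wavelet ψ.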

Throughout this article ψ can be taken as a Morlet wavelet, in which case ψ is constructed to have frequency centered at ξ by for , but results hold more generally for what we refer to as k-admissible wavelets, where k ⩾ 0 is an even integer. See Appendix A for a precise description of this admissibility criteria. The wavelet invariants can be expressed in the frequency domain as

which motivates the following definition of ‘wavelet invariant derivatives’.

Definition 2.2 (Wavelet invariant derivatives).

The n-th derivative of (Sf)(λ) is defined as

Remark 2.2

Definition 2.1 assumes , which allows the wavelet ψ to be either real or complex. Our results can easily be extended to complex f, but a strictly complex wavelet would be needed, with Sf(λ) computed for all λ ∈ (−∞, ∞) \ 0.

Remark 2.3

For a discrete signal of length n, computing the wavelet invariants via a continuous wavelet transform is O(n²), while computing the power spectrum is O(n log n). Thus, one pays a computational cost to achieve greater stability with no loss of information. On the other hand, if wavelet invariants are computed for a dyadic wavelet transform (i.e. only for O(log n) λ’s), the computational cost is the same and stability is maintained, but more information is lost.
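The computation discussed in Remark 2.3 can be sketched for a sampled signal as follows. This is an illustrative discretization, not the implementation used in the experiments: it evaluates the invariants in the frequency domain via the discrete Parseval identity, and it assumes a Morlet-type wavelet specified through its Fourier transform, left unnormalized (so ‖ψ‖₂ ≠ 1 and the invariants are off by a constant factor). The central frequency `xi` and the test signal are hypothetical choices.

```python
import numpy as np

def morlet_hat(omega, xi=3.0 * np.pi / 4.0):
    """Fourier transform of a Morlet-type wavelet, up to a constant factor.

    The subtracted Gaussian enforces psi_hat(0) = 0, i.e. zero average.
    """
    return np.exp(-(omega - xi) ** 2 / 2.0) - np.exp(-xi**2 / 2.0) * np.exp(-omega**2 / 2.0)

def wavelet_invariants(f, dx, lambdas, psi_hat=morlet_hat):
    """Approximate (Sf)(lam) = ||f * psi_lam||_2^2 for samples f on a grid of spacing dx."""
    n = f.shape[0]
    omega = 2.0 * np.pi * np.fft.fftfreq(n, d=dx)  # angular frequency grid
    power = np.abs(np.fft.fft(f)) ** 2             # discrete power spectrum
    S = np.empty(len(lambdas))
    for i, lam in enumerate(lambdas):
        # For psi_lam(x) = lam^{1/2} psi(lam x): |psi_lam_hat(w)|^2 = |psi_hat(w / lam)|^2 / lam
        filt = np.abs(psi_hat(omega / lam)) ** 2 / lam
        S[i] = dx / n * np.sum(power * filt)       # discrete Parseval identity
    return S

x = np.linspace(-0.5, 0.5, 512, endpoint=False)
dx = x[1] - x[0]
f = np.exp(-200.0 * x**2) * np.cos(40.0 * x)       # hypothetical test signal
lambdas = 2.0 ** np.arange(0, 8)                   # a dyadic set of scales
S = wavelet_invariants(f, dx, lambdas)
S_shifted = wavelet_invariants(np.roll(f, 50), dx, lambdas)
```

Each scale costs O(n) once the FFT is available, so evaluating O(n) scales gives the O(n²) cost of the remark, while the dyadic choice above uses only O(log n) scales. The arrays `S` and `S_shifted` agree, reflecting translation invariance.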

Remark 2.4

When is continuous, Definition 2.2 reduces to a normal derivative, i.e. one can check that . However, when Pf is not continuous, in general and (Sf)(n)(λ) is more convenient for controlling the error of the estimators proposed in this article. Throughout this article, the notation (Sf)(n)(λ) will thus denote the derivative of Definition 2.2 and will denote the standard derivative.

Under mild conditions, one can show that . The values λ = 2^j for j ∈ ℤ correspond to rigid versions of first-order wavelet scattering invariants [56]. The continuous wavelet transform Wf is extremely redundant; indeed, for suitably chosen mother wavelets, the dyadic wavelet transform with λ = 2^j for j ∈ ℤ is a complete representation of f. However, the corresponding operator S restricted to λ = 2^j is not invertible. When one utilizes every frequency λ ∈ (0,∞), though, the resulting norms uniquely determine the power spectrum of f, so long as the wavelet ψ satisfies a type of independence condition.

Condition 2.3

Define

If for any finite sequence of distinct positive frequencies, the collection is linearly independent functions of λ, we say the wavelet ψ satisfies the linear independence condition.

Remark 2.5

Condition 2.3 is stated in terms of to avoid assumptions on whether ψ is real or complex. When , for ω ⩾ 0. When ψ is complex analytic, . When but not complex analytic, simply incorporates a reflection of about the origin. Since we assume uniquely defines (Sf)(λ), since by the Plancherel and Fourier convolution theorems.

Theorem 2.4

Let and assume ψ satisfies Condition 2.3 and has compact support. Then,

Proof.

First assume Pf = Pg, which means $|\hat{f}(\omega)| = |\hat{g}(\omega)|$ for almost every ω. Using the Plancherel and Fourier convolution theorems,

Now suppose Sf = Sg. Since Sf and Sg are continuous in λ, we have

Since we have and thus . By interpolation we have , and the same for Pg. By applying Lemma 2.1 (stated below) with p(ω) = (Pf)(ω) − (Pg)(ω) (note p is continuous since ), we conclude Pf = Pg for almost every ω. □

Lemma 2.1

Let be continuous and assume p(ω) = p(−ω), has compact support and Condition 2.3. Then,

The proof of Lemma 2.1 is in Appendix C. We remark that many wavelets satisfy Condition 2.3 and have compactly supported Fourier transform, so Theorem 2.4 is broadly applicable. For example, Proposition 2.1 below proves that any complex analytic wavelet with compactly supported Fourier transform satisfies Condition 2.3. Morlet wavelets satisfy Condition 2.3 (see Lemma C.1 in Appendix C) but do not have compactly supported Fourier transform; however, does have fast decay for a Morlet wavelet and numerically we observe no issues. We also note, the assumption that has compact support in Theorem 2.4 can be removed if f, g are bandlimited. The following Proposition, proved in Appendix C, gives some sufficient conditions guaranteeing Condition 2.3.

Proposition 2.1

The following are sufficient to guarantee Condition 2.3:

has a compact support contained in the interval [a, b], where a and b have the same sign, e.g. complex analytic wavelets with compactly supported Fourier transform.

and there exists an N such that all derivatives of order at least N are non-zero at ω = 0, e.g. the Morlet wavelet.

Remark 2.6

In practice, Pf, Sf are implemented as discrete vectors, and Sf is obtained from Pf via matrix multiplication, i.e. Sf = F(Pf) for some real matrix F with $F^T F$ strictly positive definite. Thus, $\|Pf - Pg\|_2 \le \sigma_{\min}^{-1}\,\|Sf - Sg\|_2$, where σmin > 0 is the smallest singular value of the matrix F, and the spectral decay of F, which can be explicitly computed, thus determines the stability of the representation. The smoother the wavelet, the more rapidly the spectrum decays, since when $P\psi \in C^p$, $F^T F$ is defined by a $C^p$ kernel and thus has eigenvalues that decay like $o(1/n^{p+1})$ [20]. There is thus a tradeoff between smoothness and stability. In this article we choose smoothness over stability, since smoothness is required for unbiasing noisy dilation MRA, and in our experiments the Morlet wavelet yielded the best results. We therefore invert the representation by solving an optimization problem that is initialized to be close to the desired solution (see Section 6.5), and we avoid computing the pseudo-inverse of F, which is unstable for our smooth wavelet.
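The role of the singular values of F can be explored numerically. The following sketch builds one possible discretization of the map from Pf to Sf on positive frequencies and computes its singular values. The quadrature rule, the frequency and scale grids, and the unnormalized Morlet-type filter are all assumptions made for illustration, so the numbers it produces indicate only the qualitative behavior (rapid spectral decay for a smooth wavelet).

```python
import numpy as np

def morlet_hat(omega, xi=3.0 * np.pi / 4.0):
    """Fourier transform of a Morlet-type wavelet, up to a constant factor."""
    return np.exp(-(omega - xi) ** 2 / 2.0) - np.exp(-xi**2 / 2.0) * np.exp(-omega**2 / 2.0)

def invariant_matrix(omegas, lambdas, psi_hat=morlet_hat):
    """Matrix F with (F p)_i ~ (1 / 2 pi) * integral of p(w) |psi_hat(w / lam_i)|^2 / lam_i dw.

    Since p = Pf is even for real f, only positive frequencies are kept and
    the filter is symmetrized to account for the negative ones.
    """
    d_omega = omegas[1] - omegas[0]
    ratio = omegas[None, :] / lambdas[:, None]
    kernel = np.abs(psi_hat(ratio)) ** 2 + np.abs(psi_hat(-ratio)) ** 2
    return d_omega / (2.0 * np.pi) * kernel / lambdas[:, None]

omegas = np.linspace(0.5, 64.0, 128)    # hypothetical frequency grid
lambdas = np.linspace(0.5, 64.0, 128)   # hypothetical scale grid
F = invariant_matrix(omegas, lambdas)
singular_values = np.linalg.svd(F, compute_uv=False)  # sorted in decreasing order
```

Inspecting `singular_values` shows how quickly the spectrum of F decays for this smooth filter, which is why a direct pseudo-inverse is numerically fragile and an optimization-based inversion is the more cautious route.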

3. Unbiasing for classic MRA

In this section we consider the classic MRA model (Model 1). We discuss unbiasing results for both the power spectrum and wavelet invariants, as well as simulation results comparing the two methods. In the following proposition we establish unbiasing results for the power spectrum by rederiving some results from [14], extended to the continuum setting. The proposition is proved in Appendix D.

Proposition 3.1

Assume Model 1. Define the following estimator of (Pf)(ω):

Then with probability at least 1 − 1/t²,

| (3.1) |

We obtain an identical result for wavelet invariants (Proposition 3.2) when signals are corrupted by additive noise only. See Appendix D for the proof.

Proposition 3.2

Assume Model 1. Define the following estimator of (Sf)(λ):

Then with probability at least 1 − 1/t²,

| (3.2) |

As M → ∞, the error of both the power spectrum and wavelet invariant estimators decays to zero at the same rate, and one can perfectly unbias both representations. As demonstrated in Section 5, this is not possible for noisy dilation MRA (Model 2), as there is a non-vanishing bias term. However, a nonlinear unbiasing procedure on the wavelet invariants can significantly reduce the bias.
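A discrete analogue of additive noise unbiasing for the power spectrum can be sketched as follows. The form of the correction is an assumption tied to this discretization: with i.i.d. noise of variance σ² per sample and an unnormalized DFT, the expected noise power at every frequency is nσ², which is subtracted from the empirical average. Translations are modeled as circular shifts and the noise as Gaussian, both for illustration only.

```python
import numpy as np

def mean_power_spectrum(y):
    """Empirical average of |DFT(y_j)|^2 over the rows of y."""
    return np.mean(np.abs(np.fft.fft(y, axis=1)) ** 2, axis=0)

def unbiased_power_spectrum(y, sigma):
    """Subtract the expected noise power n * sigma^2 (unnormalized DFT, i.i.d. noise)."""
    n = y.shape[1]
    return mean_power_spectrum(y) - n * sigma**2

rng = np.random.default_rng(0)
n, M, sigma = 128, 2000, 1.0
x = np.linspace(-0.5, 0.5, n, endpoint=False)
f = np.exp(-200.0 * x**2) * np.cos(40.0 * x)      # hypothetical test signal
shifts = rng.integers(0, n, size=M)               # random circular translations
y = np.stack([np.roll(f, s) for s in shifts]) + sigma * rng.standard_normal((M, n))

true_power = np.abs(np.fft.fft(f)) ** 2
biased_err = np.linalg.norm(mean_power_spectrum(y) - true_power)
unbiased_err = np.linalg.norm(unbiased_power_spectrum(y, sigma) - true_power)
```

Comparing `biased_err` with `unbiased_err` shows the effect of removing the constant noise floor; the remaining error is the sampling fluctuation, which shrinks as M grows.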

We illustrate and compare additive noise unbiasing for power spectrum estimation using , the power spectrum method of Proposition 3.1 and , the wavelet invariant method of Proposition 3.2. To approximate (Pf) from the wavelet invariants , we apply the convex optimization algorithm described in Section 6.5 to obtain , the power spectrum approximation that best matches the wavelet invariants . Thus, throughout this article, denotes a power spectrum estimator obtained by first unbiasing wavelet invariants and then running an optimization procedure, while denotes an estimator computed by directly unbiasing the power spectrum. Our simulations compare the L2 error of both of these estimators, i.e. we compare and .

Figure 2(a) shows the uncorrupted power spectrum (red curve) of a medium frequency Gabor function, and the power spectrum after the signal is corrupted by additive noise with level σ = 2^{−3} (blue curve); the SNR of the experiment is 0.56 (see Section 6.1). Figure 2(b) shows the L² error of the power spectrum estimation for the two methods as a function of log₂(M) for a fixed SNR, and Fig. 2(c) shows the L² error as a function of log₂(σ) for a fixed M. The L² errors for the two methods are similar; however, estimation via wavelet invariants is advantageous when the sample size M is small or the additive noise level σ is large. As M becomes very large or σ very small, the power spectrum method is preferable as the smoothing procedure of the wavelet invariants may numerically erase some extremely small scale features of the original power spectrum.

Fig. 2.

Simulation results for additive noise model for medium frequency Gabor .

4. Unbiasing for dilation MRA

In this section we analyze the dilation MRA model (Model 3). We thus assume the signals have been randomly translated and dilated but there is no additive noise.

In fact there is a simple algorithm to recover f under this model. Since , is an unbiased estimator of , and so can be accurately approximated. Once is recovered, one can take any signal yj and dilate it so that , and the result will be an accurate approximation of the hidden signal f for M large. However, this approach collapses in the presence of even a small amount of additive noise. In the presence of additive noise, an alternative is to attempt a synchronization by centering each signal. The center cf of signal f can be defined in the classical way by

Since the signals yj(x − (cf + tj)) are perfectly aligned, one can thus attempt an alignment by defining . However, cyj − (cf + tj) = O(σ ∨ σ² + η), so significant errors arise in the synchronization that cannot be resolved by averaging. As our goal is ultimately to produce a method that can be extended to the noisy dilation MRA model, we abandon both the trivial solution (which cannot be extended to noisy dilation MRA) and the synchronization approach (which produces large errors) and explore a method based on empirical averages.

We first observe that random dilations cause the empirical averages $\frac{1}{M}\sum_{j=1}^{M}(Py_j)(\omega)$ and $\frac{1}{M}\sum_{j=1}^{M}(Sy_j)(\lambda)$ to be biased estimators of (Pf)(ω) and (Sf)(λ), and the bias for both is O(η²), where η² is the variance of the dilation distribution. However, if the moments of the dilation distribution are known and Pf, Sf are sufficiently smooth, one can apply an unbiasing procedure to the above estimators so that the resulting bias is $O(\eta^{k+2})$, where k ⩾ 2 is an even integer.
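To make the origin of the O(η²) bias and its removal concrete, the following is a sketch of the second-order case (k = 2) for a generic function $L \in C^4(0,\infty)$ standing in for Pf or Sf. It assumes only that τ is symmetric about zero with variance η² and that its fourth moment is O(η⁴); the implied constants depend on λ and on the derivatives of L. It is meant as a heuristic illustration, and the general-order estimators and their constants should be taken from the results of this section rather than from this sketch.

```latex
\begin{align*}
L((1-\tau)\lambda) &= L(\lambda) - \tau\lambda L'(\lambda)
  + \tfrac{1}{2}\tau^2\lambda^2 L''(\lambda)
  - \tfrac{1}{6}\tau^3\lambda^3 L'''(\lambda) + O(\tau^4), \\
\mathbb{E}\big[L((1-\tau)\lambda)\big] &= L(\lambda)
  + \tfrac{1}{2}\eta^2\lambda^2 L''(\lambda) + O(\eta^4), \\
\mathbb{E}\big[\lambda^2\,\partial_\lambda^2 L((1-\tau)\lambda)\big]
  &= \lambda^2\,\mathbb{E}\big[(1-\tau)^2 L''((1-\tau)\lambda)\big]
  = \lambda^2 L''(\lambda) + O(\eta^2), \\
\mathbb{E}\Big[L((1-\tau)\lambda)
  - \tfrac{1}{2}\eta^2\lambda^2\,\partial_\lambda^2 L((1-\tau)\lambda)\Big]
  &= L(\lambda) + O(\eta^4).
\end{align*}
```

The odd-order terms vanish in expectation by the symmetry of τ, so subtracting η²λ²/2 times the second derivative of the observed quantity cancels the leading bias term and leaves a remainder of order η⁴.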

Throughout this section, we assume k ⩾ 2 is an even integer, and define the constants Ci from the first k/2 even moments of τ by for i = 2, 4, . . . , k. Note since we assume , C2 = 1. We define the constants B2, B4, . . . , Bk by solving

| (4.1) |

for i = 2, 4, . . . , k; these constants are deterministic functions of the moments of τ. A non-recursive formula related to the Euler numbers can be derived, which defines Bi explicitly in terms of C2, . . . , Ci; however, the recursive formula (4.1) is easier to implement numerically.

We introduce two additional moment-based constants that are defined by the Ci, Bi constants:

| (4.2) |

| (4.3) |

where C0, |B0| = 1, and when i = j = 0 in (4.3), is replaced with 1.

Remark 4.1

Since the distribution of τ is bounded, we are guaranteed that T < ∞, and in general can consider both T and E to be O(1) constants. For example for the uniform distribution, and , which gives .

We utilize the following two lemmas, which are proved in Appendix E, to derive results for both the power spectrum and wavelet invariants.

Lemma 4.1

Let Fλ(τ) = L((1 − τ)λ) for some function $L \in C^{k+2}(0, \infty)$ and a random variable τ satisfying the assumptions of Section 2.1, and let k ⩾ 2 be an even integer. Assume there exist functions , such that

and define the following estimator of L(λ):

Then Gλ(τ) satisfies

where

and E is the absolute constant defined in (4.3).

Lemma 4.2

Let the assumptions and notation of Lemma 4.1 hold, and let τ1, . . . , τM be independent. Define

Then with probability at least 1 − 1/t²

The deviation of the estimator from L(λ) thus depends on two things: (1) the bias of the estimator that is $O(\eta^{k+2})$ and (2) the standard deviation of the estimator that is $O(\eta/\sqrt{M})$, since Λ(λ) = O(η).
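The interplay between bias and sampling fluctuation can be illustrated with a small Monte Carlo experiment for the second-order case. The sketch below is built on several assumptions that are not part of the lemmas: the toy function L(λ) = exp(−λ) (chosen because its derivatives are explicit), a uniform dilation distribution with variance η², and a correction that subtracts η²λ²/2 times the second λ-derivative of each observation.

```python
import numpy as np

def L(lam):
    """Toy target function (assumption): L(lam) = exp(-lam)."""
    return np.exp(-lam)

def F(lam, tau):
    """Dilated observation F_lam(tau) = L((1 - tau) * lam)."""
    return np.exp(-(1.0 - tau) * lam)

def d2F(lam, tau):
    """Second derivative of F_lam(tau) with respect to lam."""
    return (1.0 - tau) ** 2 * np.exp(-(1.0 - tau) * lam)

def naive_and_corrected_means(lam, taus, eta):
    """Empirical averages without and with the second-order correction."""
    naive = np.mean(F(lam, taus))
    corrected = np.mean(F(lam, taus) - 0.5 * eta**2 * lam**2 * d2F(lam, taus))
    return naive, corrected

rng = np.random.default_rng(0)
eta, lam, M = 0.1, 2.0, 200_000
half_width = np.sqrt(3.0) * eta  # uniform on [-a, a] has variance a^2 / 3
taus = rng.uniform(-half_width, half_width, size=M)

naive, corrected = naive_and_corrected_means(lam, taus, eta)
naive_err = abs(naive - L(lam))
corrected_err = abs(corrected - L(lam))
```

For these parameters the naive average is dominated by the O(η²) bias, while the corrected average is limited mainly by the Monte Carlo fluctuation, which is why M is taken large here.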

4.1. Power spectrum results for dilation MRA

We now show how this unbiasing procedure based on both the moments of τ and the even derivatives of Py can be used to obtain an estimator of Pf.

Proposition 4.1

Assume Model 3 and . Define the following estimator of (Pf)(ω):

where the constants Bi satisfy (4.1). Let

Then for all ω ≠ 0, with probability at least 1 − 1/t²,

| (4.4) |

where

Proof.

Since Pf is a translation invariant representation, we can ignore the translation factors and consider the model . In addition since , (Pyj)(ω) = (Pyj)(−ω) and it is sufficient to consider ω ∈ (0, ∞). Proposition 4.1 then follows directly from Lemma 4.2 with λ = ω, L = Pf since (Pyj)(ω) = (Pf)((1 − τj)ω) = Fω(τj), Λi = Ωi, and Λ = Ω. □

We postpone a discussion of the shortcomings of Proposition 4.1 to Section 4.3, where we compare the power spectrum and wavelet invariant results for dilation MRA.

4.2. Wavelet invariant results for dilation MRA

We now apply the same unbiasing procedure to the wavelet invariants. Unlike for the power spectrum, where the error may depend on the frequency ω (see (4.4) and Section 4.3), the wavelet invariant error can be uniformly bounded independently of λ with high probability. The following two lemmas establish bounds on the derivatives of (Sf)(λ) and are needed to prove Proposition 4.2; they are proved in Appendix B.

Lemma 4.3 (Low-frequency bound).

Assume and . Then the quantity |λm(Sf)(m)(λ)| can be bounded uniformly over all λ. Specifically:

for Ψm defined in (A.1).

Lemma 4.4 (High-frequency bound for differentiable functions).

Assume , and . Then the quantity |λm(Sf)(m)(λ)| can be bounded by

for Θm defined in (A.2).

When ψ is a Morlet wavelet or more generally when ψ is (k + 2)-admissible as described in Appendix A, these lemmas allow one to bound the error of the order k wavelet invariant estimator for dilation MRA in terms of the following quantities:

| (4.5) |

where Ψi, Θi are defined in (A.1), (A.2) and E is defined in (4.3).

Proposition 4.2

Assume Model 3, the notation in (4.5), and that ψ is (k + 2)-admissible. Define the following estimator of (Sf)(λ):

where the constants Bi satisfy (4.1). Then with probability at least 1 − 1/t2,

Proof.

Since Sf is a translation invariant representation, we can ignore the translation factors and consider the model . Since ψ is (k + 2)-admissible, , which guarantees (Sf)(λ) ∈ Ck+2(0, ∞). We note that since , Pf is continuous, and the Leibniz integral rule guarantees that for 1 ⩽ n ⩽ k + 2. By applying Lemma 4.3, we have for all 0 ⩽ i ⩽ k + 2, so that Lemma 4.2 holds for L(λ) = (Sf)(λ), , and R(λ) = 1. Now by applying Lemma 4.4, we have for all 0 ⩽ i ⩽ k + 2, so that Lemma 4.2 also holds for L(λ) = (Sf)(λ), , and R(λ) = 4 (note since , ). Thus, Lemma 4.2 in fact holds with ; since (Syj)(λ) = (Sf)((1 − τj)λ) = Fλ(τj), we obtain Proposition 4.2. □

Since , Proposition 4.2 guarantees that the error can be uniformly bounded independent of λ. In addition, if the signal is smooth, the error for high-frequency λ will have the favorable scaling λ−2. An important question in practice is how to choose k, i.e. what order wavelet invariant estimator minimizes the bias. Consider for example when , and . By using a second-order estimator, we can decrease the bias from O(η2) to O(η4), and we can further decrease the bias to O(η6) by choosing k = 4. However, Ψk increases very rapidly in k. Indeed, as can be seen from (A1), Ψk increases like k!. Thus, one possible heuristic (assuming η is known) is to choose where minimizes the bias upper bound kΨk+2(2Eη)k+2. Since Ψk increases factorially, Ψk ∼ (Ck)k for some constant C, and will be inversely proportional to η, that is . The following corollary of Proposition 4.2 then holds for any .

Corollary 4.1

Under the assumptions of Proposition 4.2, if Ψi(2Eη)i is decreasing for i ⩽ k + 2, then with probability at least 1 − 1/t2:

| (4.6) |

Similarly, if Θi(2Eη)i is decreasing for i ⩽ k + 2, then with probability at least 1 − 1/t2:

| (4.7) |

Remark 4.2

We observe that for a discrete lattice I of λ values, we can define the discrete 1-norm by . Assume the lattice has cardinality n, and that Ψi(2Eη)i, Θi(2Eη)i are decreasing for i ⩽ k + 2. Applying Proposition 4.2 with and a union bound over the lattice gives

with probability at least 1 − 1/s2. When n ≪ M, which is the context for MRA, the 1-norm of the error is O(ηk+2) as M → ∞.

4.3. Comparison

Although Propositions 4.2 and 4.1 at first glance appear quite similar, the wavelet invariant method has several important advantages over the power spectrum method, which we enumerate in the following remarks.

Remark 4.3

Proposition 4.2 (wavelet invariants) applies to any signal satisfying , but Proposition 4.1 requires . Thus, as k is increased, the power spectrum results apply to an increasingly restrictive function class. Furthermore, as discussed in Section 5, if the signal contains any additive noise, Pyj is not even C1, which means the unbiasing procedure of Proposition 4.1 cannot be applied. On the other hand, by choosing , Sf will inherit the smoothness of the wavelet, and the wavelet invariant results will hold for any and any k.

Remark 4.4

Since (Pfτ)(ξ) = (Pf)((1 − τ)ξ), dilation will transport the frequency content at ξ to (1 − τ)ξ, so that the displacement is τξ. Thus, when ξ is very large, |(Pf)(ξ) − (Pfτ)(ξ)| can be large even for τ small. Because the wavelet invariants bin the frequency content, and these bins become increasingly large in the high frequencies, this does not occur for wavelet invariants. More specifically, there is always a signal f and frequency ξ for which is large regardless of k. Consider for example when . Then Ωk(ξ) ∼ ξk, and . However, for M large enough, the order k wavelet invariant estimator satisfies for all λ. The wavelet invariants are thus stable for high-frequency signals, where the power spectrum fails.
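The frequency displacement described in this remark can be made concrete with a small numerical sketch. It assumes a hypothetical power spectrum consisting of a unit-height Gaussian bump centred at ξ (the function `power_spectrum` and the dilation value 0.05 are illustrative choices, not quantities taken from the experiments), and evaluates the pointwise gap |(Pf)(ξ) − (Pf)((1 − τ)ξ)| as the centre frequency grows.

```python
import numpy as np

def power_spectrum(omega, xi):
    # Hypothetical power spectrum: a unit-height Gaussian bump centred at xi.
    return np.exp(-(omega - xi) ** 2)

def pointwise_gap(xi, tau):
    # |(Pf)(xi) - (Pf_tau)(xi)|, using (Pf_tau)(xi) = (Pf)((1 - tau) * xi).
    return abs(power_spectrum(xi, xi) - power_spectrum((1.0 - tau) * xi, xi))

tau = 0.05  # a single small dilation, fixed for illustration
for xi in (8.0, 16.0, 32.0):
    print(f"xi = {xi:4.0f}  displacement = {tau * xi:.2f}  gap = {pointwise_gap(xi, tau):.3f}")
```

For a fixed small τ the displacement τξ grows linearly in ξ, so under this assumed spectrum the gap approaches the full height of the bump once τξ exceeds its width.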

Remark 4.5

For the wavelet invariants there will be a unique that minimizes kΨk+2(2Eη)k+2, and does not depend on λ. Furthermore, can be explicitly computed given the wavelet ψ and moment constant E. On the other hand, the minimum of kΩk+2(ω)(2Eω)k+2 with respect to k will depend on both the frequency ω and the signal f, so that , and it becomes unclear how to choose the unbiasing order.
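A minimal sketch of this order-selection heuristic is given below. It assumes the idealized growth Ψn = (Cn)^n exactly, with hypothetical values for the constants `C` and `E`; in practice Ψn would be computed from the wavelet via (A.1). The function names are illustrative only. The bound kΨk+2(2Eη)^(k+2) is evaluated in logarithmic form to avoid overflow, and the even order with the smallest bound is returned.

```python
import math

def log_bias_bound(k, eta, E=1.0, C=0.5):
    # log of k * Psi_{k+2} * (2*E*eta)^(k+2), assuming Psi_n = (C*n)^n.
    n = k + 2
    return math.log(k) + n * math.log(C * n) + n * math.log(2.0 * E * eta)

def best_order(eta, E=1.0, C=0.5, k_max=40):
    # Search over even orders k = 2, 4, ..., k_max for the smallest bound.
    orders = range(2, k_max + 1, 2)
    return min(orders, key=lambda k: log_bias_bound(k, eta, E, C))

for eta in (0.12, 0.06, 0.03):
    print(f"eta = {eta:.2f}  selected order = {best_order(eta)}")
```

Under these assumptions the selected order does not depend on λ and grows as η shrinks, in line with the inverse proportionality noted in Section 4.2.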

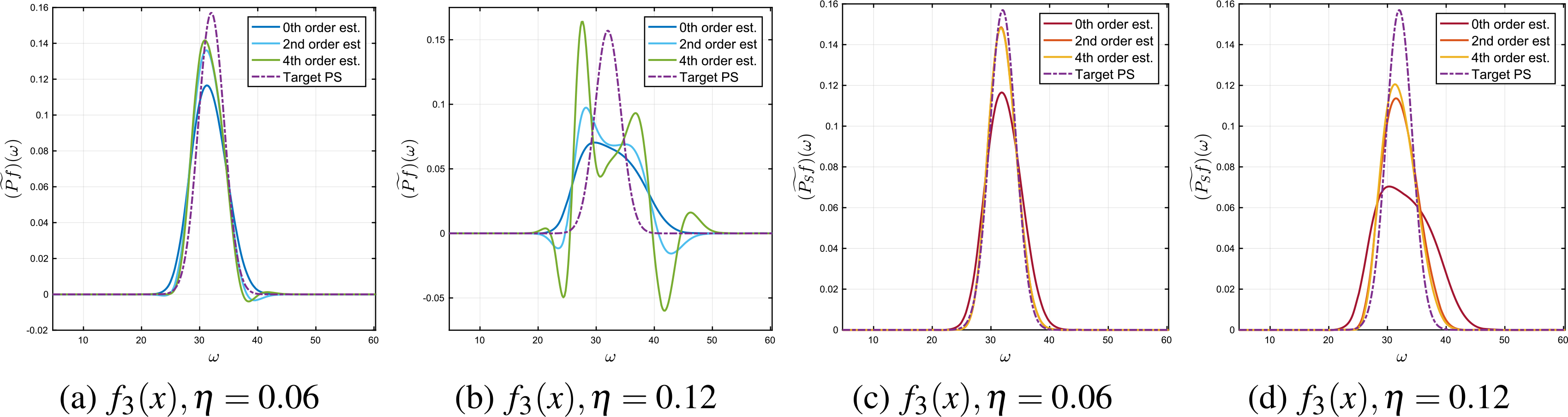

4.4. Simulation results for dilation MRA

We first illustrate the unbiasing procedure of Propositions 4.1 and 4.2 for the high-frequency signal . Figure 3 shows the power spectrum estimator and the wavelet invariant estimator for k = 0, 2, 4 for both small and large dilations, where denotes the combined wavelet invariant unbiasing plus optimization procedure (see Section 6.5). Higher order unbiasing is beneficial for both methods for small dilations but fails for the power spectrum for large dilations. Both methods will of course fail for η large enough, but for high-frequency signals the power spectrum fails much sooner.

Fig. 3.

Order k = 0, 2, 4 power spectrum estimators (first two figures) and wavelet invariant estimators (last two figures) for the signal . Figures 3(a) and 3(c) show small dilations and Figs 3(b) and 3(d) show large dilations.

Next we compare and , the L2 error of estimating the power spectrum of the target signal via the power spectrum estimators of Proposition 4.1 and via the wavelet invariant estimators of Proposition 4.2, followed by a convex optimization procedure. We consider order k = 0, 2, 4 estimators for both the power spectrum and wavelet invariants on the following Gabor atoms of increasing frequency:

These functions satisfy f = Real(h) where for ξ = 8, 16, 32, and thus exhibit the behavior described in Remark 4.4.

Simulation results are shown in Fig. 4; the horizontal axis shows log2(M) while the vertical axis shows log2(Error). For each value of M, the error was calculated for 10 independent simulations and then averaged. The unbiasing procedure of Propositions 4.1 and 4.2 requires knowledge of the moments of the dilation distribution, but in practice these are unknown. Thus, the first two even moments of the dilation distribution (η2,C4η4) were estimated empirically with the fourth-order estimators described in Section 6.3 (see Definition 6.1). For the low-frequency signal, the fourth-order power spectrum estimator was best for both small and large dilations and is preferable due to the lower computational cost (see Remark 2.3). For the high-frequency signal, the fourth-order wavelet invariant estimator was best for large dilations and WSC k = 2 and k = 4 were best and equivalent for small dilations. For the medium-frequency signal, the higher order power spectrum estimators were best for small dilations while the higher order wavelet invariant estimators were best for large dilations. Thus, the simulation results confirm that the wavelet invariants will have an advantage over Fourier invariants when the signals are either high frequency or corrupted by large dilations. We remark that one obtains nearly identical error plots with oracle knowledge of the dilation moments, indicating that the empirical moment estimation procedure is highly accurate in the absence of additive noise, even for small M values.

Fig. 4.

L2 error with standard error bars for dilation model (empirical moment estimation). Top row shows results for small dilations (η = 0.06) and bottom row shows results for large dilations (η = 0.12). First, second, third column shows results for low, medium, high frequency Gabor signals. All plots have the same axis limits.

5. Noisy dilation MRA model

Finally, we consider the noisy dilation MRA model (Model 2) where signals are randomly translated and dilated and corrupted by additive noise. Section 5.1 gives unbiasing results for wavelet invariants and Section 5.2 reports relevant simulations.

5.1. Wavelet invariant results for noisy dilation MRA

To state Proposition 5.1 as succinctly as possible, we also define the following quantity

| (5.1) |

where E is defined in (4.3) and Ψm is defined in (A.1).

Proposition 5.1

Assume Model 2 and that ψ is (k + 2)-admissible. Define the following estimator of (Sf)(λ):

where the constants Bi satisfy (4.1). Then with probability at least 1 − 1/t2

| (5.2) |

where E, Λ(λ), Ψ are as defined in (4.3), (4.5), (5.1).

The following corollary is an immediate consequence of Proposition 5.1.

Corollary 5.1

Let the assumptions of Proposition 5.1 hold, and in addition assume Ψi(2Eη)i is decreasing for i ⩽ k + 2. Then with probability at least 1 − 1/t2

| (5.3) |

We remark that there are two components to the estimation error bounded by the right-hand side of (5.3): the first two terms are the error due to dilation, as in Corollary 4.1 of Proposition 4.2, and the last two terms are the error due to additive noise, as given in Proposition 3.2. Thus, the wavelet invariant representation allows for a decomposition of the error of the noisy dilation MRA model into the sum of the errors of the random dilation model and the additive noise model. This is possible because the representation inherits the differentiability of the wavelet and is not possible when , in which case the dilation unbiasing procedure has a more complicated effect on the additive noise. A result equivalent to Proposition 5.1 cannot be made for the power spectrum, because the nonlinear unbiasing procedure of Proposition 4.1 cannot be applied to the power spectra of signals from the noisy dilation MRA corruption model, since they are not differentiable in the presence of additive noise.

Proof of Proposition 5.1.

Since Sf is a translation invariant representation, we can ignore the translation factors and consider the model . For notational convenience, we define the following order k derivative ‘unbiasing’ operator:

| (5.4) |

which is defined on any function of λ, so that we can express our estimator by

We can thus decompose the error as follows:

To bound the above terms we utilize the following two lemmas, which are proved in Appendix F.

Lemma 5.1

Let the notation and assumptions of Proposition 5.1 hold, and let Aλ be the operator defined in (5.4). Then with probability at least 1 − 1/t2

Lemma 5.2

Let the notation and assumptions of Proposition 5.1 hold, and let Aλ be the operator defined in (5.4). Then with probability at least 1 − 1/t2

Applying Proposition 4.2 to bound the dilation error, Lemma 5.1 to bound the additive noise error, and Lemma 5.2 to bound the cross term error gives (5.2). □

5.2. Simulation results for noisy dilation MRA

We once again consider the Gabor atoms of varying frequency introduced in Section 4.4, and compare the L2 error of estimating the power spectrum by (1) averaging the power spectra of the noisy signals, and applying additive noise unbiasing; this is the zero-order power spectrum method (PS k = 0), defined in Proposition 3.1, and (2) by approximating the wavelet invariants by the estimators given in Proposition 5.1 for k = 0, 2, 4, and then applying the optimization procedure described in Section 6.5; we refer to these methods as WSC k = i for i = 0, 2, 4. We emphasize that for the noisy dilation MRA model, it is impossible to define higher order methods for the power spectrum.

We first consider the errors obtained given oracle knowledge of the noise moments, both additive and dilation. Results are shown in Fig. 5 for all parameter combinations resulting from σ = 2^−4, 2^−3 (giving SNR = 2.2, 0.56) and η = 0.06, 0.12. The horizontal axis shows log2(M) and the vertical axis shows log2(Error); for each value of M, the error was calculated for 10 independent simulations and then averaged. For all simulations τ was given a uniform distribution, a challenging regime for dilations, and the sample size ranged over 16 ⩽ M ⩽ 131,072. For the medium- and high-frequency signals, for large enough M, WSC k = 2 and WSC k = 4 have significantly smaller error than the order zero estimators, indicating that the nonlinear unbiasing procedure of Proposition 5.1 contributes a definitive advantage. For the high-frequency signal and large M, the error using WSC k = 4 is decreased by a factor of about 3 from the PS k = 0 error. For small dilations (η = 0.06), there is not much of a difference in performance between WSC k = 2 and WSC k = 4, but the gap between these estimators widens for large dilations (η = 0.12), as the fourth-order correction becomes more important. For the low-frequency signal under small dilations, PS k = 0 achieves the smallest error for large M. However, when M is small or the dilations are large, the WSC estimators have the advantage for the low-frequency signal as well, and WSC k = 4 is once again the best estimator for large M.

Fig. 5.

L2 error with standard error bars for noisy dilation MRA model (oracle moment estimation). First, second and third column shows results for low-, medium- and high-frequency Gabor signals. All plots have the same axis limits.

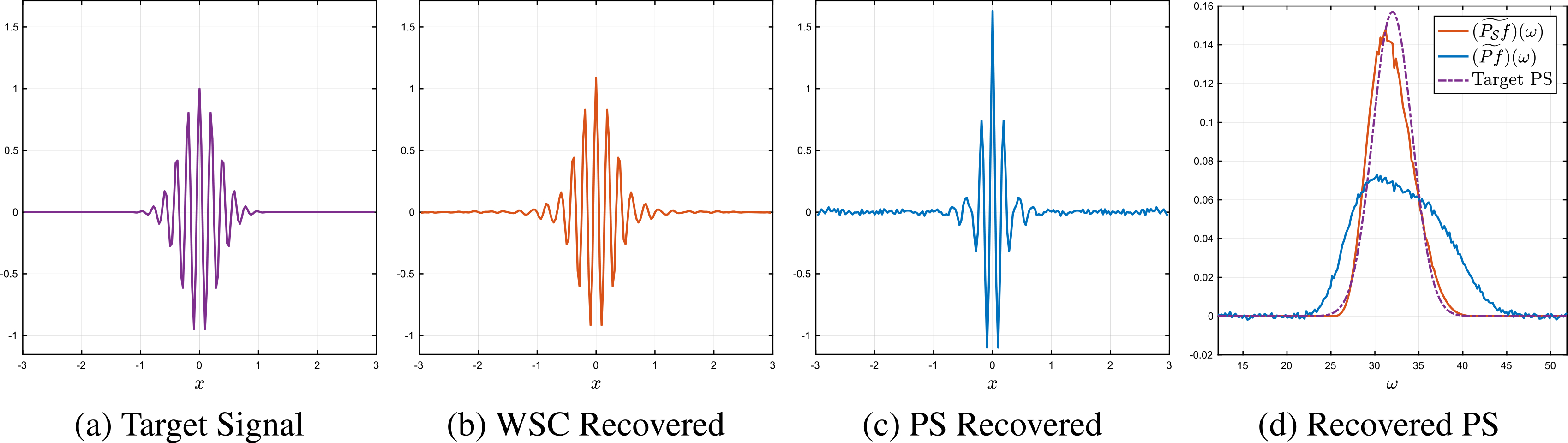

We note that although in general recovering the power spectrum is insufficient for recovering the signal, the signal can be recovered when and by taking the inverse Fourier transform of the root power spectrum. Figure 6 shows the approximate signals recovered by this procedure from PS k = 0 (Fig. 6(c)) and WSC k = 4 (Fig. 6(b)) for the high-frequency Gabor signal f3(x) (Fig. 6(a)). The WSC-recovered signal is a much better approximation of the target signal. The recovered power spectra are shown in Fig. 6(d); PS k = 0 is much flatter than the target power spectrum, while WSC k = 4 is a good approximation of both the shape and height of the target power spectrum.

Fig. 6.

Signal recovery results for with M = 20,000, η = 0.12, SNR = 2.2.
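The recovery of a signal from the root power spectrum can be sketched as follows. The example assumes, as a simplification, that the Fourier transform of the signal is real and non-negative, which holds for the even Gabor-type signal used here; the grid parameters and the signal itself are illustrative choices rather than the exact experimental setup. The grid is stored in FFT order so that the sampled signal is exactly even.

```python
import numpy as np

n, dx = 1024, 1.0 / 32.0

# Sample positions in FFT order: 0, dx, 2*dx, ..., then the negative positions.
idx = np.arange(n)
k = np.where(idx < n // 2, idx, idx - n)
x = k * dx

# Even Gabor-type signal; its Fourier transform is a sum of two Gaussians,
# hence real and non-negative (assumption required for this recovery).
f = np.exp(-0.5 * x ** 2) * np.cos(8.0 * x)

f_hat = np.fft.fft(f)
power = np.abs(f_hat) ** 2                    # power spectrum (phases discarded)

# Inverse transform of the root power spectrum.
f_rec = np.fft.ifft(np.sqrt(power)).real

print("max recovery error:", np.max(np.abs(f_rec - f)))
```

When the power spectrum is only an estimate, the same inversion applies after clipping negative estimated values to zero, although that clipping step is an assumption of this sketch and is not described in the text.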

Appendix G outlines an empirical procedure for estimating the moments of τ in the special case when t = 0 in the noisy dilation MRA model (i.e. no random translations). All simulations reported in Fig. 5 are repeated (with minor modifications) with empirical additive and dilation moment estimation, and the results are reported in Fig. G7 of Appendix G.

Appendix H contains additional simulation results for a variety of high-frequency signals.

Remark 5.1

One could also solve noisy dilation MRA with an EM algorithm. Appendix I describes how the method proposed in [1] can be extended to solve Model 2. Although EM algorithms provide a flexible tool for accurate parameter estimation in a variety of MRA models, the primary disadvantage is the high computational cost of each iteration. Each iteration costs O(Mn3), while wavelet invariant estimators can be computed in O(Mn2). In addition the statistical priors chosen may bias the signal reconstruction [12], and the algorithm will generally only converge to a local maximum. In this article we thus explore whether it is possible to solve noisy dilation MRA more efficiently and accurately by nonlinear unbiasing procedures.

6. Numerical implementation

In this section we describe the numerical implementation of the proposed method used to generate the results reported in Sections 3, 4.4 and 5.2. Section 6.1 describes how signals were generated, and Sections 6.2 and 6.3 describe empirical procedures for estimating the additive noise level and the moments of the dilation distribution τ. Finally, Section 6.4 discusses how the derivatives used for unbiasing were computed, and Section 6.5 describes the convex optimization algorithm used to recover Pf from Sf. All simulations used a Morlet wavelet constructed with ξ = 3π/4.

6.1. Signal generation and SNR

All signals were defined on [−N/4, N/4] and then padded with zeros to obtain a signal defined on [−N/2, N/2]; the additive noise was also defined on [−N/2, N/2]. Signals were sampled at a rate of 1/2^ℓ, thus resolving frequencies in the interval [−2^ℓπ, 2^ℓπ] with a frequency sampling rate of 2π/N. We used N = 2^5 and ℓ = 5 in all experiments, keeping the box size and resolution fixed. For each experiment with hidden signal f, the SNR was calculated by .
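The discretization described above can be sketched as follows, reading the box size as N = 2^5 and the sampling step as 2^−ℓ with ℓ = 5. The Gabor-type test signal and all variable names are assumptions made for illustration; the SNR computation is not reproduced here.

```python
import numpy as np

N, ell = 2 ** 5, 5
dx = 2.0 ** -ell                       # spatial sampling step
n = int(N / dx)                        # number of samples on [-N/2, N/2)
x = -N / 2 + dx * np.arange(n)         # spatial grid

# Angular frequencies resolved by the grid, in increasing order.
omega = 2.0 * np.pi * np.fft.fftshift(np.fft.fftfreq(n, d=dx))

def gabor(x, xi):
    # Hypothetical Gabor-type test signal with centre frequency xi.
    return np.exp(-0.5 * x ** 2) * np.cos(xi * x)

# Signal defined on [-N/4, N/4] and padded with zeros out to [-N/2, N/2).
signal = np.where(np.abs(x) <= N / 4, gabor(x, 8.0), 0.0)

print("samples:", n)
print("frequency spacing:", omega[1] - omega[0], "expected:", 2 * np.pi / N)
print("lowest frequency:", omega.min(), "expected:", -(2 ** ell) * np.pi)
```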

6.2. Empirical estimation of additive noise level

The additive noise level σ2 can be estimated from the mean vertical shift of the mean power spectrum in the tails of the distribution. Specifically, for Σ = [−2^ℓπ, 2^ℓπ] \ [−2^(ℓ−1)π, 2^(ℓ−1)π], we define

If we choose ℓ large enough so that the target signal frequencies are essentially contained in the interval for ω ∈ Σ, and this is a robust and unbiased estimation procedure since by Lemma D.1.
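A discrete analogue of this tail-averaging estimator is sketched below. The normalization is an assumption: the periodogram is divided by the number of samples so that discrete white noise with variance σ² has expected power σ² at every frequency, which may differ from the continuous normalization of Lemma D.1. The smooth test signal, the sample size `M` and the noise level are illustrative values.

```python
import numpy as np

rng = np.random.default_rng(0)
n, M, sigma = 1024, 200, 0.5

# Smooth, low-frequency signal whose spectrum is negligible in the tails.
t = np.arange(n)
clean = np.exp(-0.5 * ((t - n / 2) / 40.0) ** 2)
noisy = clean + sigma * rng.standard_normal((M, n))

# Periodogram normalized so that white noise has expected power sigma^2.
power = np.abs(np.fft.fft(noisy, axis=1)) ** 2 / n
mean_power = power.mean(axis=0)

# Outer half of the frequency band, the discrete analogue of the set Sigma.
freqs = np.fft.fftfreq(n)                 # cycles per sample, in [-0.5, 0.5)
tail = np.abs(freqs) >= 0.25
sigma2_hat = mean_power[tail].mean()

print("true sigma^2:", sigma ** 2, "estimate:", sigma2_hat)
```

The estimate is reliable only when the signal energy in the tail band is negligible, which is the role of choosing ℓ large enough.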

6.3. Empirical moment estimation for dilation MRA

Given the additive noise level, the moments of the dilation distribution τ for dilation MRA (Model 3) can be empirically estimated from the mean and variance of the random variables αm(yj) defined by

| (6.1) |

for integer m ⩾ 0. More specifically, we define the order m squared coefficient of variation by

| (6.2) |

The following proposition guarantees that for M large the second and fourth moments of the dilation distribution can be recovered from CV0, CV1. In fact one could continue this procedure for higher m values, i.e. will define estimators of the first even moments of τ, accurate up to O(ηk+2), but for brevity we omit the general case.

Proposition 6.1

Assume Model 3 and CV0, CV1 defined by (6.1) and (6.2). Then

Proof.

Since ,

where we assume we have chosen ℓ large enough so that the target signal frequencies are essentially supported in [−2^(ℓ−1)π, 2^(ℓ−1)π]. Thus,

When m = 0, we have

When m = 1, we have

We cannot compute CVm exactly, but by replacing Var, with their finite sample estimators, we obtain an approximate as M → ∞. Motivated by Proposition G.1, we thus use , to define estimators of η2 and C4η4.

Definition 6.1

Assume Model 3 and let , be the empirical versions of (6.2). Define the second-order estimator of η2 by . Define the fourth-order estimators of (η2, C4η4) by the unique positive solution (, ) of

For noisy dilation MRA (Model 2), estimating the dilation moments is more difficult. We give a procedure for estimating the moments in the special case t = 0 in Appendix G. Empirical moment estimation procedures that are simultaneously robust to translations, dilations and additive noise are an important area of future research.

6.4. Derivatives

All derivatives were approximated numerically using finite difference calculations. A sixth-order finite difference approximation was used for second derivatives, and a fourth-order finite difference approximation was used for fourth derivatives. This procedure was applied to the empirical mean of each representation, not to the individual signals. In fact, since the wavelet is known, could be computed analytically, and (Syj)(n)(λ) computed using Definition 2.2. Thus, the error due to finite difference approximations could be avoided for wavelet invariant derivatives.
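One way to realize finite difference approximations of these orders is with standard seven-point central stencils on a uniform grid, sketched below. The text does not state which stencils or boundary treatment were used, so the central stencils, the helper `central_derivative` and the test function are assumptions; boundary samples are simply dropped.

```python
import numpy as np

# Standard central finite-difference weights on a uniform grid (7-point stencils).
D2_ORDER6 = np.array([1 / 90, -3 / 20, 3 / 2, -49 / 18, 3 / 2, -3 / 20, 1 / 90])
D4_ORDER4 = np.array([-1 / 6, 2.0, -13 / 2, 28 / 3, -13 / 2, 2.0, -1 / 6])

def central_derivative(values, h, stencil, order):
    # Apply a symmetric stencil; 'valid' drops three samples at each end.
    return np.convolve(values, stencil, mode="valid") / h ** order

h = 0.05
grid = np.arange(0.0, 2.0 * np.pi, h)
values = np.sin(grid)                 # test function with known derivatives
interior = grid[3:-3]

d2 = central_derivative(values, h, D2_ORDER6, 2)   # approximates -sin
d4 = central_derivative(values, h, D4_ORDER4, 4)   # approximates +sin

print("second-derivative error:", np.max(np.abs(d2 + np.sin(interior))))
print("fourth-derivative error:", np.max(np.abs(d4 - np.sin(interior))))
```

Because high-order differences amplify noise by a factor of order h^−2 or h^−4, applying the stencils to the empirical mean rather than to individual noisy signals keeps the amplified variance small.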

6.5. Optimization

In this section we describe the convex optimization algorithm for computing , the power spectrum approximation that best matches the wavelet invariants . Since the wavelet invariants are only computed for λ > 0, we also incorporate zero frequency information into the loss function via , an approximation of the power spectrum at frequency zero. For all of the examples reported in this article, a quasi-Newton algorithm was used to solve an unconstrained optimization problem minimizing the following convex loss function:

where

Letting denote the minimizer of the above loss function, we then define . Theorem 2.4 ensures that when the loss function is defined with the exact wavelet invariants Sf, it has a unique minimizer corresponding to Pf. Whenever , the symmetry of (Pf)(ω) ensures that , and thus it is sufficient to optimize over the non-negative frequencies and then symmetrically extend the solution. Such a procedure ensures the output of the optimization algorithm is symmetric while avoiding adding constraints to the optimization. The algorithm was initialized using the mean power spectrum with additive noise unbiasing only, i.e. PS k = 0. The optimization output does depend on various numerical tolerance parameters, which were held fixed for all examples.

Remark 6.1

Alternatively, one can invert the representation by applying a pseudo-inverse with Tikhonov regularization. Specifically, if F is the matrix defining the wavelet invariants, so that Sy = F(Py), then one can define . This procedure however requires careful selection of the hyper-parameter λ and did not work as well as inverting via optimization in our experiments.
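A regularized pseudo-inverse of this kind is commonly written as (FᵀF + γI)⁻¹Fᵀ applied to the wavelet invariants; the sketch below uses that standard Tikhonov form, which is assumed here rather than taken from the text. The regularization weight is called `reg` to avoid confusion with the scale variable λ, and the random matrix `F` is a hypothetical stand-in for the matrix defining the wavelet invariants.

```python
import numpy as np

def tikhonov_inverse(F, s, reg):
    # Solve (F^T F + reg * I) p = F^T s for p.
    n_cols = F.shape[1]
    return np.linalg.solve(F.T @ F + reg * np.eye(n_cols), F.T @ s)

rng = np.random.default_rng(0)
F = rng.random((40, 20))          # hypothetical stand-in for the invariant matrix
p_true = rng.random(20)           # hypothetical non-negative "power spectrum"
s = F @ p_true                    # noiseless invariants

p_hat = tikhonov_inverse(F, s, reg=1e-10)
print("max reconstruction error:", np.max(np.abs(p_hat - p_true)))
```

With noisy invariants a larger `reg` trades bias for stability, which is the sensitivity to the hyper-parameter mentioned in the remark.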

7. Conclusion

This article considers a generalization of classic MRA, which incorporates random dilations in addition to random translations and additive noise and proposes solving the problem with a wavelet invariant representation. These wavelet invariants have several desirable properties over Fourier invariants, which allow for the construction of unbiasing procedures that cannot be constructed for Fourier invariants. Unbiasing the representation is critical for high-frequency signals, where even small diffeomorphisms cause a large perturbation. After unbiasing, the power spectrum of the target signal can be recovered from a convex optimization procedure.

Several directions remain for further investigation, including extending results to higher dimensions and considering rigid transformations instead of translations. Such extensions could be especially relevant to image processing, where variations in the size of an object can be modeled as dilations. Incorporating the effect of tomographic projection would also lead to results more directly relevant to problems such as cryo-EM. The tools of the present article, although significantly reducing the bias, do not allow for a completely unbiased estimator for noisy dilation MRA due to the bad scaling of certain intrinsic constants. Thus, an important open question is whether it is possible to define unbiased estimators for noisy dilation MRA using a different approach. The noisy dilation MRA model of this article corresponds to linear diffeomorphisms, and constructing unbiasing procedures that apply to more general diffeomorphisms is also an important future direction. In addition, one can construct wavelet invariants that characterize higher order auto-correlation functions such as the bispectrum, and future work will investigate full signal recovery with such invariants.

Acknowledgements

We would like to thank the reviewers for their detailed comments and insights that greatly improved the manuscript. We would also like to thank Stephanie Hickey for providing useful references on flexible regions of macromolecular structures.

Funding

Alfred P. Sloan Foundation (Sloan Fellowship FG-2016–6607 to M.H.); Defense Advanced Research Projects Agency (Young Faculty Award D16AP00117 to M.H.); National Science Foundation (grant 1912906 to A.L.; grant 1620216 and CAREER award 1845856 to M.H.).

A. Wavelet admissibility conditions

This appendix describes the wavelet admissibility conditions that are needed for the main results in this article, namely Propositions 4.2 and 5.1. The wavelet ψ is k-admissible if and Ψk < ∞, Θk < ∞ where

| (A.1) |

| (A.2) |

For ψ to be k-admissible, it is sufficient for , (Pψ)(i) to decay faster than ωi+1, and (see Lemma B.1 in Appendix B). The condition is slightly stronger than the classic admissibility condition [55, Theorem 4.4]. When is continuously differentiable, is sufficient to guarantee Cψ < ∞; but here we need for some ϵ > 0 as ω → 0. If this condition is removed, we are not guaranteed Θk < ∞, but all results in fact still hold, with replacing in Propositions 4.2 and 5.1. Any wavelet with fast decay satisfies this stronger admissibility condition, and it ensures that a smooth signal will enjoy a fast decay of wavelet invariants.

Remark A.1

The Morlet wavelet ψ(x) = g(x)(eiξx − C) is k-admissible for any k, since , Pψ has fast decay, and as ω → 0. One can also choose to be an order k + 1-spline of compact support.

B. Properties of wavelet invariants

This appendix establishes several important properties of wavelet invariants. Lemma B.1 gives sufficient conditions guaranteeing that a wavelet is k-admissible. Lemmas 4.3 and 4.4 bound wavelet invariant derivatives. Lemma B.2 bounds terms that arise in the dilation unbiasing procedure of Sections 4.2 and 5.

Lemma B.1 (k-admissible).

If , (Pψ)(i) decays faster than ωi+1, and , then ψ is k-admissible.

Proof.

We first note that guarantees . Since (Pψ)(i) decays faster than ωi+1 and , for 0 ⩽ i ⩽ k, so Ψk < ∞. Also and implies for 2 ⩽ i ⩽ k. In addition, by assumption. Thus, to conclude Θk < ∞, it only remains to show . Since (Pψ)′ is continuous and decays faster than ω2, only the integrability around the origin needs to be verified. We note that and Pψ continuous implies Pψ ∼ ω1+ϵ for some ϵ > 0 as ω → 0. Thus, (Pψ)′ ∼ ωϵ as ω → 0, so that ω−1(Pψ)′ ∼ ωϵ−1; the function is thus integrable around the origin since ϵ − 1 > −1. □

Lemma 4.3 (Low-frequency bound).

Assume and . Then the quantity |λm(Sf)(m)(λ)| can be bounded uniformly over all λ. Specifically,

for Ψm defined in (A.1).

Proof.

Let , and let

Utilizing Definition 2.2 we obtain

Expanding the derivative gives

Utilizing and , one obtains

Lemma 4.4 (High-frequency bound for differentiable functions).

Assume , and . Then the quantity |λm(Sf)(m)(λ)| can be bounded by

for Θm defined in (A.2).

Proof.

Recall from the proof of Lemma 4.3 that

where and . Since and , we obtain

Lemma B.2

Assume and ψ is m-admissible, and let Bm, E, Ψm, Θm be as defined in (4.1), (4.3), (A.1), (A.2). Then,

where

Proof.

From the proof of Lemma 4.3:

From the proof of Lemma 4.4:

Utilizing |Bm| ⩽ Em gives

The following corollary is obtained from Lemma B.2 when f is a Dirac delta function.

Corollary B.1

Assume ψ is m-admissible, and let Bm, E, Ψm be as defined in (4.1), (4.3), (A.1). Then,

C. Power spectrum and wavelet invariant equivalence

This appendix contains supporting results for demonstrating the equivalence of the power spectrum and wavelet invariants. Lemma 2.1 establishes that wavelet invariants uniquely determine any bandlimited L2 function, as long as the wavelet satisfies the linear independence Condition 2.3 and a mild integrability condition. Proposition 2.1 gives two criteria that are sufficient to guarantee Condition 2.3. Finally, Lemma C.1 establishes that the Morlet wavelet satisfies Condition 2.3.

Lemma 2.1

Let be continuous and assume p(ω) = p(−ω), has compact support and Condition 2.3. Then

Proof.

Since p is continuous, there exists an ϵ > 0 such that on (0, ϵ) one either has p = 0, p > 0, or p < 0. Claim: one must have p = 0. Suppose not, and without loss of generality assume p > 0 on (0, ϵ) and that the support of is contained in the interval [1, 2]. Now choose λ0 small enough so that is supported on [ϵ/2, ϵ), i.e. λ0 = ϵ/2. Clearly, there must exist a subset of positive measure such that on . Then,

We conclude

but this is impossible since the integrand is strictly positive on . We thus conclude that p = 0 on (0, ϵ). Thus, it is sufficient to only consider frequencies in [ϵ, ∞).

Assume for all λ. Since p(ω) = p(−ω),

where I = [ϵ, ∞). We now define for some β > 0, and observe that

Note

We now apply the change of variable ωi = 1/ξi, and let g(ξi) = p(1/ξi). We obtain

| (C.1) |

Now consider the kernel

Note that k is a strictly positive definite kernel function if for any finite sequence in [0, 1/ϵ], the n by n matrix A defined by

is strictly positive definite [83]. Viewing as functions of λ, we see that

and A is thus a Gram matrix. Since the are linearly independent if and only if the are linearly independent, and the are linearly independent by assumption, we can conclude that A and thus k are strictly positive definite. Now consider the corresponding integral operator on [0, 1/ϵ]:

Since , and thus are continuous, and k will thus be continuous as long as it remains bounded. To check boundedness we observe that k(ξ1, ξ2)2 ⩽ k(ξ1, ξ1)k(ξ2, ξ2) [21], and

Since has a compact support, clearly , and k is thus bounded on the compact interval [0, 1/ϵ] as long as β ⩾ 3/2. Since k is continuous and [0, 1/ϵ] is compact, K : L2[0, 1/ϵ] → L2[0, 1/ϵ] is a compact, self-adjoint operator and by Mercer’s Theorem K is also strictly positive definite [83]. Since by (C.1), we conclude g = 0 in L2[0, 1/ϵ]. Thus, p(1/ξ) = 0 for almost every ξ ∈ (0, 1/ϵ], which implies p(ω) = 0 for almost every ω ∈ [ϵ, ∞). Since p(ω) = p(−ω) and p = 0 on (0, ϵ), p = 0 for almost every . □

Proposition 2.1

The following are sufficient to guarantee Condition 2.3:

(i) has a compact support contained in the interval [a, b], where a and b have the same sign, e.g. complex analytic wavelets with compactly supported Fourier transform.

(ii) and there exists an N such that all derivatives of order at least N are non-zero at ω = 0, e.g. the Morlet wavelet.

Proof.

Let be a finite sequence of distinct positive frequencies, and let denote the corresponding functions of λ.

First assume (i). Without loss of generality we assume that [a, b] is a positive interval and that on (a, a + ϵ) for some ϵ > 0. Clearly, . A simple calculation shows that the support of is contained in the interval , and in a neighborhood of . Assume we have ordered the ωi so that ω1 > . . . > ωn > 0. Now suppose

Note is the only function in the above collection with support in a neighborhood of ; thus, we must have c1 = 0, so that

But now is the only function in the above collection with support in a neighborhood of , so we must have c2 = 0, and proceeding iteratively we conclude that c1 = . . . = cn = 0. Thus, is a linearly independent set, and Condition 2.3 holds.

Now assume (ii). Since , is and all derivatives of order at least N are non-zero at ω = 0. Note are linearly independent if and only if are linearly independent. Defining , this holds if and only if are linearly independent as functions of , where we define . Assume

Differentiating m times for N ⩽ m ⩽ N + n − 1, we obtain

The above holds for all . We now take the limit as to obtain

Since g(m) (0) ≠ 0, we obtain

Since A is a Vandermonde matrix constructed from distinct ωi, det(A) ≠ 0. Since the ωi are non-zero, det(B) ≠ 0. Thus, det(AB) = det(A) det(B) ≠ 0. We conclude AB is invertible and so all ci = 0, which gives Condition 2.3. □

Lemma C.1

Suppose we construct a Morlet wavelet with parameter ξ, that is for . Then, for almost all , the wavelet satisfies Condition 2.3.

Proof.

The Fourier transform has form

for some constant depending on ξ, so that

From direct calculation or a computer algebra system, one obtains

where Hn(ξ) is the nth degree physicist’s Hermite polynomial. We have g′(0) = 0, but for n > 1, g(n)(0) = 0 only when ξ is a root of the above polynomial. Since the set of roots of the polynomials is countable, if ξ is selected at random from , it is not a root of any of these polynomials with probability 1, and g(n)(0) ≠ 0 for all n. Thus, the wavelet satisfies criterion (ii) of Proposition 2.1, and thus the linear independence Condition 2.3. □

D. Supporting results: classic MRA

This appendix contains supporting results for Section 3. The first two lemmas (Lemmas D.1 and D.2) establish additive noise bounds for the power spectrum and are needed to prove Proposition 3.1. The next two lemmas (Lemmas D.3 and D.4) establish additive noise bounds for wavelet invariants and are needed to prove Proposition 3.2.

Lemma D.1

Let ε(x) be a white noise process on with variance σ2. Then, for all frequencies ω, ξ,

| (D.1) |

| (D.2) |

| (D.3) |

Proof.

By Proposition J.1,

which shows (D.1). By Proposition J.2,

which shows (D.2). Finally, by Proposition J.3, we have

which gives (D.3). □

Lemma D.2

Let ε(x) be a white noise process on with variance σ2. Then, for any signal ,

Proof.

Since and by Lemma D.1,

We now control Var[(P(f + ε))(ω)]. Note that:

and that

since even when x = s = p, . Ignoring the terms with zero expectation, we thus get

where the last line follows from Lemma D.1. Thus,

□

Proposition 3.1

Assume Model 1. Define the following estimator of (Pf)(ω):

Then, with probability at least 1 − 1/t2,

| (3.1) |

Proof.

Let so that . We first note that, since , the power spectrum is translation invariant; that is, for all ω, tj. Thus, by Lemma D.2,

and

Since the yj are independent,

Applying Chebyshev’s inequality to the random variable , we obtain

Observing that gives (3.1). □
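The following is a minimal simulation sketch of this unbiasing step, not the authors' implementation. It assumes the estimator takes the form of the average of the power spectra (Pyj)(ω) minus σ2. It also assumes a uniform grid on an interval of unit length, circular shifts as a stand-in for the translations tj, and Gaussian samples with variance σ2/dx as a discretization of white noise. The signal f and all parameter values are hypothetical.

```python
import numpy as np

def power_spectrum(y, dx):
    """|y_hat|^2, with the Fourier transform approximated by dx * FFT."""
    return np.abs(dx * np.fft.fft(y, axis=-1)) ** 2

def unbiased_power_spectrum(observations, sigma, dx):
    """Average the power spectra of the observations and subtract sigma^2."""
    return power_spectrum(observations, dx).mean(axis=0) - sigma ** 2

rng = np.random.default_rng(0)
n, M, sigma = 256, 2000, 0.5          # hypothetical grid size, sample count, noise level
dx = 1.0 / n
x = -0.5 + dx * np.arange(n)
f = np.exp(-x ** 2 / (2 * 0.05 ** 2)) * np.cos(16 * x)    # hypothetical target signal

shifts = rng.integers(0, n, size=M)                        # random circular translations
noise = rng.normal(0.0, sigma / np.sqrt(dx), size=(M, n))  # discretized white noise
observations = np.stack([np.roll(f, s) for s in shifts]) + noise

P_true = power_spectrum(f, dx)
naive = power_spectrum(observations, dx).mean(axis=0)
err_naive = np.max(np.abs(naive - P_true))
err_unbiased = np.max(np.abs(unbiased_power_spectrum(observations, sigma, dx) - P_true))
```

In this discretization the naive average is offset from the true power spectrum by roughly σ2 at every frequency, while the corrected estimate fluctuates around it with an error that shrinks as M grows.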

Lemma D.3

Let ε(x) be a white noise process on with variance σ2. Then,

Proof.

Since by Lemma D.1, we have

Since by Lemma D.1, , we also have

□

Lemma D.4

Let ε(x) be a white noise process on with variance σ2. Then, for any signal

Proof.

Utilizing and Lemma D.3, we have

To bound , note that

When we take expectation, any term involving one or three ε terms disappears, so that

where the last line follows from Lemma D.3. Thus,

□

Proposition 3.2

Assume Model 1. Define the following estimator of (Sf)(λ):

Then, with probability at least 1 − 1/t2,

| (D.4) |

Proof.

Let so that . We first note that the wavelet invariants are translation invariant, that is for all tj. We now compute the mean and variance of the coefficients (Syj)(λ). By Lemma D.4,

and

Since the yj are independent,

Applying Chebyshev’s inequality to the random variable gives

By Young’s convolution inequality, , which gives (D.4). □
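A sketch of the analogous correction for wavelet invariants is given below, under stated assumptions rather than as the authors' code. It assumes (Sf)(λ) is the squared L2 norm of f convolved with the wavelet at scale λ, and that the estimator subtracts σ2 times the squared L2 norm of that wavelet from the sample average. The Gaussian band-pass filter `wavelet_hat`, its normalization, the scales and the signal are all hypothetical, and the grid is assumed to have unit length (n * dx = 1).

```python
import numpy as np

def wavelet_hat(omega, lam, xi=5.0):
    """Hypothetical band-pass wavelet in frequency: Gaussian bump centred at xi * lam."""
    return lam ** -0.5 * np.exp(-0.5 * (omega / lam - xi) ** 2)

def wavelet_invariants(y, psi_hats, dx):
    """||y * psi_lam||_2^2 for each row of psi_hats, computed via Parseval."""
    n = y.shape[-1]
    return (dx / n) * (np.abs(np.fft.fft(y, axis=-1)) ** 2) @ (np.abs(psi_hats) ** 2).T

def unbiased_wavelet_invariants(observations, psi_hats, sigma, dx):
    """Average the invariants and subtract sigma^2 * ||psi_lam||_2^2."""
    psi_norm_sq = np.sum(np.abs(psi_hats) ** 2, axis=-1)  # valid when n * dx = 1
    return wavelet_invariants(observations, psi_hats, dx).mean(axis=0) - sigma ** 2 * psi_norm_sq

rng = np.random.default_rng(1)
n, M, sigma = 256, 2000, 0.1           # hypothetical grid size, sample count, noise level
dx = 1.0 / n
x = -0.5 + dx * np.arange(n)
f = np.exp(-x ** 2 / (2 * 0.05 ** 2)) * np.cos(80 * x)    # hypothetical target signal
omega = 2 * np.pi * np.fft.fftfreq(n, d=dx)
lams = np.array([16.0, 32.0, 64.0])                        # hypothetical scales
psi_hats = np.stack([wavelet_hat(omega, lam) for lam in lams])

shifts = rng.integers(0, n, size=M)
noise = rng.normal(0.0, sigma / np.sqrt(dx), size=(M, n))
observations = np.stack([np.roll(f, s) for s in shifts]) + noise

S_true = wavelet_invariants(f, psi_hats, dx)
naive = wavelet_invariants(observations, psi_hats, dx).mean(axis=0)
err_naive = np.max(np.abs(naive - S_true))
err_unbiased = np.max(np.abs(unbiased_wavelet_invariants(observations, psi_hats, sigma, dx) - S_true))
```

Because each invariant averages the noisy power spectrum over a frequency band, its fluctuations are smaller than those of a single power spectrum coefficient, which is consistent with the role the wavelet norm plays in the bound.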

E. Supporting results: dilation MRA

This appendix contains the technical details of the dilation unbiasing procedure that is central to Propositions 4.1, 4.2 and 5.1. Lemma 4.1 bounds the bias and variance of the estimator, and Lemma 4.2 bounds the error of the estimator given M independent samples.

Lemma 4.1

Let Fλ(τ) = L((1 − τ)λ) for some function L ∈ Ck+2(0, ∞) and a random variable τ satisfying the assumptions of Section 2.1, and let k ⩾ 2 be an even integer. Assume there exist functions , such that

and define the following estimator of L(λ):

Then Gλ(τ) satisfies

where

and E is the absolute constant defined in (4.3).

Proof.

We Taylor expand Fλ(τ) about τ = 0:

We note

which motivates an unbiasing with the first k/2 even derivatives, and thus a Taylor expansion of these derivatives

Multiplication of the ith even derivative by Biηi gives

We want an estimator that targets Fλ(0) = L(λ). We thus consider the following variable as an estimator:

and show that for constants Bi chosen according to (4.1). We have

That is,

where

Since (4.1) guarantees that

the coefficients of η2, η4, . . . , ηk vanish, and we obtain

First we bound the bias H1(λ). In the remainder of the proof we let B0 = −1 to simplify notation, so that

We first obtain a bound for |BiRi(λ, τ)ηi|. Note

We observe that

so that

Now assume first of all that τ is positive. We have

where the last line follows since for . A similar argument can be applied when τ is negative, and we can conclude

| (E.1) |

which gives

We thus obtain

which establishes the bound on the bias. We now bound the variance. We note

Thus,

and we proceed to bound each term.

where 1E is an indicator function indicating that i,j,ℓ,s are not all zero. We have

Noting that only terms where j + s is even survive expectation, and letting and , we obtain

for coefficients such that C0,0 = 0, if is odd, and . Thus,

Next we bound .

Utilizing Equation (E.1), we have

which gives

so that

Finally we bound the cross term .

| (E.2) |

Since and from (E.1), we have

so that

The same bound holds for the terms of Fλ(0)H1(λ), which arise from i = 0, j = 0 in (E.2), so that

Thus, and the lemma is proved. □
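To illustrate the mechanism for k = 2, the sketch below compares the bias of Fλ(τ) with that of a second-order corrected variable. It is an illustration under assumptions, not the estimator of the lemma verbatim. The function L is a hypothetical smooth choice, and τ is taken to be uniform with mean zero and variance η2. The correction coefficient 1/2 is read off from the second-order Taylor term rather than taken from (4.1). The derivative is taken with respect to λ by finite differences, so the correction does not use the value of τ.

```python
import numpy as np

def L(lam):
    """Hypothetical smooth target function L."""
    return np.sin(lam)

def F(lam, tau):
    """F_lambda(tau) = L((1 - tau) * lambda)."""
    return L((1.0 - tau) * lam)

def G(lam, tau, eta, h=1e-3):
    """Corrected variable: F minus (eta^2 / 2) * lambda^2 * d^2 F / d lambda^2."""
    d2F = (F(lam + h, tau) - 2.0 * F(lam, tau) + F(lam - h, tau)) / h ** 2
    return F(lam, tau) - 0.5 * eta ** 2 * lam ** 2 * d2F

def expect_uniform(func, eta, n_nodes=40):
    """E[func(tau)] for tau uniform on [-sqrt(3) eta, sqrt(3) eta] (variance eta^2)."""
    nodes, weights = np.polynomial.legendre.leggauss(n_nodes)
    return 0.5 * np.sum(weights * func(np.sqrt(3.0) * eta * nodes))

lam, eta = 2.0, 0.1   # hypothetical scale and dilation standard deviation
bias_naive = expect_uniform(lambda tau: F(lam, tau), eta) - L(lam)
bias_corrected = expect_uniform(lambda tau: G(lam, tau, eta), eta) - L(lam)
```

Here the uncorrected bias is of order η2 while the corrected bias is of order η4, in line with the cancellation of the η2 coefficient described in the proof.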

Lemma 4.2

Let the assumptions and notation of Lemma 4.1 hold, and let τ1, . . . , τM be independent. Define

Then, with probability at least 1 − 1/t2,

Proof.

By Lemma 4.1 and the independence of the τj, we have

so by Chebyshev’s inequality we can conclude that with probability at least 1 − 1/t2, we have

which gives

□
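The Chebyshev step used here and in Propositions 3.1 and 3.2 can be sketched as a small calculation. The helper names below are hypothetical, and `bias` and `variance` stand for any per-sample bias and variance bounds of the kind established in Lemma 4.1.

```python
import math

def chebyshev_deviation(variance, M, t):
    """Half-width delta with P(|mean_M - E[mean_M]| <= delta) >= 1 - 1/t**2."""
    return t * math.sqrt(variance / M)

def samples_for_deviation(variance, delta, t):
    """Smallest M for which the Chebyshev half-width is at most delta."""
    return math.ceil(t ** 2 * variance / delta ** 2)

def error_bound(bias, variance, M, t):
    """Bound on |mean_M - target|: bias plus the Chebyshev half-width."""
    return abs(bias) + chebyshev_deviation(variance, M, t)

# Hypothetical numbers: per-sample variance 4, M = 100 samples, t = 2.
half_width = chebyshev_deviation(4.0, 100, 2.0)
needed = samples_for_deviation(1.0, 0.5, 2.0)
total = error_bound(-0.1, 4.0, 100, 2.0)
```

The half-width decays like M to the power −1/2, so the bias term eventually dominates the total error as M grows.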

F. Supporting results: noisy dilation MRA

This appendix contains supporting results needed to prove Proposition 5.1, which defines a wavelet invariant estimator for noisy dilation MRA. Lemma 5.1 controls the additive noise error and Lemma 5.2 controls the cross-term error. Lemma F.1 guarantees that the dilation unbiasing procedure applied to the additive noise still has mean σ2, which is needed to prove Lemma 5.1.

Lemma 5.1

Let the notation and assumptions of Proposition 5.1 hold, and let Aλ be the operator defined in (5.4). Then, with probability at least 1 − 1/t2,

Proof.

Let

By Lemma D.1, , and we thus obtain

where we have used Lemma F.1 to conclude m = 2, . . . , k. Also since by the Cauchy–Schwarz inequality, we obtain

where we let denote and B0 = 1. By Lemma D.1, we have for all frequencies ω, ξ, so that

where the last line follows from Corollary B.1 in Appendix B. We thus obtain

so that

Thus,

so that by Chebyshev’s inequality with probability at least 1 − 1/t2

□

Lemma 5.2

Let the notation and assumptions of Proposition 5.1 hold, and let Aλ be the operator defined in (5.4). Then, with probability at least 1 − 1/t2,

Proof.

We have

where

The random variable Yj has randomness depending on both εj and τj. Note that

since Yj is integrable. Thus, since , we obtain , which yields . We also have

Letting B0 = 1 and applying Lemma B.2, we have

since guarantees . Also,

where the second line follows from Lemma D.1 in Appendix D and the next to last line from Corollary B.1 in Appendix B. We thus have

and an identical argument can be applied to the so that by Chebyshev’s inequality with probability at least 1 − 1/t2

□

Lemma F.1

Assume ψ is k-admissible. Then,

| (F.1) |

for all 1 ⩽ m ⩽ k.

Proof.

We recall that since ψ is k-admissible, , and to simplify notation we let and

We first establish that

| (F.2) |

The proof is by induction. When k = 1, we obtain

and

so the base case is established. We now assume that Equation (F.2) holds and show it also holds for k+1 replacing k. By the inductive hypothesis

so that

Thus, (F.2) is established. We now use integration by parts to show (F.2) implies (F.1) in the Lemma. The proof of (F.1) is once again by induction. When k = 1, we have already shown

| (F.3) |

Integration by parts gives

Note ωgλ(ω) vanishes at ±∞ since guarantees , and thus gλ must decay faster than ω−1. Utilizing (F.3),

and the base case is established. We now assume

By integrating Equation (F.2), we obtain

We are guaranteed vanishes at ±∞ since in the proof of Lemma 4.3 we showed , and implies vanishes at ±∞. □

G. Moment estimation for noisy dilation MRA

In this appendix we outline a moment estimation procedure for noisy dilation MRA (Model 2) in the special case t = 0, i.e. signals are randomly dilated and subjected to additive noise but are not translated. This procedure is a generalization of the method presented in Section 6.3.

Given the additive noise level, the moments of the dilation distribution τ can be empirically estimated from the mean and variance of the random variables βm(yj) defined by

| (G.1) |

for integer m ⩾ 0. To account for the effect of additive noise on the above random variables, we define

| (G.2) |

and an order m additive noise adjusted squared coefficient of variation by

| (G.3) |

Remark G.1

If the noisy signals are supported in instead of , (G.2) is replaced with

The following proposition mirrors Proposition 6.1 for dilation MRA; its proof appears at the end of Appendix G.

Proposition G.1

Assume Model 2 with t = 0 and CV0, CV1 defined by (G.1), (G.2) and (G.3). Then,

Once again we cannot compute CVm exactly, but by replacing the variance and expectation with their finite sample estimators, we obtain approximations that can be used to define estimators of the dilation moments.

Definition G.1

Assume Model 2 with t = 0 and , the empirical counterparts of (G.3). Define the second-order estimator of η2 by . Define the fourth-order estimators of (η2, C4η4) by the unique positive solution (, ) of

As M → ∞, the second-order moment estimator is accurate up to O(η4) and the fourth-order moment estimators are accurate up to O(η6). However, in the finite sample regime, the gm(ℓ, σ) appearing in (G.3) will be replaced with , so that the estimators given in Definition G.1 are subject to an error of order . More generally, the additive noise fluctuations imply that to estimate the first k/2 even moments of τ up to an O(ηk+1) error will require , or M ⩾ σ4η−2(k+1).

Having established an empirical moment estimation procedure for noisy dilation MRA when t = 0, we repeat the simulations of Section 5.2 on the restricted model, but estimate the additive and dilation moments empirically. Since accurately estimating the moments of τ is difficult for large σ, we make three modifications to the oracle set-up. First, we lower the additive noise level by a factor of 2 from the oracle simulations, and consider all parameter combinations resulting from σ = 2−5, 2−4 (giving SNR = 9.0, 2.2) and η = 0.06, 0.12. Secondly, we take M substantially larger than for the oracle simulations, with 16,384 ⩽ M ⩽ 370,727. Thirdly, we compute WSC k = 4 only for large dilations. For large dilations (η2, C4η4) are approximated with fourth-order estimators, while for small dilations η2 is approximated with a second-order estimator (see Definition G.1).

Results are shown in Fig. G7, and the same overall behavior observed in the oracle simulations for large M holds. The additive noise level was estimated empirically as described in Section 6.2. For the medium- and high-frequency signals, WSC k = 2 has substantially smaller error than both PS k = 0 and WSC k = 0; for the high-frequency signal, the error is decreased by at least a factor of 2 for large dilations and a factor of 4 for small dilations relative to both zero order estimators. When WSC k = 4 is defined, it has a smaller error than WSC k = 2 for the high-frequency signal, while WSC k = 2 is preferable for the low- and medium-frequency signals. We observe that for the oracle simulations WSC k = 4 is preferable for all frequencies, so this is most likely due to error in the moment estimation degrading the WSC k = 4 estimator. For the low-frequency signal, PS k = 0 once again achieves the smallest error for small dilations, while for large dilations the higher order wavelet methods appear to surpass PS k = 0 for M large enough.

Fig. G7.

L2 error with standard error bars for noisy dilation MRA model (t = 0, empirical moment estimation). First, second and third columns show results for low-, medium- and high-frequency signals. All plots have the same axis limits.

Proof of Proposition G.1.

Since , we have

We now compute the variance. We first establish that

By Theorem 4.5 of [49],

so that

We thus obtain

Thus,

Dividing by gives

and the remainder of the proof is identical to the proof of Proposition 6.1. □

H. Additional simulations for noisy dilation MRA

We investigate the L2 error of estimating the power spectrum using PS (k = 0) and WSC (k = 0, 2, 4) for three additional high-frequency functions:

The multiplicative constants were chosen so that the L2 norms of f4, f5, f6 are comparable with the L2 norms of the Gabor signals f1, f2, f3 defined in Section 4.4. The signal f4 is not continuous and has compact support, with a slowly decaying, oscillating Fourier transform given by . The signal f5 is a linear chirp with a constantly varying instantaneous frequency. The signal f6 is slowly decaying in space, with a discontinuous Fourier transform of compact support given by .

Implementation details were as described in Section 6, and simulations were run with oracle moment estimation on the full model (parameter values as described in Section 5.2). Figure H8 shows the L2 error. As for the high-frequency Gabor in Section 5.2, WSC (k = 2) and WSC (k = 4) significantly outperformed the zero order estimators. In addition, for large dilations, WSC (k = 4) outperformed WSC (k = 2) on f4 and f6.

Fig. H8.

L2 error with standard error bars for noisy dilation MRA model (oracle moment estimation). First, second and third columns show results for f4, f5 and f6. All plots for the same signal have the same axis limits.

I. Expectation maximization algorithm for noisy dilation MRA