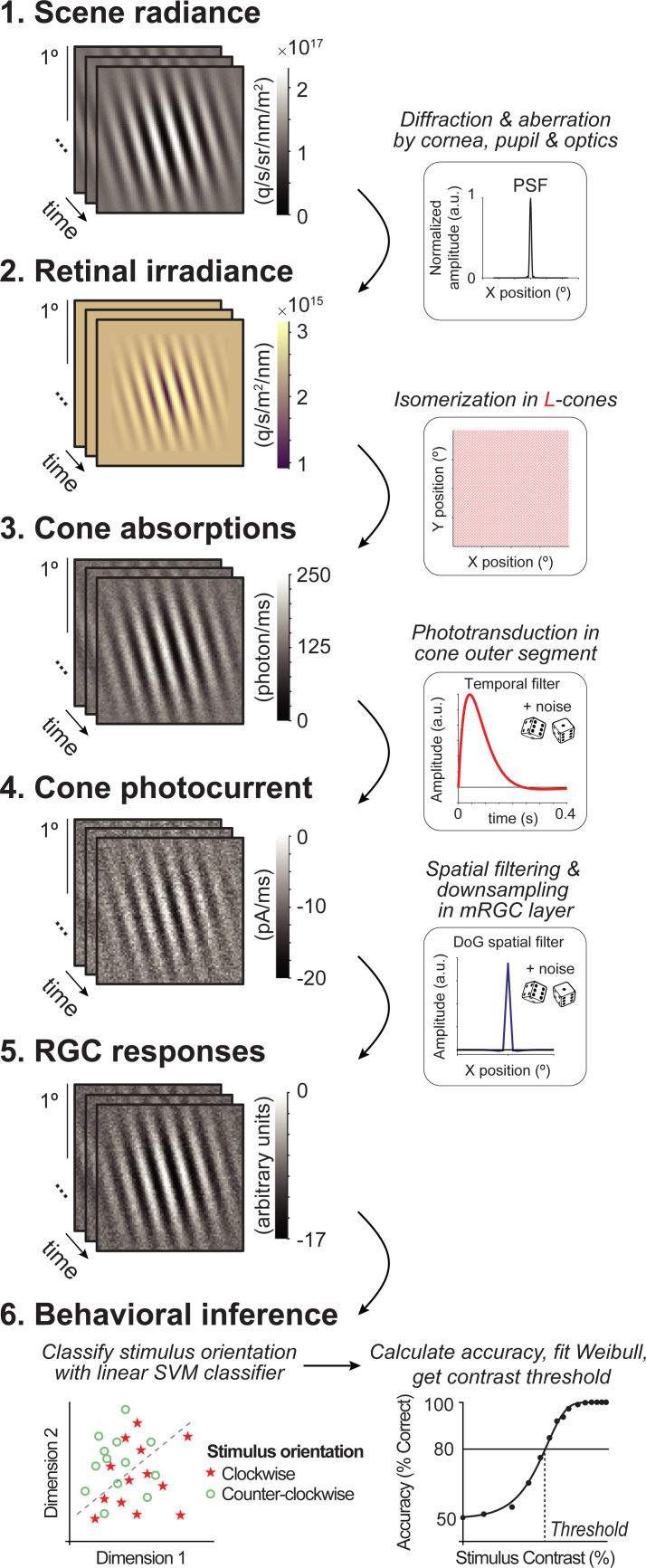

Fig 3. Overview of computational observer model with additional mRGC layer.

A 1-ms frame of a 100% contrast Gabor stimulus is shown at each computational step for illustration purposes. (1) Scene radiance. Photons are emitted by the visual display, resulting in a time-varying scene spectral radiance. The Gabor stimulus shows radiance summed across wavelengths from 400 to 700 nm. (2) Retinal irradiance. Emitted photons pass through the simulated human cornea, pupil, and optics, indicated by the schematic point spread function (PSF) in the top right box, resulting in time-varying retinal irradiance. The Gabor stimulus shows irradiance with wavelengths converted to RGB values for illustration purposes. (3) Cone absorptions. Retinal irradiance is absorbed by a rectangular cone mosaic, resulting in time-varying photon absorption rates with Poisson noise for each L-cone. (4) Cone photocurrent. Absorptions are converted to photocurrent via temporal integration and gain control, followed by the addition of Gaussian white noise. This results in a time-varying photocurrent for each cone. (5) Midget RGC responses. Time-varying cone photocurrents are convolved with a 2D Difference of Gaussians (DoG) spatial filter, followed by additive Gaussian white noise and subsampling. (6) Behavioral inference. A linear support vector machine (SVM) classifier is trained on the RGC outputs to classify stimulus orientation at each contrast level. Left-out data are tested using 10-fold cross-validation, and accuracy as a function of contrast is fitted with a Weibull function to extract the contrast threshold at ~80% accuracy.
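
Step (5) can be illustrated with a minimal sketch for a single photocurrent frame. This is not the model's actual implementation: the function names (`gaussian_blur`, `mrgc_layer`) and all parameter values (center width, surround-to-center ratio, surround weight, noise level, subsampling stride) are assumptions chosen only for illustration. It shows the order of operations described in the caption: DoG filtering, then additive Gaussian white noise, then subsampling.

```python
import numpy as np

def gaussian_kernel_1d(sigma, radius):
    """Unit-sum 1D Gaussian kernel sampled at integer offsets."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def gaussian_blur(image, sigma):
    """Separable 2D Gaussian blur with zero padding at the borders.

    Assumes each image dimension is at least as long as the kernel.
    """
    radius = int(np.ceil(3 * sigma))
    k = gaussian_kernel_1d(sigma, radius)
    rows = np.apply_along_axis(np.convolve, 1, image, k, mode="same")
    return np.apply_along_axis(np.convolve, 0, rows, k, mode="same")

def mrgc_layer(photocurrent, sigma_c=1.0, surround_ratio=3.0,
               surround_weight=0.5, noise_sd=0.1, stride=2, rng=None):
    """Hypothetical mRGC stage for one frame; all defaults are illustrative."""
    rng = np.random.default_rng() if rng is None else rng
    # Difference of Gaussians: narrow center minus weighted broad surround.
    center = gaussian_blur(photocurrent, sigma_c)
    surround = gaussian_blur(photocurrent, sigma_c * surround_ratio)
    filtered = center - surround_weight * surround
    # Additive Gaussian white noise, independent per pixel.
    noisy = filtered + rng.normal(0.0, noise_sd, size=filtered.shape)
    # Subsample: keep every `stride`-th cone location along each axis.
    return noisy[::stride, ::stride]

# Usage on a placeholder 64 x 64 frame of cone photocurrents.
frame = np.ones((64, 64))
rgc = mrgc_layer(frame, rng=np.random.default_rng(0))
print(rgc.shape)
```

With a stride of 2, the output array has half as many samples per dimension as the cone array, which is one simple way to express a cone-to-mRGC sampling ratio; other ratios would need a different resampling scheme.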