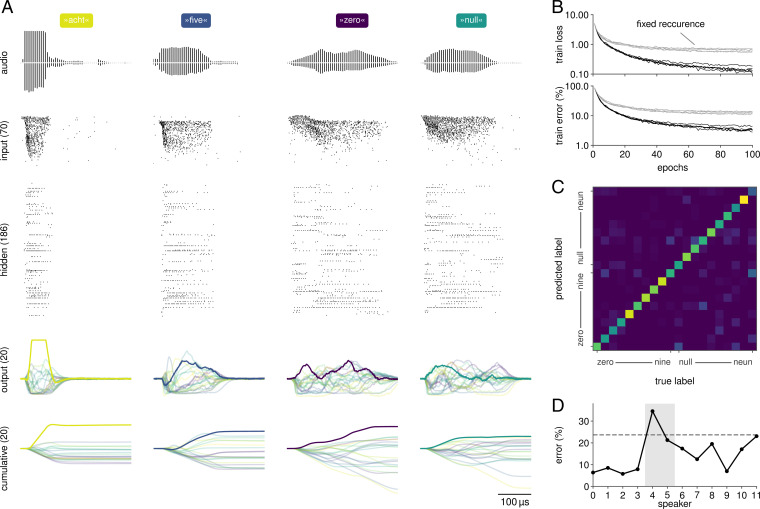

Fig. 5.

Classification of natural language with recurrent SNNs on BrainScaleS-2. (A) Responses of a recurrent network when presented with samples from the SHD dataset. The input spike trains, originally derived from recordings of spoken digits (illustrations), were reduced to 70 stimuli. The network was trained according to a sum-over-time loss based on the output units’ membrane traces. For visualization purposes, we also show their cumulative sums. (B) Over 100 epochs of training, the network developed suitable representations as evidenced by a reduced training loss and error, here shown for five distinct initial conditions. When training the network with fixed recurrent weights, it converges to a higher loss and error. (C) Classification performance varies across the 20 classes, especially since some of them exhibit phonemic similarities (»nine« vs. »neun«). (D) The trained network generalizes well on unseen data from most speakers included in the dataset. The discrepancy between training and overall test error (dashed line) arises from the composition of the dataset: 81% of the test set’s samples stem from two exclusive speakers (highlighted in gray).