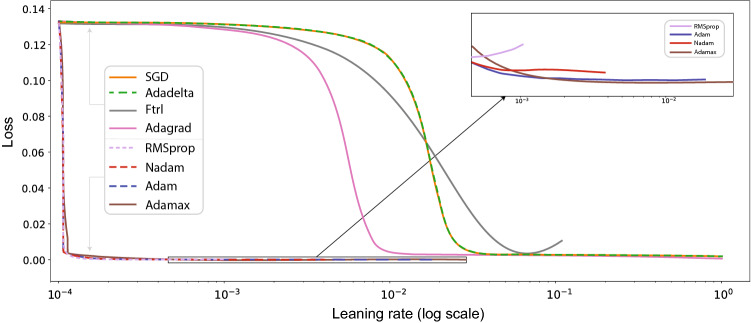

Fig. 3.

Results of the learning rate range test, showing the moving averages of the loss values. The Adamax optimizer takes the largest learning rate and yields the smallest loss. Here, the abbreviations are stochastic gradient descent (SGD), follow the regularized leader (Ftrl), adaptive gradient (Adagrad), root-mean-square propagation (RMSprop), Nesterov-accelerated adaptive moment estimation (Nadam), and adaptive moment estimation (Adam). Adadelta extends Adagrad, and Adamax is a variant of Adam based on the infinity norm.