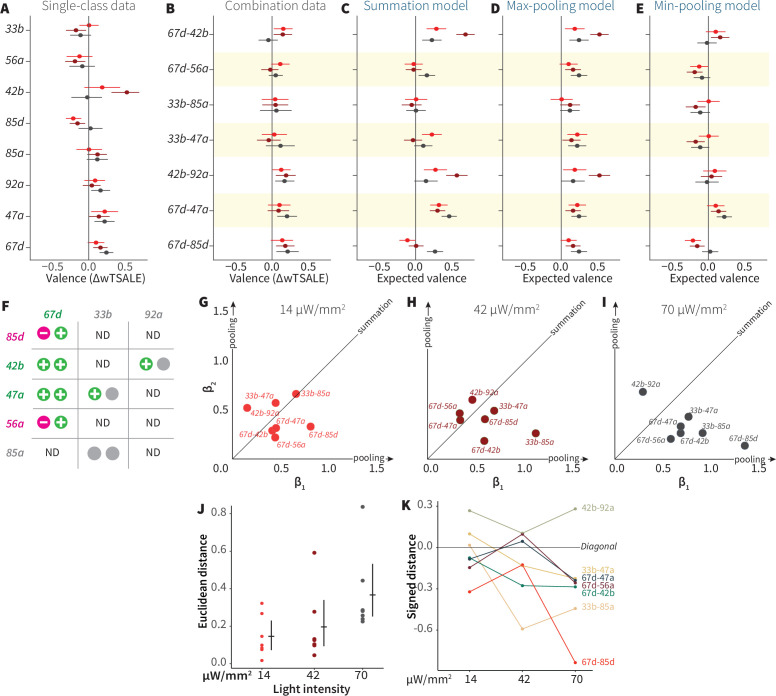

Figure 5. ORN-valence combinations follow complex rules.

(A) Valence responses of the single-ORN lines used to generate the ORN-combos (replotted from Figure 1). Dots represent the mean valence difference between control (N ≅ 104) and test (N ≅ 52) flies (∆wTSALE with 95% CIs). Shades of red denote the three light intensities. (B) Valence responses produced by the ORN-combos in the WALISAR assay at three light intensities. (C–E) ORN-combo valences predicted by the summation (C), max pooling (D), and min pooling (E) models. (F) Three positive (green), two negative (magenta), and three neutral (gray) ORNs were used to generate seven two-way ORN combinations. (G–I) Scatter plots showing the influence of individual ORNs on the valence of the respective ORN-combo. Red (G), maroon (H), and black (I) dots indicate ORN-combos at light intensities of 14, 42, and 70 μW/mm², respectively. The horizontal (β₁) and vertical (β₂) axes show the median weights of the two ORN components in the resulting combination valence. (J) Euclidean distances of the ORN-combo β points from the diagonal (summation) line in panels G–I. The average distance increases as the light stimulus intensifies: 0.14 [95% CI 0.06, 0.23], 0.20 [95% CI 0.06, 0.34], and 0.37 [95% CI 0.20, 0.53] at 14, 42, and 70 μW/mm², respectively. (K) The β weights of the ORN-combos from the multiple linear regression, drawn as the signed distance of each ORN-combo from the diagonal line across the three light intensities. As the optogenetic stimulus increases, the ORN weights change in magnitude and, in a few cases, the dominant partner switches.
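The combination models and the diagonal-distance measure described in this caption can be sketched in a few lines of Python. This is an illustrative sketch under stated assumptions, not the authors' analysis code. The function names are hypothetical. "Max pooling" and "min pooling" are assumed to keep the component with the larger or smaller *absolute* valence; if the original models use the signed maximum and minimum, replace these with the built-in `max()`/`min()`. The diagonal is assumed to be the line β₁ = β₂, where both ORNs contribute equally. The β weights are taken as given, because the caption does not specify how the regression was fit.

```python
import math


def summation(v1: float, v2: float) -> float:
    """Summation model: combo valence is the sum of the two single-ORN valences."""
    return v1 + v2


def max_pooling(v1: float, v2: float) -> float:
    """Assumed max pooling: keep the component with the larger absolute valence."""
    return v1 if abs(v1) >= abs(v2) else v2


def min_pooling(v1: float, v2: float) -> float:
    """Assumed min pooling: keep the component with the smaller absolute valence."""
    return v1 if abs(v1) <= abs(v2) else v2


def signed_distance_from_diagonal(beta1: float, beta2: float) -> float:
    """Signed perpendicular distance of (beta1, beta2) from the line beta2 = beta1.

    Positive when the first ORN carries more weight, negative when the second does,
    so a sign flip across light intensities indicates a change of dominant partner.
    """
    return (beta1 - beta2) / math.sqrt(2)


def distance_from_diagonal(beta1: float, beta2: float) -> float:
    """Unsigned Euclidean distance of (beta1, beta2) from the diagonal."""
    return abs(signed_distance_from_diagonal(beta1, beta2))


def mean_distance(betas: list[tuple[float, float]]) -> float:
    """Average unsigned distance over a set of ORN-combo (beta1, beta2) points."""
    return sum(distance_from_diagonal(b1, b2) for b1, b2 in betas) / len(betas)


if __name__ == "__main__":
    # Hypothetical single-ORN valences, not values from the figure.
    v1, v2 = 0.3, -0.1
    print(summation(v1, v2), max_pooling(v1, v2), min_pooling(v1, v2))
    # Hypothetical beta weights for two combos.
    print(mean_distance([(1.0, 0.5), (0.4, 0.9)]))
```

A perpendicular distance to the line β₁ = β₂ reduces to |β₁ − β₂|/√2. Keeping the sign, as in panel K, records which partner dominates as well as how far the combination departs from equal weighting.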