Abstract

Background:

Apraxia of speech (AOS) is considered a speech motor planning/programming disorder. While it is possible that co-occurring phonological impairments exist, the speech motor planning/programming deficit often makes it difficult to assess the phonological encoding stage directly. Studies using on-line methods have suggested that activation of phonological information may be protracted in AOS (Rogers, Redmond, & Alarcon, 1999).

Aims:

The present study was designed to investigate the integrity of the phonological encoding stage in AOS and aphasia. We tested two specific hypotheses, the Frame Hypothesis and the Segment Hypothesis. According to the Frame Hypothesis, speakers with AOS have an impairment in retrieving metrical frames (e.g., number of syllables); according to the Segment Hypothesis, speakers with AOS have an impairment in retrieving segments (e.g., consonants).

Methods & Procedures:

Four individuals with AOS and varying degrees of aphasia, two speakers with aphasia, and 13 age-matched control speakers completed an on-line priming task in which participants named pictures in sets that did or did not share number of syllables (e.g., balcony-coconut-signature vs. balcony-carrot-sock), the initial consonant (e.g., carpenter-castle-cage vs. carpenter-beaver-sun), or both (e.g., boomerang-butterfly-bicycle vs. boomerang-sausage-cat). Error rates and reaction times were measured.

Outcomes & Results:

Data for controls replicated previous literature. Reaction time data supported the Segment Hypothesis for speakers with AOS and for one speaker with aphasia without AOS, with no differences in pattern from controls for the other speaker with aphasia without AOS.

Conclusions:

These results suggest that speakers with AOS may also have difficulties at the phonological encoding stage. Theoretical and clinical implications of these findings are discussed.

Keywords: Apraxia of Speech, Phonological Encoding, Priming, Reaction Time, Aphasia, Speech Production

Apraxia of speech (AOS) is a neurogenic speech disorder characterized by speech sound distortions, distorted substitutions, reduced speech rate, and dysprosody (Duffy, 2005; Wambaugh, Duffy, McNeil, Robin, & Rogers, 2006). Although pure AOS has been defined as a disruption of speech motor planning and/or programming following intact phonological encoding, phonological encoding impairments may co-exist with AOS (Ballard, Garnier, & Robin, 2000; Code, 1998; Croot, Ballard, Leyton, & Hodges, 2012; Duffy, 2005; Laganaro, 2012; McNeil, Robin, & Schmidt, 2009; Rogers, Redmond, & Alarcon, 1999).

The possibility of concomitant phonological encoding disruptions poses difficulties for differential diagnosis and for using evidence from speakers with AOS to inform theories of speech motor planning (e.g., Ziegler, 2009). Few studies on AOS directly address or examine phonological encoding, presumably in part because speech motor planning deficits complicate assessment of phonological encoding (Maas & Mailend, 2012; Rogers & Storkel, 1999; Ziegler, 2002). The present study uses a real-time task to examine phonological encoding in speakers with AOS.

SPEECH PLANNING1

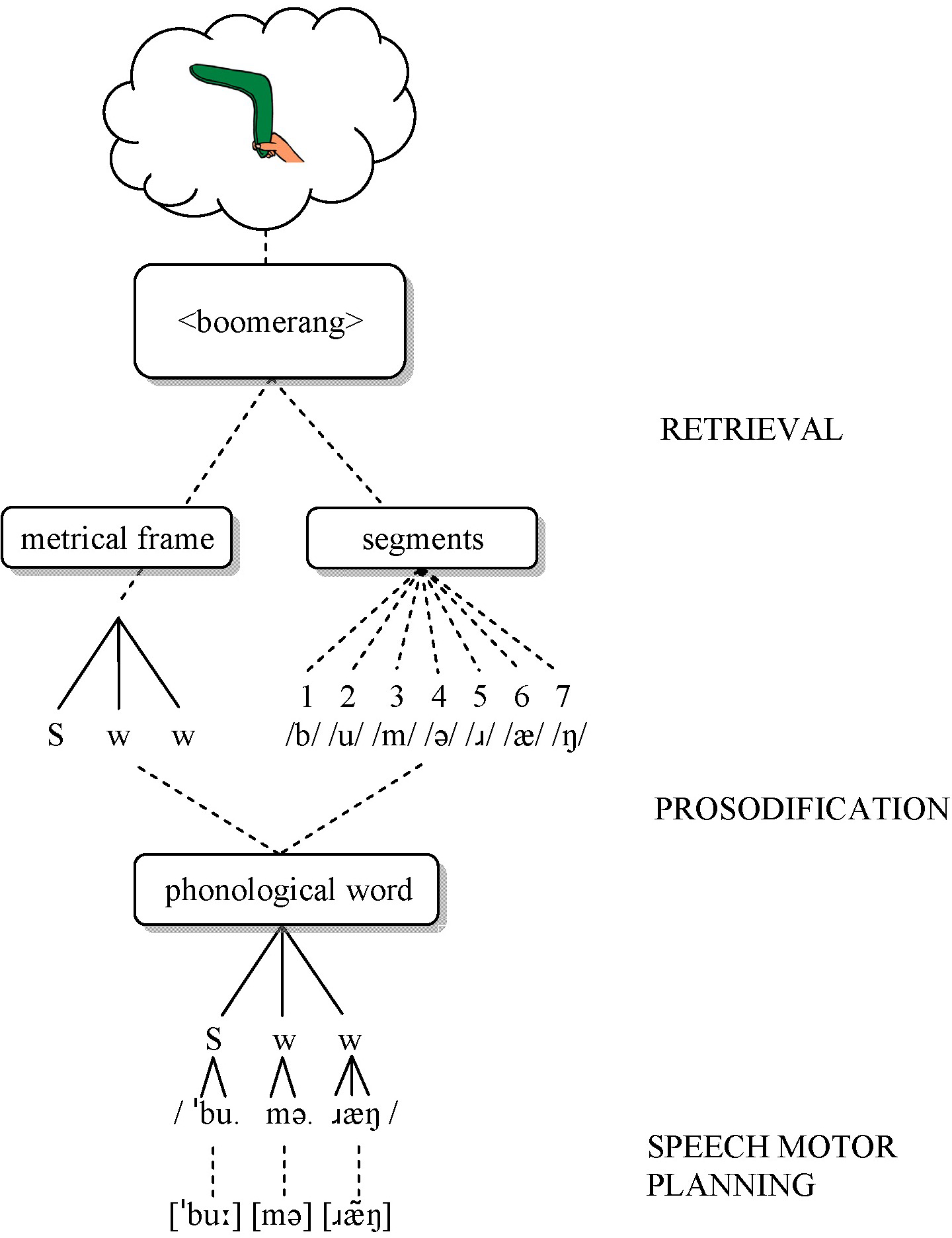

Speech production models assume at least two stages of speech planning, including formulating a word’s sound structure (phonological encoding) and planning the articulator movements (speech motor planning) (Levelt, Roelofs, & Meyer, 1999). The present study largely follows the Nijmegen model (Levelt et al., 1999; Roelofs, 1997; see Figure 1).

Figure 1.

Schematic overview of the speech planning process (after Roelofs, 1997). S = strong (stressed); w = weak (unstressed).

According to this model, phonological encoding involves two steps: retrieval and prosodification. First, speakers retrieve segments and metrical frames separately but in parallel. The linearly-ordered segments correspond to phonemes (e.g., /b/, /u/, /m/, /ə/, /ɹ/, /æ/, /ŋ/ for boomerang). Frames specify the number of syllables, and perhaps the stress pattern, but not syllable structure (e.g., Strong-weak-weak for boomerang) (Levelt et al., 1999). Second, prosodification inserts the segments into the frame according to syllabification rules, generating a syllabified phonological representation (e.g., /ˈbu.mə.ɹæŋ/). Intermediate, partially prosodified representations are stored in a phonological buffer until all segments are inserted. Once the word is prosodified, corresponding syllable motor plans are accessed, either by retrieving precompiled syllable-sized motor plans for frequent syllables or by assembling segment-sized motor plans for infrequent syllables (Cholin, Dell, & Levelt, 2011; Levelt & Wheeldon, 1994). Articulation may begin as soon as the first syllable motor plan is available (Meyer, Roelofs, & Levelt, 2003), or it may be delayed until all motor plans for the word are ready (Levelt & Wheeldon, 1994), in which case preceding motor plans are temporarily stored in an articulatory buffer (Meyer et al., 2003; Rogers & Storkel, 1999).

Support for this model comes from speech errors (e.g., Shattuck-Hufnagel, 1983) and from chronometric tasks such as preparation priming (e.g., Meyer, 1990; Santiago, 2000). In preparation priming tasks, speakers produce the same words in two different conditions: one in which all words share a phonological property (homogeneous context; e.g., cat-cake-comb), and another in which they differ (heterogeneous context; e.g., cat-sock-bomb). Reaction times (RTs) are faster in the homogeneous context (‘priming’) (e.g., Meyer, 1990; Santiago, 2000), but only when frames (number of syllables and stress pattern) are also shared (Roelofs & Meyer, 1998). No priming is observed when only the initial segment but not the frame is shared (e.g., boat-beaver-barbecue) or vice versa (e.g., balcony-coconut-signature). This latter finding indicates that segments and frames are retrieved separately (both types of information are needed in advance), and that the two retrieval processes take approximately the same amount of time (Roelofs & Meyer, 1998). If one retrieval process were to take longer, then advance information about that process would shorten its duration, which would shorten RT because the slowest process determines the lower limit on RT.
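The timing argument above can be stated compactly. As a simplifying sketch (the symbols $t_F$, $t_S$, and $t_0$ are introduced here for illustration only and do not come from the cited models), let $t_F$ and $t_S$ be the durations of frame and segment retrieval, and let $t_0$ collect the duration of all other stages:

$$
\mathrm{RT} \approx t_0 + \max(t_F, t_S)
$$

If advance knowledge of one property removes its retrieval time, the expected priming from knowing only the frame or only the initial segment is

$$
\Delta\mathrm{RT}_{\text{frame}} = \max(t_F, t_S) - t_S, \qquad
\Delta\mathrm{RT}_{\text{segment}} = \max(t_F, t_S) - t_F .
$$

Both differences are zero when $t_F = t_S$, which under these assumptions corresponds to the absence of pure frame or pure segment priming in unimpaired speakers. Either difference becomes positive only when the corresponding process is the slower one.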

SPEECH PLANNING IN APRAXIA OF SPEECH

Several proposals localize the speech problem in AOS within the Nijmegen model, in particular the speech motor planning stage (Aichert & Ziegler, 2004; Rogers & Storkel, 1999; Varley & Whiteside, 2001). For example, Rogers and Storkel (1999) proposed that AOS reflects a buffer limitation, such that speakers with AOS can only plan one syllable at a time. In contrast, Varley and Whiteside (2001) suggested that AOS involves a disruption of the retrieval of precompiled speech motor plans, leaving speakers with AOS with only the more cumbersome, error-prone assembly process (which may also be impaired). Finally, another proposal is that the speech motor plans themselves are damaged in AOS (as opposed to their retrieval; Aichert & Ziegler, 2004; Ziegler, 2009), based on quantitative modeling of speech error patterns. Although promising and innovative, this approach stipulates that perceived errors reflect speech motor planning deficits and that phonological encoding is intact (Ziegler, 2009).

Most research on AOS uses off-line methods (involving analyses of the final speech output), which complicate interpretation relative to affected stages (Maas & Mailend, 2012; Rogers & Storkel, 1999). The psycholinguistic literature has seen a shift towards use of on-line methods (Levelt et al., 1999), yet few studies have applied such methods to AOS (Rogers et al., 1999; see Maas & Mailend, 2012, for review).

In the present context, a study by Rogers et al. (1999) is particularly relevant, because these authors used a priming paradigm in which speakers named pictures (e.g., fork) while ignoring semantically (e.g., knife) or phonologically related (e.g., cork) distractor words presented at various time points relative to picture onset. Speakers with AOS showed delayed effects of phonological distractors on RT, compared to control speakers and speakers with aphasia; the offset of semantic distractor effects was also delayed in the AOS group. Rogers et al. hypothesized that activation of phonological information is reduced or slower, and that sustained semantic activation may be necessary to adequately activate phonological information. They further speculated that insufficient phonological activation contributes to speech motor planning deficits in AOS. This latter speculation is consistent with conceptualizations of speech planning in which activation cascades from phonological encoding to speech motor planning (Cholin et al., 2011; Goldrick & Blumstein, 2006). In this view, phonologically activated segments immediately begin to activate their corresponding speech motor plans (before a final selection is made), thus activating multiple motor plans.

Although the Rogers et al. (1999) study represented an important step towards using on-line priming methods to study AOS, distractor effects were compared to a silent condition rather than to an unrelated control condition. As such, they observed interference rather than priming. Furthermore, Rogers et al. did not attempt to determine whether different types of phonological information were differentially affected.

THE PRESENT STUDY

The purpose of the present study was to further examine the hypothesis that phonological activation is slowed in individuals with AOS (Rogers et al., 1999). We used a preparation priming paradigm to determine, first, whether there are phonological activation delays in individuals with AOS, and second, which type of phonological information is affected. The preparation priming paradigm is ideally suited to this purpose, because it allows the separate manipulation of overlap in segments and frames, and because it has independently been argued to tap into phonological encoding rather than speech motor planning (Cholin et al., 2004; Roelofs, 1999) without relying on ambiguous speech errors. Below, we briefly review the main arguments that support the phonological interpretation of form-based preparation priming.

First, speakers show priming effects for shared initial consonants (e.g., faster RT for can in coat-can than in boat-can) (Meyer, 1991), even though articulatory gestures may differ due to coarticulatory demands (e.g., anticipatory lip rounding vs. lip spreading). This suggests that the preparation effect involves abstract representations, akin to phonemes.

Second, the magnitude of the preparation effect (i.e. the RT difference) increases with knowledge of subsequent information such as the following vowel or second syllable (e.g., greater priming for cat-can than for coat-can; greater priming for salivate-salary than for saturate-salary) (Meyer, 1990, 1991). This finding argues against an articulator-preparation interpretation (getting articulators in the starting position), because articulation could start as soon as the initial segment is known (leaving no opportunity for the second sound/syllable to influence RT), and because speakers cannot position articulators for a subsequent syllable due to intervening articulatory gestures.

Third, the magnitude of the effect is the same for word-initial onsets (e.g., book-ball) and word-internal onsets (e.g., tomorrow-tomato) (Meyer, 1991). An articulator-preparation account would predict a larger effect for word-initial onsets because prepositioning is not possible for word-internal onsets.

Fourth, the preparation effect only occurs when place, manner, and voicing of the initial phoneme are shared (e.g., bed-book-ball), but not when one of the words differs in one feature (e.g., bed-book-pill) (Roelofs, 1999). This suggests that speakers cannot prepare combinations of articulatory gestures unless these combinations correspond to phonemes. If the preparation effect were motor-based, then such a motor-based interpretation must be constrained to assume isomorphy between motor plans and phoneme representations, though there is no reason a priori to assume such isomorphy (Folkins & Bleile, 1990).

Fifth, there are no syllable structure preparation effects (Roelofs & Meyer, 1998). While there is debate about whether syllable structure is represented at the phonological level (Romani, Galluzzi, Bureca, & Olson, 2011) or whether syllable structure emerges at the interface between phonological and speech motor planning (Cholin et al., 2004), there is agreement that syllable structure is present at the speech motor level (cf. Ziegler, 2009). Thus, if the task tapped into speech motor planning, then both phonological and motor accounts would predict syllable structure effects. The fact that no such effects are observed suggests that the task taps into phonological encoding and that syllable structure is not represented at this level (Levelt et al., 1999).

The Nijmegen model provides a coherent, detailed, and computationally instantiated account of the empirical effects reviewed above, and attributes them to the phonological encoding stage, in particular the prosodification process (Roelofs, 1997; see Cholin et al., 2004; Chen, Chen, & Dell, 2002; Santiago, 2000, for concurring views). Although alternative interpretations in terms of speech motor planning are conceivable, to our knowledge no such account has been proposed that provides a unified explanation for these findings. Most models assume syllable-sized speech motor plans, at least for high-frequency syllables (Cholin et al., 2011; Levelt et al., 1999; cf. Guenther, Ghosh, & Tourville, 2006; Klapp & Jagacinski, 2011); such accounts therefore do not predict segment-priming. In the Nijmegen model, access to motor plans (for high or low frequency syllables) must await prosodification (Cholin & Levelt, 2009; Roelofs, 1997). As detailed below, our initial syllables were all in the high-frequency range and shared only the initial consonant. Since knowledge of only the initial consonant is insufficient to activate the syllable motor plan, any preparation effects would reflect stages prior to prosodification (i.e. phonological retrieval), but not subsequent stages (Cholin et al., 2004; Cholin & Levelt, 2009).

In sum, there is consensus in the psycholinguistic literature that form-based preparation priming reflects phonological encoding. Given our interest in phonological encoding in AOS, and the difficulties of interpreting speech errors as phonological or phonetic, we proceeded by deriving testable hypotheses from a detailed, well-established, and independently motivated theoretical framework and applying its associated experimental methodology to AOS in order to provide cross-validation and continuity with the literature.

The hypothesis of slow activation of phonological information in AOS predicts disproportionate priming effects (greater RT differences between conditions with versus without shared form properties, compared to control speakers), because advance information about the phonological structure of targets should be particularly beneficial for individuals who have difficulty activating this information. If both types of phonological information are equally affected, then priming should occur only when both frame and segment information are known in advance, as in unimpaired speakers.

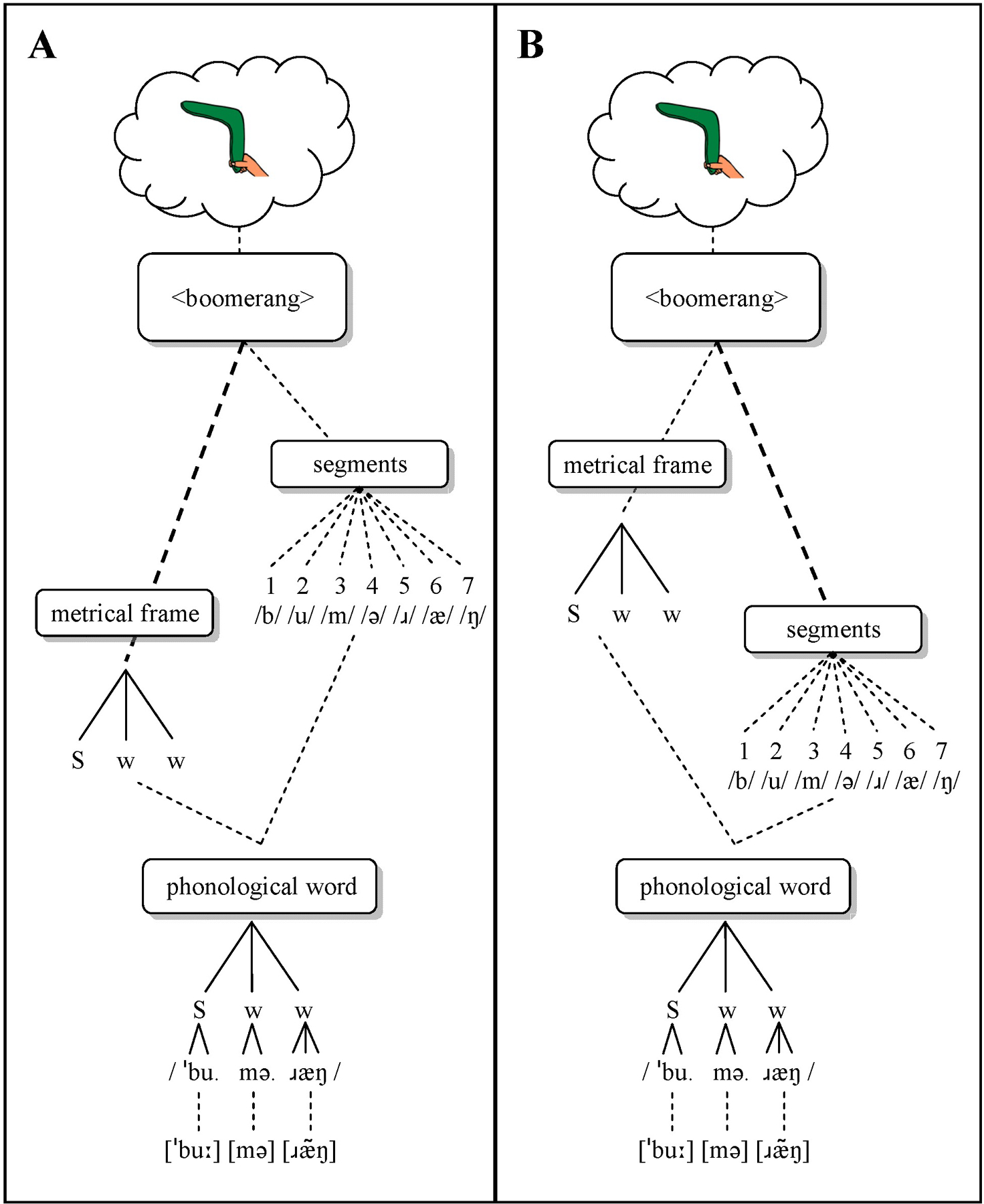

Two more specific hypotheses follow from the account of phonological encoding discussed above. The Frame Hypothesis (Figure 2A) states that AOS disproportionately affects frame retrieval, while segment retrieval is relatively intact. This hypothesis is consistent with often observed prosodic difficulties (Kent & Rosenbek, 1983; Odell et al., 1991). Speech sound distortions may occur if activated segments decay while the frame is being activated, thereby increasing competition from other segments, potentially resulting in multiple speech motor plans becoming partially activated (Goldrick & Blumstein, 2006). The Frame Hypothesis predicts that advance information about the frame alone should shorten the longer frame retrieval process (left branch, Figure 2A) and thus result in priming, whereas no pure segment priming should occur.

Figure 2.

Schematic overview of the Frame Hypothesis, in which the frame retrieval process is slowed (A), and the Segment Hypothesis, in which the segment retrieval process is slowed (B). S = strong (stressed); w = weak (unstressed).

By contrast, the Segment Hypothesis (Figure 2B) states that segment retrieval is disproportionately affected in AOS. This hypothesis is consistent with distortions and distorted substitutions, assuming cascading activation flow. Prosodic difficulties may result as a secondary effect if a frame’s activation decays while segments are being activated. This hypothesis predicts pure segment priming, because advance information about the initial segment should shorten this slower process (right branch in Figure 2B), but not pure frame priming.
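The predictions of the two hypotheses can be made concrete with a toy timing model. The sketch below is an illustration under stated assumptions, not the analysis used in this study: it assumes that frame and segment retrieval run in parallel, that RT is bounded by the slower of the two, and that advance knowledge reduces the corresponding retrieval time to zero. The function names and all durations (in ms) are hypothetical.

```python
# Toy timing model (illustrative assumption, not the study's analysis):
# RT = constant for all other stages + the slower of two parallel retrievals.

def naming_rt(frame_ms, segment_ms, frame_known=False, segment_known=False, other_ms=400):
    """Return a hypothetical RT (ms) given retrieval durations and advance knowledge."""
    frame = 0 if frame_known else frame_ms
    segment = 0 if segment_known else segment_ms
    return other_ms + max(frame, segment)

def priming_effects(frame_ms, segment_ms):
    """Priming = heterogeneous RT minus homogeneous RT for each Overlap condition."""
    baseline = naming_rt(frame_ms, segment_ms)
    return {
        "Frame-only": baseline - naming_rt(frame_ms, segment_ms, frame_known=True),
        "Segment-only": baseline - naming_rt(frame_ms, segment_ms, segment_known=True),
        "Frame+Segment": baseline - naming_rt(frame_ms, segment_ms, True, True),
    }

# Hypothetical (frame, segment) retrieval durations, chosen only for illustration.
profiles = {
    "Unimpaired": (100, 100),
    "Frame Hypothesis": (200, 100),
    "Segment Hypothesis": (100, 200),
}
predictions = {name: priming_effects(f, s) for name, (f, s) in profiles.items()}

for name, effects in predictions.items():
    print(name, effects)
```

With these made-up values, only the profile with slowed frame retrieval shows Frame-only priming, only the profile with slowed segment retrieval shows Segment-only priming, and both impaired profiles show larger Frame+Segment priming than the unimpaired profile.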

We tested these hypotheses in four speakers with AOS and varying degrees of aphasia and two speakers with aphasia without AOS. The speakers with aphasia without AOS were included to explore whether any abnormal priming patterns in speakers with AOS are specific to AOS. Speakers with aphasia often produce phonological paraphasias, and thus may also show abnormal priming patterns, though the type of phonological disruption may differ. Given the absence of prosodic errors in fluent aphasia (Odell et al., 1991), the most likely locus of difficulties is the retrieval or prosodification of segments, potentially resulting in pure segment priming.

METHODS

PARTICIPANTS

All participants were monolingual native English speakers, and included four speakers with AOS and varying degrees of aphasia, two speakers with aphasia without AOS, and 13 age-matched control speakers (see Table 1). Three additional participants were recruited but excluded (one with AOS and one with aphasia because they suffered a second stroke before completion of testing; one with AOS due to severe AOS and anomia). Three additional control speakers were recruited but excluded (one due to bilingualism, two due to computer error).

Table 1.

Information for participants with AOS and/or aphasia.

| | AOS: 200_Tuc | AOS: 201_Tuc | AOS: 802_Syd | AOS: 803_Syd | Aphasia: 300_Tuc | Aphasia: 901_Syd | Controls (N=13) |
|---|---|---|---|---|---|---|---|
| Age | 58 | 68 | 48 | 62 | 59 | 49 | 60 (8) |
| Sex | M | M | M | F | M | F | 6F, 7M |
| Hand | R | R | R | R | R | R | 11R, 2L |
| Education (yrs) | 12 | 22 | 15 | 12 | 16 | 12 | 17 (3) |
| Language | AmE | AmE | AusE | AusE | AmE | AusE | 10 AusE; 3 AmE |
| Hearing 1 | pass | pass | pass | pass | pass | pass | pass |
| Time post (y;m) | 4;6 | 6;9 | 1;0 | 5;11 | 13;0 | 5;5 | - |
| Etiology | LH-CVA | LH-CVA | LH-CVA | LH-CVA | LH-CVA | LH-CVA | - |
| Lesion | B; I; PreC | n/a | FTP | n/a | B; I; AG; SMG; MTG; STG (W); TP | LMCA territory | - |
| Aphasia type 2 | WNL | Anomic | Broca’s | Anomic | Wernicke’s | Broca’s | - |
| WAB AQ 2 | 94.2/100 | 93.2/100 | 41.6/100 | 88.1/100 | 74.9/100 | 56.5/100 | - |
| AOS severity 3 | mild-mod | mild | mod | mod | none | none | - |
| Dysarthria 4 | none | mild | none | none | none | none | - |
| Oral apraxia 3 | mild | mild | mod | mild | mild | mild-mod | - |
| Limb apraxia 3 | none | mild | mild | mild | none | mild | - |

AmE = American English; AusE = Australian English; BE = British English; LH = Left hemisphere; RH = Right hemisphere; CVA = cerebro-vascular accident; n/a = not available; WNL = within normal limits; B = Broca’s area; I = Insula; PreC = Precentral gyrus; FTP = fronto-temporo-parietal; FP = Frontoparietal; PL = Parietal; FT = Fronto-temporal; PT = Posterior & middle Temporal lobe; IO = Inferior Occipital lobe; T = Temporal lobe; PF= Posterior Frontal lobe; O = Occipital lobe; AG = Angular gyrus; SMG = Supramarginal gyrus; MTG = Middle temporal gyrus; STG = superior temporal gyrus; W = Wernicke’s area; TP = temporal pole.

1 Pure tone hearing screening at 500, 1000, 2000, and 4000 Hz; pass at 45 dB level for better ear.

2 Based on WAB-R (Kertesz, 2006).

3 Based on ABA-2 (Dabul, 2000) and clinical judgment.

4 Dysarthrias were diagnosed perceptually based on a motor speech exam (Duffy, 2005) and were all of the unilateral upper motor neuron type.

Referral diagnosis relative to presence/absence of AOS was independently confirmed by a certified speech-language pathologist or a clinical researcher with approximately 10 years of experience in diagnosing AOS (first author), based on presence of the following perceptual features observed in structured and informal speaking contexts (Wambaugh et al., 2006): slow speech rate with lengthened segment durations and segmentation, sound distortions and distorted substitutions, errors relatively consistent in type and location in the utterance, and abnormal prosody. Normal rate and normal prosody were exclusionary criteria (Wambaugh et al., 2006). All participants were administered the Western Aphasia Battery–Revised (WAB-R; Kertesz, 2006) and (portions of) the Apraxia Battery for Adults–Second edition (ABA-2; Dabul, 2000). These tests provided a variety of speech tasks and samples, which provided the basis for the diagnosis of AOS.2

To further increase confidence in the diagnoses, two additional experienced speech-language pathologists independently rated the presence/absence of AOS in each participant from audio- and video-recordings of the assessment. Raters demonstrated unanimous agreement on the presence/absence of AOS in all but two cases; in both cases (200_Tuc, 300_Tuc; see below), one rater indicated “possible AOS”. Given that agreement among experienced clinicians is not always high (Haley et al., 2012), the observed agreement among clinicians strengthens the confidence in the diagnostic classification of our sample.

Participants were recruited and tested at the University of Sydney or the University of Arizona and received monetary compensation. All participants gave informed consent, and all procedures were approved by the University of Arizona Institutional Review Board and the University of Sydney Human Research Ethics Committee.

Participants with AOS

Participant 802_Syd was a 48-year-old right-handed man who was 1.0 year post onset of a left-hemisphere middle cerebral artery stroke. MRI scans indicated a lesion involving left frontal, parietal, and temporal regions, including left inferior frontal gyrus, premotor cortex, postcentral gyrus, superior temporal gyrus, and insula. He had completed fifteen years of education and was a self-employed pattern-maker in construction until his stroke. He had no history of speech or language disorders and no neurological history other than his stroke. His AOS was judged to be of moderate severity and was characterized mainly by markedly slow speech rate with speech limited to effortful one- to two-word utterances, syllable segmentation, speech sound distortions, and equal stress on multisyllabic words. He also had mild limb apraxia and moderate oral apraxia, but no evidence of dysarthria. He reported mild weakness in his right arm but continued to use it for writing, walked unassisted, and had returned to driving. He also had a moderate-severe Broca’s aphasia (AQ = 41.6), based on the WAB-R. Testing revealed mild-moderately impaired auditory comprehension, moderately impaired naming, and severely impaired repetition and spontaneous speech output.

Participant 803_Syd was a 62-year-old right-handed woman who was almost 6 years post onset of a left-hemisphere stroke in the middle cerebral artery territory, subsequent to myocardial infarction (total occlusion of right coronary artery requiring coronary artery stent). No detailed lesion information was available. She had completed high school and was a factory worker before her stroke. She was diagnosed with moderate AOS, with reduced speech rate, syllable segmentation, speech sound distortions, and equal stress on multisyllabic words. Her primary complaint was her speech, with language remaining comparatively intact (AQ = 88.1, anomic aphasia) and characterized mainly by occasional word finding difficulties (9.6/10) and mildly impaired repetition (7.6/10). Auditory comprehension was within normal limits on the WAB-R (9.85/10). She demonstrated mild oral and limb apraxias, with no evidence of dysarthria. She experienced right-sided weakness, with reduced use of her right arm, but was able to walk unassisted.

Participant 200_Tuc was a 58-year-old right-handed man who was 4.5 years post onset of a left-hemisphere stroke in the middle cerebral artery territory. He had completed high school and worked as a store clerk before and after his stroke. He had no history of speech or language disorders and no neurological history other than his stroke. MRI scans revealed lesions in Broca’s area, anterior insula, and precentral gyrus. His primary complaint was his speech, whereas his language was relatively intact, characterized mainly by occasional word finding difficulties. Standardized testing using the WAB-R confirmed this impression (AQ = 94.2; within normal limits). His AOS was considered mild-to-moderate, and characterized primarily by reduced speech rate, syllable segmentation, and speech sound distortions. One of the three clinicians rated his AOS as “possible AOS”; we classified him in the AOS group based on the agreement between the remaining two clinicians and the referring SLP. He also had a very mild unilateral upper motor neuron dysarthria with a mildly breathy voice, a mild oral apraxia, and weakness in his right arm, but no limb apraxia.

Participant 201_Tuc was a 68-year-old right-handed man who suffered a left-hemisphere stroke in the middle cerebral artery territory more than 6 years before the study. He also reported a concussion 6 years before his stroke, with no lasting consequences. No lesion information was available. He had a Master’s degree and finished several years of a doctoral program. His occupation was computer/IT specialist prior to his stroke. The WAB-R revealed a mild anomic aphasia (AQ = 93.2), characterized by occasional word finding problems and some difficulties with sequential commands. His AOS was relatively mild, and characterized mainly by slow speech rate, syllable segmentation, and speech sound distortions, especially on longer words. He also had a mild limb and oral apraxia, and he exhibited right-sided facial weakness, consistent with a mild unilateral upper motor neuron dysarthria. His right arm also displayed some weakness.

Participants with aphasia without AOS

Participant 901_Syd was a 49-year-old right-handed woman who was almost 5.5 years post onset of a left-hemisphere middle cerebral artery aneurysm. A clinical scan 5 years post-onset showed dilatation of the left lateral ventricle with advanced atrophy of the LMCA territory. She had completed high school and was a homemaker before her stroke. Her speech output was characterized by marked word retrieval difficulty with spontaneous speech restricted to single words and short phrases. Speech errors tended to be phonemic paraphasias, with intact word level prosody and minimal evidence of speech sound distortions. The WAB-R revealed a moderate Broca’s aphasia (AQ = 56.5). There was no evidence for unilateral upper motor neuron dysarthria, but her scores on the ABA-2 suggested moderate oral apraxia and mild limb apraxia. She demonstrated a right hemiparesis and was able to walk with a single point cane.

Participant 300_Tuc was a 59-year-old right-handed man, who was 13 years post onset of a single left hemisphere infarct in the middle cerebral artery territory. He reported two incidents in which he lost consciousness briefly when he was ten years old. MRI scans indicated a large lesion involving the inferior frontal lobe (including Broca’s area and insula), inferior parietal lobule (including angular gyrus and supramarginal gyrus), part of the superior parietal lobule, and superior and middle temporal gyri (including Wernicke’s area) extending anteriorly to the temporal pole. The lesion extended subcortically to the left lateral ventricle, with preserved white matter immediately surrounding the ventricle. He had a Bachelor’s degree and worked as a computer/IT specialist before his stroke. His speech output was fluent and characterized primarily by semantic paraphasias, empty speech and circumlocution, and occasional phonological paraphasias. The WAB-R revealed an aphasia on the border between conduction aphasia and Wernicke’s aphasia; his comprehension score placed him in the mild-moderate Wernicke’s aphasia range (AQ = 74.9). He produced occasional speech sound distortions and displayed some articulatory groping and self-corrections on longer, more complex words, which led to one of the three raters indicating possible AOS. This participant is classified as no-AOS based on his relatively normal rate and prosody (Wambaugh et al., 2006). There was no evidence for unilateral upper motor neuron dysarthria or limb apraxia, but he did have a mild oral apraxia. Function in his upper and lower extremities was unimpaired.

MATERIALS

Materials included 27 color line-drawings (some adapted from Snodgrass & Vanderwart, 1980, some custom-drawn in similar style), which were arranged into nine homogeneous sets: three sets of three words for each of three Overlap conditions (Frame-only, Segment-only, Frame+Segment). The same 27 pictures were recombined into nine heterogeneous sets of three words in which neither frame nor initial segment was shared (see Table 2); these heterogeneous sets served as a baseline condition for comparison with the homogeneous sets. Obvious semantic or associative relations among words within sets were avoided.

Table 2.

Overview of materials and conditions. Odd set numbers are homogeneous sets; even set numbers are heterogeneous sets. Each set was presented in a separate block of trials.

| Overlap | Set | Homogeneous context | Set | Heterogeneous context |
|---|---|---|---|---|
| Frame-only | 1 | balcony, coconut, signature | 2 | balcony, carrot, sock |
| | 3 | bucket, carrot, singer | 4 | bucket, coat, signature |
| | 5 | bomb, coat, sock | 6 | bomb, coconut, singer |
| Segment-only | 7 | barbecue, beaver, boat | 8 | barbecue, soccer, cage |
| | 9 | seventy, soccer, sun | 10 | seventy, castle, boat |
| | 11 | carpenter, castle, cage | 12 | carpenter, beaver, sun |
| Frame + Segment | 13 | boomerang, butterfly, bicycle | 14 | boomerang, sausage, cat |
| | 15 | saddle, sausage, sailor | 16 | saddle, cake, butterfly |
| | 17 | comb, cake, cat | 18 | comb, bicycle, sailor |

Materials were selected to meet several criteria. First, words had to be pictureable to enable elicitation without an auditory model or written cues. Second, the Frame-only condition required words with shared metrical frames (defined here in terms of number of syllables) but different initial consonants (e.g., balcony-coconut-signature), and the other conditions required words with shared initial segments (e.g., carpenter-castle-cage; boomerang-butterfly-bicycle). All conditions required different initial consonants (and frames) in the heterogeneous (baseline) conditions; given that heterogeneous sets were created by re-pairing words from homogeneous sets, the three homogeneous Segment-only and Frame+Segment sets each included different shared onset consonants (see Table 2). To increase comparability, all three Overlap conditions included the same three initial consonants. Third, to minimize any potential for articulatory preparation in heterogeneous conditions (and both Frame-only conditions), initial sounds differed in place and manner of articulation (/b/, /s/, /k/). Fourth, to facilitate precise and reliable measurement of reaction times (RT), we selected items with relatively clear acoustically-defined onsets. Plosives (/b/, /k/) and the sibilant /s/ have clear onsets that can be measured reliably (supported by our reliability observations; see below). Finally, the Overlap conditions were matched (all ps > 0.12) on psycholinguistic variables that could influence RT, including word length, word frequency, phonotactic probability, neighborhood density, and syllable frequency (see Appendix A and Supplementary Materials).
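The set-construction constraints described above can be checked mechanically against Table 2. The following is a minimal sketch, not part of the study's procedures: syllable counts were entered by hand for illustration, the `onset` helper relies on the fact that every word in this particular list spelled with initial "c" begins with /k/, and all function names are hypothetical.

```python
# Hand-entered syllable counts for the 27 target words (assumption of this sketch).
SYLLABLES = {
    "balcony": 3, "coconut": 3, "signature": 3, "bucket": 2, "carrot": 2,
    "singer": 2, "bomb": 1, "coat": 1, "sock": 1, "barbecue": 3, "beaver": 2,
    "boat": 1, "seventy": 3, "soccer": 2, "sun": 1, "carpenter": 3,
    "castle": 2, "cage": 1, "boomerang": 3, "butterfly": 3, "bicycle": 3,
    "saddle": 2, "sausage": 2, "sailor": 2, "comb": 1, "cake": 1, "cat": 1,
}
HOMOGENEOUS = {
    "Frame-only": [["balcony", "coconut", "signature"],
                   ["bucket", "carrot", "singer"], ["bomb", "coat", "sock"]],
    "Segment-only": [["barbecue", "beaver", "boat"],
                     ["seventy", "soccer", "sun"], ["carpenter", "castle", "cage"]],
    "Frame+Segment": [["boomerang", "butterfly", "bicycle"],
                      ["saddle", "sausage", "sailor"], ["comb", "cake", "cat"]],
}
HETEROGENEOUS = [
    ["balcony", "carrot", "sock"], ["bucket", "coat", "signature"],
    ["bomb", "coconut", "singer"], ["barbecue", "soccer", "cage"],
    ["seventy", "castle", "boat"], ["carpenter", "beaver", "sun"],
    ["boomerang", "sausage", "cat"], ["saddle", "cake", "butterfly"],
    ["comb", "bicycle", "sailor"],
]

def onset(word):
    """Initial consonant; valid only for this word list, where initial 'c' is /k/."""
    return "k" if word.startswith("c") else word[0]

def frame(word):
    """Metrical frame, defined here as number of syllables."""
    return SYLLABLES[word]

def shared(words, prop):
    return len({prop(w) for w in words}) == 1

def distinct(words, prop):
    return len({prop(w) for w in words}) == len(words)

def check_materials():
    """Return a list of sets that violate the design constraints (empty if none)."""
    problems = []
    expected = {"Frame-only": (True, False), "Segment-only": (False, True),
                "Frame+Segment": (True, True)}
    for overlap, sets in HOMOGENEOUS.items():
        want_frame, want_onset = expected[overlap]
        for words in sets:
            frame_ok = shared(words, frame) if want_frame else distinct(words, frame)
            onset_ok = shared(words, onset) if want_onset else distinct(words, onset)
            if not (frame_ok and onset_ok):
                problems.append((overlap, words))
    for words in HETEROGENEOUS:
        if not (distinct(words, frame) and distinct(words, onset)):
            problems.append(("Heterogeneous", words))
    hom_words = sorted(w for sets in HOMOGENEOUS.values() for s in sets for w in s)
    het_words = sorted(w for s in HETEROGENEOUS for w in s)
    if hom_words != het_words or len(set(hom_words)) != 27:
        problems.append(("Recombination", hom_words))
    return problems

print(check_materials())
```

The check treats a homogeneous set as valid when the property that should be shared is identical across its three words and the other property differs across all three, and it confirms that each of the 27 words occurs once in each context.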

TASK & PROCEDURES

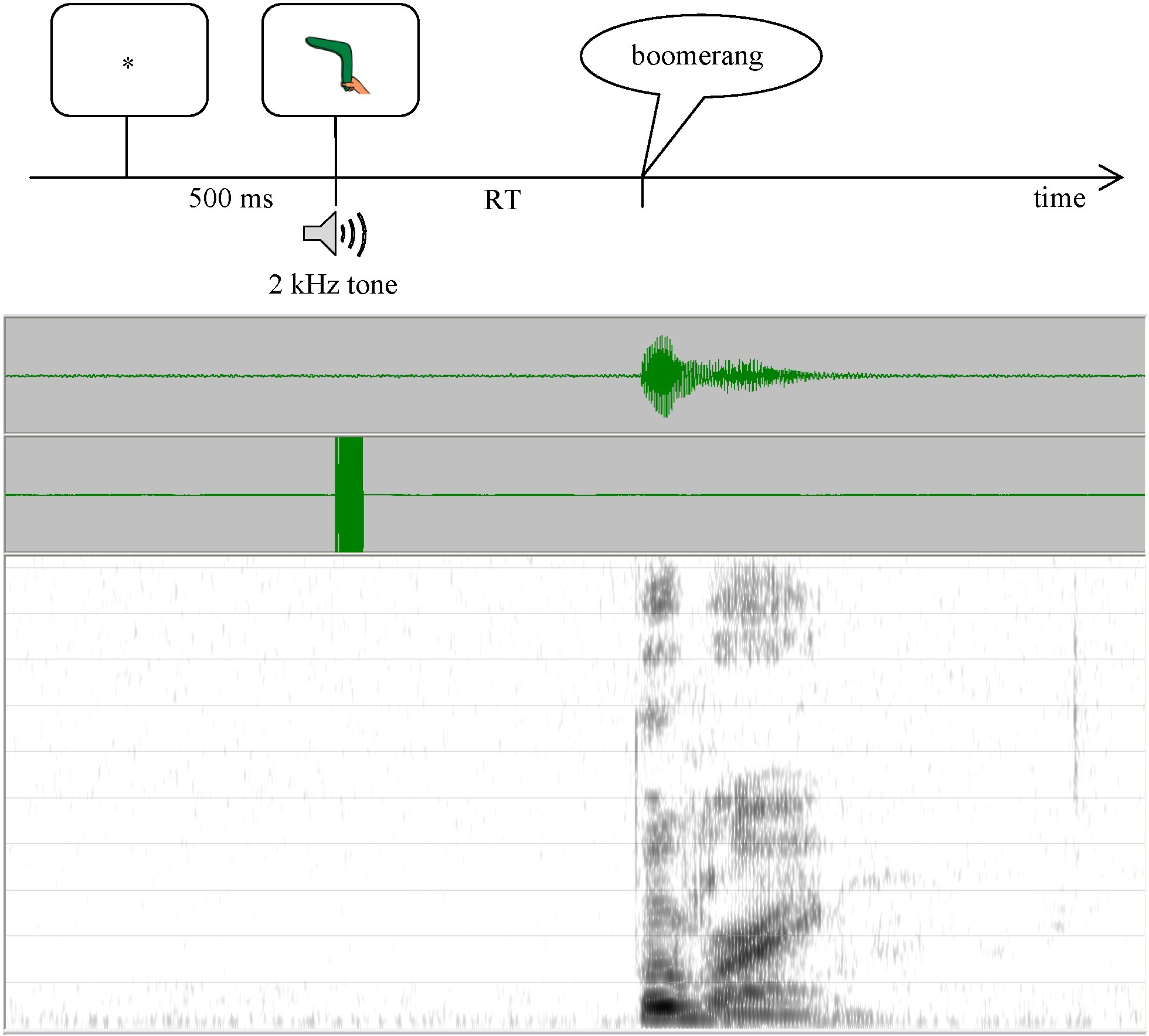

This experiment used a picture-naming task controlled by E-Prime software (v.2; Psychology Software Tools, Inc.); sessions were audio-recorded for analysis. Each trial started with an asterisk (500 ms), followed by a picture accompanied by a 2-kHz tone (to enable acoustic RT measurement; see Footnote 3). Participants were asked to name the picture as quickly as possible. After each response, the experimenter judged response accuracy. Incorrect responses (wrong word, self-corrections, RT > 5 s) were rerun at the end of each block (Maas et al., 2008a) until 6 correct responses were collected for each target, or until each target had been presented 10 times (i.e. between 18 and 30 trials per block). Within blocks, pictures were presented in pseudo-randomized order (no more than two immediate repetitions).
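The trial-ordering and rerun rules described above can be illustrated with a minimal Python sketch. This is not the E-Prime script used in the study; the function names, the rejection-sampling approach, and the reading of "no more than two immediate repetitions" as a maximum run of three identical pictures are all assumptions made for illustration.

```python
import random


def longest_run(seq):
    """Length of the longest run of identical consecutive items."""
    best = run = 0
    prev = object()  # sentinel that never equals a real item
    for item in seq:
        run = run + 1 if item == prev else 1
        best = max(best, run)
        prev = item
    return best


def pseudo_randomize(targets, n_per_target=6, max_repeats=2, seed=None, max_tries=10_000):
    """Shuffle trials until no picture immediately repeats more than max_repeats times.

    Assumption: "two immediate repetitions" allows a run of three identical pictures.
    Set max_repeats=1 for the stricter reading (at most two in a row).
    """
    rng = random.Random(seed)
    trials = [t for t in targets for _ in range(n_per_target)]
    for _ in range(max_tries):
        rng.shuffle(trials)
        if longest_run(trials) <= max_repeats + 1:
            return list(trials)
    raise RuntimeError("no valid order found")


def needs_another_trial(n_correct, n_presented, target_correct=6, max_presentations=10):
    """Rerun rule: keep presenting a target until 6 correct responses or 10 presentations."""
    return n_correct < target_correct and n_presented < max_presentations


order = pseudo_randomize(["boomerang", "butterfly", "bicycle"], seed=1)
```

With three targets per block, the rerun rule yields the stated range of 18 trials (3 x 6, no errors) to 30 trials (3 x 10) per block.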

The experiment included 18 sets, each presented in a separate block (9 homogeneous, 9 heterogeneous; see Table 2). Before each block, all three pictures in that block were shown with the words written underneath. The experimenter read the words and the participant named the pictures. Next, the written words disappeared, and participants named the pictures again. These procedures were intended to familiarize participants with the targets and minimize lexical retrieval difficulties. Once participants had named the pictures correctly, the block started. Participants were tested individually in a quiet room and were offered breaks between blocks.

DESIGN & ANALYSIS

Order of overlap conditions was counterbalanced across participants following a Latin Square design. Within each overlap condition, half of the participants received the homogeneous before the heterogeneous context; the other half received the reverse order. The order of the three sets within each condition was randomized for each participant. Thus, each participant repeatedly saw alternating runs of three homogeneous and three heterogeneous sets, starting with either homogeneous or heterogeneous runs.
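The counterbalancing scheme can be sketched as follows. The cyclic Latin square and the rule mapping a participant index to a row and a context order are hypothetical; the text specifies only that overlap order followed a Latin Square and that half of the participants started with the homogeneous context.

```python
OVERLAPS = ["Frame-only", "Segment-only", "Frame+Segment"]


def latin_square(conditions):
    """Cyclic Latin square: each condition occupies each serial position once."""
    n = len(conditions)
    return [[conditions[(row + col) % n] for col in range(n)] for row in range(n)]


def run_order(participant_index):
    """Return the six (overlap, context) runs for one participant.

    Assumption: the participant index selects the Latin-square row, and
    context order alternates after every full cycle through the rows.
    """
    rows = latin_square(OVERLAPS)
    overlap_order = rows[participant_index % len(rows)]
    hom_first = (participant_index // len(rows)) % 2 == 0
    contexts = ("hom", "het") if hom_first else ("het", "hom")
    return [(overlap, context) for overlap in overlap_order for context in contexts]


plan = run_order(0)
```

Each run in the plan corresponds to three sets (blocks), whose order would additionally be randomized per participant, giving the alternating runs of three homogeneous and three heterogeneous sets described above.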

The dependent variable was mean reaction time (RT) for correct responses (see Footnote 4), measured from the acoustic record using TF32 (Milenkovic, 2000) (see Figure 3). We measured RT acoustically because voice-key measures may be unreliable and/or invalid due to lip smacks, extraneous sounds, soft-spoken responses, etc. (Rastle & Davis, 2002); our findings also indicated unacceptably low correlations between voice-key measures and gold-standard hand-measured RTs (r = 0.4287 for controls; r = 0.4886 for AOS; and r = 0.8022 for APH). Inter-rater reliability of RT measurement based on double-scoring of 4% of data was high (r = 0.9925; mean absolute difference = 7.7 ms).

Figure 3.

Overview of the picture naming task (top) and associated waveform and spectrogram used in RT measurement (bottom).

Only correct responses were included in the RT analyses. RTs shorter than 150 ms or longer than 1500 ms were considered outliers and removed from data analysis. Data points more than 3 standard deviations from a participant’s or item’s overall mean were replaced with the overall participant or item mean. All participants had at least 28/54 correct trials per condition (minimum 52/54 for controls; minimum 45/54 for participants with aphasia and/or AOS except 901_Syd; see Supplementary Materials).
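The two-step outlier procedure can be sketched in Python for a single participant (or item). Several details are assumptions not stated in the text: the mean and SD are computed after the absolute cutoffs are applied, the sample SD is used, and the replacement value is the mean computed before any replacement.

```python
from statistics import mean, stdev


def clean_rts(rts, low=150, high=1500, sd_cutoff=3.0):
    """Drop RTs outside [low, high] ms, then replace values beyond sd_cutoff SDs with the mean."""
    kept = [rt for rt in rts if low <= rt <= high]
    if len(kept) < 2:
        return kept  # SD is undefined for fewer than two values
    m, sd = mean(kept), stdev(kept)
    return [m if abs(rt - m) > sd_cutoff * sd else rt for rt in kept]


# Hypothetical RTs (ms): 20 typical responses, one slow response, two absolute outliers.
raw = [600] * 20 + [1400, 100, 1600]
cleaned = clean_rts(raw)
```

In this made-up example the 100 ms and 1600 ms values are removed outright, and the 1400 ms value, which lies more than 3 SDs above the mean of the remaining trials, is replaced by that mean.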

Given the unequal sample sizes, separate group analyses were conducted for the control group and the AOS group. Group data were analyzed with 3 (Overlap) x 2 (Context) repeated measures ANOVAs by-participants (F1) and by-items (F2), with Tukey post-hoc tests. Analyses were based on the means of the item (participant) means in each condition. The variance was similar between conditions in each group, and data were normally distributed within conditions for each group. Data from participants with AOS and/or aphasia were further analyzed individually to determine whether overall RTs differed from the control group in each condition (using SingleBayes_ES.exe; http://www.abdn.ac.uk/~psy086/dept/; Crawford & Garthwaite, 2007) and to test for disproportionately large or small priming effects (using DiffBayes_ES.exe; http://www.abdn.ac.uk/~psy086/dept/; Crawford, Garthwaite, & Porter, 2010). Results tables below report significant effects and trends (2-tailed); exact p-values and normalized effect size estimates with credible intervals are provided in the Supplementary Materials. Alpha level was 0.05 for all statistical analyses (see Footnote 5).

Predictions were as follows. First, control speakers will show priming only in the Frame+Segment condition (Roelofs & Meyer, 1998). Second, if individuals with AOS have difficulties retrieving both segmental and frame information, then they should show longer overall RT and disproportionate priming effects in the Frame+Segment condition. The Frame Hypothesis predicts priming in both the Frame+Segment and Frame-only conditions but not in the Segment-only condition. Conversely, the Segment Hypothesis predicts priming in the Frame+Segment and Segment-only conditions, but not in the Frame-only condition. If speakers with AOS have no difficulty at the phonological encoding stage, then we expect normal priming patterns (though overall RT may be longer than in controls, due to speech motor planning difficulties; Maas et al., 2008a). Finally, speakers with aphasia without AOS may show priming in the Frame+Segment and Segment-only conditions, if segment retrieval is the locus of their speech sound difficulties.

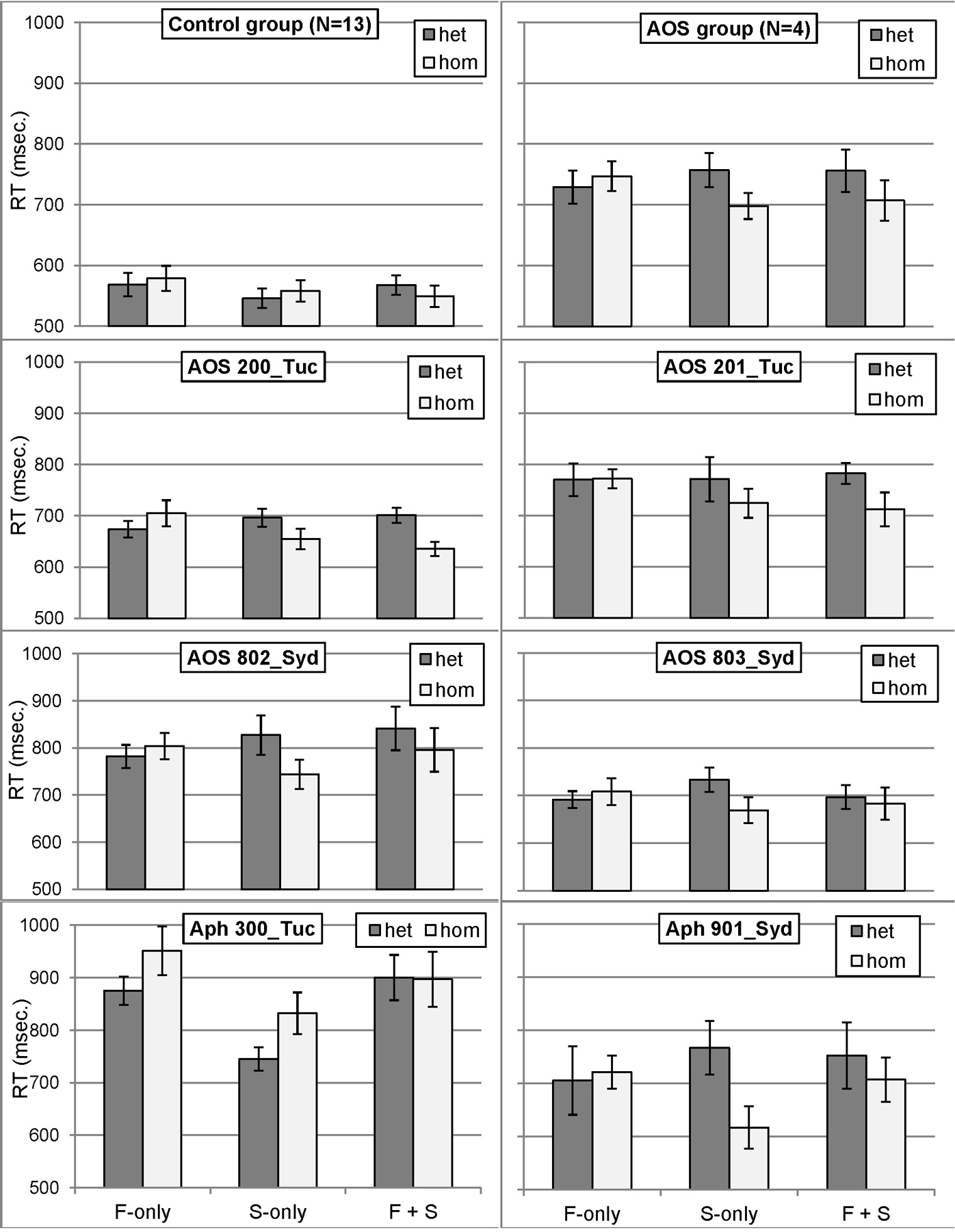

RESULTS

RT data are presented in Figure 4 and Table 3. For control speakers, analysis of mean RT showed no effect of Overlap (F1[2,24] = 3.06, MSE = 3,128, p = 0.0657; F2 < 1, n.s.) or Context (Fs < 1, n.s.). Critically, there was a significant Overlap x Context interaction (F1[2,24] = 11.41, MSE = 1,939, p = 0.0003; F2[2,24] = 3.93, MSE = 1,191, p = 0.0333). Follow-up Tukey tests indicated that RTs were faster in the Frame+Segment homogeneous condition (550 ms) than in the corresponding heterogeneous condition (568 ms; p = 0.0167 by-participants; p = 0.3690 by-items); there were no Context effects for the Frame-only and Segment-only conditions.

Figure 4.

RT means by condition for the control group, the AOS group, and individual participants with AOS and/or aphasia. Error bars represent standard error. F-only = Frame-only; S-only = Segment-only; F + S = Frame + Segment; het = heterogeneous; hom = homogeneous.

Table 3.

Reaction time (RT) by condition for controls and for individual participants with AOS and/or aphasia.

| Group | Participant | Overlap | Context: Het | Context: Hom | Difference |
|---|---|---|---|---|---|
| Controls | (N=13) | Frame | 568 (70) | 579 (75) | −10 (26) |
| | | Segment | 546 (58) | 558 (64) | −13 (20) |
| | | Both | 568 (57) | 550 (64) | 18 (28) |
| AOS | (N=4) | Frame | 729 (54) | 747 (49) | −18 (12) |
| | | Segment | 757 (56) | 698 (43) | 59 (19) |
| | | Both | 756 (70) | 707 (67) | 49 (26) |
| AOS | 200_Tuc | Frame | 674 (48) | 705 (76) | −31 |
| | | Segment | 696 (53) | 655 (59) | 41 |
| | | Both | 701 (44) | 636 (42) | 65 |
| | 201_Tuc | Frame | 770 (95) | 772 (56) | −2 |
| | | Segment | 771 (131) | 724 (85) | 47 |
| | | Both | 783 (62) | 713 (99) | 70 |
| | 802_Syd | Frame | 782 (74) | 804 (84) | −22 |
| | | Segment | 827 (126) | 744 (94) | 83 |
| | | Both | 841 (139) | 796 (139) | 45 |
| | 803_Syd | Frame | 692 (53) | 708 (84) | −16 |
| | | Segment | 733 (78) | 669 (82) | 64 |
| | | Both | 697 (75) | 683 (102) | 14 |
| Aphasia | 300_Tuc | Frame | 875 (81) | 951 (139) | −76 |
| | | Segment | 745 (67) | 832 (119) | −87 |
| | | Both | 900 (129) | 897 (157) | 3 |
| | 901_Syd | Frame | 705 (194) | 721 (93) | −16 |
| | | Segment | 767 (143) | 616 (113) | 151 |
| | | Both | 752 (165) | 707 (118) | 45 |

Values for groups represent the mean (SD). Differences between individual participants with AOS and/or aphasia and controls are based on Crawford and Garthwaite’s (2007) Single Bayesian comparison method for single conditions and Bayesian Standardized Difference method for difference scores. Significant effects (p < 0.05) are indicated in bold, trends (p < 0.10) in italics. Het = heterogeneous; Hom = homogeneous.
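As a worked check of the Difference column, the priming effect in each Overlap condition is the heterogeneous mean minus the homogeneous mean, so positive values indicate faster naming in homogeneous sets. The short Python sketch below applies this to the AOS group means reported in Table 3; the variable and function names are illustrative only.

```python
# AOS group means (ms) from Table 3, as (heterogeneous, homogeneous) pairs.
aos_group_means = {
    "Frame": (729, 747),
    "Segment": (757, 698),
    "Both": (756, 707),
}


def priming_effect(het, hom):
    """Positive values mean faster RT in the homogeneous context (priming)."""
    return het - hom


effects = {overlap: priming_effect(het, hom) for overlap, (het, hom) in aos_group_means.items()}
# effects == {"Frame": -18, "Segment": 59, "Both": 49}
```

For other rows, differences recomputed from the rounded means can deviate from the tabled value by about 1 ms (e.g., 568 − 579 = −11 versus the reported −10 for controls in the Frame condition), presumably because the tabled differences were computed before rounding.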

Speakers with AOS revealed a main effect of Context (F1[1,3] = 49.56, MSE = 5,366, p = 0.0059; F2[1,24] = 10.81, MSE = 14,707, p = 0.0031), indicating shorter RT in the homogeneous condition (717 ms) than in the heterogeneous condition (747 ms). The Overlap effect was not significant (Fs < 1, n.s.). Importantly however, the Context x Overlap interaction was significant (F1[2,6] = 14.52, MSE = 3,484, p = 0.0050; F2[2,24] = 7.24, MSE = 9,853, p = 0.0035). Follow-up tests showed that RT in homogeneous sets was faster than in heterogeneous sets for the Frame+Segment condition (707 vs. 756 ms; p = 0.0308 by-participants, p = 0.0157 by-items) and the Segment-only condition (698 vs. 757 ms; p = 0.0126 by-participants, p = 0.0323 by-items), but not for the Frame-only condition (747 vs. 729 ms; p = 0.6074 by-participants, p = 0.8308 by-items).

Individual analyses indicated that all six participants with AOS and/or aphasia revealed longer RT in at least two conditions, compared to controls. Importantly, individual analyses of the speakers with AOS supported the group analysis: all four demonstrated a significantly reversed Context effect for the Segment-only condition (i.e. segment priming), compared to the control group. Two of the participants with AOS (200_Tuc, 201_Tuc) also revealed a trend for larger priming in the Frame+Segment condition. One of the two speakers with aphasia without AOS also demonstrated segment priming (901_Syd), whereas the other speaker (300_Tuc) showed a trend in the other direction (faster RT in heterogeneous than in homogeneous sets).

DISCUSSION

This study used the preparation priming task to investigate the integrity of phonological encoding in four speakers with AOS and two speakers with aphasia without AOS. Results revealed the predicted priming pattern for control speakers (priming only when both frame and initial segment were shared). To the best of our knowledge, this represents the first replication of this important finding (Roelofs & Meyer, 1998), in a within-participant design and based on acoustically-measured RTs rather than voice-key measures. Since our control speakers were older adults, these findings further imply that the relative speed of each retrieval process is unaffected by normal aging, even if retrieval of phonological information overall is delayed in older adults (Neumann, Obler, Gomes, & Shafer, 2009). These findings are consistent with current models of phonological encoding (Levelt et al., 1999), and support a phonological interpretation of the priming effect in the present study.

In contrast, a distinct pattern emerged in the RT data for speakers with AOS: All speakers with AOS (including two with no/minimal aphasia based on clinical evaluation) demonstrated disproportionate priming effects in the Segment-only condition, compared to controls. This pattern was predicted by the Segment Hypothesis, and suggests that retrieval of segments was slower than retrieval of frames, unlike in control speakers. This finding provides positive experimental evidence that phonological encoding impairments may be present in speakers with AOS.

We argued above for a phonological interpretation of the empirical findings in the literature and in the present study, and although parsimony suggests a phonological interpretation for speakers with AOS as well, it is possible that the task instead reflects speech motor planning in speakers with AOS. For example, one could argue that Segment-only priming arises from a segment-by-segment speech motor planning process (Varley & Whiteside, 2001). However, several observations undermine the plausibility of this view. First, speakers with AOS are sensitive to syllable frequency and syllable boundaries (Aichert & Ziegler, 2004; Laganaro, 2008), suggesting that they can retrieve precompiled motor plans for high-frequency syllables. The initial syllables in our segment-overlap conditions (especially the Segment-only condition) were on average from the high-frequency range, and were thus unlikely to involve segment-by-segment motor planning. Second, speakers with AOS have difficulty producing segments in isolation (Aichert & Ziegler, in press; Kendall, Rodriguez, Rosenbek, Conway, & Gonzalez Rothi, 2006; Schoor, Aichert, & Ziegler, 2012). Third, advance knowledge of mouth shape information (i.e. an articulatory gesture) does not differentially benefit speakers with AOS (Wunderlich & Ziegler, 2011), suggesting that these speakers cannot take advantage of partial (subphonemic) information to position their articulators. For these reasons, the parsimonious interpretation is that the effects in speakers with AOS arise at the same planning stage as in control speakers, namely phonological encoding.

On this view, our findings are consistent with the general hypothesis proposed by Rogers et al. (1999) that activation of phonological information is delayed or protracted in AOS. The present findings go a step further in pinpointing this slow accrual of phonological activation specifically to segmental information.

At least three interpretations of the relation between AOS and phonological encoding deficits can be conceptualized. First, it is possible that phonological encoding deficits (i.e. aphasic impairments) are independent of speech motor planning deficits. On this view, there is no direct relation between the two impairments, and the co-occurrence may be driven by a third variable, such as a lesion affecting adjacent neural regions (cf. Ziegler, 2003), and/or the type or severity of aphasia. However, the effect was present in speakers with different aphasia types and severities, and in at least one speaker (200_Tuc) without aphasia based on a standard aphasia test (see Footnote 6). If the two types of deficit are indeed independent, it should in principle be possible to find individuals with aphasia without AOS who reveal the same pattern, and individuals with AOS who do not reveal such a pattern. While we observed an example of the former, we did not observe the latter in our sample: All participants with AOS in our (admittedly small) sample clearly demonstrated the Segment-only priming pattern. Second, it is possible that phonological encoding deficits play a causal role in, or exacerbate, speech motor planning difficulties (Rogers et al., 1999). Difficulty retrieving the correct segments at the right time may disrupt prosodification, which could in turn slow down activation of speech motor plans. For example, frame activation might decay while segments are being retrieved, potentially forcing reactivation of frames or piecemeal activation of speech motor plans for incomplete phonological words (resulting in segmented speech). It is also conceivable that failure to activate the correct segments at the right time could lead to partial activation of competing speech motor plans (cf. Cholin et al., 2011; Goldrick & Blumstein, 2006), resulting in distortions. Whether segment retrieval difficulties alone can account for primary speech characteristics of AOS or whether such difficulties merely exacerbate an already compromised speech motor planning system remains a question for future study, although the fact that one of our participants with aphasia but without AOS showed the same pattern suggests that segment retrieval difficulties are not sufficient to produce AOS.

Third, speech motor planning disruptions could be the source (as opposed to result) of phonological encoding difficulties. For example, difficulties activating speech motor plans (Mailend & Maas, 2013) may pose a “bottleneck” in the system, imposing a greater demand on the phonological buffer. If information in the buffer decays rapidly, then sustained or repeated activation of phonological information may be necessary, either from the semantic stage (Rogers et al., 1999) or through advance preparation (present study). This view would predict that phonological encoding difficulties are present in all individuals with AOS, as a secondary consequence of the speech motor planning impairment. The present study cannot distinguish between these interpretations of the relation between phonological and speech motor planning deficits; further research is needed to specifically address these alternatives.

An alternative interpretation worth considering is that the pattern of segment priming does not reflect a phonological activation impairment but rather a buffer capacity limit of one syllable (Rogers & Storkel, 1999). In the present study, items in the Segment-only condition shared the first consonant but the frame (number of syllables) differed. If speakers with AOS planned these items in a syllable-by-syllable fashion, then these items would all have the same frame (a single syllable), effectively creating a Frame+Segment condition. While this provides an elegant explanation, a post-hoc analysis revealed a length effect on RT for speakers with AOS and controls (longer RT for longer words). This suggests that the speech plan comprised multiple syllables, although the length effect must be interpreted cautiously because the materials were matched by overlap condition and not by word length. Further, the absence of Frame-only priming suggests that such a buffer capacity limitation is not due to difficulty retrieving frames at the phonological level, but reflects a limitation at the speech motor planning level.

The two participants with aphasia without AOS revealed two different patterns. One (901_Syd) showed Segment-only priming, similar to the AOS group, suggesting that she also had difficulties retrieving segments. This speaker had a Broca’s aphasia and relatively nonfluent speech, but was judged clinically to have no AOS. This finding places an important constraint on the interpretation of our findings: Segmental retrieval difficulties as revealed by abnormal Segment-only priming do not necessarily lead to AOS (i.e. abnormal Segment-only priming is not differentially diagnostic for AOS). The other speaker with aphasia without AOS (300_Tuc) demonstrated longer overall RT (possibly attributable to lexical retrieval difficulties) but his RT pattern resembled that of controls. The absence of differences in pattern from controls does not necessarily mean that phonological encoding was intact in this participant. The phonological paraphasias in his fluent speech do suggest phonological difficulties, but these difficulties were not evident in the RT patterns; advance knowledge of shared segments did not appear to facilitate segment retrieval. In fact, he showed a trend for a disproportionate difference in the other direction (i.e. the pattern observed in controls), suggesting possible interference from producing words that start with the same sound. Further studies are needed to replicate and extend these findings with larger sample sizes in order to explore the potential differential diagnostic value of these patterns.

Following Rogers et al. (1999), we would like to state explicitly that we do not view AOS as a phonological disorder. Rather, our claim is that phonological encoding disruptions may co-exist in AOS, which has potential ramifications for the study of AOS and for theories of AOS and speech motor planning based on performance of speakers with AOS (e.g., Ziegler, 2009).

CLINICAL IMPLICATIONS

The clinical implications of this work must remain speculative, given the limited sample size. With that caveat in mind, several points deserve mention. First, the possible presence of co-existing phonological disruptions in AOS highlights the importance of avoiding “either/or” thinking about neurogenic speech production deficits (Laganaro, 2012). While it is widely acknowledged that AOS often co-occurs with aphasia (including phonological impairment), the evaluation of phonological encoding separately from speech motor planning in people with AOS is complicated (Ziegler, 2002), and rarely addressed explicitly in participant descriptions (e.g., Laganaro, 2008; Schoor et al., 2012; but see Croot et al., 2012; Laganaro, 2012). If replicated in further studies, development of a clinically feasible preparation priming task may be worth exploring to determine whether phonological deficits are present in a given individual with AOS. The inclusion of RT measures may be important, because such measures may provide a window into phonological encoding, which is usually obscured by speech motor planning difficulties and may not be captured by error patterns (Maas & Mailend, 2012).

Second, the presence and type of phonological disruptions may inform the selection and elicitation of treatment targets. It is possible that some speakers with AOS and/or aphasia may benefit from blocked (homogeneous) sets, whereas others may benefit more from randomized (heterogeneous) sets. The motor literature suggests that randomized elicitation benefits retention and transfer of learning (Maas et al., 2008b). The available evidence from the AOS treatment literature is limited but supports this benefit of random compared to blocked practice (Knock, Ballard, Robin, & Schmidt, 2000; Wambaugh, Martinez, McNeil, & Rogers, 1999). For example, Wambaugh et al. (1999) observed overgeneralization of treatment in a speaker with AOS and aphasia when using homogeneous sets (blocked by initial sound, all monosyllabic; e.g., pie, pear, puck), but not when using heterogeneous sets (different initial sounds, all monosyllabic; e.g., pie, car, shell). Wambaugh et al. (1999: 834) urged future studies to “elucidate the role that subject characteristics and treatment parameters play in the generation of the overgeneralization effect.” The presence of phonological encoding deficits may be one such relevant characteristic, and thus the ability to assess phonological encoding independently from speech motor planning deficits is important.

CONCLUSIONS

The present study reported findings from four speakers with AOS and two individuals with aphasia without AOS on a preparation priming task. For unimpaired speakers, our findings represent the first replication of the basic pattern of priming only when both frame and initial segment are shared, in a within-subject design. This cross-validation of our methods provides an interpretive anchor point for the findings of the speakers with AOS, which support the hypothesis that phonological information is slow to activate in individuals with AOS (Rogers et al., 1999). The present study further refines this hypothesis by suggesting that it is the segment retrieval process that is affected. The specific relation between phonological encoding impairments and speech motor planning deficits (whether or how the two are causally related) remains an area for future research. Finally, while these data should be interpreted with caution given the limited sample size, the present findings also underscore the potential added value, theoretically and methodologically, of adopting a perspective on AOS that emphasizes the time course and stages of processing (Maas & Mailend, 2012).

Supplementary Material

Acknowledgements

The authors gratefully acknowledge Carol Bender at the University of Arizona’s Biomedical Research Abroad: Vistas Open! (BRAVO!) program (supported by NIH T37 MD001427), awarded to Keila Gutiérrez. Ballard’s contribution was funded by the National Health and Medical Research Council of Australia (Project No. 632763) and Australian Research Council Future Fellowship (FF120100355). Portions of these data were presented at the 41st Clinical Aphasiology Conference (Research Symposium in Clinical Aphasiology fellowship awarded to Keila Gutiérrez). We also thank Genevieve Dodge, Krista Durr, Allison Koenig, Claire Layfield, Ariel Maglinao, Marja-Liisa Mailend, Audrey Minot, and Faith Ridgway for assistance with data collection and analysis; Janet Hawley for assistance with participant recruitment; Pélagie Beeson, Elizabeth Hoover, and Swathi Kiran for providing background information about participants; and Borna Bonakdarpour for interpretation of MRI scans. Finally, we thank our participants for their time.

APPENDIX A.

Stimulus properties and matching by overlap condition (means and standard deviations). None of the conditions differed significantly from any other (all ps > 0.10). See Supplementary Materials for further item-specific details.

| Variable | Frame + Segment Mean | Frame + Segment (SD) | Frame-only Mean | Frame-only (SD) | Segment-only Mean | Segment-only (SD) |
|---|---|---|---|---|---|---|
| # of phonemes | 4.89 | (1.76) | 4.89 | (1.76) | 4.89 | (1.96) |
| KF Log Freq [a] | 1.7056 | (0.5293) | 1.9005 | (0.3710) | 1.8624 | (0.3574) |
| SUBTLEXUS Word Form [b] | 17.6 | (22.4) | 17.6 | (17.8) | 19.3 | (23.3) |
| SUBTLEXUS Log Freq [c] | 2.672 | (0.539) | 2.786 | (0.390) | 2.723 | (0.520) |
| SUBTLEXUS Diversity [d] | 2.435 | (0.488) | 2.565 | (0.333) | 2.444 | (0.473) |
| Nbors (substitution) [e] | 9.3 | (11.8) | 7.9 | (10.8) | 10.1 | (11.2) |
| Nbor (subst.) LF Mean [f] | 1.01 | (1.03) | 1.18 | (1.09) | 1.21 | (0.98) |
| Nbors (deletion, addition) [g] | 11.2 | (13.5) | 8.7 | (12.0) | 11.9 | (12.8) |
| Nbor (del.+add.) LF Mean [h] | 1.14 | (0.95) | 1.21 | (1.09) | 1.48 | (0.92) |
| Segment prob. average [i] | 0.0551 | (0.0142) | 0.0636 | (0.0104) | 0.0615 | (0.0175) |
| Biphone prob. average [j] | 0.0035 | (0.0023) | 0.0051 | (0.0019) | 0.0053 | (0.0030) |
| First Syll Freq [k] Mean | 793 | (1544) | 292 | (492) | 1406 | (2645) |
| First Syll Freq [k] Median | 245 | | 215 | | 330 | |
| First Syll Freq Rank [l] | 11.9% | (15.0%) | 15.1% | (15.8%) | 10.2% | (15.6%) |

[a] Logarithmic word frequency (Kučera & Francis, 1967)

[b] SUBTLEXUS word form frequency per million words (Brysbaert & New, 2009)

[c] SUBTLEXUS log10 word form frequency (Brysbaert & New, 2009)

[d] SUBTLEXUS log10 frequency of number of different sources (Brysbaert & New, 2009)

[e] Number of neighbors (substitution only) (Nusbaum et al., 1984; http://neighborhoodsearch.wustl.edu/neighborhood/Home.asp)

[f] Logarithmic frequency of substitution neighbors (Nusbaum et al., 1984; http://neighborhoodsearch.wustl.edu/neighborhood/Home.asp)

[g] Number of neighbors (substitution, deletion, addition) (Nusbaum et al., 1984; http://neighborhoodsearch.wustl.edu/neighborhood/Home.asp)

[h] Logarithmic frequency of substitution, deletion, addition neighbors (Nusbaum et al., 1984; http://neighborhoodsearch.wustl.edu/neighborhood/Home.asp)

[i] Average of segment probabilities (Storkel & Hoover, 2010)

[j] Average of biphone probabilities (Storkel & Hoover, 2010)

[k] First syllable frequency per million syllables in first position (Baayen, Piepenbrock, & Gulikers, 1995)

[l] Frequency rank of syllables in initial position, expressed as percentage of total number of word-initial syllables (8,574; Baayen et al., 1995).

Footnotes

1. We use the term “speech planning” generally to refer to the set of processes that transform a selected lexical item into a code that drives the speech articulators. In other words, this term deliberately does not distinguish between more specific stages of processing, and is similar in spirit to Rogers and Storkel’s (1999) PE/MP (phonological encoding through motor programming; cf. Mailend & Maas, 2013). More specific terms are used where intended.

2. Given the relatively small sample of individuals with aphasia without AOS in the standardization sample of the ABA-2 (N=5; Dabul, 2000) and the lack of description of these individuals, the ABA-2 has not been shown to discriminate AOS from phonological paraphasia (e.g., Duffy, 2005; McNeil et al., 2009). As such, we do not rely on the ABA-2 scores for diagnosing AOS.

3. Due to differences in equipment set-up, the tone was audible for the Sydney participants but not for Tucson participants (tone was recorded directly on a separate channel, as shown in Figure 3). Inspection of the data indicates that this difference did not affect the results (i.e. Sydney participants were no faster than Tucson participants). Critically, the go-signal was present in all conditions and therefore is not expected to affect the pattern of results.

4. Error rates were also analyzed but revealed no consistent patterns, except (a) all participants with AOS and/or aphasia produced more errors than controls in at least three of the conditions, and (b) the control group and the AOS group revealed nonsignificant trends for more errors in the homogeneous conditions, a finding not uncommon in the literature (e.g., Meyer, 1990; Roelofs & Meyer, 1998). Due to space limitations, the interested reader is referred to the Supplementary Materials for further details of the error analyses.

5. No adjustments were made for multiple comparisons in the individual analyses, because (a) the tests within each participant were not independent, (b) such adjustment would increase Type II error rate (decrease power), and (c) there are well-known difficulties surrounding the delineation of “family” in “family-wise” adjustment (cf. Tutzauer, 2003). However, we note that the data patterns critical to the purpose of our study (i.e. the comparisons of difference scores, as these relate to abnormal priming patterns) survive a conservative Bonferroni correction in all cases (adjusted alpha = 0.05/3 overlap conditions = 0.0167; see Supplementary Data for precise p-values).

6. By definition, impairments in phonological encoding are considered language impairments, i.e. aphasia. At issue here is whether such phonological encoding impairments co-exist in individuals with AOS (whether or not these individuals are classified as having aphasia on a standardized test), and what the nature of such impairments might be.

REFERENCES

- Aichert I, & Ziegler W (2004). Syllable frequency and syllable structure in apraxia of speech. Brain and Language, 88, 148–159. [DOI] [PubMed] [Google Scholar]

- Aichert I, & Ziegler W (in press). Segments and syllables in the treatment of apraxia of speech: An investigation of learning and transfer effects. Aphasiology, DOI: 10.1080/02687038.2013.80228. [DOI] [Google Scholar]

- Baayen RH, Piepenbrock R, & Gulikers L (1995). The CELEX Lexical Database (CD-ROM). Linguistic Data Consortium, University of Pennsylvania, Philadelphia, PA. [Google Scholar]

- Ballard KJ, Granier JP & Robin DA (2000). Understanding the nature of apraxia of speech: Theory, analysis, and treatment. Aphasiology, 14, 969–995. [Google Scholar]

- Brendel B, & Ziegler W (2008). Effectiveness of metrical pacing in the treatment of apraxia of speech. Aphasiology, 22, 77–102. [Google Scholar]

- Brysbaert M, & New B (2009). Moving beyond Kučera and Francis: A critical evaluation of current word frequency norms and the introduction of a new and improved word frequency measure for American English. Behavior Research Methods, 41, 977–990. [DOI] [PubMed] [Google Scholar]

- Chen J-Y, Chen T-M, & Dell GS (2002). Word-form encoding in Mandarin Chinese as assessed by the implicit priming task. Journal of Memory and Language, 46, 751–781. [Google Scholar]

- Cholin J, Dell GS, & Levelt WJM (2011). Planning and articulation in incremental word production: Syllable-frequency effects in English. Journal of Experimental Psychology: Learning, Memory, and Cognition, 37, 109–122. [DOI] [PubMed] [Google Scholar]

- Cholin J, & Levelt WJM (2009). Effects of syllable preparation and syllable frequency in speech production: Further evidence for syllabic units at a post-lexical level. Language and Cognitive Processes, 24, 662–684. [Google Scholar]

- Cholin J, Schiller NO, & Levelt WJM (2004). The preparation of syllables in speech production. Journal of Memory and Language, 50, 47–61. [Google Scholar]

- Code C (1998). Models, theories, and heuristics in apraxia of speech. Clinical Linguistics & Phonetics, 12, 47–65. [Google Scholar]

- Crawford JR, & Garthwaite PH (2007). Comparison of a single case to a control or normative sample in neuropsychology: Development of a Bayesian approach. Cognitive Neuropsychology, 24, 343–372.

- Crawford JR, Garthwaite PH, & Porter S (2010). Point and interval estimates of effect sizes for the case-controls design in neuropsychology: Rationale, methods, implementations, and proposed reporting standards. Cognitive Neuropsychology, 27, 245–260.

- Croot K, Ballard KJ, Leyton CE, & Hodges JR (2012). Apraxia of speech and phonological errors in the diagnosis of nonfluent/agrammatic and logopenic variants of primary progressive aphasia. Journal of Speech, Language, and Hearing Research, 55, S1562–S1572.

- Dabul B (2000). The Apraxia Battery for Adults (Second Edition). Austin, TX: Pro-Ed, Inc.

- Duffy JR (2005). Motor Speech Disorders: Substrates, Differential Diagnosis, and Management (Second Edition). St. Louis, MO: Mosby-Year Book, Inc.

- Folkins JW, & Bleile KM (1990). Taxonomies in biology, phonetics, phonology, and speech motor control. Journal of Speech and Hearing Disorders, 55, 596–611.

- Goldrick M, & Blumstein SE (2006). Cascading activation from phonological planning to articulatory processes: Evidence from tongue twisters. Language and Cognitive Processes, 21, 649–683.

- Guenther FH, Ghosh SS, & Tourville JA (2006). Neural modeling and imaging of the cortical interactions underlying syllable production. Brain and Language, 96, 280–301.

- Kendall DL, Rodriguez AD, Rosenbek JC, Conway T, & Gonzalez Rothi LJ (2006). Influence of intensive phonomotor rehabilitation on apraxia of speech. Journal of Rehabilitation Research & Development, 43, 409–418.

- Kent RD, & Rosenbek JC (1983). Acoustic patterns of apraxia of speech. Journal of Speech and Hearing Research, 26, 231–249.

- Kertesz A (2006). The Western Aphasia Battery – Revised. San Antonio, TX: Pearson.

- Klapp ST, & Jagacinski RJ (2011). Gestalt principles in the control of motor action. Psychological Bulletin, 137, 443–462.

- Knock TR, Ballard KJ, Robin DA, & Schmidt RA (2000). Influence of order of stimulus presentation on speech motor learning: A principled approach to treatment for apraxia of speech. Aphasiology, 14, 653–668.

- Kučera H, & Francis WN (1967). Computational analysis of present day American English. Providence, RI: Brown University Press.

- Laganaro M (2008). Is there a syllable frequency effect in aphasia or in apraxia of speech or both? Aphasiology, 22, 1191–1200.

- Laganaro M (2012). Patterns of impairments in AOS and mechanisms of interaction between phonological and phonetic encoding. Journal of Speech, Language, and Hearing Research, 55, S1535–S1543.

- Levelt WJM, Roelofs A, & Meyer AS (1999). A theory of lexical access in speech production. Behavioral and Brain Sciences, 22, 1–38.

- Levelt WJM, & Wheeldon L (1994). Do speakers have access to a mental syllabary? Cognition, 50, 239–269.

- Maas E, & Mailend M-L (2012). Speech planning happens before speech execution: On-line reaction time methods in the study of apraxia of speech. Journal of Speech, Language, and Hearing Research, 55, S1523–S1534.

- Maas E, Robin DA, Austermann Hula SN, Freedman SE, Wulf G, Ballard KJ, & Schmidt RA (2008b). Principles of motor learning in treatment of motor speech disorders. American Journal of Speech-Language Pathology, 17, 277–298.

- Maas E, Robin DA, Wright DL, & Ballard KJ (2008a). Motor programming in apraxia of speech. Brain and Language, 106, 107–118.

- Mailend M-L, & Maas E (2013). Speech motor planning in apraxia of speech: Evidence from a delayed picture-word interference task. American Journal of Speech-Language Pathology, 22, S380–S396.

- McNeil MR, Robin DA, & Schmidt RA (2009). Apraxia of speech: Definition and differential diagnosis. In McNeil MR (Ed.), Clinical Management of Sensorimotor Speech Disorders (Second Edition) (pp. 249–268). New York: Thieme.

- Meyer AS (1990). The time course of phonological encoding in language production: The encoding of successive syllables of a word. Journal of Memory and Language, 29, 524–545.

- Meyer AS (1991). The time course of phonological encoding in language production: Phonological encoding inside a syllable. Journal of Memory and Language, 30, 69–89.

- Meyer AS, Roelofs A, & Levelt WJM (2003). Word length effects in object naming: The role of a response criterion. Journal of Memory and Language, 48, 131–147.

- Neumann Y, Obler LK, Gomes H, & Shafer V (2009). Phonological vs sensory contributions to age effects in naming: An electrophysiological study. Aphasiology, 23, 1028–1039.

- Nusbaum HC, Pisoni DB, & Davis CK (1984). Sizing up the Hoosier mental lexicon (Research on Spoken Language Processing Rep No. 10, pp. 357–376). Bloomington, IN: Speech Research Laboratory, Indiana University.

- Odell K, McNeil MR, Rosenbek JC, & Hunter L (1991). Perceptual characteristics of vowel and prosody production in apraxic, aphasic, and dysarthric speakers. Journal of Speech and Hearing Research, 34, 67–80.

- Rastle K, & Davis MH (2002). On the complexities of measuring naming. Journal of Experimental Psychology: Human Perception and Performance, 28, 307–314.

- Roelofs A (1997). Syllabification in speech production: Evaluation of WEAVER. Language and Cognitive Processes, 12, 657–693.

- Roelofs A (1999). Phonological segments and features as planning units in speech production. Language and Cognitive Processes, 14, 173–200.

- Roelofs A, & Meyer AS (1998). Metrical structure in planning the production of spoken words. Journal of Experimental Psychology: Learning, Memory, and Cognition, 24, 922–939.

- Rogers MA, Redmond JJ, & Alarcon NB (1999). Parameters of semantic and phonologic activation in speakers with aphasia with and without apraxia of speech. Aphasiology, 13, 871–886.

- Rogers MA, & Storkel HL (1999). Planning speech one syllable at a time: The reduced buffer capacity hypothesis in apraxia of speech. Aphasiology, 13, 793–805.

- Romani C, Galluzzi C, Bureca I, & Olson A (2011). Effects of syllable structure in aphasic errors: Implications for a new model of speech production. Cognitive Psychology, 62, 151–192.

- Santiago J (2000). Implicit priming of picture naming: A theoretical and methodological note on the implicit priming task. Psicológica, 21, 39–59.

- Schoor A, Aichert I, & Ziegler W (2012). A motor learning perspective on phonetic syllable kinships: How training effects transfer from learned to new syllables in severe apraxia of speech. Aphasiology, 26, 880–894.

- Shattuck-Hufnagel S (1983). Sublexical units and suprasegmental structure in speech production planning. In MacNeilage PF (Ed.), The Production of Speech (pp. 109–136). New York: Springer-Verlag.

- Snodgrass JG, & Vanderwart M (1980). A standardized set of 260 pictures: Norms for name agreement, image agreement, familiarity, and visual complexity. Journal of Experimental Psychology: Human Learning and Memory, 6, 174–215.

- Storkel HL, & Hoover JR (2010). An on-line calculator to compute phonotactic probability and neighborhood density based on child corpora of spoken American English. Behavior Research Methods, 42(2), 497–506.

- Tutzauer F (2003). On the sensible application of family-wise alpha adjustment. Human Communication Research, 29, 455–463.

- Varley R, & Whiteside SP (2001). What is the underlying impairment in acquired apraxia of speech? Aphasiology, 15, 39–49.

- Wambaugh JL, Duffy JR, McNeil MR, Robin DA, & Rogers MA (2006). Treatment guidelines for acquired apraxia of speech: A synthesis and evaluation of the evidence. Journal of Medical Speech-Language Pathology, 14, xv–xxxiii.

- Wambaugh JL, Martinez AL, McNeil MR, & Rogers MA (1999). Sound production treatment for apraxia of speech: Overgeneralization and maintenance effects. Aphasiology, 13, 821–837.

- Wunderlich A, & Ziegler W (2011). Facilitation of picture-naming in anomic subjects: Sound vs mouth shape. Aphasiology, 25, 202–220.

- Ziegler W (2002). Psycholinguistic and motor theories of apraxia of speech. Seminars in Speech and Language, 23, 231–243.

- Ziegler W (2003). Speech motor control is task-specific: Evidence from dysarthria and apraxia of speech. Aphasiology, 17, 3–36.

- Ziegler W (2009). Modeling the architecture of phonetic plans: Evidence from apraxia of speech. Language and Cognitive Processes, 24, 631–661.
