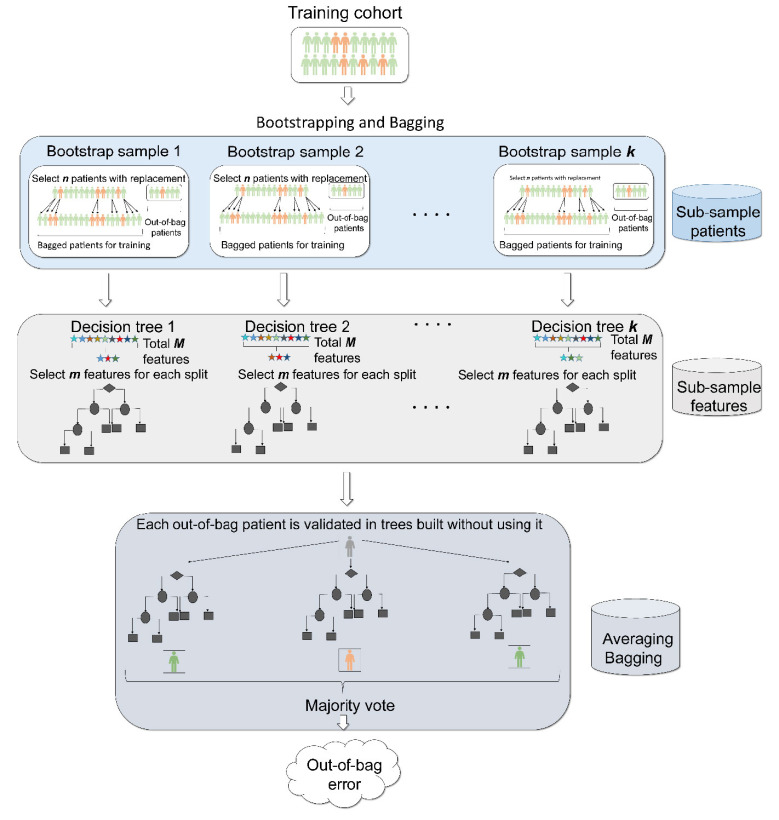

Fig. (4).

Schematic of Random Forests. Random Forests has three distinguishing features: (i) bootstrapping (top), (ii) feature selection (middle), and (iii) bagging (bottom). First, Random Forests performs bootstrapping, whereby n patients are drawn at random, with replacement, from a patient set of size n. This process is repeated k times, producing k different patient subsets. Each patient subset (known as a bootstrap sample) is used to build one decision tree. Patients included in a bootstrap sample are known as bagged patients, and patients left out of it are known as out-of-bag samples. Next, when building a tree, at each split only a random subset of m features out of the M available features is considered. This process is known as feature selection. Finally, after building k decision trees, Random Forests performs a procedure called bagging, whereby each out-of-bag patient is passed only through the trees whose bootstrap samples did not include that patient. The prediction from each of these trees is recorded, and the majority vote is selected as the final output of the model, known as average bagging. To evaluate the predictive ability of the Random Forests, the out-of-bag error is calculated. (A higher resolution / colour version of this figure is available in the electronic copy of the article).
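
The procedure described in the caption can be illustrated with a minimal Python sketch. It is not the implementation used in the article: each "tree" is reduced to a single-split stump (so drawing m features per split coincides with drawing them once per tree), the labels are assumed to be categorical, and the names `bootstrap_sample`, `fit_stump`, `predict_stump` and `oob_error` are hypothetical helpers introduced only for illustration.

```python
import random
from collections import Counter


def bootstrap_sample(n, rng):
    """Draw n patient indices with replacement; return (bagged, out_of_bag)."""
    bagged = [rng.randrange(n) for _ in range(n)]
    out_of_bag = sorted(set(range(n)) - set(bagged))
    return bagged, out_of_bag


def fit_stump(X, y, rows, features):
    """Fit a one-split tree on the bagged rows, using only the candidate features."""
    best = None
    for f in features:
        for t in sorted({X[i][f] for i in rows}):
            left = [y[i] for i in rows if X[i][f] <= t]
            right = [y[i] for i in rows if X[i][f] > t]
            if not left or not right:
                continue  # not a real split
            l_lab = Counter(left).most_common(1)[0][0]
            r_lab = Counter(right).most_common(1)[0][0]
            errors = sum(v != l_lab for v in left) + sum(v != r_lab for v in right)
            if best is None or errors < best[0]:
                best = (errors, f, t, l_lab, r_lab)
    if best is None:
        # No usable split: fall back to predicting the majority class.
        maj = Counter(y[i] for i in rows).most_common(1)[0][0]
        return (0, float("inf"), maj, maj)
    return best[1:]


def predict_stump(stump, x):
    f, t, l_lab, r_lab = stump
    return l_lab if x[f] <= t else r_lab


def oob_error(X, y, k=25, m=1, seed=0):
    """Build k stumps and return the out-of-bag misclassification rate."""
    rng = random.Random(seed)
    n, M = len(X), len(X[0])
    votes = [Counter() for _ in range(n)]
    for _ in range(k):
        bagged, out_of_bag = bootstrap_sample(n, rng)   # bootstrapping
        features = rng.sample(range(M), m)              # feature selection
        stump = fit_stump(X, y, bagged, features)
        for i in out_of_bag:
            # Only trees that never saw patient i may vote on patient i.
            votes[i][predict_stump(stump, X[i])] += 1
    scored = [i for i in range(n) if votes[i]]
    wrong = sum(votes[i].most_common(1)[0][0] != y[i] for i in scored)
    return wrong / len(scored) if scored else float("nan")


if __name__ == "__main__":
    # Toy data (made up): feature 0 separates the classes, feature 1 is constant.
    X = [[0.0, 5.0]] * 10 + [[1.0, 5.0]] * 10
    y = [0] * 10 + [1] * 10
    print("out-of-bag error:", oob_error(X, y, k=25, m=2))
```

Because every patient is scored only by trees whose bootstrap sample excluded that patient, the out-of-bag error serves as an internal estimate of predictive ability without a separate hold-out set. A full Random Forests implementation would grow deep trees and redraw the m candidate features at every split rather than once per tree.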