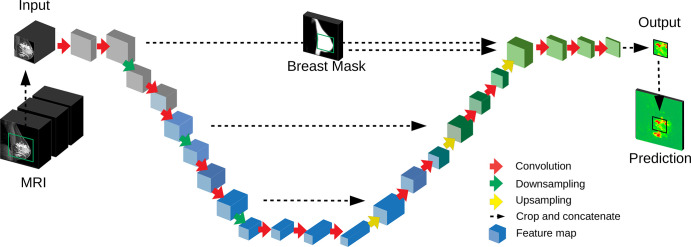

Figure 3:

Deep convolutional neural network used for segmentation. The network is a three-dimensional (3D) U-Net with 16 convolutional layers (red arrows) that produce 3D feature maps (blue blocks). The input MRI comprises several modalities (Fig 2A). The network outputs a prediction for a two-dimensional (2D) sagittal section, giving the probability of cancer at each voxel (green and red map). The full volume is processed as nonoverlapping image patches (green square on the input MRI). A breast mask is supplied as an additional input to the U-Net, where it serves as a spatial prior.
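The caption describes two input-side mechanics: tiling the full volume into nonoverlapping patches, and feeding the breast mask alongside the MRI modalities. The sketch below illustrates both under stated assumptions. It is not the authors' code. The function names, the patch and volume shapes, and the modality names are hypothetical. The caption does not say how patches are handled at the volume edges, so this sketch assumes the volume is zero-padded up to a multiple of the patch size.

```python
from itertools import product
from math import ceil


def padded_shape(volume_shape, patch_shape):
    """Smallest shape >= volume_shape that is an exact multiple of patch_shape.

    Assumption (not stated in the source): edges are zero-padded so that
    nonoverlapping patches tile the volume exactly.
    """
    return tuple(ceil(v / p) * p for v, p in zip(volume_shape, patch_shape))


def nonoverlapping_patch_origins(volume_shape, patch_shape):
    """Corner coordinates of nonoverlapping patches covering the padded volume.

    Works for any number of dimensions; the last axis varies fastest.
    """
    padded = padded_shape(volume_shape, patch_shape)
    axes = [range(0, n, p) for n, p in zip(padded, patch_shape)]
    return list(product(*axes))


def input_channels(modality_names, use_breast_mask=True):
    """Hypothetical channel order: MRI modalities first, then the breast-mask prior."""
    channels = list(modality_names)
    if use_breast_mask:
        channels.append("breast_mask")
    return channels


if __name__ == "__main__":
    # Hypothetical volume and patch sizes, chosen only for illustration.
    origins = nonoverlapping_patch_origins((100, 64, 64), (32, 32, 32))
    print(len(origins), origins[0], origins[-1])
    print(input_channels(["modality_1", "modality_2", "modality_3"]))
```

With a 100 × 64 × 64 volume and 32³ patches, the first axis is padded to 128. This gives 4 × 2 × 2 = 16 patches, and every voxel belongs to exactly one of them. Because the patches do not overlap, no predictions need to be averaged when the per-patch outputs are reassembled into the full volume.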