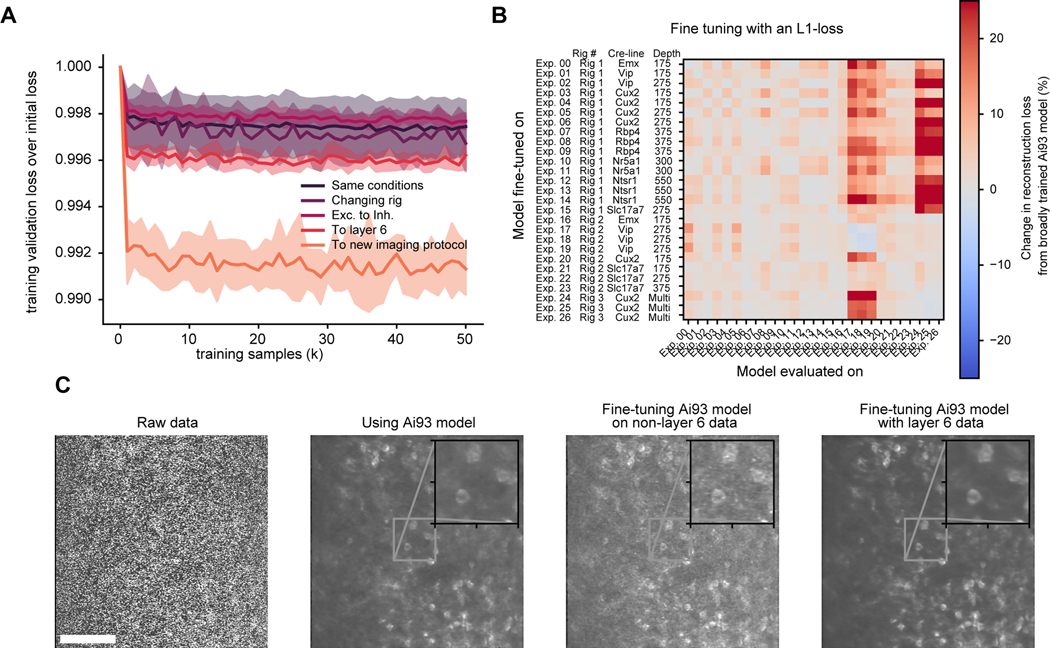

Fig. 3 | Fine-tuning pre-trained DeepInterpolation models recovers reconstruction performance with minimal training data.

(A) Reconstruction loss on validation data during fine-tuning of a pre-trained model (the Ai93 DeepInterpolation model) on previously unseen two-photon calcium imaging movies. The fine-tuning data differed from the original training conditions as follows: a different experimental rig with the same recording conditions (Changing rig); inhibitory neurons recorded with a different reporter line, Ai148 (Exc. to inh.); layer 6 neurons recorded in an Ntsr1-Cre_GN220;Ai148 mouse line (To layer 6); and data from the same mouse line imaged at 10 Hz instead of 30 Hz, with volume scanning performed by a piezo objective scanner (To new imaging protocol). Dashed lines represent the standard deviation across repeated rounds of training under similar conditions. (B) Cross-model evaluation performance when varying the recording conditions. The broadly trained Ai93 DeepInterpolation model was fine-tuned separately on each of 26 recording experiments with varying imaging conditions. Each fine-tuned model was then evaluated on all 26 experiments separately to obtain the cross-condition reconstruction performance, which was normalized to the performance of the broadly trained Ai93 model. (C) An example raw frame recorded in layer 6 of an Ntsr1-Cre_GN220;Ai148 mouse (left), compared with the corresponding frame after DeepInterpolation with three models: the broadly pre-trained Ai93 model, a model fine-tuned on non-layer-6 data, and a model trained on the exact same layer 6 experiment. Scale bar, 100 μm.
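The cross-condition matrix in panel B can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' analysis code: the function name `normalized_cross_condition`, the matrix layout (rows are fine-tuned models, columns are evaluation experiments) and the normalization convention (baseline loss divided by fine-tuned loss, so values above 1 indicate better reconstruction than the broadly trained model) are all hypothetical, since the caption does not specify how the normalization was computed.

```python
import numpy as np


def normalized_cross_condition(losses, baseline_losses):
    """Normalize an N x N cross-evaluation matrix to a baseline model.

    losses[i, j]: validation loss of the model fine-tuned on experiment i,
        evaluated on experiment j (hypothetical layout).
    baseline_losses[j]: validation loss of the broadly trained model
        evaluated on experiment j.
    Returns baseline / fine-tuned loss (assumed convention), so values > 1
    mean the fine-tuned model reconstructs experiment j better than the
    baseline model does.
    """
    losses = np.asarray(losses, dtype=float)
    baseline_losses = np.asarray(baseline_losses, dtype=float)
    if losses.ndim != 2 or losses.shape[0] != losses.shape[1]:
        raise ValueError("losses must be a square N x N matrix")
    if baseline_losses.shape != (losses.shape[1],):
        raise ValueError("baseline_losses must have one entry per experiment")
    # Broadcasting a (1, N) row against the (N, N) matrix divides every
    # entry in column j by the baseline loss on experiment j.
    return baseline_losses[np.newaxis, :] / losses


# Toy example with two experiments (made-up numbers, not data from the figure).
matrix = normalized_cross_condition([[0.5, 0.8], [1.2, 0.3]], [0.6, 0.6])
print(matrix)
```

With the 26 experiments of panel B, `losses` would be a 26 × 26 matrix; under this convention, a bright diagonal would indicate that each model performs best on the experiment it was fine-tuned on.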