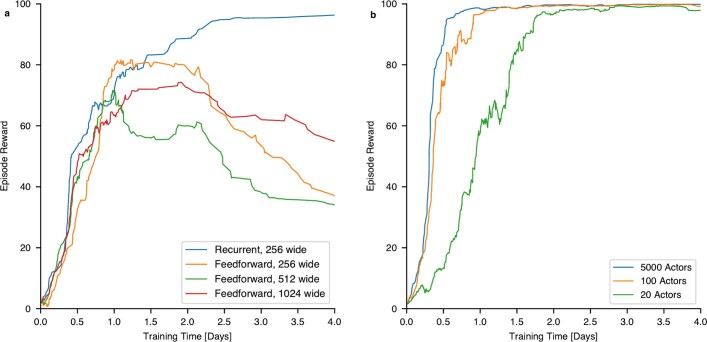

Extended Data Fig. 5. Training progress.

Episodic reward for the deterministic policy, smoothed across 20 episodes with parameter variations enabled; a reward of 100 means that all objectives are perfectly met. a, Comparison of the learning curve for the capability benchmark (as shown in Fig. 2) using our asymmetric actor-critic versus a symmetric actor-critic, in which the critic uses the same real-time-capable feedforward network as the actor. Blue shows the performance with the default critic of 718,337 parameters. Orange shows the symmetric version, in which the critic has the same feedforward structure and size (266,497 parameters) as the policy (266,280 parameters). When we keep the feedforward structure of the symmetric critic and scale it up, we find that increasing its width to 512 units (green, 926,209 parameters) or even 1,024 units (red, 3,425,281 parameters) does not close the performance gap with the smaller recurrent critic. b, Comparison of different numbers of actors for stabilizing a mildly elongated plasma. Although the policies in this paper were trained with 5,000 actors, this comparison shows that, at least for simpler cases, the same level of performance can be achieved with much lower computational resources.
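As a rough aid for reading the parameter counts in panel a, the sketch below counts the weights and biases of a plain fully connected network. The function name `mlp_param_count`, the input size (100) and the depth (three hidden layers) are hypothetical placeholders, not the architecture used in the paper, so the totals do not reproduce the figures quoted above. The point is only that, at fixed depth, doubling the hidden width multiplies the parameter count by close to four, because the width-by-width hidden layers dominate. This is consistent with the growth from 926,209 parameters (512 units) to 3,425,281 parameters (1,024 units).

```python
def mlp_param_count(input_dim, hidden_widths, output_dim):
    """Count weights and biases of a fully connected network (dense layers only).

    Each dense layer mapping n_in -> n_out has n_in * n_out weights plus n_out biases.
    Normalization layers or other extras are deliberately not included.
    """
    sizes = [input_dim, *hidden_widths, output_dim]
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))


# Hypothetical critic shape: placeholder input size and depth, single value output.
INPUT_DIM = 100
DEPTH = 3

for width in (256, 512, 1024):
    print(width, mlp_param_count(INPUT_DIM, [width] * DEPTH, 1))
```

With these placeholder sizes the three widths give 157,697, 577,537 and 2,203,649 parameters, respectively.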