Abstract

Primary care has increasingly adopted integrated behavioral health (IBH) practices to enhance overall care. The IBH Cross-Model Framework clarifies the core processes and structures of IBH, but little is known about how practices vary in the implementation of these processes and structures. This study aimed to describe clusters of clinics using the IBH Cross-Model Framework for a large sample of primary care clinics, as well as contextual variables associated with differences in implementation. Primary care clinics (N = 102) in Minnesota reported their level of implementation across 18 different components of IBH via the site self-assessment (SSA). The components were mapped to all five principles and four of the nine structures of the IBH Cross-Model Framework. Latent class analysis was used to identify unique clusters of IBH components from the SSA across the IBH Cross-Model Framework’s processes and structures. Latent classes were then regressed onto context variables. A four-class model was determined to be the best fit: Low IBH (39.6%), Structural IBH (7.9%), Partial IBH (29.4%), and Strong IBH (23.1%). Partial IBH clinics were more urban than the other three classes, lower in SES risk than Structural IBH clinics, and located in smaller organizations than Strong IBH clinics. There were no differences between classes in race/ethnicity of the clinic area or practice size. Four groups of IBH implementation were identified representing unique profiles of integration. These clusters may represent patterns of community-based implementation of IBH that indicate easier and more challenging aspects of IBH implementation.

Keywords: Integrated behavioral health, Primary care, Behavioral health, Mental health, Implementation science, Latent class analysis

Integrated behavioral health (IBH) is a rapidly growing approach in primary care that can improve patient outcomes related to mental health and chronic condition care, increase patient and provider satisfaction, improve access to care, and reduce overall health care costs (Archer et al., 2012; Cohen et al., 2015a, b; Davidson et al., 2003; Hunter et al., 2018; Kroenke & Unutzer, 2017; Reiss-Brennan et al., 2016; Vogel et al., 2017; Young et al., 2012). Clinics with IBH utilize behavioral health providers within their clinics to provide integrated medical and behavioral healthcare to target whole-person care, use evidence-informed behavioral and mental health interventions, and provide care management across all primary care teams (Cohen et al., 2015a, b; Peek & The National Integration Academy Council, 2013; Vogel et al., 2017). With both providers in the same space, seeing patients in concert, providers can have frequent, brief interactions that convey critical whole-person health information (Hunter & Goodie, 2010; Hunter et al., 2018). Despite the growth of this approach, little is known about how clinics implement the processes and structures of IBH.

Common Models of IBH

Many models of IBH have been proposed to support team-based care approaches, in addition to simpler co-location of a behavioral health provider in a primary care clinic. Two prominent, specific models of IBH include the Collaborative Care Model (CoCM; Unützer et al., 2013) and the Primary Care Behavioral Health model (PCBH; Reiter et al., 2018). Each model has a slightly different operational and clinical structure, but there are many common principles and themes. Briefly, CoCM is a highly structured model that always includes the primary care provider (PCP), a care manager (generally a licensed behavioral health professional or nurse), a psychiatric consultant, and the patient (Unützer et al., 2013). Components of CoCM include a registry for tracking caseloads and driving care, formal routinized consultation between the psychiatric consultant and the care manager, and “enrolling” patients into the program to target specific diagnoses, such as depression (Unützer et al., 2013). PCBH includes a behavioral health clinician physically integrated into the clinic care structures who typically sees patients with behavioral health problems and biopsychosocially influenced health conditions (i.e., chronic medical or mental health condition management, crisis intervention, case management) to enhance the primary care team’s ability to manage and treat such problems/conditions (Hunter & Goodie, 2010; Hunter et al., 2018). Generally speaking, CoCM is more diagnosis-specific and structured, whereas PCBH focuses on population health of all patients, enhancing primary care teams regardless of diagnosis, and does not require that patients formally enroll in structured services. CoCM and PCBH are not necessarily mutually exclusive, and in fact many organizations and clinics that implement IBH include components of both models (Hunter & Goodie, 2010). Extensive evidence supports the effectiveness of CoCM for certain conditions (e.g., depression; Archer et al., 2012), some evidence supports PCBH (Hunter et al., 2018; Possemato et al., 2018), and co-location has less clear evidence for improving outcomes.

Cross‑Model IBH Framework Guiding this Study

Clinics may also choose to implement certain aspects of IBH but not intentionally follow either CoCM or PCBH, such as clinics that co-locate behavioral health clinicians with medical providers but do not fully integrate them into a care team structure. All of these approaches can be considered to be under the “umbrella” of IBH, but it can be challenging to compare across clinics due to this variation in model selection as well as variation in implementation success. The IBH Cross-Model Framework is the first IBH framework that encompasses and transcends the current model-based approach (e.g., CoCM, PCBH) and uses a mixed-model, expert-consensus-based approach to define specific processes and structures in primary care that represent IBH (Stephens et al., 2020). The development of the IBH Cross-Model Framework included experts in IBH models such as CoCM and PCBH and policy and practice experts with experience in implementation. A key step in the IBH Cross-Model Framework development was assessing the measurability of the processes and structures identified, including through surveying the consulted experts and mapping the framework to the Practice Integration Profile (PIP; Kessler et al., 2016) and the NCQA PCMH and Distinction in Behavioral Health Integration (BHI) criteria (National Committee for Quality Assurance, 2017; see Stephens et al., 2020 for full details).

Observational studies of integration models conducted outside of model-specific implementation studies (Cohen et al., 2015a, b) have found that primary care clinics often do not use common models like CoCM and PCBH, but rather blended models of integrated care processes. Yet little is known about whether clinics tend to cluster around certain processes and structures identified as part of these common models. The current study examined IBH implementation across a heterogeneous sample of clinics that used various models of IBH in their practices; it was therefore essential to use a framework able to capture this variation. The framework consists of 29 processes that map to five principles (e.g., patient-centered, team-based, evidence-based care) and nine core structures (e.g., sustainable fiscal strategies), maps well to a validated measure of IBH (the Practice Integration Profile) and to the Patient Centered Medical Home criteria of the National Committee for Quality Assurance, and has been adopted across several large health systems as a community standard. Utilizing the framework in the current study will inform IBH research in the future by demonstrating its flexibility and utility in evaluating heterogeneous samples through various measures of IBH, including self-report by clinic personnel.

Variability in Implementation of IBH

While many clinics adopt components of IBH that can map to these common models and framework, research has shown varying adherence to the core components of IBH. Beehler et al. (2015) found that behavioral health provider practice patterns varied within primary care in the Veterans’ Health Administration. These differences may be driven by clinic-level implementation. In particular, different clinical contexts potentially provide different resources to support IBH implementation based on factors such as patient demographics, geographic location, and practice size. A recent study by Unützer et al. (2020) examined the relationship between clinic characteristics and depression treatment outcomes in clinics that had implemented CoCM, and suggested that context may impact implementation, which subsequently may impact outcomes. However, Unützer et al. (2020) did not directly assess the result of implementing collaborative care; instead, they used the level of implementation support the clinic received as a proxy for implementation outcomes (e.g., fidelity to the CoCM model; see Proctor et al., 2011). More specific fidelity assessment is needed.

There is currently a gap in research on clinic-level contextual factors, and how they connect to implementation of IBH (Kwan & Nease, 2013). IBH implementation research has generally focused on the patient (i.e., treatment outcomes) or provider (i.e., roles) levels. Context is key in understanding which clinics engage in IBH and to what degree, and in understanding what works well across common processes and structures. Kwan and Nease (2013) note the heterogeneity in effect sizes of the impact of IBH on clinical outcomes and suggest additional variables (i.e., contextual variables) likely influence effectiveness of IBH implementation. Contextual factors such as patient populations, practice sites, and community variables such as rurality (Jensen & Mendenhall, 2018; Stamm et al., 2007), socioeconomic risk (Santiago et al., 2013), and racial/ethnic minority status of patients (Agency for Healthcare Research & Quality, 2020; Churchwell et al., 2020; Cook et al., 2017; Holden et al., 2014) have been shown to drive differences in quality and access to care and may be important to examine in understanding variable implementation of IBH models. It is possible that these factors may result in patients at some practices having access to more or higher-quality care through the nature of scarcity in rural settings, lack of resources in clinics serving lower-income patients, and racist structures or beliefs influencing the distribution or uptake of resources to clinics with larger minority populations. Some additional contextual factors include practice size (e.g., patient population and provider totals; Briggs et al., 2016; Kearney et al., 2015) and organization size (Jacobs et al., 2015; Kaplan et al., 2010). Examining all of these contextual factors is essential to ensure that IBH is being implemented in equitable ways and, if it is not currently, to identify where there is room for improvement.

The Current Study

This study examines patterns of IBH implementation according to the IBH Cross-Model Framework and the association of various contextual factors (i.e., clinic location, organization size, practice size, racial/ethnic make-up of the area, and clinic-level socioeconomic risk of the patients) with those patterns. We used latent class analysis (LCA) to analyze our sample with the goal of gaining a holistic understanding of IBH implementation at the clinic level that is not possible with other statistical methods such as regression. LCA is an analytic approach utilized when it is hypothesized that there are homogenous groups present within a heterogeneous sample (Lanza et al., 2012). The groups are not known ahead of time but rather understood through statistical analysis of the data. LCA has been utilized for understanding IBH implementation (specifically, fidelity) at the level of the individual provider (Beehler et al., 2015), and for other aspects of mental health services at the level of the facility (Mauro et al., 2016). Therefore, it is likely appropriate to consider clinic-level IBH implementation through a person-centered approach. We hypothesized that clinics may also follow patterns in their adherence/fidelity to elements of IBH similar to those found by Beehler et al. (2015).

Methods

Participants

The sample included N = 102 primary care clinics across 14 healthcare organizations from across the state of Minnesota. Clinics were part of Minnesota health care organizations that were members of the MN Health Collaborative, a collaborative effort activated by the Institute for Clinical Systems Improvement (ICSI) to address the major health and social concerns around opioids and mental health in the state of Minnesota. Fourteen organizations were approached, encompassing 331 clinics, with 102 (31%) clinics responding to the survey request, which included Family Medicine, Pediatrics, and Internal Medicine clinics. A total of 106 surveys regarding clinics were returned. Four of those surveys were excluded because each represented more than one clinic. Clinics were located across the state of Minnesota, including urban, suburban, and rural settings. Of the 102 clinics, 83 reported their estimated patient populations, for a total of 1,336,800 (M = 16,106, median = 8746) patients. Using the median to estimate the missing patient population data, the clinics covered approximately 1.5 million patients, or about 27% of the population of Minnesota. The study was declared exempt by the University of Minnesota IRB due to use of administrative data and no human subjects.
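The coverage estimate above can be retraced with a few lines of arithmetic. The sketch below assumes that "using the median" means each of the 19 non-reporting clinics was assigned the median of the 83 reporting clinics; the function and constant names are illustrative and do not come from the study.

```python
# Figures reported in the Participants paragraph.
TOTAL_CLINICS = 102
REPORTING_CLINICS = 83
REPORTED_PATIENTS = 1_336_800
MEDIAN_PATIENTS = 8_746


def estimate_total_patients(total_clinics, reporting_clinics,
                            reported_patients, median_patients):
    """Assign the reporting-clinic median to every non-reporting clinic."""
    missing_clinics = total_clinics - reporting_clinics
    return reported_patients + missing_clinics * median_patients


estimated_total = estimate_total_patients(
    TOTAL_CLINICS, REPORTING_CLINICS, REPORTED_PATIENTS, MEDIAN_PATIENTS
)
mean_reported = REPORTED_PATIENTS / REPORTING_CLINICS

print(estimated_total)        # 1502974, i.e. roughly 1.5 million
print(round(mean_reported))   # 16106, matching the reported mean
```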

Procedures

Surveys were collected from each clinic by ICSI as part of their work through the MN Health Collaborative, to conduct a baseline assessment of IBH. Clinics were requested to have at least two people collaboratively complete the survey, at least one provider and one administrator, with only one final survey submission, to capture a more global clinic perspective rather than the view of only one individual. Participating staff at each clinic indicated their roles and included medical providers, behavioral health providers, nurses, and administrators, ranging from 1 to 7 participants per clinic (M = 2.09, SD = 1.08). Surveys were completed via SurveyMonkey.

Measures

Site Self Assessment (SSA)

The SSA (Scheirer et al., 2010) is an 18-item measure of patient/family-centered integrated care developed for the Maine Health Access Foundation, and is recommended for use by the US Agency for Healthcare Research and Quality (https://integrationacademy.ahrq.gov/products/behavioral-health-measures-atlas/measure/c8-site-self-assessment-evaluation-tool). The developers modeled the SSA after the Assessment of Primary Care Resources and Supports for Chronic Disease Self-Management (PCRS; Brownson et al., 2007), a validated tool developed for primary care teams to self-assess their current delivery and identify areas for improvement (Scheirer et al., 2010). The SSA has not been validated psychometrically, but during a search for an appropriate measure, ICSI staff found the SSA to have strong face validity compared to other potential options. Participants rated their clinic on a scale of 1–10, with grouping descriptions for clusters on the scale of 1, 2–4, 5–7, and 8–10, using scale anchors customized to each question. In order to clarify the meaning of study analyses, each of the 18 items on the SSA was dichotomized into two options: the clinic had achieved fully integrated IBH on the given component (defined as a score of 8–10) or not fully integrated (defined as a score of 1–7).
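The scoring rule just described is simple enough to state as code. The following is a minimal sketch of the four anchor groupings and the item-level dichotomization, assuming integer ratings; the function names are hypothetical and are not part of the SSA itself.

```python
def ssa_band(score: int) -> str:
    """Return the SSA anchor grouping (1, 2-4, 5-7, 8-10) for a 1-10 rating."""
    if not 1 <= score <= 10:
        raise ValueError("SSA ratings range from 1 to 10")
    if score == 1:
        return "1"
    if score <= 4:
        return "2-4"
    if score <= 7:
        return "5-7"
    return "8-10"


def fully_integrated(score: int) -> bool:
    """Dichotomize an item: True for a rating of 8-10, False for 1-7."""
    return ssa_band(score) == "8-10"


ratings = [1, 4, 7, 8, 10]
print([fully_integrated(r) for r in ratings])
```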

Clinic Rurality

Rurality was based on the USDA Rural–Urban Commuting Area Codes (RUCA) of the clinic ZIP code (obtained from https://ruralhealth.und.edu/ruca). This scale ranged from 1 to 10 based on population density and commuting patterns, and research has demonstrated the utility of treating the scale as a continuous variable rather than using categories such as urban, suburban, and rural (Yaghjyan et al., 2019). Clinics that fell on the urban side of the RUCA spectrum (1 on the scale) were in the larger cities of the state, such as Minneapolis, St. Paul, Rochester, Duluth, and their immediate suburbs. Clinics that fell on the rural side (10 on the scale) were in or near small towns without immediate access to larger cities.

Clinic‑Level Patient SES Risk

Determined by MNCM (MN Community Measurement, 2020), this variable was a composite, clinic-level score of patient-level risk factors (i.e., health insurance product type: commercial, Medicare, Medicaid, uninsured, unknown), patient age, and deprivation index. Higher scores reflect lower socioeconomic status and greater disadvantage. The deprivation index was derived from analysis of each clinic’s patient home address data. It includes patient ZIP code-level averages of poverty, public assistance, unemployment, single female with child(ren), and food stamp usage. Each clinic has a unique risk score for each clinical outcome, because the specific patients included for each disease vary. For this study, clinic risk scores were included for depression follow-up, adult and child asthma, vascular disease, and diabetes. Scores for each clinic were averaged among these outcome risk scores to create a total clinic risk score, weighted by the number of patients reported for each outcome. For full calculation details, please contact the first author.
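The weighting step can be illustrated with a short sketch. It assumes a plain patient-count-weighted mean across the five outcome-specific risk scores. The values below are hypothetical and serve only to show the arithmetic; the actual calculation is not given in this paper.

```python
def weighted_clinic_risk(outcome_scores):
    """Patient-weighted mean of outcome-specific risk scores.

    outcome_scores maps an outcome name to (risk_score, n_patients).
    """
    total_patients = sum(n for _, n in outcome_scores.values())
    if total_patients == 0:
        raise ValueError("at least one outcome must report patients")
    weighted_sum = sum(score * n for score, n in outcome_scores.values())
    return weighted_sum / total_patients


# Hypothetical clinic: values are illustrative, not taken from the study.
example_clinic = {
    "depression_follow_up": (1.10, 200),
    "adult_asthma": (0.98, 50),
    "child_asthma": (1.00, 50),
    "vascular_disease": (1.02, 100),
    "diabetes": (1.06, 100),
}

print(round(weighted_clinic_risk(example_clinic), 3))  # 1.054
```

Outcomes with more reported patients pull the clinic score toward their own risk score, which is why the result here sits closer to the depression follow-up value than an unweighted mean would.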

Clinic Area Race/Ethnicity Make‑Up

Race/ethnicity for each clinic’s city location (incorporating the full city population) was obtained from the 2017 American Community Survey (obtained from https://www.census.gov/acs/www/data/data-tables-and-tools/data-profiles/2017/). Estimated counts and percentages of White, Black, American Indian, Asian, Hawaiian/Pacific Islander, other, and two races/ethnicities were included.

Organization Size

Organization size was based on number of primary care clinics for each health service organization. Number of clinics was obtained through Minnesota Community Measurement data and verified by conferring with ICSI personnel.

Practice Size

Practice size was reported by the clinics in their SSA responses. It was measured as the estimated active patient population, that is, the number of patients who had been seen in roughly the last year. No additional data points regarding size [such as number of providers or full-time equivalent (FTE)] were available.

Crosswalk of SSA with IBH Cross‑Model Framework

We created a crosswalk to map the SSA to the IBH Cross-Model Framework and examined how well the SSA covered the various processes and structures in the framework. A crosswalk is utilized when mapping two different schemas, or structures of concepts which have equivalent elements. The first and fourth authors completed an initial draft of the SSA-IBH-Cross-Model Framework crosswalk. This initial version allowed for multiple categories to overlap, such that one SSA question may have matched several processes of the IBH Cross-Model Framework. The first author then determined final categories for the crosswalk and a second ICSI staff member reviewed and confirmed the final draft (see Table 2). Finally, the primary developer of the IBH Cross-Model Framework (also the last author on this paper) reviewed the crosswalk to validate that the integrity of the framework constructs remained.

Table 2.

Crosswalk of cross-model framework principles and structures with site self-assessment (SSA) questions

| SSA item | M (SD) |
|---|---|
| 1. Level of integration: primary care and mental/behavioral health care | 6.08 (2.92) |
| 2. Screening and assessment for emotional/behavioral health needs | 8.04 (1.79) |
| 3. Treatment plan(s) for primary care and behavioral/mental health care | 6.43 (2.16) |
| 4. Patient care that is based on/informed by best practice evidence for behavioral health and primary care | 7.13 (1.91) |
| 5. Patient/family involvement in care plan | 7.03 (2.10) |
| 6. Communication with patients about integrated care | 6.03 (2.16) |
| 7. Follow-up of assessments, tests, treatment, referrals and other services | 6.62 (2.05) |
| 8. Social support (for patients to implement recommended treatment) | 6.36 (2.16) |
| 9. Linking to community resources | 6.44 (1.92) |
| 10. Organizational leadership for integrated care | 6.16 (2.51) |
| 11. Patient care team for implementing integrated care | 5.54 (2.76) |
| 12. Providers’ engagement with integrated care (“buy-in”) | 6.70 (2.43) |
| 13. Continuity of care between primary care and behavioral/mental health | 6.58 (2.29) |
| 14. Coordination of referrals and specialists | 6.25 (2.10) |
| 15. Data systems/patient records | 7.50 (2.19) |
| 16. Patient/family input to integration management | 5.01 (2.39) |
| 17. Physician, team and staff education and training for integrated care | 5.16 (2.62) |
| 18. Funding sources/resources | 5.17 (2.73) |

| Cross-model framework principle or structure | Type | Composite M (SD) |
|---|---|---|
| Patient-centric care | Principle | 6.02 (1.94) |
| Treatment to target | Principle | 6.50 (1.82) |
| Use EBTs | Principle | 6.78 (1.82) |
| Conduct efficient team care | Principle | 5.91 (2.04) |
| Population-based care | Principle | 8.04 (1.79) |
| Financial billing sustainability | Structure | 6.08 (2.92) |
| Admin. support and supervision | Structure | 6.16 (2.51) |
| EHR | Structure | 7.50 (2.19) |
| Behavioral health provider | Structure | 5.17 (2.74) |

There are five core structures that were not represented among the 18 SSA items and therefore are not shown in the crosswalk.

In order to create the IBH Cross-Model Framework components for analysis, composite scores were created from the SSA data based on the crosswalk mappings. Nine composite scores were thus created, one for each of the five principles and one for each of four of the nine structures: (1) patient-centric care (3 items, α = 0.67), (2) treatment to target (4 items, α = 0.83), (3) use evidence-based behavioral treatments (2 items, α = 0.62), (4) conduct efficient team care (4 items, α = 0.72), and (the following are all one-item) (5) population-based care, (6) sustainable fiscal strategies, (7) physical integration, (8) organizational leadership support for integrated care, and (9) shared EHR system. Each composite score was then dichotomized such that any score of 7.5 and above (8–10 and rounding up) was labeled “2” (integration fully achieved) and any score below 7.5 was labeled “1” (integration not fully achieved).
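For readers who want to retrace the composite construction, the sketch below shows one way to compute Cronbach's α and the 1/2 coding. It assumes each composite is the mean of its mapped SSA items, which the 7.5 cutoff suggests but the text does not state. The example ratings are hypothetical.

```python
from statistics import mean, variance


def cronbach_alpha(items):
    """Cronbach's alpha for a list of item-score lists aligned by clinic."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


def composite_level(item_scores, cutoff=7.5):
    """Code one clinic's composite: 2 if the item mean is >= cutoff, else 1."""
    return 2 if mean(item_scores) >= cutoff else 1


# Hypothetical ratings for a two-item composite across four clinics.
item_a = [2, 4, 6, 8]
item_b = [3, 5, 5, 9]

print(round(cronbach_alpha([item_a, item_b]), 2))  # 0.96
print(composite_level([8, 7]))  # 2 (mean of 7.5 meets the cutoff)
print(composite_level([7, 7]))  # 1
```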

Analytic Plan

We conducted a latent class analysis to identify various clusters of IBH implementation using the cross-walked IBH Cross-Model Framework categories of processes and structures. The final class count was determined through an examination of the Akaike Information Criterion (AIC), Bayesian Information Criterion (BIC), adjusted BIC, and bootstrap likelihood ratio test (BLRT; Lanza et al., 2012; Nylund et al., 2007). For the final aim, the five specified context variables (i.e., rurality, area race/ethnicity, patient SES risk, clinic size, and organization size) were examined as predictors of class membership. Mplus 8.4 was used for both the latent class analysis and the subsequent examination of class differences (Muthén & Muthén, 2017). The automatic 3-step process available in Mplus was used (R3STEP; Asparouhov & Muthén, 2014). This approach first estimates classes without the influence of covariates and then incorporates classification uncertainty in order to examine covariates as predictors of class membership.
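For orientation, the standard latent class model for binary indicators can be written as follows. The notation is an assumption introduced here and is not taken from the study: $\gamma_c$ is the prevalence of class $c$, $\rho_{jc}$ is the probability that a clinic in class $c$ has fully achieved integration on indicator $j$, and $y_j \in \{0, 1\}$ corresponds to the paper's "1"/"2" coding.

$$
P(\mathbf{Y}_i = \mathbf{y}) \;=\; \sum_{c=1}^{C} \gamma_c \prod_{j=1}^{J} \rho_{jc}^{\,y_j}\,\bigl(1 - \rho_{jc}\bigr)^{1 - y_j},
\qquad \sum_{c=1}^{C} \gamma_c = 1
$$

Here $J = 9$ (the dichotomized composites) and $C$ is the number of classes being compared. The product form reflects the usual local-independence assumption: within a class, indicators are treated as unrelated.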

Missing Data Management

Multiple imputation (Lanza et al., 2012) was used to correct for missing data using the automatic 3-step process in Mplus version 8.4. Whereas complete data were available for all SSA items as well as rurality and organization size, missing values were found for measures of SES (21.6%), area racial/ethnic distribution (2.9%), and clinic size (18.6%). Multiple imputation (Lanza et al., 2012) is a better alternative than list- or case-wise deletion, which is known to introduce bias. Upon manual review of missing values, many appeared to be merely due to the nature of the clinic, i.e., clinics that did not serve children (such as internal medicine clinics) did not provide child/adolescent data, and pediatrics clinics typically did not provide adult data. Therefore, these data can be considered missing not at random, which is sufficiently managed with the multiple imputation method.

Managing Nested Data

The COMPLEX feature of Mplus, which adjusts standard errors and the chi-square test of model fit, was used to address nesting of the 102 clinics across the 14 healthcare organizations (range = 1–27 clinics per organization). This approach took into account the stratification, non-independence of observations, and unequal probability of selection inherent in nested data, without requiring a multilevel approach.

Results

Clinic Sample Description

A total of 102 clinics participated in the study (see Table 1 for full clinic descriptives). Clinics tended to be slightly higher SES-risk (M = 1.05, SD = 0.10) and more urban than rural (M = 2.22 on RUCA scale, SD = 2.60). Generally, clinics tended to be embedded in larger organizations (M = 23.16 clinics per organization, SD = 11.83), and patient population sizes varied widely, from 196 patients to 120,000 patients (M = 16,106 patients). Most clinics were located in majority White areas (M = 79.7%), which reflected the race/ethnicity make-up of the state of Minnesota (83.7% White; https://www.census.gov/quickfacts/MN). The clinics tended to fall in the mid-range for all 18 SSA components (see Table 2), scoring lowest (M = 5.01, SD = 2.39) on “Patient/family input to integration management” and highest (M = 8.04, SD = 1.79) on “Screening and assessment for emotional/behavioral health needs.”

Table 1.

Clinic sample descriptives

| Variable | N | Min | Max | M | SD |
|---|---|---|---|---|---|
| Clinic weighted risk ratio | 87 | 0.94 | 1.42 | 1.05 | 0.10 |
| Clinic RUCA | 102 | 1.0 | 10.3 | 2.22 | 2.60 |
| Organization size (total number of PC clinics) | 102 | 3 | 54 | 23.16 | 11.83 |
| Clinic size (active patient population) | 83 | 196 | 120,000 | 16,106 | 20,112 |
| % White | 99 | 46.8 | 99.2 | 79.7 | 13.3 |
| % Black | 99 | 0.0 | 28.0 | 8.6 | 7.5 |
| % Native American | 99 | 0.0 | 3.8 | 0.7 | 0.6 |
| % Asian | 99 | 0.0 | 18.0 | 5.4 | 4.6 |
| % Hawaiian/Pacific Islander | 99 | 0.0 | 0.3 | 0.0 | 0.0 |
| % Other | 99 | 0.0 | 18.3 | 2.5 | 2.8 |
| % Multiracial | 99 | 0.1 | 5.4 | 3.1 | 1.4 |

PC = primary care

The IBH Cross-Model Framework composite scores showed that clinics generally scored lowest on the physical integration of a behavioral health provider into the clinic (M = 5.17, SD = 2.74) and highest on population-based care (M = 8.04, SD = 1.79). As detailed in the Methods section, the IBH Cross-Model Framework components were subsequently dichotomized for the latent class analysis.

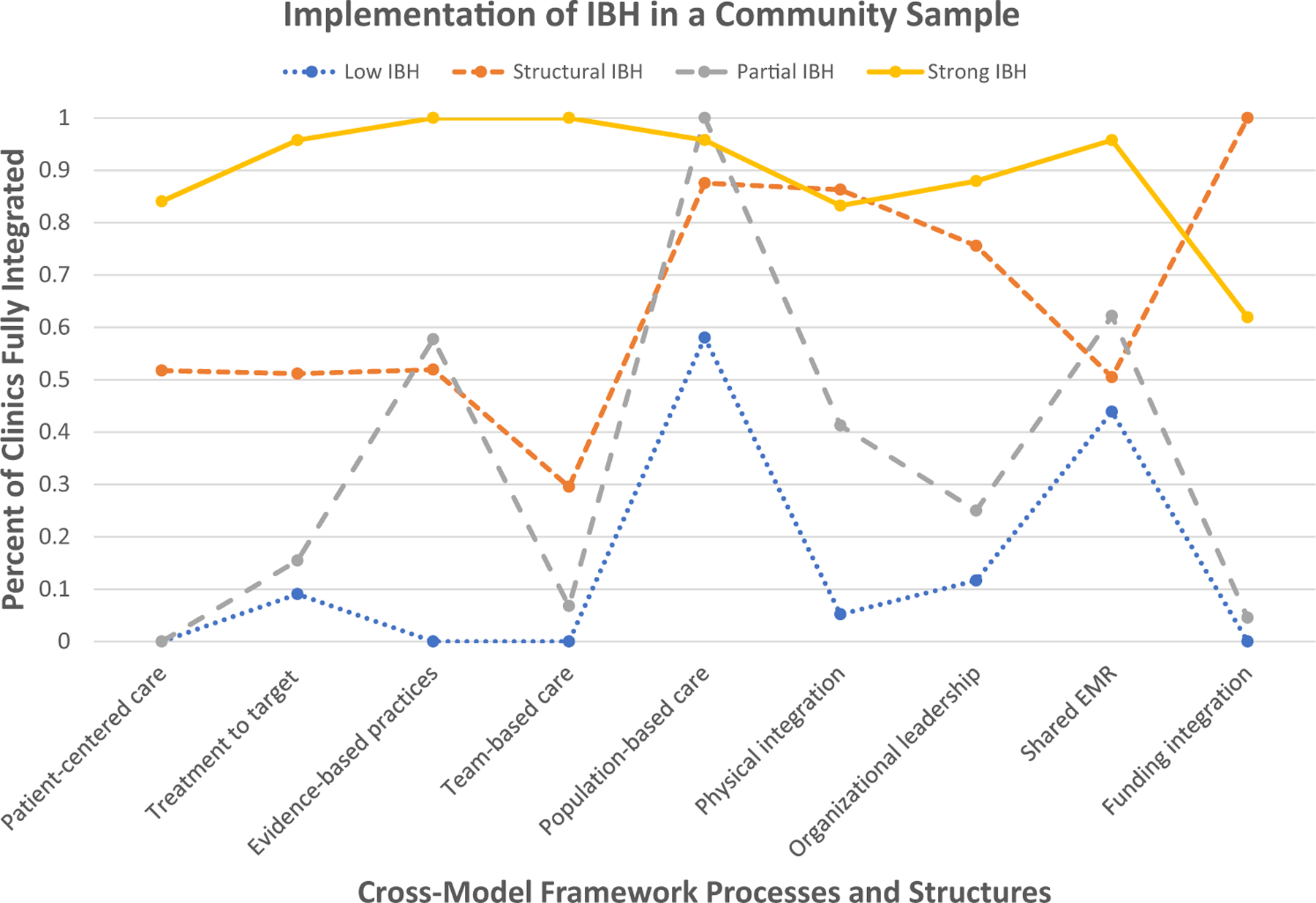

Defining Clusters of IBH Implementation

Latent class modeling (Asparouhov & Muthén, 2019; Lanza et al., 2012) was used to empirically derive clusters of clinics using the IBH Cross-Model Framework indicators. Starting at two classes and proceeding to five classes (after which the model no longer converged), we examined appropriate fit criteria. Entropy was above 0.8 for all models, indicating clear separation between classes. See Table 3 for class enumeration details. After examining the Akaike Information Criterion (AIC), Bayesian Information Criterion (BIC), adjusted BIC, and bootstrap likelihood ratio test (BLRT), the decision criteria did not agree: each of the 2-, 3-, and 4-class solutions was supported by at least one criterion (see Table 4). Theoretically, the most interpretable and applicable solution was the 4-class solution; upon reviewing the results, it appeared that the 4-class solution identified unique classes fundamentally important to the study of IBH implementation. In addition, this solution was the lowest on AIC and adjusted BIC; therefore, it was selected for the final model (see Fig. 1). The four classes were identified as: Strong IBH (23.1% of clinics), Structural IBH (7.9%), Partial IBH (29.4%), and Low IBH (39.6%).

Table 3.

Latent class enumeration (N = 102)

| Classes | AIC | BIC | Adj BIC | LL | BLRT | Entropy |
|---|---|---|---|---|---|---|
| 1 | 1137.61 | 1161.23 | 1132.80 | −559.80 | – | – |
| 2 | 877.23 | 927.11 | 867.09 | −419.62 | 0.000 | 0.956 |
| 3 | 873.26 | 949.38 | 857.78 | −407.63 | 0.030 | 0.882 |
| 4 | 873.21 | 975.58 | 852.40 | −397.60 | 0.286 | 0.852 |
| 5 | 879.51 | 1008.13 | 853.36 | −390.75 | 0.308 | 0.871 |
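The information criteria in Table 3 can be approximately recomputed from the log-likelihoods. The sketch below assumes an unrestricted model with nine binary indicators, so a K-class model has 9K + (K − 1) free parameters, and uses the common sample-size adjustment (n + 2)/24 for the adjusted BIC. Under those assumptions the results agree with the table to within rounding of the reported log-likelihoods.

```python
import math

N_CLINICS = 102
N_INDICATORS = 9
# Log-likelihoods as reported in Table 3.
LOG_LIK = {1: -559.80, 2: -419.62, 3: -407.63, 4: -397.60, 5: -390.75}


def n_free_params(n_classes, n_indicators=N_INDICATORS):
    """Item probabilities per class plus the free class proportions."""
    return n_classes * n_indicators + (n_classes - 1)


def fit_criteria(log_lik, n_params, n):
    """AIC, BIC, and sample-size-adjusted BIC from a log-likelihood."""
    deviance = -2.0 * log_lik
    return {
        "AIC": deviance + 2 * n_params,
        "BIC": deviance + n_params * math.log(n),
        "aBIC": deviance + n_params * math.log((n + 2) / 24),
    }


criteria = {
    k: fit_criteria(ll, n_free_params(k), N_CLINICS)
    for k, ll in LOG_LIK.items()
}
best = {
    name: min(criteria, key=lambda k: criteria[k][name])
    for name in ("AIC", "BIC", "aBIC")
}
print(best)  # {'AIC': 4, 'BIC': 2, 'aBIC': 4}
```

The recomputation makes the disagreement among criteria concrete: BIC, with its heavier penalty per parameter, favors two classes, while AIC and adjusted BIC favor four.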

Table 4.

Descriptives of clinics’ adherence to the cross-model framework

| Cross-model framework principles/structures | Number of health systems with at least 50% of reporting clinics meeting the top criteria | Percent | Number of clinics meeting the top criteria | Percent |
|---|---|---|---|---|
| Patient-centered care | 1 | 7.14 | 24 | 23.53 |
| Treatment to target | 3 | 21.43 | 35 | 34.31 |
| Evidence-based practices | 7 | 50.00 | 45 | 44.12 |
| Team-based care | 0 | 0.00 | 28 | 27.45 |
| Population-based care | 13 | 92.86 | 83 | 81.37 |
| Physical integration | 5 | 35.71 | 41 | 40.20 |
| Organizational leadership | 3 | 21.43 | 39 | 38.24 |
| Shared EMR | 8 | 57.14 | 63 | 61.76 |
| Funding integration | 1 | 7.14 | 24 | 23.53 |
| Overall SSA | 0 | 0.00 | 11 | 10.78 |
| Total | 14 | | 102 | |

“Top criteria” means 8–10 out of 10 on each SSA question that is encompassed by the Cross-Model Framework process

Fig. 1.

Four-class latent class solution

Estimated probabilities (see Table 5) demonstrate the differences in the classes. Strong IBH clinics had overall estimated probabilities of 70% or above for all nine principles and structures except for funding integration, which was at 61.9%. This means that 61.9% of the clinics in this class answered that they are fully integrated on their funding between behavioral and medical funding streams. The Low IBH class had no principle or structure probability above 70%, with the closest being population-based care at 57.9%. Structural IBH was the smallest group and demonstrated a pattern where all clinics in the class indicated full funding integration. They also scored highly on population-based care (87.6%), physical integration (86.3%), and organizational leadership support (75.5%), but differed from the Strong IBH class with a low probability of integration in conducting efficient team care (29.5%) and mixed probabilities on the remaining five principles and structures. Finally, clinics in the Partial IBH class all reported engaging in population-based care and showed mixed probabilities of using evidence-based treatments (57.4%) and shared EHR systems (62.3%). Forty-one percent of the Partial IBH class reported having a behavioral health clinician physically on-site with shared physical resources (shared waiting room, etc.) and only 30% reported conducting efficient team care.

Table 5.

Posterior probabilities by class (N = 102)

Cross-model framework processes grouped by principles (left) and structures (right)

| Class | Class label | Patient-centric care | Treatment to target | Use evidence-based behavioral treatments | Conduct efficient team care | Population-based care | Physical integration | Organizational leadership support | Shared EHR system | Funding integration |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Low IBH | *0* | *0.09* | *0* | *0* | 0.58 | *0.05* | *0.12* | 0.46 | *0* |
| 2 | Structural IBH | 0.52 | 0.51 | 0.52 | *0.3* | **0.88** | **0.86** | **0.76** | 0.51 | **1.0** |
| 3 | Partial IBH | *0* | *0.15* | 0.57 | *0.07* | **1.0** | 0.41 | *0.25* | 0.62 | *0.05* |
| 4 | Strong IBH | **0.84** | **0.96** | **1.0** | **1.0** | **0.96** | **0.83** | **0.88** | **0.96** | 0.62 |

Bolded numbers indicate posterior probabilities of .7 or above, while italicized numbers indicate posterior probabilities of .3 or below
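The thresholds in the table note can be applied mechanically to summarize each class profile. The following is a minimal illustrative sketch, not part of the original analysis: the values are transcribed from Table 5, and the names `COMPONENTS`, `TABLE5`, and `profile` are hypothetical helpers introduced here for illustration only.

```python
# Component order follows Table 5: five principles, then four structures.
COMPONENTS = [
    "Patient-centric care",
    "Treatment to target",
    "Use evidence-based behavioral treatments",
    "Conduct efficient team care",
    "Population-based care",
    "Physical integration",
    "Organizational leadership support",
    "Shared EHR system",
    "Funding integration",
]

# Probabilities transcribed from Table 5 (rounded as reported).
TABLE5 = {
    "Low IBH": [0, 0.09, 0, 0, 0.58, 0.05, 0.12, 0.46, 0],
    "Structural IBH": [0.52, 0.51, 0.52, 0.3, 0.88, 0.86, 0.76, 0.51, 1.0],
    "Partial IBH": [0, 0.15, 0.57, 0.07, 1.0, 0.41, 0.25, 0.62, 0.05],
    "Strong IBH": [0.84, 0.96, 1.0, 1.0, 0.96, 0.83, 0.88, 0.96, 0.62],
}

HIGH, LOW = 0.7, 0.3  # thresholds stated in the table note


def profile(probs):
    """Split components into those at or above HIGH and those at or below LOW."""
    high = [c for c, p in zip(COMPONENTS, probs) if p >= HIGH]
    low = [c for c, p in zip(COMPONENTS, probs) if p <= LOW]
    return high, low


for label, probs in TABLE5.items():
    high, low = profile(probs)
    print(f"{label}: {len(high)} high, {len(low)} low")
    print("  high:", ", ".join(high) or "none")
    print("  low: ", ", ".join(low) or "none")
```

Components that fall in neither list are the "mixed" probabilities described in the text.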

Examining Role of Contextual Factors with IBH Implementation

Contextual factors, including rurality, race/ethnicity, patient risk ratios, organization size, and practice size, were examined as predictors of membership in the four IBH implementation classes resulting from the latent class analysis (see Table 6). Because we made no specific hypotheses about which classes might vary and in what manner, we examined all pairwise class differences. Partial IBH was the only class to differ from every other class on at least one contextual factor. Specifically, Partial IBH clinics were more likely to be urban than clinics in other classes (Low IBH: B = 0.48, p < 0.001; Structural IBH: B = 0.63, p = 0.04; Strong IBH: B = 0.34, p < 0.001), less likely to serve patients with high SES risk than Structural IBH clinics (B = 13.79, p = 0.04), and less likely to be within larger healthcare organizations than Strong IBH clinics (B = 0.05, p = 0.04).

Table 6.

Regression results of IBH class membership on context variables using class 3 (Partial IBH) as reference class

| Partial IBH vs | Predictor | B | SE | p |
|---|---|---|---|---|
| Low IBH | Rurality | 0.48 | 0.12 | < 0.001* |
| Low IBH | Clinic-level patient SES risk | 4.01 | 5.60 | 0.47 |
| Low IBH | Area race (% White) | 0.01 | 0.05 | 0.91 |
| Low IBH | Org size (#clinics) | 0.05 | 0.04 | 0.19 |
| Low IBH | Practice size (#patients) | 0.01 | 0.04 | 0.85 |
| Structural IBH | Rurality | 0.63 | 0.31 | 0.04* |
| Structural IBH | Clinic-level patient SES risk | 13.79 | 6.85 | 0.04* |
| Structural IBH | Area race (% White) | −0.01 | 0.06 | 0.84 |
| Structural IBH | Org size (#clinics) | 0.00 | 0.04 | 0.95 |
| Structural IBH | Practice size (#patients) | 0.04 | 0.05 | 0.33 |
| Strong IBH | Rurality | 0.34 | 0.08 | < 0.001* |
| Strong IBH | Clinic-level patient SES risk | 4.17 | 4.76 | 0.38 |
| Strong IBH | Area race (% White) | 0.01 | 0.04 | 0.77 |
| Strong IBH | Org size (#clinics) | 0.05 | 0.02 | 0.04* |
| Strong IBH | Practice size (#patients) | 0.03 | 0.04 | 0.39 |

*Indicates significance at p < 0.05
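To help readers relate the B and SE columns to the p column, the sketch below recomputes approximate two-sided p-values with a Wald z-test (z = B / SE under a normal approximation). This is an assumption made for illustration: the test used by the authors' software is not stated in this section and may differ. The function `wald_test` and the list `TABLE6` are hypothetical names introduced here, with a subset of rows transcribed from Table 6.

```python
import math


def wald_test(b, se):
    """Return (z, two-sided p) for a coefficient under a normal approximation."""
    z = b / se
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


# (comparison class, predictor, B, SE), transcribed from Table 6 as rounded there.
TABLE6 = [
    ("Low IBH", "Rurality", 0.48, 0.12),
    ("Low IBH", "Clinic-level patient SES risk", 4.01, 5.60),
    ("Structural IBH", "Rurality", 0.63, 0.31),
    ("Structural IBH", "Clinic-level patient SES risk", 13.79, 6.85),
    ("Strong IBH", "Rurality", 0.34, 0.08),
    ("Strong IBH", "Org size (#clinics)", 0.05, 0.02),
]

for comparison, predictor, b, se in TABLE6:
    z, p = wald_test(b, se)
    print(f"Partial IBH vs {comparison:<15} {predictor:<30} z = {z:5.2f}, p = {p:.4f}")
```

Because B and SE are reported to two decimals, recomputed p-values need not match the table exactly. Rows with small coefficients, such as organization size, are especially sensitive to this rounding.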

Discussion

The main aim of this study was to describe IBH implementation in a community sample of primary care clinics using the IBH Cross-Model Framework's processes and structures, which reflect common models of IBH, specifically by identifying implementation patterns that occur naturally among clinics that have implemented IBH at various levels. Overall, findings indicated that the IBH Cross-Model Framework can be operationalized to examine IBH implementation in a heterogeneous sample of clinics, that IBH implementation may fall into identifiable patterns, and that clinics may be influenced toward certain patterns by their social context.

Normative patterns were identified for where clinics fall on the spectrum of IBH implementation. Clinics that demonstrated almost universally high integration (Strong IBH clinics) had one vulnerability: funding integration. Given that payers offer few robust reimbursement opportunities to drive financial sustainability for integrated behavioral health providers in primary care (Ma et al., 2021), this finding was not surprising. There were also clinics with predictably low IBH integration, a class that likely includes any clinic that has not begun the IBH implementation process. A number of these clinics had achieved population-based care (58%) and shared their EHR with behavioral health providers (46%). This may be due to state policies requiring certain screening and reporting for mental health and the use of electronic medical records. Two mixed classes of IBH integration also emerged, in which clinics had implemented some principles and structures more fully than others. Specifically, many of these mixed-class clinics had made progress toward IBH (i.e., 86% of Structural and 41% of Partial IBH clinics had a behavioral health provider onsite who shared clinic space and resources with the medical providers), but they also experienced barriers, particularly around team processes central to the IBH Cross-Model Framework (i.e., only 30% of Structural and 7% of Partial IBH clinics scored high on team-based care). Team-based care in this study encompassed four processes, two of which have been shown to improve rates of integration: provider buy-in and training of physicians, teams, and staff (Eghaneyan et al., 2014).
The low level of team-based care occurred in all classes except the Strong IBH class, even in clinics with strong organizational leadership support for IBH and even when physical integration and funding integration had already occurred, likely because of the complexity of establishing consistent team-based care (Brown-Johnson et al., 2018; Fiscella & McDaniel, 2018). This finding demonstrates the importance of assessing the specific components of integration within clinics that have IBH, which may explain the variation in clinical outcomes (e.g., depression remission) in studies such as Unützer et al. (2020). It also provides specific targets for implementation intervention and support. To develop successful implementation interventions and supports, it is important to follow what Garland et al. (2010) suggest for behavioral health services in general: measuring implementation through feedback systems and training behavioral health providers in common elements of evidence-based care. This will be essential for improving fidelity to effective models of care in a variety of clinical settings.

Our findings revealed mixed associations between contextual variables and implementation classes. Structural IBH clinics tended to serve patients with higher SES risk than Partial IBH clinics. Because this contrast held between only two classes and not across the board, the conclusions drawn are tentative. Clinics with higher SES risk patients had moved toward implementing elements of IBH more than clinics with lower SES risk patients, particularly the structures of IBH such as physical integration and funding integration, and had stronger leadership support for IBH. Patients with higher SES risk often have more complex biopsychosocial and care management needs (Cook et al., 2019), and these clinics may have found that coordinating care was easier in an IBH model. However, some were either still in the implementation process or had hit barriers in implementing patient-centric care, treatment to target, evidence-based practice use, team-based care, and shared EHR. Increasing implementation support for these clinics in these specific domains may improve IBH implementation and, in turn, provider and patient satisfaction and clinical outcomes.

Strong IBH clinics differed from Partial IBH clinics only by being in larger organizations. Larger organizations likely have more resources for clinics to implement IBH and can supplement insufficient funding streams more easily, which may be particularly important since many of the Strong IBH clinics did not have full funding integration (Jacobs et al., 2015; Kaplan et al., 2010). The sum of the differences between Partial IBH clinics and the others, namely that they were urban, tended to be in smaller organizations, and tended to serve higher-SES patients, suggests that these clinics likely referred patients elsewhere for mental health treatment. Research has demonstrated that higher-income and denser urban areas have greater availability of mental health providers (Holzer et al., 2000); in addition, smaller organizations may have fewer resources to initiate practice transformation (Kaplan et al., 2010). More traditional referral to the community may have worked well enough for these clinics, a possibility that warrants further research.

Another key point of interest in the findings related to clinic context was the negative findings, namely that no differences were found in the race/ethnicity make-up of the area or in practice size. This provides some initial indication that even smaller clinics may be finding ways to implement IBH. A limitation of the race variable was that it described the whole city where the clinic was located, rather than the patient population of the clinic itself. More research is needed to determine whether more versus less racially diverse areas differ in access to IBH services and whether people of diverse races/ethnicities are accessing, or are able to access, IBH services equitably.

Finally, a clinic self-report measure of integration (Scheirer et al., 2010) was able to be mapped to a framework (Stephens et al., 2020). While the self-report did not encompass all aspects of the framework, what it did capture was consistent with the concepts of the framework, and provided a clearly defined naturally occurring clustering of IBH processes and structures across a diverse set of primary care clinics. Future research might examine (1) additional measures of integration and their ability to map to the IBH Cross-Model Framework, and (2) whether mapping different measures of integration to the framework varies the patterns of IBH found across clinics that can offer more direction on how to target and improve implementation.

Strengths and Limitations

This is the first study to demonstrate that application of a generalized IBH framework across different models of IBH can provide insight into implementation gaps in a more nuanced manner than relying on model labels. Categorizing clinics by their IBH implementation will allow us to begin to see which elements of IBH are related or cluster together, and hypothesize for future research where and how to intervene in the implementation process to increase a clinic’s success at achieving full integration. This study was the first to consider, on a broad scale, what relationship various contexts may have with a clinic’s IBH implementation. Clinics in various contexts and serving various populations have implemented different levels of processes and structures of IBH.

Limitations include a small sample size of 102 clinics for a latent class analysis. While the four-class solution was the best option for this dataset, the classes should be replicated and validated with new and/or larger samples. Second, the SSA was not developed to operationalize the IBH Cross-Model Framework. Therefore, we were unable to test all aspects of the framework, and likely not all nuances of each principle or structure were represented in the indicators utilized in this study. Other measures of integration should be mapped onto the IBH Cross-Model Framework to validate both the measures and the framework, and to move the field toward a more consistent methodology of measurement. In addition, mapping existing measures may allow for comparisons across previous studies that have not yet been possible. Another limitation is that, while the SSA was typically completed by more than one employee at each clinic, it was still a self-report measure that may reflect response biases. Future research should examine our research questions with a larger sample of clinics with greater racial/ethnic diversity, and should ask specific questions about the size and location of the clinic that can further contextualize it, such as FTE of medical and behavioral providers. Questions about clinic set-up, such as location of desks and frequency of medical-behavioral interactions, would also contextualize and validate the approach and results in our study. In addition, limitations of our data prevented a more in-depth consideration of the multi-faceted effects of racism on healthcare and the distribution or uptake of resources to clinics with larger minority populations. Consideration of the racial opportunity gap (O’Brien et al., 2020) and access to integrated care is recommended.

Conclusions

This study demonstrates the use of a general framework for evaluating differences in primary care implementation of integrated behavioral health care across diverse settings. IBH implementation efforts may benefit from addressing barriers and facilitators of the more granular principles and structures, rather than treating implementation success as an overall yes/no outcome. Based on these findings, IBH implementation may stall or struggle at various levels, given that some components (e.g., population-based care) were more present in implementations of IBH than others (e.g., team-based care), and clinic context was also associated with variations in implementation. Findings indicated that IBH implementation was not globally “complete,” but benefited from being assessed multidimensionally. Specific patterns of IBH implementation in this study can be used as a foundation for future research examining how variations in IBH implementation affect healthcare management and clinical outcomes, and for finding optimal implementation levels to achieve improvements in these outcomes as well as equity across various clinical settings.

Acknowledgments

Funding This work is supported by ICSI, an ongoing collaboration of healthcare systems in Minnesota focused on improving health across the upper Midwest. Additionally, the first author was supported by the National Institutes of Health’s National Center for Advancing Translational Sciences, Grants TL1R002493 and UL1TR002494. The content is solely the responsibility of the authors and does not necessarily represent the official views of either the University of Minnesota or the National Institutes of Health’s National Center for Advancing Translational Sciences.

Footnotes

Declarations

Conflict of interest The authors declare no conflicts of interest.

Research Involving Human and Animal Participants This study does not involve human participants and informed consent was therefore not required.

References

- Agency for Healthcare Research and Quality. (2020). 2019 National healthcare quality and disparities report. Retrieved 27 Feb 2021, from https://www.ahrq.gov/sdoh/about.html

- Archer J, Bower P, Gilbody S, Lovell K, Richards D, Gask L, Dickens C, & Coventry P (2012). Collaborative care for depression and anxiety problems. Cochrane Database of Systematic Reviews.

- Asparouhov T, & Muthén BO (2014). Appendices for auxiliary variables in mixture modeling: 3-step approaches using Mplus manually versus all steps done automatically. Structural Equation Modeling.

- Asparouhov T, & Muthén B (2019). Auxiliary variables in mixture modeling: Using the BCH method in Mplus to estimate a distal outcome model and an arbitrary second model. Mplus Web Notes, 21, 1–27.

- Beehler GP, Funderburk JS, King PR, Wade M, & Possemato K (2015). Using the Primary Care Behavioral Health Provider Adherence Questionnaire (PPAQ) to identify practice patterns. Translational Behavioral Medicine, 5(4), 384–392.

- Briggs RD, German M, Hershberg RS, Cirilli C, Crawford DE, & Racine AD (2016). Integrated pediatric behavioral health: Implications for training and intervention models. Professional Psychology: Research and Practice, 47(4), 312–319.

- Brown-Johnson CG, Chan GK, Winget M, Shaw JG, Patton K, Hussain R, Olayiwola JN, Chang S, & Mahoney M (2018). Primary care 2.0: Design of a transformational team-based practice model to meet the quadruple aim. American Journal of Medical Quality.

- Brownson CA, Miller D, Crespo R, Neuner S, Thompson J, Wall JC, Emont S, Fazzone P, Fisher EB, & Glasgow RE (2007). A quality improvement tool to assess self-management support in primary care. The Joint Commission Journal on Quality and Patient Safety, 33(7), 408–416.

- Churchwell K, Elkind MSV, Benjamin RM, Carson AP, Chang EK, Lawrence W, Mills A, Odom TM, Rodriguez CJ, Rodriguez F, Sanchez E, Sharrief AZ, Sims M, & Williams O (2020). Call to action: Structural racism as a fundamental driver of health disparities: A presidential advisory from the American Heart Association. Circulation.

- Cohen DJ, Davis M, Balasubramanian BA, Gunn R, Hall J, DeGruy FV, Peek CJ, Green LA, Stange KC, Pallares C, Levy S, Pollack D, & Miller BF (2015a). Integrating behavioral health and primary care: Consulting, coordinating and collaborating among professionals. The Journal of the American Board of Family Medicine, 28(Supplement 1), S21–S31.

- Cohen DJ, Davis MM, Hall JD, Gilchrist EC, & Miller BF (2015b). A guidebook of professional practices for behavioral health and primary care integration: Observations from exemplary sites. Agency for Healthcare Research and Quality.

- Cook BL, Hou SS-Y, Lee-Tauler SY, Progovac AM, Samson F, & Sanchez MJ (2019). A review of mental health and mental health care disparities research: 2011–2014. Medical Care Research and Review, 76(6), 683–710.

- Cook BL, Zuvekas SH, Chen J, Progovac A, & Lincoln AK (2017). Assessing the individual, neighborhood, and policy predictors of disparities in mental health care. Medical Care Research and Review, 74(4), 404–430.

- Davidson KW, Goldstein M, Kaplan RM, Kaufmann PG, Knatterud GL, Orleans CT, Spring B, Trudeau KJ, & Whitlock EP (2003). Evidence-based behavioral medicine: What is it and how do we achieve it? Annals of Behavioral Medicine, 26(3), 161–171.

- Eghaneyan BH, Sanchez K, & Mitschke DB (2014). Implementation of a collaborative care model for the treatment of depression and anxiety in a community health center: Results from a qualitative case study. Journal of Multidisciplinary Healthcare, 7, 503–513.

- Fiscella K, & McDaniel SH (2018). The complexity, diversity, and science of primary care teams. American Psychologist, 73(4), 451–467.

- Garland AF, Bickman L, & Chorpita BF (2010). Change what? Identifying quality improvement targets by investigating usual mental health care. Administration and Policy in Mental Health and Mental Health Services Research, 37(1–2), 15–26.

- Holden K, McGregor B, Thandi P, Fresh E, Sheats K, Belton A, Mattox G, & Satcher D (2014). Toward culturally centered integrative care for addressing mental health disparities among ethnic minorities. Psychological Services, 11(4), 357–368.

- Holzer CE, Goldsmith HF, & Ciarlo JA (2000). The availability of health and mental health providers by population density. Journal of the Washington Academy of Sciences, 86(3), 25–33.

- Hunter CL, & Goodie JL (2010). Operational and clinical components for integrated-collaborative behavioral healthcare in the patient-centered medical home. Families, Systems and Health, 28(4), 308–321.

- Hunter CL, Funderburk JS, Polaha J, Bauman D, Goodie JL, & Hunter CM (2018). Primary care behavioral health (PCBH) model research: Current state of the science and a call to action. Journal of Clinical Psychology in Medical Settings, 25(2), 127–156.

- Jacobs SR, Weiner BJ, Reeve BB, Hofmann DA, Christian M, & Weinberger M (2015). Determining the predictors of innovation implementation in healthcare: A quantitative analysis of implementation effectiveness. BMC Health Services Research, 15(1), 1–13.

- Jensen EJ, & Mendenhall T (2018). Call to action: Family therapy and rural mental health. Contemporary Family Therapy, 40(4), 309–317.

- Kaplan HC, Brady PW, Dritz MC, Hooper DK, Linam WM, Froehle CM, & Margolis P (2010). The influence of context on quality improvement success in health care: A systematic review of the literature. Milbank Quarterly, 88(4), 500–559.

- Kearney LK, Smith CA, & Pomerantz AS (2015). Capturing psychologists’ work in integrated care: Measuring and documenting administrative outcomes. Journal of Clinical Psychology in Medical Settings, 22(4), 232–242.

- Kessler RS, Auxier A, Hitt JR, Macchi CR, Mullin D, van Eeghen C, & Littenberg B (2016). Development and validation of a measure of primary care behavioral health integration. Families, Systems, & Health, 34(4), 342–356.

- Kroenke K, & Unutzer J (2017). Closing the false divide: Sustainable approaches to integrating mental health services into primary care. Journal of General Internal Medicine, 32(4), 404–410.

- Kwan BM, & Nease DE (2013). Integrated behavioral health in primary care. In Talen MR & Burke Valeras A (Eds.), Integrated behavioral health in primary care. Springer.

- Lanza ST, Bray BC, & Collins LM (2012). An introduction to latent class and latent transition analysis. Handbook of Psychology (2nd ed., Vol. 2, pp. 2–4). Wiley.

- Ma KPK, Mollis B, Rolfes J, Au M, Prado M, Crocker A, Scholle SH, Kessler R, Baldwin L, & Stephens KAP (2021). Primary care payment models for integrated behavioral health in hospital-owned and non-hospital-owned clinics. Poster presented at Academy Health 2021 Annual Research Meeting.

- Mauro PM, Furr-Holden CD, Strain EC, Crum RM, & Mojtabai R (2016). Classifying substance use disorder treatment facilities with co-located mental health services: A latent class analysis approach. Drug and Alcohol Dependence, 163, 108–115.

- MN Community Measurement. (2020). Minnesota health care quality report 2019.

- Muthén LK, & Muthén BO (2017). Mplus user’s guide (8.4; Eighth Edn). Muthén & Muthén. Retrieved 11 Feb 2020, from https://www.statmodel.com/.

- National Committee for Quality Assurance. (2017). NCQA PCMH standards and guidelines. Retrieved from https://store.ncqa.org/index.php/catalog/product/view/id/2776/s/2017-pcmh-standards-and-guidelines-epub/.

- Nylund KL, Asparouhov T, & Muthén BO (2007). Deciding on the number of classes in latent class analysis and growth mixture modeling: A Monte Carlo simulation study. Structural Equation Modeling, 14(4), 535–569.

- O’Brien R, Neman T, Seltzer N, Evans L, & Venkataramani A (2020). Structural racism, economic opportunity and racial health disparities: Evidence from U.S. counties. SSM - Population Health, 11, 100564.

- Peek CJ, & The National Integration Academy Council. (2013). Lexicon for behavioral health and primary care integration: Concepts and definitions developed by expert consensus. AHRQ publication no. 13-IP001-EF. Agency for Healthcare Research and Quality. Retrieved 29 April 2019, from https://integrationacademy.ahrq.gov/sites/default/files/Lexicon.pdf.

- Possemato K, Johnson EM, Beehler GP, Shepardson RL, King P, Vair CL, Funderburk JS, Maisto SA, & Wray LO (2018). Patient outcomes associated with primary care behavioral health services: A systematic review. General Hospital Psychiatry, 53, 1–11.

- Proctor E, Silmere H, Raghavan R, Hovmand P, Aarons G, Bunger A, Griffey R, & Hensley M (2011). Outcomes for implementation research: Conceptual distinctions, measurement challenges, and research agenda. Administration and Policy in Mental Health and Mental Health Services Research, 38(2), 65–76.

- Reiss-Brennan B, Brunisholz KD, Dredge C, Briot P, Grazier K, Wilcox A, Savitz L, & James B (2016). Association of integrated team-based care with health care quality, utilization, and cost. JAMA - Journal of the American Medical Association, 316(8), 826–834.

- Reiter JT, Dobmeyer AC, & Hunter CL (2018). The primary care behavioral health (PCBH) model: An overview and operational definition. Journal of Clinical Psychology in Medical Settings, 25(2), 109–126.

- Santiago CD, Kaltman S, & Miranda J (2013). Poverty and mental health: How do low-income adults and children fare in psychotherapy? Journal of Clinical Psychology, 69(2), 115–126.

- Scheirer MA, Leonard BA, Ronan L, & Boober BH (2010). Site self assessment tool for the Maine Health Access Foundation integration initiative. Augusta, Maine: Maine Health Access Foundation.

- Stamm BH, Lambert D, Piland NF, & Speck NC (2007). A rural perspective on health care for the whole person. Professional Psychology: Research and Practice, 38(3), 298–304.

- Stephens KA, van Eeghen C, Mollis B, Au M, Brennhofer SA, Martin M, Clifton J, Witwer E, Hansen A, Monkman J, Buchanan G, & Kessler R (2020). Defining and measuring core processes and structures in integrated behavioral health in primary care: A cross-model framework. Translational Behavioral Medicine, 10(3), 527–538.

- Unützer J, Harbin H, Schoenbaum M, & Druss BG (2013). The collaborative care model: An approach for integrating physical and mental health care in Medicaid health homes. In Health Home: Information Resource Center (Issue May).

- Unützer J, Carlo AC, Arao R, Vredevoogd M, Fortney J, Powers D, & Russo J (2020). Variation in the effectiveness of collaborative care for depression: Does it matter where you get your care? Health Affairs, 39(11), 1943–1950.

- Vogel ME, Kanzler KE, Aikens JE, & Goodie JL (2017). Integration of behavioral health and primary care: Current knowledge and future directions. Journal of Behavioral Medicine, 40(1), 69–84.

- Yaghjyan L, Cogle CR, Deng G, Yang J, Jackson P, Hardt N, Hall J, & Mao L (2019). Continuous rural-urban coding for cancer disparity studies: Is it appropriate for statistical analysis? International Journal of Environmental Research and Public Health, 16(6), 1–14.

- Young J, Gilwee J, Holman M, Messier R, Kelly M, & Kessler R (2012). Mental health, substance abuse, and health behavior intervention as part of the patient-centered medical home: A case study. Translational Behavioral Medicine, 2(3), 345–354.