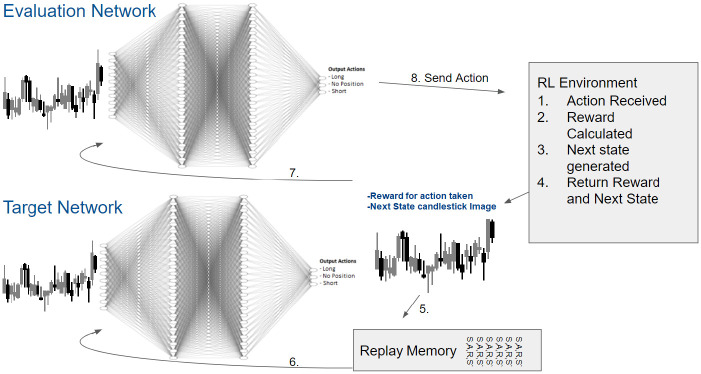

Fig 6. DDQN structure and workflow with the RL environment.

1. An action is received by the RL environment. 2. The reward is calculated. 3. The next state, a candlestick image, is generated. 4. The reward and next state are returned by the RL environment. 5. The next state is stored in the replay memory. 6. The replay memory stores 1000 previous (state, action, reward, next state) observations. The target network is trained on observations selected at random from this memory. Every 100 steps, the target network weights are copied to the evaluation network. 7. The next state is also fed directly into the evaluation network. 8. The next action is output by the DDQN and sent to the RL environment.
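The bookkeeping in steps 5 and 6 can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: only the memory size (1000) and the copy interval (100 steps) come from the caption. The names `Transition`, `ReplayMemory`, and `should_sync` are hypothetical, the first-in-first-out eviction of old observations is an assumption, and the networks themselves are left out.

```python
import random
from collections import deque, namedtuple

# Hypothetical container for one observation (state, action, reward, next state).
Transition = namedtuple("Transition", ["state", "action", "reward", "next_state"])


class ReplayMemory:
    """Fixed-size store of past observations (assumed first-in-first-out)."""

    def __init__(self, capacity=1000):
        # A deque with maxlen silently drops the oldest entry once it is full.
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state):
        self.buffer.append(Transition(state, action, reward, next_state))

    def sample(self, batch_size):
        # Uniform random selection without replacement.
        return random.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def should_sync(step, interval=100):
    """Return True on every `interval`-th step, when weights would be copied."""
    return step > 0 and step % interval == 0


# Usage with placeholder integers standing in for candlestick images.
memory = ReplayMemory()
sync_steps = []
for step in range(1, 1501):
    memory.push(state=step - 1, action=0, reward=0.0, next_state=step)
    if should_sync(step):
        sync_steps.append(step)  # a real agent would copy network weights here

batch = memory.sample(32)  # batch size of 32 is an arbitrary choice
```

After 1500 steps the memory holds only the latest 1000 observations, and the weight copy would have been triggered at steps 100, 200, and so on. In a full agent, the sampled `batch` would be passed to the training update described in step 6.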