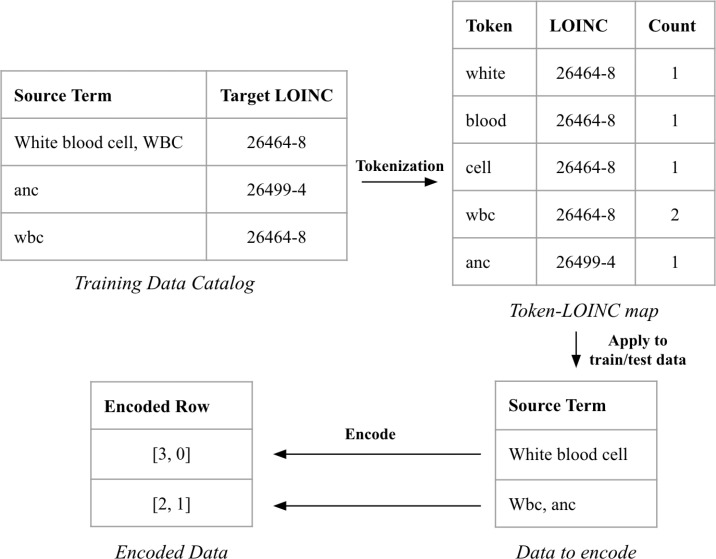

Figure 1: Tokenization encoding process. The training data are processed and tokenized to form the token-LOINC map. This map is then used to encode both the training and test data, producing vectorized rows. Each encoded row has one index for every LOINC in the training data catalog (here only 2 LOINCs), and the value at each index is the number of the row's tokens that overlap with training data tokens mapped to that LOINC. The first row is encoded as [3, 0] because 3 tokens in its source term match tokens mapped to LOINC 26464-8, and none match tokens mapped to LOINC 26499-4.
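The encoding in Figure 1 can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation. The tokenizer (lowercase, split on non-alphanumeric characters), the choice to count distinct tokens rather than repeated occurrences, the sorted column order, the helper names, and the example source terms are all hypothetical.

```python
import re
from collections import defaultdict


def tokenize(term: str) -> set[str]:
    """Hypothetical tokenizer: lowercase, then split on non-alphanumeric characters."""
    return {tok for tok in re.split(r"[^a-z0-9]+", term.lower()) if tok}


def build_token_loinc_map(training_rows):
    """Map each LOINC code to the set of tokens seen in its training source terms."""
    loinc_tokens = defaultdict(set)
    for source_term, loinc in training_rows:
        loinc_tokens[loinc] |= tokenize(source_term)
    return dict(loinc_tokens)


def encode(source_term, loinc_tokens, catalog):
    """Return one count per LOINC in `catalog`: how many distinct tokens of the
    term also appear among the training tokens mapped to that LOINC."""
    tokens = tokenize(source_term)
    return [len(tokens & loinc_tokens.get(loinc, set())) for loinc in catalog]


# Hypothetical training rows: (source term, LOINC)
training = [
    ("White Blood Cells", "26464-8"),
    ("Absolute Neutrophils", "26499-4"),
]
token_map = build_token_loinc_map(training)
catalog = sorted(token_map)  # assumed fixed column order: one index per training LOINC

print(catalog)  # ['26464-8', '26499-4']
print(encode("white blood cells", token_map, catalog))  # [3, 0]
print(encode("neutrophils, absolute count", token_map, catalog))  # [0, 2]
```

Because the map is built only from training data, a test term whose tokens never appeared in training encodes to all zeros, and a LOINC absent from the training catalog has no column at all.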