Abstract

The solar ultraviolet index (UVI) is a key public health indicator to mitigate the ultraviolet-exposure related diseases. This study aimed to develop and compare the performances of different hybridised deep learning approaches with a convolutional neural network and long short-term memory referred to as CLSTM to forecast the daily UVI of Perth station, Western Australia. A complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) is incorporated coupled with four feature selection algorithms (i.e., genetic algorithm (GA), ant colony optimization (ACO), particle swarm optimization (PSO), and differential evolution (DEV)) to understand the diverse combinations of the predictor variables acquired from three distinct datasets (i.e., satellite data, ground-based SILO data, and synoptic mode climate indices). The CEEMDAN-CLSTM model coupled with GA appeared to be an accurate forecasting system in capturing the UVI. Compared to the counterpart benchmark models, the results demonstrated the excellent forecasting capability (i.e., low error and high efficiency) of the recommended hybrid CEEMDAN-CLSTM model in apprehending the complex and non-linear relationships between predictor variables and the daily UVI. The study inference can considerably enhance real-time exposure advice for the public and help mitigate the potential for solar UV-exposure-related diseases such as melanoma.

Keywords: Deep learning, Hybrid model, Solar ultraviolet index, Optimization algorithms, Public health

Introduction

Solar ultraviolet (UV) radiation is an essential component in the sustenance of life on Earth (Norval et al. 2007). The UV irradiance consists of a small fraction (e.g., 5–7%) of the total radiation and produces numerous beneficial effects on human health. It has been in use since ancient times for improving body’s immune systems, such as strengthening bones and muscles (Juzeniene and Moan 2012) as well as treating various hard-to-treat skin diseases such as atopic dermatitis, psoriasis, phototherapy of localised scleroderma (Furuhashi et al. 2020; Kroft et al. 2008), and vitiligo (Roshan et al. 2020). UV-stimulated tanning has a positive mood changing and relaxing effect on many (Sivamani et al. 2009). Further, UV-induced nitrogen oxide (NO) plays a vital role in reducing human blood pressure (Juzeniene and Moan 2012; Opländer Christian et al. 2009).

UV light has also been widely used as an effective disinfectant in the food and water industries to inactivate disease-producing microorganisms (Gray 2014). Because of its effectiveness against protozoa contamination, the use of UV light as a drinking water disinfectant has achieved increased acceptance (Timmermann et al. 2015). To date, most of the UV-installed public water supplies are in Europe. In the United States (US), its application is mainly limited to groundwater treatment (Chen et al. 2006). However, its use is expected to increase in the future for the disinfection of different wastewater systems. Developing countries worldwide find it useful as it offers a simple, low-cost, and effective disinfection technique in water treatment compared to the traditional chlorination method (Mäusezahl et al. 2009; Pooi and Ng 2018).

The application of UV light has also shown potency in fighting airborne-mediated diseases for a long time (Hollaender et al. 1944; Wells and Fair 1935). For instance, a recent study demonstrated that a small dose (i.e., 2 mJ/cm2 of 222-nm) of UV-C light could efficiently inactivate aerosolized H1N1 influenza viruses (Welch et al. 2018). The far UV-C light can also be used to sterilize surgical equipment. Recently, the use of UV-C light as the surface disinfectant has been significantly increased to combat the global pandemic (COVID-19) caused by coronavirus SARS-CoV2. A recent study also highlighted the efficacy of UV light application in the disinfection of COVID-19 surface contamination (Heilingloh et al. 2020).

However, the research on UV radiation has also been a serious concern due to its dichotomous nature. UV irradiance can also have detrimental biological effects on human health, such as skin cancer and eye disease (Lucas et al. 2008; Turner et al. 2017). Chronic exposure to UV light has been reported as a significant risk factor responsible for melanoma and non-melanoma cancers (Saraiya et al. 2004; Sivamani et al. 2009) and is associated with 50–90% of these diseases. In a recent study, the highest global incidence rates of melanoma were observed in the Australasia region compared to other North American and European parts (Karimkhani et al. 2017). Therefore, it is crucial to provide correct information about the intensity of UV irradiance to the people at risk to protect their health. This information would also help people in different sectors (e.g., agriculture, medical sector, water management, etc.).

The World Health Organization (WHO) formulated the global UV index (UVI) as a numerical public health indicator to convey the associated risk when exposed to UV radiation (Fernández-Delgado et al. 2014; WHO 2002). However, UV irradiance estimation in practice requires ground-based physical models (Raksasat et al. 2021) and satellite-derived observing systems with advanced technical expertise (Kazantzidis et al. 2015). The installation of required equipment (i.e., spectroradiometers, radiometers, and sky images) is expensive (Deo et al. 2017) and difficult for remote regions, primarily mountainous areas. Furthermore, the solar irradiance is also highly impacted by many hydro-climatic factors, e.g., clouds and aerosol (Li et al. 2018; Staiger et al. 2008) and ozone (Baumgaertner et al. 2011; Tartaglione et al. 2020) that can insert considerable uncertainties into the available process-based and empirical models (details also given in the method section). Therefore, the analysis of sky images may also require extensive bias corrections, i.e., cloud modification (Krzyścin et al. 2015; Sudhibrabha et al. 2006), which creates further technical as well as computational burdens. An application of data-driven models can be helpful to minimize these formidable challenges. Specifically, the non-linearity into the data matrix can easily be handled using data-driven models that traditional process-based and semi-process-based models fail. Further, the data-driven models are easy to implement, do not demand high process-based cognitions (Qing and Niu 2018; Wang et al. 2018), and are computationally less burdensome.

As an alternative to conventional process-based and empirical models, applying different machine learning (ML) algorithms as data-driven models have proven tremendously successful because of the powerful computational efficiency. With technological advancement, computational efficiency has been significantly increased, and researchers have developed many ML tools. Artificial neural networks (ANNs) are the most common and extensively employed in solar energy applications (Yadav and Chandel 2014). However, many studies, such as the multiple layer perceptron (MLP) neural networks (Alados et al. 2007; Alfadda et al. 2018), support vector regression (SVR) (Fan et al. 2020; Kaba et al. 2017), decision tree (Jiménez-Pérez and Mora-López 2016), and random forest (Fouilloy et al. 2018) have also been extensively applied in estimating the UV erythemal irradiance. The multivariate adaptive regression splines (MARS) and M5 algorithms were applied in a separate study for forecasting solar radiation (Srivastava et al. 2019). Further, the deep learning network such as the convolutional neural network (CNN) (Szenicer et al. 2019) and the long short-term memory (LSTM) (Ahmed et al. 2021b, c; Huang et al. 2020; Qing and Niu 2018; Raksasat et al. 2021) are recent additions in this domain.

However, the UVI indicator is more explicit to common people than UV irradiance values. Further, only a few data-driven models have been applied for UVI forecasting. For example, an ANN was used in modeling UVI on a global scale (Latosińska et al. 2015). An extreme learning method (ELM) was applied in forecasting UVI in the Australian context (Deo et al. 2017). There have not been many studies that used ML methods to forecast UVI. Albeit the successful predictions of these standalone ML algorithms, they have architectural flaws and predominantly suffer from overfitting efficiency (Ahmed and Lin 2021). Therefore, the hybrid deep learning models receive increased interest and are extremely useful in predictions with higher efficiency than the standalone machine learning models. Hybrid models such as particle swarm optimization (PSO)-ANN, wavelet-ANN (Zhang et al. 2019), genetic algorithm (GA)-ANN (Antanasijević et al. 2014), Boruta random forest (BRF)-LSTM (Ahmed et al. 2021a, b, c, d), ensemble empirical mode decomposition (EEMD) (Liu et al. 2015), adaptive neuro-fuzzy inference system (ANFIS)-ant colony optimization (ACO) (Pruthi and Bhardwaj 2021) and (ACO)-CNN-GRU have been applied across disciplines and attained substantial tractions. However, a CNN-LSTM (i.e., CLSTM) hybrid model can efficiently extract inherent features from the data matrix than other machine learning models and has successfully predicted time series air quality and meteorological data (Pak et al. 2018). This study incorporates four feature selection algorithms (i.c., GA, PSO, ACO, and DEV) to optimize the training procedure and try different predictor variables selected by the feature selection algorithms. Adapting different feature selection approaches would give a diverse understanding of the predictors and effectively quantify the features of UVI. Moreover, integration of convolutional neural network as a feature extraction method gives a further improvement of UVI forecasting, as confirmed by numerous researchers (c 2021a; Ghimire et al. 2019; Huang and Kuo 2018; Wu et al. 2021). The application of such a hybrid model for predicting sequence data, i.e., the UVI for consecutive days, can be an effective tool with excellent predictive power. However, the forecasting of UVI with a CLSTM hybrid machine learning model is yet to be explored and was a key motivation for conducting this present study.

In this study, we employed a new model of EMD called complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) (Ahmed et al. 2021b; Prasad et al. 2018). In CEEMDAN-based decomposition, Gaussian white noise with a unit variance is added consecutively at each stage to reduce the forecasting procedure’s complexity (Di et al. 2014). Over the last few years, CEEMDAN techniques have been successfully implemented in forecasting soil moisture (Ahmed et al. 2021b; Prasad et al. 2018, 2019a, b), draught (Liu and Wang 2021), precipitation (Wang et al. 2022), and wind energy (Liang et al. 2020; Zhang et al. 2017). However, a previous version (i.e., EEMD) was used in forecasting streamflow (Seo and Kim 2016) and rainfall (Beltrán-Castro et al. 2013; Jiao et al. 2016; Ouyang et al. 2016). The machine learning algorithm used in the study is CLSTM, which has not been coupled with the EEMD or CEEMDAN to produce a UVI forecast system.

This study aims to apply a CLSTM hybrid machine learning model, which can exploit the benefits of both convolutional layers (i.e., feature extraction) and LSTM layers (i.e., storing sequence data for an extended period) and evaluate its ability to forecast the UVI for the next day efficiently. The model was constructed and fed with hydro-climatic data associated with UV irradiance in the Australian context. The model was optimized using ant colony optimization, genetic algorithms, particle swarm optimization, and differential evolutional algorithms. The model accuracy (i.e., efficiency and errors involved in UVI estimations) was assessed with the conventional standalone data-driven models’ (e.g., SVR, decision tree, MLP, CNN, LSTM, gated recurrent unit (GRU), etc.) performance statistics. The inference obtained from the modeling results was also tremendously valuable for building expert judgment to protect public health in the Australian region and beyond.

Materials and methods

Study area and UVI data

The study assessed the solar ultraviolet index of Perth (Latitude: − 31.93° E and Longitude: 115.10° S), Western Australia. The Australian Radiation Protection and Nuclear Safety Agency (ARPANSA) provided the UVI data for Australia from https://www.arpansa.gov.au (ARPANSA 2021). Figure 1 shows the monthly UVI data, the location of Perth, and the assessed station. The figure shows that Perth has had low to extreme UV concentrations between 1979 and 2007. The summer season (December to February) had the most extreme UV concentration. In contrast, autumn (March to May) has a moderate to high UVI value, and winter (June to August) demonstrates a lower to moderate, and spring (September to November) has a higher to extreme UVI value in Perth.

Fig. 1.

Study site (Perth, Australia) of the work and monthly average noon clear-sky UV index based on gridded analysis from the Bureau of Meteorology’s UV forecast model using NASA/GFSC TOMS OMI monthly ozone data sets between 1979 and 2007

Malignant melanoma rates in Western Australia are second only to those in Queensland, Australia’s most populated state (Slevin et al. 2000). Australia has the highest incidence of NMSC (Non-melanoma skin cancer) globally (Anderiesz et al. 2006; Staples et al. 1998). Approximately three-quarters of the cancer cases have basal cell carcinoma (BCC) and squamous cell carcinoma (SCC) types. These are attributed to the fair-skinned population’s high exposure to ambient solar radiation (Boniol 2016; McCarthy 2004). As a result, Australia is seen as a world leader in public health initiatives to prevent and detect skin cancer. Programs that have brought awareness of prevention strategies and skin cancer diagnoses have data to show that people act on their knowledge (Stanton et al. 2004). Several studies have found that decreasing sun protection measures are associated with a reduction in the rates of BCC and SCC in younger populations. They might have received cancer prevention messages as children (Staples et al. 2006). Considering the diversified concentration of UVI concentration, this study considers Perth an ideal study area (Fig. 2).

Fig. 2.

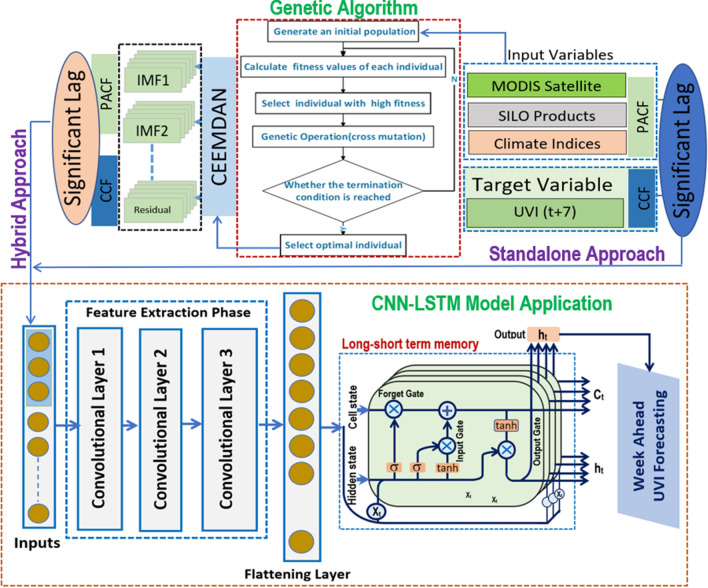

The developed model architecture of (Convolutional Neural Network, CNN) with the 4 layered long short term memory for a hybrid CNN-LSTM model to forecast a week daily maximum UV Index with Genetic Algorithm

Datasets of predictor variables

Three distinct data sources were used to collect the predictor variables in this analysis. The Moderate Resolution Imaging Spectroradiometer (MODIS) satellite datasets capture land surface status and flow parameters at regular temporal resolutions. These are supplemented by ground-based Scientific Information for Landowners (SILO) repository meteorological data for biophysical modeling and climate mode indices to help achieve Sea Surface Temperature (SST) over Australia. Geospatial Online Interactive Visualization and Analysis Infrastructure (GIOVANNI) is a geoscience data repository that provides a robust online visualization and analysis platform for geoscience datasets. It collects data from over 2000 satellite variables (Chen et al. 2010). The MODIS- aqua yielded 8 predictor variables for our study: a high-temporal terrestrial modeling system consisting of a surface state and providing daily products with a high resolution (250 m at nadir). A list of predictors of the MODIS Satellite can be obtained from the National Aeronautics and Space Administration (NASA) database (Giovanni 2021).

The surface UVI is influenced by atmospheric attenuation of incident solar radiation (Deo et al. 2017). The angle subtended from the zenith (θs) to the solar disc is another factor that affects the intensity of solar UV radiation (Allaart et al. 2004). The ultraviolet attenuation of clear-sky solar radiation is dependent on ozone and atmospheric aerosol concentrations, along with cloud cover (Deo et al. 2017). This implies that the measurements of biologically effective UV wavelengths are affected by total ozone column concentration. Incident radiation at the Earth’s surface is reduced by aerosols such as dust, smoke, and vehicle exhausts (Downs et al. 2016; Román et al. 2013). Moreover, Lee et al. (2009) found a significant correlation between UV solar radiation and geopotential height. Considering the direct influence of the predictors over ultraviolet radiation and UV index, this study collected ozone total column, aerosol optical depth (550 nm and 342.5 nm), geopotential height, cloud fraction, and combined cloud optical thickness data from the Geospatial Online Interactive Visualization and Analysis Infrastructure (GIOVANNI) repository.

Therefore, meteorological predictor variables (i.e., temperature, u- and v-winds) were significant while modeling UVI (Lee et al. 2009). Moreover, the cloud amount and diurnal temperature range have a strong positive correlation, while rainfall and cloud amount show a strong negative correlation (Jovanovic et al. 2011). Although overall cloud patterns agree with rainfall patterns across Australia, the higher-quality cloud network is too coarse to represent topographic influences accurately. Changes in the amount of cloud cover caused by climate change can result in long-term changes in maximum and minimum temperature. Owing to the relations of hydro-meteorological variables with UVI and their interconnections with cloud cover, the study selected nine meteorological variables from the Scientific Knowledge for Land-Owners (SILO) database to expand the pool of predictor variables, allowing for more practical application and model efficiency. SILO data are managed by Queensland’s Department of Environment and Research and can be obtained from https://www.longpaddock.qld.gov.au/silo.

Aerosol-rainfall relationships are also likely to be artifacts of cloud and cloud-clearing procedures. During the Madden–Julian Oscillation (MJO) wet phase, the high cloud’s value increases, the cloud tops rise, and increased precipitation enhances wet deposition, which reduces aerosol mass loading in the troposphere (Tian et al. 2008). The MJO (Lau and Waliser 2011; Madden and Julian 1971, 1994) dominates the intra-seasonal variability in the tropical atmosphere. A relatively slow-moving, large-scale oscillation in the deep tropical convection and baroclinic winds exists in the warmer tropical waters in the Indian and western Pacific Oceans (Hendon and Salby 1994; Kiladis et al. 2001; Tian et al. 2008). The study used the Real-time Multivariate MJO series 1 (RMM1) and 2 (RMM2) obtained from the Bureau of Meteorology, Australia (BOM 2020). Though RMM1 and RMM2 indicate an evolution of the MJO independent of season, the coherent off-equatorial behavior is strongly seasonal (Wheeler and Hendon 2004). Pavlakis et al. (2007, 2008) studied the spatial and temporal variation of long surface and short wave radiation. A high correlation was found between the longwave radiation anomaly and the Niño3.4 index time series over the Niño3.4 region located in the central Pacific.

Moreover, Pinker et al. (2017) investigated the effect of El Niño and La Nina cycles on surface radiative fluxes and the correlations between their anomalies and a variety of El Niño indices. The maximum variance of anomalous incoming solar radiation is located just west of the dateline. It coincides with anomalous SST (Sea surface temperature) gradient in the traditional eastern Pacific El Niño Southern Oscillation (ENSO). However, we derive the Southern Oscillation Index highly correlated with solar irradiance and mean Northern Hemisphere temperature fluctuations reconstructions (Yan et al. 2011). In North America and the North Pacific, land and sea surface temperatures, precipitation, and storm tracks are determined mainly by atmospheric variability associated with the Pacific North American (PNA) pattern. The modern instrumental record indicates a recent trend towards a positive PNA phase, which has resulted in increased warming and snowpack loss in northwest North America (Liu et al. 2017). This study used fifteen climate mode indices to increase the diversity. Table 1 shows the list of predictor variables used in this study.

Table 1.

Description of global pool of 24 predictor variables used to design and evaluate hybrid CEEMDAN-CLSTM predictive model for the daily maximum UV Index forecasting

| MODIS-satellite | ||

| OTC | Ozone total column | DU |

| GH | Geopotential height (daytime) | – |

| AO | Aerosol optical depth 550 nm | – |

| AOD2 | Aerosol optical depth 342.5 nm | – |

| TCW | Total column water vapour (daytime) | kg/m2 |

| CF | Cloud fraction (daytime) | – |

| CP | Cloud pressure (daytime) | hPa |

| CCO | Combined cloud optical thickness (mean) | – |

| SILO (ground-based observations) | ||

| T.Max | Maximum temperature | °C |

| T.Min | Minimum temperature | °C |

| Rain | Rainfall | mm |

| Evap | Evaporation | mm |

| Radn | Radiation | MJ m−2 |

| VP | Vapour pressure | hPa |

| RHmaxT | Relative humidity at Temperature T.Max | % |

| RHminT | Relative Humidity at Temperature T.Min | % |

| FAO56 | Morton potential evapotranspiration overland | mm |

| SYNOPTIC-SCALE (climate mode indices) | ||

| Nino3.0 | Average SSTA over 150°–90° W and 5° N–5° S | NONE |

| Nino3.4 | Average SSTA over 170° E–120° W and 5° N–5° S | |

| Nino4.0 | Average SSTA over 160° E–150° W and 5° N–5° S | |

| Nino1 + 2 | Average SSTA over 90° W–80° W and 0°–10° S | |

| AON | Arctic oscillation | |

| AAO | Antarctic oscillation | |

| EPO | East Pacific oscillation | |

| GBI | Greenland blocking index (GBI) | |

| WPO | Western Pacific Oscillation (WPO.) | |

| PNA | Pacific North American Index | |

| NAO | North Atlantic oscillation | |

| SAM | Southern annular mode index | |

| SOI | Southern oscillation Index, as per Troup (1965) | |

| RMM1 | Real-time multivariate MJO indices 1 | |

| RMM2 | Real-time multivariate MJO indices 1 | |

Standalone models

Multilayer perceptron (MLP)

The MLP is a simple feedforward neural network with three layers and is commonly used as a reference model for comparison in machine learning application research (Ahmed and Lin 2021). The three layers are the input layer, a hidden layer with n-nodes, and the output layer. The input data are fed into the input layer, transformed into the hidden layer via a non-linear activation function (i.e., a logistic function). The target output is estimated, Eq. (1).

| 1 |

where w = the vector of weights, xi = the vector of inputs, b = the bias term; f = the non-linear sigmoidal activation function, i.e., .

The computed output is then compared with the measured output, and the corresponding loss, i.e., the mean squared error (MSE), is estimated. The model parameters (i.e., initial weights and bias) are updated using a backpropagation method until the minimum MSE is obtained. The model is trained for several iterations and tested for new data sets for prediction accuracy.

Support vector regression (SVR)

The SVR is constructed based on the statistical learning theory. In SVR, a kernel trick is applied that transfers the input features into the higher dimension to construct an optimal separating hyperplane as follows (Ji et al. 2017):

| 2 |

where w is the weight vector, b is the bias, and indicates the high-dimensional feature space. The coefficients w and b, which define the location of the hyperplane, can be estimated by minimizing the following regularized risk function:

| 3 |

where C is the regularization parameter, and are slack variables. Equation (7) can be solved in a dual form using the Lagrangian multipliers as follows:

| 4 |

where is the non-linear kernel function. In this present study, we used a radial basis function (RBF) as the kernel, which is represented as follows:

where is the bandwidth of the RBF.

Decision tree (DT)

A decision tree is a predictive model used for classification and regression analysis (Jiménez-Pérez and Mora-López 2016). As our data is continuous, we used it for the regression predictions. It is a simple tree-like structure that uses the input observations (i.e., x1, x2, x3, …, xn) to predict the target output (i.e., Y). The tree contains many nodes, and at each node, a test to one of the inputs (e.g., x1) is applied, and the outcome is estimated. The left/right sub-branch of the decision tree is selected based on the estimated outcome. After a specific node, the prediction is made, and the corresponding node is termed the leaf node. The prediction averages out all the training points for the leaf node. The model is trained using all input variables and corresponding loss; the mean squared error (MSE) is calculated to determine the best split of the data. The maximum features are set as the total input features during the partition.

Convolutional neural network (CNN)

The CNN model was developed initially for document recognition (Lecun et al. 1998) and used for predictions. Aside from the input and output layer, the CNN architecture has three hidden layers: convolutional, pooling, and fully connected. The convolutional layers abstract the local information from the data matrix using a kernel. The primary advantage of this layer is the implementation of weight sharing and spatial correlation among neighbors (Guo et al. 2016). The pooling layers are the subsampling layers that reduce the size of the data matrix. A fully connected layer is similar to the traditional neural network added at the final pooling layer after completing an alternate stack of convolutional and pooling layers.

Long short-term memory (LSTM)

An LSTM network is a unique form of recurrent neural network that stores sequence data for an extended period (Hochreiter and Schmidhuber 1997). The LSTM structure has three gates: an input gate, an output gate, and a forget gate. The model regulates all these three gates and determines how much data must be stored and transferred to the next steps from previous time steps. The input gate controls the input data at the current time as follows:

| 5 |

where = the input received from the ith node at time t; = the result of the hth node at time t − 1; = the cell state (i.e., memory) of the cth node at time t − 1. The symbol ‘w’ represents the weight between nodes, and the f is the activation function. The output gate transfers the current value from Eq. (5) to the output node, Eq. (6). Then, at the final stage, the current value is stored as the cell state in the forget gate, Eq. (7).

| 6 |

| 7 |

Gated recurrent unit (GRU) network

The GRU network is an LSTM variant having only two gates, such as reset and update gates (Dey and Salem 2017). The implementation of this network can be represented by the following equations, Eqs. (14–17):

| 8 |

| 9 |

| 10 |

| 11 |

where = the sigmoidal activation function; = the input value at time t; = the output value at time t-1; and the , ,, ,, are the weight matrices for each gate and cell state. The symbols r and z represent the reset and update gates, respectively. is the activation function, and the dot [.] represents the element-wise dot product.

The proposed hybrid model

CLSTM (or CNN-LSTM) hybrid model

In this paper, a deep learning method using optimization techniques is constructed on top of a forecast model framework. This study demonstrates how the CNN-LSTM (CLSTM) model, comprised of four-layered CNN, can be effectively used for UVI forecasting. The CNN is employed to integrate extracted features to forecast the target variable (i.e., UVI) with minimal training and testing error. Likewise, the CNN-GRU (CGRU) hybrid model is prepared for the same purpose.

Optimization techniques

Ant colony optimization

Ant colony optimization (ACO) algorithm model is the graphical representation of the real ants’ behavior. In general, ants live in colonies, and they forage for food as a whole by communicating with each other using a chemical substance, the pheromones (Mucherino et al. 2015). An isolated ant cannot move randomly; they always optimize their way towards the food deposit to their nests by interacting with previously laid pheromones marks on the way. The entire colony optimizes their routes with this communication process and establishes the shortest path to their nests from feeding sources (Silva et al. 2009). In ACO, the artificial ants find a solution by moving on the problem graph. They deposit synthetic hormone pheromones on the graph so that upcoming artificial ants can follow the pattern to build a better solution. The artificial ants calculate the model’s intrinsic mode functions (IMFs) anticipation by testing artificial pheromone values against the target data. The probability of finding the best IMFs increases for every ant because of changing pheromones value throughout the IMFs. The whole process is just like an ant’s finding the optimal option to reach the target. The probability of selecting the shortest distance between the target and the IMFs of the input variable can be mathematically expressed as follows (Prasad 2019a, b):

| 12 |

where denotes decision point, and express as short and long distance to the target at an instant is the total amount of pheromone . The probability of the longest path can be determined where . The testing update on the two branches is described as follows:

| 13 |

| 14 |

where and the value of represent the remainder in the model. denotes the number of ants in the node at a certain period is given by:

| 15 |

The ACO algorithm is the most used simulation optimization algorithm where myriad artificial ants work in a simulated mathematical space to search for optimal solutions for a given problem. The ant colony algorithm is dominant in multi-objective optimization as it follows the natural distribution and self-evolved simple process. However, with the increase of network information, the ACO algorithm faces various constraints such as local optimization and feature redundancy for selecting optimal pathways (Peng et al. 2018).

Differential evaluation optimization

The differential evolution (DEV) algorithm is renowned for its simplicity and powerful stochastic direct search method. Besides, DEV has proven an efficient and effective method for searching global optimal solutions for the multimodal objective function, utilizing N-D-dimensional parameter vectors (Seme and Štumberger 2011). It does not require a specific starting point, and it operates effectively on a population candidate solution. The constant value N denotes the population; in every module, a new generation solution is determined and compared with the previous generation of the population member. It is a repetition process and runs until it reaches the maximum number of generations (i.e., Gmax). The defines the generation number of populations which can be written in mathematical proportional order. If the initial population vector is , then and

The initial population is generated using random within given boundaries, which can be written in the following equation:

| 16 |

where [0, 1] is the uniformly distributed number at the interval [0,1], which is chosen a new for each . represents the boundary condition. In contrast, and represents the upper and lower limit of the boundary vector parameters. For every generation, a new random vector is randomly created, selecting vectors from the previous generation from the following manner:

| 17 |

where is the number of optimizations, is the candidate vector, and control parameter. is the randomly selected index that ensures the difference between the candidate vector and the generation vector. The population for new the new generation will be assembled from the vector of the previous generation and the candidate vectors the following equation can describe selection:

| 18 |

The process repeats with the following generation population number until it satisfies the pre-defined objective function.

Particle swarm optimization

The particle swarm optimization (PSO) method was developed for continuous non-linear functions optimization having roots in artificial life and evolutionary computation (Kennedy and Eberhart 1995). The method was constructed using a simple concept that tracks each particle’s current position in the swarm by implementing a velocity vector for particle’s previous to the new position. However, the movement of the particles in the swarm depends on the individuals’ external behavior. Therefore, the process is very speculative, uses each particle’s memory to calculate a new position and gain knowledge by the swarm as a whole. Nearest neighbor velocity matching and craziness, eliminating ancillary variables, and incorporating multidimensional search and acceleration by distance were the precursor of PSO algorithm simulation (Eberhart and Shi 2001). Each particle in the simulation coordinates in the n-dimensional space calculation and responds to the two quality factors called ‘gbest’ and ‘pbest’. gbest represents the best location and value of particle in the population globally, and pbest represents the best-fitted solution achieved by the particle so far in the population swarm. Thus, at each step in the swarm, the PSO concept stands, each particle changing its acceleration towards its two best quality factor locations. The acceleration process begins by separating random numbers and presenting the optimal ‘gbest’ and ‘pbest’ locations. The basic steps for the PSO algorithm are given below, according to (Eberhart and Shi 2001):

The process starts with initializing sample random particles with random velocities and locations on n-dimensions in the design space.

The velocity vector for each particle in the swarm is carried out in the next step as the initial velocity vector value.

Plot the velocity vector value and compare particle fitness evaluation with particle’s pbest. If the new value is better than the initial value, update the new velocity vector value as pbest and previous location equal to the current location in the design space.

This step compares the fitness evaluation with the particles’ overall previous global best. If the current value is better than gbest, update it to a new gbest value and location.

- The velocity and position of the particle can be changed according to the equations:

19 20 Repeat step 2 and continue until the sufficiently fitted value and position are achieved.

Particle swarm optimization is well known for its simple operative steps and performance for optimizing a wide range of functions. PSO algorithm can successfully solve the design problem with many local minima and deal with regular and irregular design space problems locally and globally. Although PSO can solve problems more accurately than other traditional gradient-based optimizers, the computational cost is higher in PSO (Ventor and Sobieszczanski-Sobieski 2003).

Genetic algorithm

The genetic algorithm (GA) is a heuristic search method based on natural selection and evolution principles and concepts. This method was introduced by John Holland in the mid-seventies, inspired by Darwin’s theory of descent with modification by natural selection. To determine the optimal set of parameters, GA mimics the reproduction behavior of the biological populations in nature. It has been proven effective for solving cutting-edge optimization problems in the selection process. It can also handle regular and irregular variables, non-traditional data partitioning, and non-linear objective functions without requiring gradient information (Hassan et al. 2004). The basic steps for the PSO algorithm are given below:

-

The determination of the maximum outcomes from an objective function, the genetic algorithm uses the following function:

21 where is the number of decision variables with a discretization step . The initial boundary conditions determined at the beginning of the simulation. is the determines the physical parameters performances in the experiment. These decision variables are represented by a sequence of binary digits (.

The decisions variables are given within initial boundary conditions , where refers to the value of GENES. is the bit length of each GENE, which is the first integer where . The total number of bits in each DNA refers . The algorithm process starts with a random selection of objectives. After evaluation of each objective in the fitness function , and rank them from best to worst.

The genetic similarity determines the selection progress indicator. These random individual objectives with rank are transferred to the next generation. The remaining individuals participate in the steps of selection, crossover, and mutation. The individual objective parent selection process can happen several times, and this can be achieved by many different schemes, such as the roulette-wheel ranked method. For any pair of objective parents’ selection, crossover, and mutation process of next generation is defined. After that, the fitness of all individuals scheduled for the next generation is evaluated. This process repeats from generation to generation until a termination criterion is met.

GA methodology is quite like another stochastic searching algorithm PSO. Both methods begin their search from a randomly generated population of designs that evolve over successive generations. They do not require any specific starting point for the simulation. The first operator is the “selection” procedure similar to the “Survival for the Fittest” principle. The second operator is the “Crossover” operator, mimicking mating in a biological population. Both methods use the same convergence criteria for selecting the optimal solution in the problem space (Hassan et al. 2004). However, GA differs in two ways from the most traditional optimization methods. First, GA does not operate directly on the design parameter vector, but a symbolic parameter known as a chromosome. Second, it optimizes the whole design chromosomes at once, unlike other optimization methods single chromosome at a time (Weile and Michielssen 1997).

Complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN)

The complete ensemble empirical mode decomposition with adaptive noise (CEEMDAN) decomposition approach is initiated by discretizing the n-length predictors of any model χ(t) into IMFs (intrinsic model functions) and residues to conform with tolerability. However, to ensure no information leakage in the IMFs and residues, the decomposition is performed separately by training and testing subsets. The actual IMF is produced by taking the empirical mode decomposition (EMD)-grounded IMFs across a trial and combining white noise to model the predictor-target variables. The CEEMDAN is used in machinery, electricity, and medicine such as impact signal denoising, daily peak load forecasting, health degradation monitoring for rolling bearings, friction signal denoising combined with mutual information (Li et al. 2019).

The CEEMDAN process is as follows:

Step 1 The decomposition of p-realizations of using EMD to develop their first intrinsic approach, as explained according to the equation:

| 23 |

Step 2 Putting k = 1, the 1st residue is computed following Eq. (1).

| 24 |

Step 3 Putting k = 2, the 2nd residual is obtained as

| 25 |

Step 4 Setting k = 2… K calculates the kth residue as.

| 26 |

Step 5 Now, we decompose the realizations until their first model of EMD is reached; Here the (k + 1) is

| 27 |

Step 6 Now, the k value is incremented, and steps 4–6 are repeated. Consequently, the final residue is achieved:

| 28 |

Here, K is the limiting case (i.e., the highest number of modes). To comply with the replicability of the earliest input, the following is performed for the CEEMDAN approach.

| 29 |

Model implementation procedure

It is crucial to optimize the objective model’s architecture to incorporate the relationship between predictors and model. A multi-phase CNN-GRU and GRU model is built using Python-based deep learning packages such as TensorFlow and Keras. A total of nine statistical metrics was used to investigate the forecasting robustness of the models that have been integrated. An Intel i7 powered the model with a 3.6 GHz processor and 16 GB of memory. Deep learning libraries like Keras (Brownlee 2016; Ketkar 2017) and TensorFlow (Abadi et al. 2016) were used to demonstrate algorithms for the proposed models. In addition, packages like matplotlib (Barrett et al. 2005) and seaborn (Waskom 2021) were used for visualization.

The determination of the model’s valid predictors does not have any precise formula. However, the literature suggests three methods, i.e., trial-and-error, the autocorrelation function (ACF), partial autocorrelation function (PACF), and the cross-correlation function (CCF) approaches, for selecting lagged UVI memories and predictors to make an optimal model. In this study, the PACF was used to determine significant antecedent behavior in terms of the lag of UVI (Tiwari and Adamowski 2013; Tiwari and Chatterjee 2010). Figures 3f and 4b demonstrated the PACF for UVI time series showing the antecedent behavior in terms of the lag of UVI and decomposed UVI (i.e., IMFn) where antecedent daily delays are apparent. Generally, the CCF selects the input signal pattern based on the predictors’ antecedent lag (Adamowski et al. 2012). The CCF determined the predictors’ statistical similarity to the target variable (Figs. 3a–e, 4a). A set of significant input combinations was selected after evaluating each predictor’s rcross with UVI. The figure shows that the highest correlation between predictor variables and UVI was found for all stations at lag zero (i.e., rcross = 0.22 – 0.75). AOD and GBI demonstrated significant rcross from 0.65 to 0.80 and 0.68 to 0.75, respectively. Some predictors with insignificant lags such as AO, CT, and OTC were also considered to increase the predictors’ diversity. The CCF with UVI with predictors significantly varied for all other stations. However, selecting lags from the cross-correlation function is identical to the objective stations.

Fig. 3.

a–e Correlogram showing the covariance between the objective variable (UVI) and the predictor variables in terms of the Cross-correlation coefficient (rcross) and f Partial autocorrelation function (PACF) plot of the UVI time series exploring the antecedent behavior in terms of the lag of UVI every day

Fig. 4.

a Correlogram showing the covariance between the objective variable (UVI) and the CEEMDAN decomposed T.Max (IMF1T.Max to ResidualsT.Max) in terms of the Cross-correlation coefficient (rcross) and b Partial autocorrelation function (PACF) plot of the CEEMDAN decomposed UVI time series exploring the antecedent behavior in terms of the lag of UVI every day

As mentioned, the CEEMDAN method was used to decompose the data sets. The daily time series of UVI data and predictor variables were decomposed into respective daily IMFs and a residual component using CEEMDAN procedures. The example of the IMFs and the residual component of the respective CEEMDAN is shown in Fig. 5. PACF was applied to the daily IMFs and residual component time series generated above. An individual input matrix was created for each IMF, and the residual component was made up based on the significant lagged memory with that of IMF of target UVI. These separate input matrices were used to forecast future IMFs and residual components. Next, the anticipated IMFs and residuals were combined to produce daily forecasts of UVI values. Note that the CEEMDAN transformations are completely self-adaptive and data-dependent multi-resolution techniques. As such, the number of IMFs and the residual component generated are contingent on the nature of the data.

Fig. 5.

An example time-series showing data features in IMFs and residuals produced by the CEEMDAN transformation of daily maximum UV Index for the case of Perth study site

The predictor variables were used to forecast the UVI were normalized between 0 and 1 to minimize the scaling effect of different variables as follows:

| 30 |

In Eq. (30), is the respective predictors, is the minimum value for the predictors, is the maximum value of the data and is the normalized value of the data. After normalizing the predictor variables, the data sets were partitioned. To build predictive models, input data must be divided into training, testing, and validation sets. The training set is used to train the model, then used to learn more about the data over time. This validation technique tries to provide information for modifying model hyperparameters. Separate from training sets, validation sets are used to examine and validate models. After training a model, the test set is frequently used to evaluate it. This study uses the first 70% of the data sets for training, the middle 15% were used for testing, and the remaining 15% of the data sets were considered validation data.

The CLSTM model was followed by developing a hybrid LSTM model with 3-layered CNN and 4-layered LSTM, as illustrated in Fig. 2. The traditional antecedent lagged matrix of the daily predictors’ variables was applied using the conventional models. The prior application of the optimization algorithm was made before using CCF and PACF and before significant predictors were removed from the model. The theoretical details of CNN and LSTM are already given in Sect. 2. Based on a trial-and-error approach, the hyper-parameters (as stated in Table 4 located in the “Appendix”) for all respective models. The computational complexity cost associated with the learning procedure of ML models is a significant concern; this cost is inversely proportional to the size of the dataset used for training and the algorithm used for hyperparameter selection, and it is directly related to the dataset size used for training (Ghimire et al. 2019). This time-consuming process requires a grid search for the optimal parameters for each model. For example, the search for each model takes approximately 10–11 h. After finding the optimal parameters, the computational time for training and testing becomes significantly less (< 10 min), as shown in Fig. 2. Using a pooling layer to control overfitting issues in the training phase, the CLSTM hybrid predictive model may be made smaller and more controlled, reducing the number of parameters and computation required the network. All the flattening layer outputs are routed to the respective inputs of the LSTM recurrent layer, which is routed to the final output of the flattening layer. Table 2 shows the selected predictors using four optimization techniques associated with the UVI, and the optimal parameters of four feature selection algorithms are tabulated in Table 5 from the “Appendix”.

Table 4.

The optimum hyper-parameter of the CLSTM model

| Model | Model hyper-parameter Names | Search space for optimal hyper-parameters |

|---|---|---|

| Optimally selected hyper-parameters | ||

| CLSTM | Convolution Layer 1 (C1) | 40 |

| C1- Activation function | Relu | |

| C1-Pooling Size | 1 | |

| Convolution Layer 2 (C2) | 20 | |

| C2- Activation function | Tanh | |

| C2-Pooling Size | 1 | |

| Convolution Layer 3 (C3) | 50 | |

| C3- Activation function | Relu | |

| C3-Pooling Size | 1 | |

| LSTM Layer 1 (L1) | 100 | |

| L1- Activation function | Relu | |

| LSTM Layer 2 (L2) | 80 | |

| L2- Activation function | ReLU | |

| LSTM Layer 3 (L4) | 100 | |

| L3- Activation function | Relu | |

| LSTM Layer 4 (L4) | 50 | |

| L4- Activation function | ReLU | |

| Drop-out rate | 0.2 | |

| Optimiser | SDG | |

| Learning Rate | 0.001 | |

| Padding | Same | |

| Batch Size | 5 | |

| Epochs | 1000 | |

Table 2.

List of selected input variables prior applying in the proposed model using four optimization techniques (i.e., ACO, DEV, GA and PSO)

Table 5.

The optimum characteristics of four feature selection algorithms (i.e., GA, ACO, PSO, and DEV)

| Characteristics | Optimal value |

|---|---|

| Genetic algorithm (GA) | |

| Number of chromosomes | 10 |

| Maximum number of generations | 100 |

| Crossover rate | 0.8 |

| Mutation rates | 0.3 |

| Ant colony optimization (ACO) | |

| Number of ants | 10 |

| Maximum number of iterations | 100 |

| Coefficient control tau | 1 |

| Coefficient control eta | 2 |

| Initial tau | 1 |

| Initial beta | 1 |

| Pheromone | 0.2 |

| Coefficient | 0.5 |

| Differential evolution (DEV) | |

| Number of vectors | 14 |

| Maximum number of generations | 100 |

| Crossover rate | 0.9 |

| Particle Swarm Optimization (PSO) | |

| Number of particles | 10 |

| Maximum number of iterations | 150 |

| Cognitive factor | 2 |

| Social factor | 2 |

| Maximum velocity | 6 |

| Maximum bound on inertia weight | 0.9 |

| Minimum bound on inertia weight | 0.4 |

Model performance assessment

In this study, the effectiveness of the deep learning hybrid model was assessed using a variety of performance evaluation criteria, e.g., Pearson’s Correlation Coefficient (r), root mean square error (RMSE), Nash–Sutcliffe efficiency (NSE) (Nash and Sutcliffe 1970), and mean absolute error (MAE). The relative RMSE (denoted as RRMSE) and relative MAE (denoted as RMAE) were used to explore the geographic differences between the study stations.

The exactness of the relationship between predicted and observed values were used to evaluate a predictive model's effectiveness. When the error distribution in the tested data is Gaussian, the root means square error (RMSE) is a more appropriate measure of model performance than the mean absolute error (MAE) (Chai and Draxler 2014), but for an improved model evaluation, the Legates-McCabe’s (LM) Index is used as a more sophisticated and compelling measure (Legates and McCabe 2013; Willmott et al. 2012). Mathematically, the metrics are as follows:

- Correlation coefficient (r):

31 - Mean absolute error (MAE):

32 - Root mean squared error (RMSE):

33 - Nash–Sutcliffe Efficiency (NS):

34 - Legates–McCabe’s Index (LM):

35 - Relative Root Mean Squared Error (RRMSE, %):

36 - Relative Mean Absolute Error (RMAE, %):

37

In Eqs. (31–37), and represents the observed and forecasted values for ith test value; and refer to their averages, accordingly, and N is defined as the number of observations, while the CV stands for the coefficient of variation.

Results

This section describes results obtained from the proposed hybrid deep learning model (i.e., CEEMDAN-CLSTM) and other hybrid models (i.e., CEEMDAN-CGRU, CEEMDAN-LSTM, CEEMDAN-GRU, CEEMDAN-DT, CGRU, and CLSTM), and the standalone LSTM, GRU, DT, MLP, and SVR models. Four feature selection algorithms (i.e., ACO, DEV, GA, and PSO) were incorporated to obtain the optimum features in model building. Seven statistical metrics from Eqs. (31)–(37) were used to analyze the models in the testing dataset and visual plots to justify the forecasted results’ justification.

The hybrid deep learning model (i.e., CEEMDAN-CLSTM) demonstrated high r and NS values and low RMSE and MAE compared to their standalone models (Table 3). The best overall performance was recorded using the CEEMDAN-CLSTM model with the Genetic Algorithm with the highest correlation (i.e., r = 0.996), the highest data variance explained (i.e., NS = 0.997), and the lowest errors (i.e., RMSE = 0.162 and MAE = 0.119). The performance was followed by the same model with PSO (i.e., r ≈ 0.996; NS ≈ 0.992; RMSE ≈ 0.216; MAE ≈ 0.163) and ACO (i.e., r ≈ 0.996; NS ≈ 0.993; RMSE ≈ 0.220; MAE ≈ 0.165). The single deep learning models (i.e., LSTM and GRU) performed better than the single machine learning models (i.e., DT, SVR, and MLP). Moreover, the hybrid deep learning models without a CNN (i.e., CEEMDAN-GRU and CEEMDAN-GRU) also demonstrated higher forecasting accuracy (i.e., r = 0.973 – 0.993; RMSE = 0.387 – 0.796) in comparison with standalone deep learning models (i.e., r ≈ 0.959 – 0.981; RMSE ≈ 0.690 – 0.986). The following models’ performance is then predicted by the CNN-GRU, CEEMDAN-GRU, and GRU models in that order.

Table 3.

Evaluation of hybrid CEEMDAN-CLSTM vs. benchmark (CNN-GRU, CNN-LSTM, CEEMDAN-GRU, CEEMDAN-LSTM, GRU and LSTM) models for Perth observation sites

The correlation coefficient (r), root mean square error (RMSE), mean absolute error (MAE) and Nash–Sutcliffe coefficient (NS) are computed between forecasted and observed UVI for 7 Day ahead periods in testing phase. The optimal model is boldfaced (blue)

RRMSE and LM for all tested models were used to assess the robustness of the proposed hybrid models and for comparisons. The magnitude of RRMSE (%) and LM for the objective model (CEEMDAN-CLSTM) shown in Fig. 6 indicates that the proposed hybrid model performed significantly better than other benchmark models. The RRMSE and LM values ranged between 2 and 3.5% and between 0.982 and 0.991, respectively. The performance indices (i.e., RRMSE and LM) using four optimization algorithms were higher for the CEEMDAN-CGRU model. Overall, the CEEMDAN-CLSTM model with the GA optimization methods provided the best performance (i.e., RRMSE = ~ 2.0%; LM = 0.991), indicating its high efficiency in forecasting the future UV-Index a higher degree of accuracy.

Fig. 6.

Comparison of the forecasting skill for all proposed models in terms of the relative error: RRMSE (%) and Legate McCabe Index (LM) within the testing period

A precise comparison of forecasted and observed UVI can also be seen by examining the scatterplot of forecasted (UVIfor) and observed (UVIobs) UVI for four optimization algorithms (i.e., ACO, PSO, DEV, and GA) (Fig. 7). Here, scatter plots showed the coefficient of determination (r2) and a least-square fitting line, along with the equation for UVI and an observed UVI close to the forecasted UVI. As demonstrated in Fig. 7, it also appears that the proposed hybrid model performed better when compared with other applied models. However, among the four optimization techniques applied, the hybrid deep learning model (i.e., CEEMDAN-CLSTM) optimized with the GA outperformed the other models in forecasting the UVI. The hybrid CEEMDAN-CLSTM model calculated magnitudes from the GA, which came the closest to unity, with an m|r2 of 0.976|0.995 in pairs. The performance is followed by ACO and DEV algorithms with a potential pair (ACO: 0.975|0.995; DEV: 0.966|0.994). The outliers (i.e., the extremes) are closer to the fitted line, while the y-intercept (i.e., the starting point) is approximately 0.05 units away from zero (0) using the GA method. The other models had outliers, resulting in their intercepts deviating from the ideal value. In conclusion, the CEEMDAN-CLSTM model performed the best for the GA.

Fig. 7.

Scatter plot of forecasted with observed UVI (UVI) of Perth station CEEMDAN-CLSTM model. A least square regression line and coefficient of determination (R2) with a linear fit equation are shown in each sub-panel

The proposed hybrid deep learning model (i.e., CEEMDAN-CLSTM) was further assessed employing the ECDFs of absolute forecast error (|FE|) (Fig. 8). Total 95% forecasted values using the CEEMDAN-CLSTM model with GA demonstrated a small error ranging between 0.01 and 0.299, with a substantially larger error for the CCGRU model (i.e., 0.477), followed by the CLSTM model (i.e., 0.626) and CGRU (i.e., 1.104). For the other optimization algorithms, nearly the same level of performance was observed. Predictions ranging between the 95th and 98th percentile were preferred over objective models, which performed best in the current forecast. However, Fig. 9 showed the effect of applying CEEMDAN as a feature extraction method on the percent change in RMAE values within the testing phase of UVI forecast incrementally. The contribution of the data decomposition method (i.e., CEEMDAN) was significant in the model implementation. The increment of RMAE in percent using GA was found between 17 to 63%, whereas the CLSTM showed the highest percentage of decrement (i.e., 63%). Moreover, the PSO optimized model showed that the RMAE (%) values with the deep learning model appeared to decrease by ~ 2 to 60%, and the lowest decreasing RMAE was found for the ACO algorithm with a reduction of ~ 3% to 36%. However, the CLSTM model using four optimization methods showed the highest improvement among all the deep learning approaches that reduced the RMSE from 36 to 63%. It is worth mentioning that the percent increase in RMAE was ~ 83% for the DEV algorithm using the SVR method. Overall, the CEEMDAN, as a data decomposition algorithm for UVI forecasting with four optimization algorithms, showed significant improvement over the testing phase.

Fig. 8.

Empirical cumulative distribution function (CDF) in forecasting error |FE| for CEEMDAN-CGRU, CEEMDAN-CLSTM, CNN-GRU, and CNN-LSTM model, shown for the 95 percentile on ECDF

Fig. 9.

Effect on percent change (%) of RMAE using CEEMDAN as a feature extraction approach in forecasting UVI at Perth station using Genetic Algorithm (GA), Ant Colony Optimization (ACO), Particle Swarm Optimization (PSO), and Differential Evolution (DEV)

After additional analysis, the forecasted-to-observed UVI and absolute forecasting errors are displayed in Fig. 10. The absolute forecasted error has a maximum dispersion of (|FE| =|UVIfor – UVIobs|). The box plot demonstrated the data dispersal of the observed and forecasted UVI from the proposed deep learning approaches and other comparing models. Figure 10 provides a clear visualization of the data concerning quartiles distinctly outliers. The lower end of the plot lies between the lower quartile (25th percentile) and upper quartile (75th percentile). It is evident that the median of the forecasted and the observed UVI for the CEEMDAN-CLSTM model with the GA optimization. Moreover, the DEV-based CEEMDAN-CLSTM model showed identical forecasting to the GA-based CEEMDAN-CLSTM model with a slight variation. A more in-depth inspection of the absolute forecasted error (|FE|) from the hybrid CEEMDAN-CLSTM model for two optimizations (i.e., GA and DEV) further strengthens the suitability of the hybrid CLSTM approach in forecasting the UVI of Perth station of Australia with the narrowest distribution in comparison with other models. A significant percentage (98%) of the |FE| in the first error brackets (0 <|FE|< 0.15) was observed for the GA-based CEEMDAN-CLSTM model, while for the DEV-based model, the percentage is 95%.

Fig. 10.

Evaluation of the performance of the proposed hybrid deep learning, CEEMDAN-CLSTM model with the comparative benchmark models based on the absolute forecasted error |FE| using four optimization techniques

With the help of a time series plot, we can better understand forecasting ability and refine the proposed model, taking it from standalone to hybrid model. The time series plot of forecasted and observed UVI using CEEMDAN-CLSTM optimized by four optimization methods is depicted in Fig. 11. The results showed that the proposed GA-based CEEMDAN-CLSTM model is close to the observed UVI, indicating that the model has high predictive accuracy. The application of the GA in the model optimization resulted in a significant improvement in forecasted UVI. For other algorithms that use the CEEMDAN-CLSTM model, it is discovered that the forecasted UVI is accurate when compared to the other optimization methods.

Fig. 11.

Time series of daily maximum UV index (UVI) for observed UVI and forecasted UVI for the objective model, CEEMDAN-CLSTM using four optimization approaches

Finally, Fig. 12 presents a comprehensive interpretation by illustrating the absolute forecasting error frequency distributions (|FE|) using all GA-based models for Perth stations of Australia. It is apparent from Fig. 12 that the CEEMDAN-CLSTM model provided significantly improved distributions with the maximum 98% forecasting error (|FE|) within the first error brackets (0 <|FE|< 0.10). It is also noteworthy that the CEEMDAN-CGRU model showed a higher percentage of |FE| between 0 and 0.25 of all forecasting yielded a considerably small error and the remaining 15% of simultaneously produced forecasting error between 0.25 and 1.0. The highest forecasted error was found for machine learning models when all models’ |FE| value (i.e., SVR, MLP, and DT) was considered.

Fig. 12.

Illustration of the frequency of absolute value of estimation errors (|EE|) of the proposed hybrid deep learning CEEMDAN-CLSTM model and comparing models using Genetic Algorithm (GA)

Discussion

The establishment of robust predictive modelling of the UV index and physical interpretation is critical for various practical applications, such as helping policymakers in their daily health impact assessment. These systems emulate how a human expert would solve a complex forecasting problem by reasoning through a set of UVI-related predictors rather than through conventional or procedural methods. These methods warrant continuous irradiance measurement or radiative transfer models, which are tedious (as discussed in the introduction) and often inaccurate. This study demonstrated the efficacy of hybrid deep learning methods in forecasting UVI on a near real-time horizon. The study site was in Perth, Western Australia, Australia, where skin cancer is significantly high. An accurate forecasting system in this region is therefore essential.

To function effectively, alert systems must generate accurate irradiance forecasts. Still, UVI is generally determined by many factors (i.e., the solar zenith, altitude, cloud fraction, aerosol and optical properties, albedo, and vertical ozone profile) (Deo et al. 2017). The study extensively utilized four optimization techniques (i.e., GA, ACO, DEV, and PSO) to have optimum predictors used in UVI forecasting as tabulated in Table 2. The incorporated predictors from three distinct data sets (i.e., SILO, MODIS, and CI) were optimized. The optimization techniques selected a diversified list of variables except for RMM1 and RMM2, as four algorithms selected them both. The predictors like ozone total column, AOD, and cloud fraction were significant using the GA algorithm. In most cases, the hydro-meteorological variables were insignificant by all four algorithms that agree with UV concentration’s general concept. The objective algorithm (i.e., GA) selected SOI, GBI, AAO, Nino4, Nino12, RMM1, and RMM2 as potential predictors as well. The ground-based measurements and modelling studies are essential (Alados et al. 2004, 2007) but are challenging to implement in practice. Furthermore, secondary factors affecting UV levels (i.e., clouds or aerosols) are rarely known with sufficient precision. Considering the practical feasibility, an algorithm that is data-efficient, simple to develop, flexible, and user-friendly should be considered a viable alternative for information (Igoe et al. 2013a, b; Parisi et al. 2016). Therefore, our developed forecasting model will play a vital role in adopting prompt measures without difficulties.

This study shows significant improvement from the previous studies in forecasting UVI in Australia. Deo et al. (2017) applied machine learning techniques to predict the UVI in Australia, demonstrating a substantial performance. However, this study found improved forecasting in a 7-day ahead time horizon by integrating three distinct types of datasets. The study can be further extended to other parts of Australia and around the world to develop an early warning framework of solar radiation UV index for better management and mitigation of UV-related health hazards.

The proposed hybrid deep learning network (i.e., CEEMDAN-CLSTM) for predicting surface UV radiation also demonstrated low errors in forecasting, i.e., showing around 10% error for the next-day forecast and 13–16% error for 7-day up to the 4-week forecast. This further affirms that the quantitative UV forecast is appropriate for heliotherapy applications, which tolerates up to 10–25% error levels. The CEEMDAN-CLSTM’s performance is competitive on UV data from multiple regions. Thus, the CEEMDAN-CLTSM model can be adapted to forecast other beneficial UV action spectra, such as vitamin D production and erythemal UV index. A fundamental limitation of machine learning is its overfitting tendency on the training dataset and often does not generalize well to other datasets from different distributions. In the context of UV forecasting, this dictates that the model must be retrained with data from the weather station to be used for that geographic region. In a geographical region with the highly variable weather condition, such as London in 2019, artificial neural network models’ performance dropped significantly (Raksasat et al. 2021). This capability of the model to extract seasonal patterns may also explain why the addition of ozone, cloud fraction, and AOD information significantly improved the performance of CEEMDAN-CLSTM, particularly when the GA algorithm was applied.

Conclusion

This study conducted a daily UV Index forecasting at Perth station using aggregated significant antecedent satellite-driven variables associated with UV irradiance. The forecasting was made using a novel hybrid deep learning model (i.e., CEEMDAN-CLSTM) and compared with other benchmark models such as LSTM, GRU, DT, SVR, etc. Four optimization methods were employed to extract the crucial features of the response variable (i.e., the UVI). After applying the proposed model and benchmarked models, the model’s merits were evaluated using different statistical metrics, graphical plots, and relevant discussions. The key findings are summarized as follows:

The CEEMDAN-CLSTM hybrid model demonstrated excellent forecasting ability compared to its counterpart models.

The GA optimization algorithm is appeared to be an attractive option for selecting mechanistically meaningful features of the dependable variable compared to the other three optimization techniques.

The performance metrics showed that the GA and CEEMDAN-optimized models had better performance and higher efficiency metrics (i.e., r, NS, and LM) and lower error metrics (i.e., MSE and RMSE).

However, in UVI forecasting, the standalone models’ (i.e., LSTM, GRU, DT, and SVR) performances were poor compared to the proposed hybrid model.

Adapted to an Australian climate in the sub-tropics during peak summer-time conditions, applying a CLSTM model to forecast the UVI is a novel deep learning approach. The forecasts derived from our data were within one UVI unit of the actual measured values indicating the remarkable forecasting capability. Therefore, this data-driven model would be of tremendous help for the decision-makers to promptly protect public health without delay. It has the tremendous potential to be adopted by a more significant segment of the community, particularly children and the elderly facing a greater risk of developing skin cancer (i.e., melanoma) in the Australian region and worldwide.

Acknowledgements

We would like to thank the Australian Radiation Protection and Nuclear Safety Agency for providing the data. This study did not receive any external funding.

Abbreviations

- ACO

Ant colony optimization

- ACF

Autocorrelation function

- ANFIS

Adaptive neuro-fuzzy inference system

- ANN

Artificial neural network

- AO

Arctic oscillation

- ARPANSA

Australian radiation protection and nuclear safety agency

- BCC

Basal cell carcinoma

- BOM

Bureau of meteorology

- CEEMDAN

Complete ensemble empirical mode decomposition with adaptive noise

- CEEMDAN-CLSTM

Hybrid model integrating the CEEMDAN and CNN algorithm with LSTM

- CEEMDAN-CGRU

Hybrid model integrating the CEEMDAN and CNN algorithm with GRU

- CEEMDAN-GRU

Hybrid model integrating the CEEMDAN algorithm with GRU

- CNN-LSTM (or CLSTM)

Hybrid model integrating the CNN algorithm with LSTM

- CNN-GRU (or CGRU)

Hybrid model integrating the CNN algorithm with GRU

- CEEMDAN

Complete ensemble empirical mode decomposition with Adaptive Noise

- CEEMDAN-DT

Hybrid model integrating the CEEMDAN algorithm with DT

- CEEMDAN-MLP

Hybrid model integrating the CEEMDAN algorithm with MLP

- CEEMDAN-SVR

Hybrid model integrating the CEEMDAN algorithm with SVR

- CNN

Convolutional neural network

- COVID-19

Coronavirus disease 2019

- CCF

Cross-correlation function

- EEMD

Ensemble empirical mode decomposition

- EMD

Empirical mode decomposition

- DEV

Differential evolution

- DL

Deep learning

- DT

Decision tree

- DWT

Discrete wavelet transformation

- ECDF

Empirical cumulative distribution function

- ELM

Extreme learning machine

- EMI

El-Nino southern oscillation Modoki indices

- ENSO

El Niño Southern Oscillation

- FE

Forecasting error

- GA

Genetic algorithm

- GB

Giga bite

- GIOVANNI

Geospatial online interactive visualization and analysis infrastructure

- GRU

Gated recurrent unit

- GLDAS

Global land data assimilation system

- GSFC

Goddard space flight centre

- IMF

Intrinsic mode functions

- LM

Legates-McCabe’s index

- LSTM

Long- short term memory

- MAE

Mean absolute error

- MAPE

Mean absolute percentage error

- MARS

Multivariate adaptive regression splines

- MDB

Murray–Darling basin

- MJO

Madden–Julian oscillation

- ML

Machine learning

- MLP

Multi-layer perceptron

- MODWT

Maximum overlap discrete wavelet transformation

- MODIS

Moderate resolution imaging spectroradiometer

- MRA

Multi-resolution analysis

- MSE

Mean squared error

- NAO

North Atlantic oscillation

- NASA

National aeronautics and space administration

- NCEP

National centers for environmental prediction

- NO

Nitrogen oxide

- NOAA

National oceanic and atmospheric administration

- NMSC

Non-melanoma skin cancer

- NSE

Nash–Sutcliffe efficiency

- PACF

Partial autocorrelation function

- PDO

Pacific decadal oscillation

- PNA

Pacific North American

- PSO

Particle swarm optimization

- r

Correlation coefficient

- RMM

Real-time multivariate MJO series

- GA

Genetic algorithm

- BRF

Boruta random forest

- RMSE

Root-mean-square-error

- RNN

Recurrent neural network

- RRMSE

Relative root-mean-square error

- SAM

Southern annular mode

- SARS-CoV-2

Severe acute respiratory syndrome Coronavirus 2

- SCC

Squamous cell carcinoma

- SILO

Scientific information for landowners

- SOI

Southern oscillation index

- SST

Sea surface temperature

- SVR

Support vector regression

- US

United States

- UV

Ultraviolet

- UVI

Ultraviolet index

- WHO

World Health Organization

- WI

Willmott’s index of agreement

Appendix

Authors’ contribution

AAMA: Writing—original draft, Conceptualization, Methodology, Software, Model development, visualization, and application. MHA: Conceptualization, Writing—draft, review and editing. SKS: Writing—review and editing. OA: Data Collection, Writing—review and editing, AS: Data Collection, Writing—review and editing.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Footnotes

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Contributor Information

A. A. Masrur Ahmed, Email: abulabrarmasrur.ahmed@usq.edu.au, Email: masrur@outlook.com.au.

Mohammad Hafez Ahmed, Email: mha0015@mix.wvu.edu.

Sanjoy Kanti Saha, Email: sanjoyks@ntnu.no.

Oli Ahmed, Email: oliahmed3034@gmail.com.

Ambica Sutradhar, Email: ambicasutradhar@gmail.com.

References

- Abadi M, Barham P, Chen J, Chen Z, Davis A, Dean J, Devin M, Ghemawat S, Irving G, Isard M, Kudlur M, Levenberg J, Monga R, Moore S, Murray DG, Steiner B, Tucker P, Vasudevan V, Warden P, Wicke M, Yu Y, Zheng X (2016) TensorFlow: a system for large-scale machine learning. Presented at the 12th {USENIX} symposium on operating systems design and implementation ({OSDI} 16), pp 265–283

- Adamowski J, Chan HF, Prasher SO, Ozga-Zielinski B, Sliusarieva A. Comparison of multiple linear and non-linear regression, autoregressive integrated moving average, artificial neural network, and wavelet artificial neural network methods for urban water demand forecasting in Montreal, Canada. Water Resour Res. 2012 doi: 10.1029/2010WR009945. [DOI] [Google Scholar]

- Ahmed MH, Lin L-S. Dissolved oxygen concentration predictions for running waters with different land use land cover using a quantile regression forest machine learning technique. J Hydrol. 2021;597:126213. doi: 10.1016/j.jhydrol.2021.126213. [DOI] [Google Scholar]

- Ahmed AAM, Deo RC, Ghahramani A, Raj N, Feng Q, Yin Z, Yang L. LSTM integrated with Boruta-random forest optimiser for soil moisture estimation under RCP4.5 and RCP8.5 global warming scenarios. Stoch Environ Res Risk Assess. 2021 doi: 10.1007/s00477-021-01969-3. [DOI] [Google Scholar]

- Ahmed A, Deo RC, Feng Q, Ghahramani A, Raj N, Yin Z, Yang L. Hybrid deep learning method for a week-ahead evapotranspiration forecasting. Stoch Environ Res Risk Assess. 2021 doi: 10.1007/s00477-021-02078-x. [DOI] [Google Scholar]

- Ahmed A, Deo RC, Raj N, Ghahramani A, Feng Q, Yin Z, Yang L. Deep learning forecasts of soil moisture: convolutional neural network and gated recurrent unit models coupled with satellite-derived MODIS, observations and synoptic-scale climate index data. Remote Sens. 2021;13(4):554. doi: 10.3390/rs13040554. [DOI] [Google Scholar]

- Ahmed AM, Deo RC, Feng Q, Ghahramani A, Raj N, Yin Z, Yang L. Deep learning hybrid model with Boruta-Random forest optimiser algorithm for streamflow forecasting with climate mode indices, rainfall, and periodicity. J Hydrol. 2021;599:126350. doi: 10.1016/j.jhydrol.2021.126350. [DOI] [Google Scholar]

- Alados I, Mellado JA, Ramos F, Alados-Arboledas L. Estimating UV erythemal irradiance by means of neural networks. Photochem Photobiol. 2004;80:351–358. doi: 10.1562/2004-03-12-RA-111.1. [DOI] [PubMed] [Google Scholar]

- Alados I, Gomera MA, Foyo-Moreno I, Alados-Arboledas L. Neural network for the estimation of UV erythemal irradiance using solar broadband irradiance. Int J Climatol. 2007;27:1791–1799. doi: 10.1002/joc.1496. [DOI] [Google Scholar]

- Alfadda A, Rahman S, Pipattanasomporn M. Solar irradiance forecast using aerosols measurements: a data driven approach. Sol Energy. 2018;170:924–939. doi: 10.1016/j.solener.2018.05.089. [DOI] [Google Scholar]

- Allaart M, van Weele M, Fortuin P, Kelder H. An empirical model to predict the UV-index based on solar zenith angles and total ozone. Meteorol Appl. 2004;11:59–65. doi: 10.1017/S1350482703001130. [DOI] [Google Scholar]

- Anderiesz C, Elwood M, Hill DJ. Cancer control policy in Australia. Aust N Z Health Policy. 2006;3:12. doi: 10.1186/1743-8462-3-12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Antanasijević D, Pocajt V, Perić-Grujić A, Ristić M. Modelling of dissolved oxygen in the Danube River using artificial neural networks and Monte Carlo Simulation uncertainty analysis. J Hydrol. 2014;519:1895–1907. doi: 10.1016/j.jhydrol.2014.10.009. [DOI] [Google Scholar]

- ARPANSA (2021) Australian radiation and nuclear protection agency 2021 realtime UV levels [WWW Document]. ARPANSA. https://www.arpansa.gov.au/our-services/monitoring/ultraviolet-radiation-monitoring/ultraviolet-radiation-index. Accessed 9 July 2021

- Barrett P, Hunter J, Miller JT, Hsu J-C, Greenfield P (2005) matplotlib: a portable python plotting package 347, 91

- Baumgaertner AJG, Seppälä A, Jöckel P, Clilverd MA. Geomagnetic activity related NOx enhancements and polar surface air temperature variability in a chemistry climate model: modulation of the NAM index. Atmos Chem Phys. 2011;11:4521–4531. doi: 10.5194/acp-11-4521-2011. [DOI] [Google Scholar]

- Beltrán-Castro J, Valencia-Aguirre J, Orozco-Alzate M, Castellanos-Domínguez G, Travieso-González CM (2013) Rainfall forecasting based on ensemble empirical mode decomposition and neural networks. In: International work-conference on artificial neural networks. Springer, pp 471–480

- BOM (2020) Australia’s official weather forecasts & weather radar: Bureau of Meteorology [WWW Document]. http://www.bom.gov.au/. Accessed 9 July 2021

- Boniol M. Descriptive epidemiology of skin cancer incidence and mortality. In: Ringborg U, Brandberg Y, Breitbart E, Greinert R, editors. Skin cancer prevention. Boca Raton: CRC Press; 2016. pp. 221–242. [Google Scholar]

- Brownlee J (2016) Deep learning with python: develop deep learning models on Theano and tensor flow using Keras. Machine Learning Mastery

- Chai T, Draxler RR. Root mean square error (RMSE) or mean absolute error (MAE)? Arguments against avoiding RMSE in the literature. Geosc Model Dev. 2014;7:1247–1250. doi: 10.5194/gmd-7-1247-2014. [DOI] [Google Scholar]

- Chen JP, Yang L, Wang LK, Zhang B. Ultraviolet radiation for disinfection. In: Wang LK, Hung Y-T, Shammas NK, editors. Advanced physicochemical treatment processes, handbook of environmental engineering. Totowa: Humana Press; 2006. pp. 317–366. [Google Scholar]

- Chen C, Jiang H, Zhang Y, Wang Y (2010) Investigating spatial and temporal characteristics of harmful Algal Bloom areas in the East China Sea using a fast and flexible method. In: 2010 18th international conference on geoinformatics. Presented at the 2010 18th international conference on geoinformatics, pp 1–4. 10.1109/GEOINFORMATICS.2010.5567490