Abstract

Background

Atrial fibrillation (AF) is a common heart rhythm disorder that elevates stroke risk. Stroke survivors undergo routine heart rhythm monitoring for AF. Smartwatches are capable of AF detection and could potentially replace traditional cardiac monitoring in stroke patients.

Objective

The goal of Pulsewatch is to assess the accuracy, usability, and adherence of a smartwatch-based AF detection system in stroke patients.

Methods

The study will consist of two parts. Part I will have 6 focus groups with stroke patients, caretakers, and physicians, and a Hack-a-thon, to inform development of the Pulsewatch system. Part II is a randomized clinical trial with 2 phases designed to assess the accuracy and usability in the first phase (14 days) and adherence in the second phase (30 days). Participants will be randomized in a 3:1 ratio (intervention to control) for the first phase, and both arms will receive gold-standard electrocardiographic (ECG) monitoring. The intervention group additionally will receive a smartphone/smartwatch dyad with the Pulsewatch applications. Upon completion of 14 days, participants will be re-randomized in a 1:1 ratio. The intervention group will receive the Pulsewatch system and a handheld ECG device, while the control group will be passively monitored. Participants will complete questionnaires at enrollment and at 14- and 44-day follow-up visits to assess various psychosocial measures and health behaviors.

Results

Part I was completed in August 2019. Enrollment for Part II began September 2019, with expected completion by the end of 2021.

Conclusion

Pulsewatch aims to demonstrate that a smartwatch can be accurate for real-time AF detection, and that older stroke patients will find the system usable and will adhere to monitoring.

Keywords: Atrial fibrillation, Commercial wearable device, Photoplethysmography, Stroke

Key Findings.

• Emerging evidence suggests that smartwatches may be promising modalities for detection of atrial fibrillation, but they are underexplored in older stroke patients.
• Findings from our study will be critical in identifying ideal applications of these devices to maximize their potential in health care settings.

Introduction

Background

Atrial fibrillation (AF) is the world’s most common serious heart rhythm problem, affecting more than 5 million Americans.1, 2, 3 AF carries a 5-fold risk of stroke,4 a 2-fold risk of heart failure,5 and a 50 % increased risk of dying. The excess annual national cost of treating AF patients totals almost $26 billion, and much of this cost relates not only to its detection but also to treatment of its secondary complications,6 many of which are preventable if AF is diagnosed early.7 AF is the single greatest risk factor for stroke, which makes cardiac rhythm monitoring especially critical for survivors of ischemic stroke. Current American Stroke Association stroke management guidelines state: “For patients who have experienced an acute ischemic stroke or TIA with no other apparent cause, prolonged rhythm monitoring (∼7 days) for AF is reasonable.”8 This is because AF is the primary cause of ∼15 % of all strokes.9,10 Moreover, patients with AF who are not treated with anticoagulation therapies tend to have larger strokes that lead to significant disability.10 Approximately 200,000 cases of cryptogenic stroke are identified in the United States annually, or 1 in 4 of all strokes. Among these patients, paroxysmal atrial fibrillation (pAF) is identified in 1 of 5 if long-term postdischarge cardiac monitoring is used.11, 12, 13 However, the timing and symptomology of AF can be highly variable, especially early in the disease course, rendering detection especially challenging. Unfortunately, traditional in-hospital monitoring following stroke identifies AF in only ≈5 % of cases, and longer-term monitoring, although effective, is rarely used because it presently necessitates the implantation of an expensive invasive device.2,3,7 Currently, the gap between in-hospital and longer-term monitoring is filled by conventional rhythm monitoring technologies, such as the 24-hour Holter monitor.
However, these technologies not only are too brief to detect many instances of AF but also are burdensome to patients because of the multiple wires that must remain continuously attached to the body via skin-irritating electrodes.8 Approximately 1 in 4 patients with cryptogenic stroke have AF, and the median time to first AF episode is approximately 30 days after monitoring onset.14 Therefore, monitoring periods must be at least 7 days in duration to obtain the highest diagnostic yield for pAF.11 In fact, the findings of CRYSTAL-AF (Cryptogenic Stroke and Underlying AF trial) suggest that longer-term monitoring significantly improves pAF detection rates.15 Additionally, recent results from the STROKE-AF (Stroke of Known Cause and Underlying Atrial Fibrillation) trial demonstrate that long-term AF monitoring may be important in all ischemic stroke survivors.16 Therefore, identifying less burdensome and less invasive methods for longer-term pAF screening in stroke populations remains crucial.

Smartphones and smartwatches are a mainstay in the general population and are increasingly commonplace in older populations. Approximately 74 % of Americans over the age of 65 years currently use mobile phones, and that proportion is increasing. Furthermore, more than two-thirds of mobile phones in use today can run smartphone apps.17 Although smartphone use is increasingly common (10 % increase in the last 3 years) among seniors,17 physical difficulties, skeptical attitudes, and difficulty learning new technologies are barriers in this population. However, once older adults adopt new technologies, these tools often become integral to their lives.17,18

Recently, multiple commercially available wrist-worn wearables have been cleared by the Food and Drug Administration (FDA) for electrocardiogram (ECG)-based rhythm monitoring and AF detection, including the Apple Watch (Cupertino, CA), Samsung Galaxy Watch Active2 (Seoul, South Korea), and Fitbit Sense (San Francisco, CA).19, 20, 21 Notably, these ECG-based AF detection approaches do not provide continuous monitoring, because users must touch the smartwatch crown or sensor with a finger of the hand not wearing the watch. Photoplethysmography (PPG)-based AF monitoring from smartwatches does provide continuous monitoring, but this approach has not been approved by the FDA. Although other large-scale studies, such as the Apple Heart Study and Huawei Heart Study, have examined the accuracy of smartwatches for AF detection, accuracy is not always assessed in real time, and other important facets of use, such as adherence and usability, have not been examined.22 The dearth of research on smartwatch usability in older adults is a critical gap, given the specific challenges in this age group, including potential deficits in vision, cognition, or fine motor skills.

Objectives

In this multiphase investigation, we first seek to develop our Pulsewatch system (mobile device and smartwatch applications), with provider and patient input. Furthermore, we aim to evaluate the accuracy of the Pulsewatch system for pAF detection in survivors of stroke and/or transient ischemic attack (TIA) compared to a gold-standard cardiac monitoring device, and to assess its usability and acceptability. Finally, we will evaluate the rates of adherence and participant-level factors associated with successful longer-term use of the Pulsewatch system.

Methods

Study design

We are conducting a multiphase study. The first part consists of patient and provider focus groups, followed by a Hack-a-thon, to inform development of the Pulsewatch app and system design. The second part is a randomized controlled trial (RCT) that will assess the impact of the Pulsewatch system on stroke/TIA survivors in various psychosocial domains, the accuracy of our AF detection algorithm, general usability of the system, and adherence to the Pulsewatch system over time.

Study population

For Part I, the developmental phase of the study, patients will be eligible to participate if they (1) are 50 years of age or older; (2) have a history of stroke or TIA; (3) are presenting at the UMass Memorial Medical Center (UMMMC) inpatient service or ambulatory clinic (neurology and cardiovascular clinics included); (4) are able to provide informed consent; and (5) are willing to participate in a focus group and a Hack-a-thon. To be eligible, patient caregivers must (1) have a history, within the last five years, of caring for a family member or loved one who meets the patient inclusion criteria listed above; (2) be able to give assent; and (3) be willing to participate in a focus group and a Hack-a-thon. Patients and patient caregivers will be excluded from participation if they (1) have a serious physical illness that would interfere with study participation (ie, are unable to interact with a smart device or communicate verbally or via written text); (2) lack the capacity to sign informed consent or give assent; (3) are unable to read and write in the English language; (4) plan to move from the area during the study period; or (5) are unwilling to complete all study procedures, including attending a focus group and a Hack-a-thon.

For Part I, Aim 1, providers will be eligible to participate if they (1) are a medical provider (eg, cardiology fellow or neurology resident, attending cardiologist, attending neurologist, stroke or cardiology nurse practitioner) at UMMMC; (2) have more than 3 years of experience providing care to stroke or TIA patients; and (3) are willing to participate in a focus group and a Hack-a-thon.

For Part II, patients will be eligible to participate if they (1) are 50 years of age or older; (2) have a history of stroke or TIA; (3) are presenting at the UMMMC inpatient service or ambulatory clinic (neurology and cardiovascular clinics included); (4) are able to provide informed consent; and (5) are willing and able to use the Pulsewatch system (smartwatch and smartphone app) daily for up to 44 days and to return to UMMMC for up to 2 study visits. Patients will be excluded from participation if they (1) have a major contraindication to anticoagulation treatment (eg, major hemorrhagic stroke); (2) have plans to move out of the area over the 44-day follow-up period; (3) are unable to read or speak the English language; (4) are unable to provide informed consent; (5) have a known allergy or hypersensitivity to medical-grade hydrocolloid adhesives or hydrogel; (6) have a life-threatening arrhythmia that requires in-patient monitoring for immediate analysis; or (7) have an implantable pacemaker, as paced beats interfere with the ECG reading.

Patients with a major contraindication to anticoagulation treatment are excluded because the goal of this study is to help prevent secondary stroke, and anticoagulation is a mainstay of treatment for these patients. Patients who are unable to receive anticoagulation are therefore not in the target population for this study.

Part I: Pulsewatch app development—Focus groups

Focus groups will be conducted to inform app development and will include patients, patient caregivers, and physician provider participants. Separate focus groups will be convened for (1) patients and patient caregivers and (2) providers. Focus groups will last for approximately 60 minutes. Qualitative data gathered at these focus groups will be analyzed using thematic content analysis and will generate aggregated preferences and recommendations about smartphone application message components, user interface, reporting, alerts, and watch functions. These analyses will serve to finalize the Pulsewatch app design.

Patient focus groups will begin with a broad description of the Pulsewatch system (including smartphone app and watch), its purpose, and general functionality. Each participant will be given a smartwatch and smartphone programmed with a rudimentary prototype of the Pulsewatch app and will be asked to use them during the focus group. In keeping with contemporary user-centric app design processes,23,24 facilitators will interact with participants as they use the smartwatch and smartphone app. After users have had sufficient time to wear the watch and interact with the app, we will ask them open-ended questions exploring elements such as the layout of the various app screens; preferred graphical representations of heart rate and rhythm (eg, bar graph, slider, stoplight, emojis); push notification alert prompts (including the appropriate number and timing of prompts delivered per day); and opinions about personalized intervention messages (eg, “Mr. Jones, you’ve not checked your heart rhythm”).

Provider focus groups also will begin with an opportunity to interact with the Pulsewatch system prototype. Conversation will focus on the format of rhythm data clinicians would prefer to engage with, the optimal duration and frequency of patient use, and process considerations for best integrating smartwatch-generated alerts into clinical workflows.

Part I: Pulsewatch app development—Hack-a-thon

After the focus groups, 4 patient participants and 4 health care provider participants from the focus groups will be invited back to attend a “Hack-a-thon” with the computer programmers and engineers on the study team to create the final Pulsewatch technology to be deployed in Part II (clinical trial). Modeled on Hack-a-thons in the software engineering industry, the event is intended to drive innovation by creating team synergy and accelerating product development.

The purpose of the Hack-a-thon is to optimize the interactivity and usability of Pulsewatch, guided by information gleaned from the focus groups. In this format, end-users (providers and patients) can suggest changes, and programmers will make modifications in real time. Combining focus groups with agile programming is an innovative and sound approach to designing apps. This event will be critical to preparing for the clinical trial phase, because it will shape the interface and functionality of the app that patients will use. At the Hack-a-thon, participants will be asked to use the most recent prototype of the Pulsewatch app and system and then give feedback on it. Programmers will then modify the application to reflect this feedback.

This is a real-time, iterative process, and a near-complete version of the Pulsewatch app will be developed upon conclusion of the Hack-a-thon. After the event, the study team will meet once again to review and encode final changes to the application before deployment in Part II.

Part II: Trial phase—Accuracy and usability

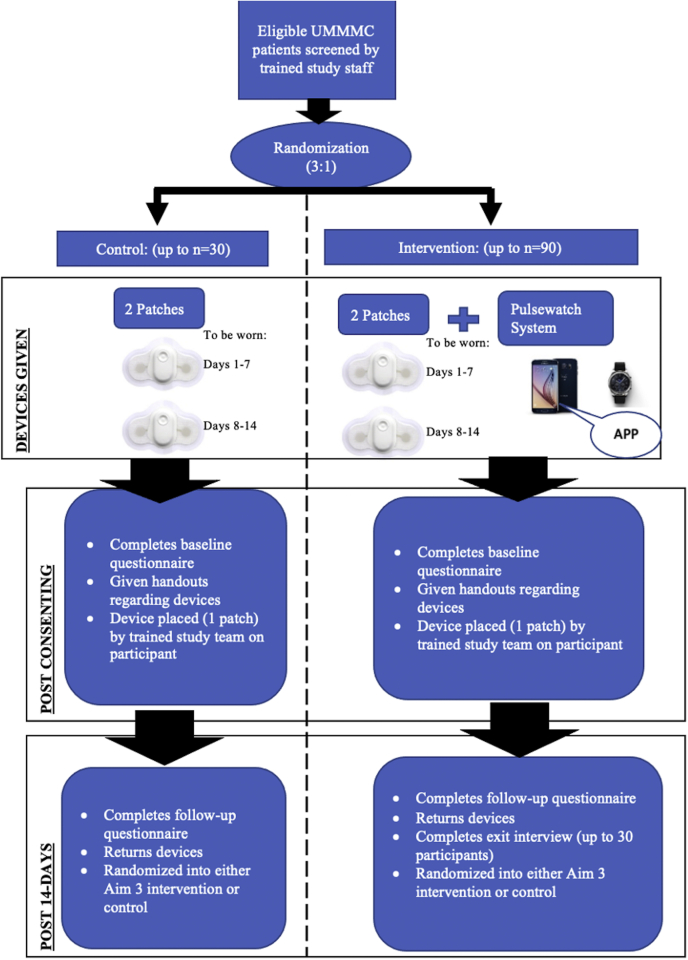

The trial phase of the study will occur after the design and development of the Pulsewatch system (app and watch algorithms) is complete (Part I). For the first phase of Part II, participants will be randomized to either the intervention or control arm. An overview of the 14-day study protocol is detailed in Figure 1.

Figure 1.

Participant process of the trial phase. UMMMC = UMass Memorial Medical Center.

At enrollment, all participants will be asked to complete a standardized baseline questionnaire. After completion of the questionnaire, participants will be randomized to either the intervention or control arm in a 3:1 simple block randomization scheme. We chose to assign a larger proportion of participants to the intervention group because the primary analyses are focused on the intervention group.
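The allocation logic can be illustrated with a short permuted-block sketch. This is not the study's randomization software: the fixed block size of 4 (the smallest block that holds a 3:1 ratio), the labels, and the function name `permuted_block_schedule` are illustrative assumptions.

```python
import random
from typing import List, Optional, Sequence


def permuted_block_schedule(
    n: int,
    ratio: Sequence[int] = (3, 1),
    labels: Sequence[str] = ("intervention", "control"),
    seed: Optional[int] = None,
) -> List[str]:
    """Build an allocation list from shuffled blocks of size sum(ratio).

    Hypothetical helper: with ratio (3, 1) every block of 4 holds 3
    intervention and 1 control assignment, so the 3:1 balance is restored
    after each complete block.
    """
    rng = random.Random(seed)
    # One unshuffled block, e.g. ["intervention"] * 3 + ["control"] * 1.
    block = [label for label, k in zip(labels, ratio) for _ in range(k)]
    schedule: List[str] = []
    while len(schedule) < n:
        shuffled = list(block)
        rng.shuffle(shuffled)  # random order within each block
        schedule.extend(shuffled)
    return schedule[:n]  # a trailing partial block can leave n slightly unbalanced
```

With n = 120 and the default 3:1 ratio, the schedule yields 90 intervention and 30 control assignments; calling it with `ratio=(1, 1)` gives the 60/60 split used for the day-14 re-randomization. A real trial would typically also conceal the schedule and may vary block sizes.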

Participants randomized to the control group (n = 30) will be given 2 gold-standard FDA-approved Cardiac Insight Cardea Solo cardiac patch monitors (Seattle, WA), the first of which will be placed by study staff. Each patch records heart rhythm data for 7 days, allowing participants to go home with continuous AF monitoring without the need to carry a bulky Holter device. Participants will be asked to remove the first patch at the end of day 7, replace it with the second patch, and wear it for the next 7 days. Participants randomized to the intervention group (n = 90) will be asked to use the same gold-standard Cardiac Insight cardiac patch monitor device for 14 days. In addition, intervention participants will be given a smartwatch (Samsung Gear S3 or Galaxy Watch 3) and a Samsung smartphone with the Pulsewatch study apps installed. Research staff will train participants and any caregivers in the use of study devices. After completion of the 14-day period, all participants will return for a follow-up visit at which they will be asked to complete another standardized questionnaire and return all of their study devices. The patch monitors returned at this visit will be read by a study physician blinded to randomization status. Abnormal findings on the patch monitor in either the intervention or control group will result in notification of the participant’s treating physician.

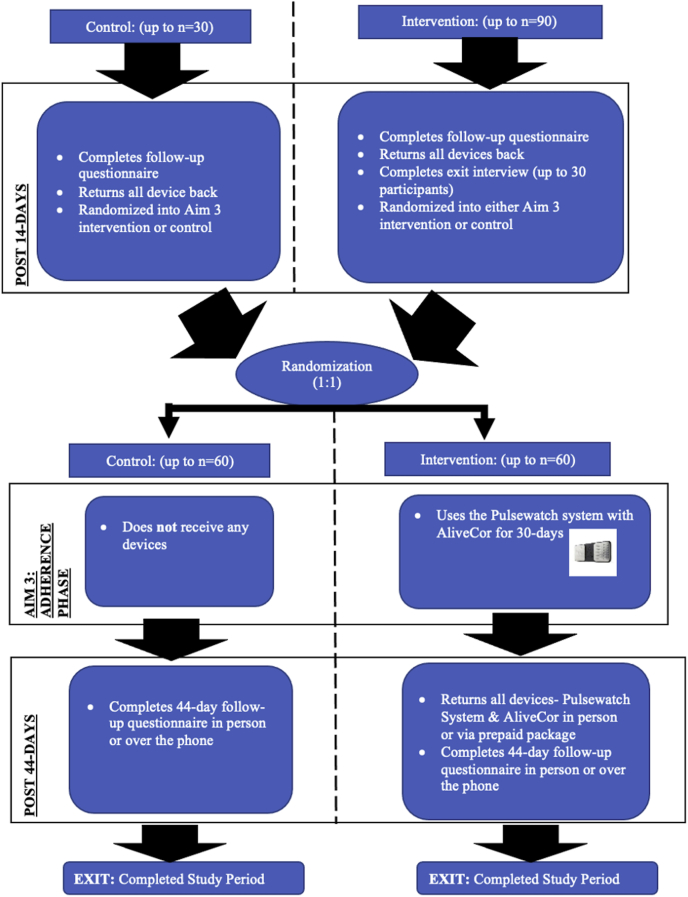

At the 14-day study visit, all participants once again will be randomized for participation in the next part of the clinical trial assessing smartwatch adherence over an additional 30 days. Randomization to either the intervention or control arm at this stage will be done via a 1:1 permuted block randomization scheme. Figure 2 details an overview of the 30-day protocol. Because the initial 14-day period is focused on measuring accuracy, study staff will be monitoring adherence in real time and calling participants to troubleshoot any issues preventing daily watch wear in order to maximize data collection. Thus, the adherence data obtained during this period are not representative of true watch wear time. This additional 30-day period allows for true assessment of adherence to Pulsewatch system use as measured by watch wear patterns and engagement with the app’s features, such as symptom logging over a longer period.

Figure 2.

Participant process of the trial phase continued.

Participants randomized to the control group at this stage (n = 60) will not receive any devices over the 30-day adherence period. Participants randomized to the intervention group (n = 60) will receive the Pulsewatch system (Samsung smartwatch + Samsung smartphone with study apps), as well as an FDA-cleared AliveCor KardiaMobile device (Mountain View, CA) to be used over a 30-day period. The purpose of the AliveCor device in this 30-day period is to allow participants to verify their smartwatch pulse readings if they wish, as they will not be wearing an ECG monitor. Upon completion of this additional 30-day wear period, participants will complete a final study questionnaire. This second randomization step will create 4 categories with regard to duration of Pulsewatch system exposure, as outlined in Table 1.

Table 1.

Pulsewatch system exposure by randomization status

| Group | 14-Day period (accuracy/usability) | 30-Day period (adherence) | Days of Pulsewatch device exposure |
|---|---|---|---|
| 1 | Intervention | Intervention | 44 |
| 2 | Control | Intervention | 30 |
| 3 | Intervention | Control | 14 |
| 4 | Control | Control | 0 |

Categorizing Pulsewatch exposure into 4 distinct groups allows for more nuanced analyses of differences in self-reported outcomes across the groups.

Part II: Self-reported measures

At enrollment and at the 14-day and 44-day follow-up study visits, all Part II participants will complete a questionnaire that includes standardized scales to assess various psychosocial measures and health behaviors. The contents and administration schedule of the questionnaires, along with an overview of each instrument, are given in Table 2.

Table 2.

Overview of which questionnaires are administered, which are outcomes, and when they are administered

| Measurement | Questionnaire tool | Baseline assessment (all Part II) | 14-Day follow-up assessment (control) | 14-Day follow-up assessment (intervention) | 44-Day follow-up assessment (all Part II) |
|---|---|---|---|---|---|
| Physical assessments | | | | | |
| Vision | 4 items | ✓ | | | |
| Hearing | 3 items | ✓ | | | |
| Psychosocial measures | | | | | |
| Cognitive impairment | Montreal Cognitive Assessment (MoCA) (30 items) | ✓ | | | |
| Social support | Social Support Scale (5 items) | ✓ | | | |
| | Lubben Social Network Scale (6 items) | ✓ | | | |
| Depressive symptoms | Patient Health Questionnaire (PHQ-9) (9 items) | ✓ | | | |
| Anxious symptoms | Generalized Anxiety Disorder (GAD-7) (7 items) | ✓ | ✓ | ✓ | ✓ |
| Quality-of-life measures | | | | | |
| Physical and mental health | Health Survey SF-12 (12 items) | ✓ | ✓ | ✓ | ✓ |
| Patient activation | Consumer Health Activation Index (CHAI) (10 items) | ✓ | ✓ | ✓ | ✓ |
| Disease management self-efficacy | Chronic Disease Management Self-Efficacy Scales: Manage Disease in General Scale (5 items) | ✓ | ✓ | ✓ | |
| | Chronic Disease Management Self-Efficacy Scales: Manage Symptoms Scale (5 items) | ✓ | ✓ | ✓ | |
| Medications | | | | | |
| Medication adherence | Adherence to Refills and Medications Scale (ARMS) (12 items) | ✓ | ✓ | ✓ | |
| Health-related behavior | | | | | |
| Social history | Smoking and alcohol use | ✓ | | | |
| Other | | | | | |
| Interaction with provider | Perceived Efficacy in Patient–Physician Interactions (PEPPI) | ✓ | | | |
| Technology use | Device ownership/Internet access | ✓ | | | |
| Usability assessment | System Usability Scale (SUS) (10 items), investigator-generated questions | ✓ | | | |
| App usability assessment | Mobile Application Rating Scale (MARS) App Classification (11 items) | ✓ | | | |
| Demographics | Socioeconomic status, employment, race, and ethnicity questions | ✓ | | | |

The domains listed in Table 2 will be assessed via the following items:

Anxiety: Anxiety will be assessed using the Generalized Anxiety Disorder-7 scale (GAD-7),25 a revised version of the anxiety module from the Patient Health Questionnaire, which is based on the Diagnostic and Statistical Manual of Mental Disorders (Fourth Edition) (DSM-IV) criteria for generalized anxiety disorder over the past 2 weeks.26 The GAD-7 score ranges from 0 to 21, with scores of 5, 10, and 15 representing validated cutpoints for mild, moderate, and severe levels of anxiety symptoms, respectively. A score ≥10 has high sensitivity (0.89) and specificity (0.82) for psychiatrist-diagnosed anxiety disorder and correlates significantly with health-related quality of life.25
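As a minimal scoring sketch: the GAD-7 has seven items, each scored 0 to 3, so the total lies between 0 and 21. The function names below are hypothetical, and the label "minimal" for totals below 5 is the conventional band name rather than one stated in this protocol.

```python
from typing import Sequence

# Cutpoints stated in the text: 5 = mild, 10 = moderate, 15 = severe.
# "minimal" for totals below 5 is the conventional label (an assumption here).
_GAD7_BANDS = ((15, "severe"), (10, "moderate"), (5, "mild"), (0, "minimal"))


def gad7_total(items: Sequence[int]) -> int:
    """Sum of the 7 GAD-7 items, each scored 0-3 (total 0-21)."""
    if len(items) != 7 or any(score not in (0, 1, 2, 3) for score in items):
        raise ValueError("GAD-7 needs 7 item scores, each 0-3")
    return sum(items)


def gad7_severity(total: int) -> str:
    """Map a total score to the first severity band whose cutpoint it reaches."""
    for cutpoint, label in _GAD7_BANDS:
        if total >= cutpoint:
            return label
    raise ValueError("total must be non-negative")


def gad7_screen_positive(total: int) -> bool:
    """Score >= 10, the threshold with the reported sensitivity/specificity."""
    return total >= 10
```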

Physical and Mental Health: The SF-12 is a short-form health survey to assess health-related quality of life. This validated instrument’s domains include general health–related questions and mental health–related questions.27 Scores range from 0 to 100, with higher scores indicating higher levels of health-related quality of life.

Patient Activation: Patient activation refers to a patient’s ability and willingness to manage his or her health. The Consumer Health Activation Index (CHAI) is a 10-item scale that assesses patient activation. Raw scores range from 10 to 60 and are linearly transformed to a scale from 0 to 100; higher scores are associated with fewer depressive and anxiety symptoms, as well as greater physical functioning.28
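Assuming the linear transformation maps the raw endpoints onto the endpoints of the new scale (10 to 0 and 60 to 100), it reduces to the expression below; the instrument's published scoring instructions should be checked before relying on this form.

$$
\text{CHAI}_{100} = \frac{\text{raw} - 10}{60 - 10} \times 100 = 2\,(\text{raw} - 10)
$$

Under this assumption, a raw score of 35 corresponds to 50 on the transformed scale.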

Cognitive Function: The Montreal Cognitive Assessment (MoCA) is a 10-minute, 30-item screening tool designed to assist physicians in detecting mild cognitive impairment.29 The MoCA correlates well (0.89) with the widely used Mini Mental State Exam30 but outperforms it in the detection of mild cognitive impairment.29,31 The MoCA score can be used to examine the magnitude of change over time and offers validated, education-adjusted cutpoints for mild and moderate cognitive impairment.
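The education adjustment can be sketched as follows, assuming the rule from the original MoCA validation: add 1 point for 12 or fewer years of formal education (capped at 30) and treat scores below 26 as below the normal range. The mild and moderate impairment cutpoints used in this study are not given in the text and are not encoded; the telephone version (T-MoCA) is scored differently and is not covered. Function names are hypothetical.

```python
def moca_adjusted(raw_score: int, years_education: float) -> int:
    """Education-adjusted MoCA: +1 point for <= 12 years of education, max 30."""
    if not 0 <= raw_score <= 30:
        raise ValueError("MoCA raw score must be 0-30")
    bonus = 1 if years_education <= 12 else 0
    return min(30, raw_score + bonus)


def moca_below_normal(adjusted_score: int, normal_cutoff: int = 26) -> bool:
    """True when the adjusted score falls below the commonly used cutoff of 26."""
    return adjusted_score < normal_cutoff
```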

Social Support: We will use a 5-item modified Social Support Scale and the 6-item Lubben Social Network Scale.32,33 Together, these measures assess the breadth (eg, help with activities) and depth (eg, network size) of the participant’s social support.

Depressive Symptoms: We will use the 9-item version of the Patient Health Questionnaire (PHQ),26 which can yield both a provisional diagnosis of depression and a severity score that is associated with functional status, disability days, and health care utilization.34 The PHQ-9 consists of the 9 criteria upon which DSM-IV depressive disorders are based. Using a cutpoint > 10 (range 0–27), the PHQ-9 has high sensitivity (0.88) and specificity (0.88) for detecting major depression among patients with cardiovascular disease.35,36

Vision and Hearing: Participants will be asked questions regarding their vision and hearing based on a 4-point Likert scale.

Disease Management Self-Efficacy: Self-efficacy for disease management is associated with engagement in health behaviors and improved medication adherence.37,38 The General Disease Management scale is a 5-item scale assessing confidence in disease self-management (scores 0–50; high scores = greater confidence). The Symptom Management Scale is a 5-item scale assessing confidence in managing chronic disease symptoms.

Medication Adherence: Medication adherence will be measured with the 12-item Adherence to Refills and Medications Scale (ARMS), a well-validated measure of patient-reported adherence.39 ARMS scores range from 1 to 4 (higher scores = poorer adherence).

Social History: Social and behavioral factors such as smoking and alcohol use are related to strokes and cardiovascular conditions. Given that the goal of this study is to prevent recurrent strokes in this patient population, we believe it is necessary to obtain this information from participants.

App Usability: We will be using the Mobile Application Rating Scale (MARS) App Classification (11 items) to help us capture the participants’ experience with the Pulsewatch applications.

Ethical approval and trial registration

Formal ethical approval for this study was obtained from the University of Massachusetts Medical School Institutional Review Board (IRB) (Approval Number H00016067). Written informed consent will be collected from all patient participants in Part I and from all participants in Part II. Verbal assent will be obtained from caregivers and providers enrolled in Part I. This study is registered at ClinicalTrials.gov (Identifier NCT03761394).

COVID-19 adaptations

Due to the unprecedented challenges posed by coronavirus disease 2019 (COVID-19) with regard to in-person human subjects research, we have adapted the protocol to allow all study encounters to occur over the phone. The consent form was adapted for telephone use and approved by the IRB, and eligible participants are called using the contact information in their electronic health record and consented by telephone. All study devices, instructions, and compensation will be sent by mail, and, once package tracking confirms receipt, research staff will call participants to conduct the baseline interview, offer device training, and guide participants through placement of the ECG patch. An abbreviated, validated telephone version of the MoCA (T-MoCA) will be administered, omitting items that require engaging with visual elements; all other questionnaire components remain the same as at an in-person visit. This protocol was initiated in July 2020, and all participants who are approached over the phone are also offered the option to participate in person per the original study protocol if they prefer.

Statistical analysis

AF detection

We will examine the system’s accuracy for AF identification as well as estimate AF burden. A participant will be classified as having AF or not at the end of the 14-day monitoring period based on the gold-standard monitor. The smartwatch conducts pulse checks as follows: it records PPG continuously for 5 minutes and, if no AF is detected, pauses monitoring for the next 5 minutes; if AF is detected, it monitors continuously. Pulse-derived heart rhythm data from the Pulsewatch system are divided into 30-second segments for analysis, and a positive reading is defined as AF detected in at least 7 of 10 consecutive segments (5 minutes). The total number of positive readings collected from Pulsewatch over 14 days will be used as the independent variable in a logistic regression to predict the binary outcome of AF as determined by the gold-standard monitor (yes vs no). The area under the receiver operating characteristic (ROC) curve (AUC) will be calculated from the results of the logistic regression to evaluate Pulsewatch performance for AF screening. From the ROC curve, we can identify the cutoff for the total number of positive readings that yields the best combination of sensitivity and specificity.
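The reading definition and the participant-level ROC analysis can be sketched in plain Python. Several details are assumptions rather than the study's implementation. Segments from one continuous monitoring bout are grouped into non-overlapping 10-segment readings, and any trailing partial reading is dropped. The "best combination of sensitivity and specificity" is interpreted as the Youden index (sensitivity + specificity - 1). All function names are hypothetical. Because a one-predictor logistic model produces probabilities that rise monotonically with the count when the fitted slope is positive, the AUC of the fitted probabilities equals the AUC of the raw count, so the sketch ranks counts directly.

```python
from typing import List, Optional, Sequence, Tuple

WINDOWS_PER_READING = 10  # 10 x 30-s segments = one 5-minute reading
MIN_AF_WINDOWS = 7        # positive if AF in >= 7 of the 10 segments


def readings_from_segments(segment_is_af: Sequence[bool]) -> List[bool]:
    """Group consecutive 30-s segment labels into non-overlapping readings.

    Assumption: a trailing partial reading (< 10 segments) is discarded.
    """
    last_start = len(segment_is_af) - WINDOWS_PER_READING
    return [
        sum(segment_is_af[i:i + WINDOWS_PER_READING]) >= MIN_AF_WINDOWS
        for i in range(0, last_start + 1, WINDOWS_PER_READING)
    ]


def positive_reading_summary(readings: Sequence[bool]) -> Tuple[int, float]:
    """(number of positive readings, percentage of all readings)."""
    n_pos = sum(readings)
    pct = 100.0 * n_pos / len(readings) if readings else 0.0
    return n_pos, pct


def auc(scores_af: Sequence[float], scores_no_af: Sequence[float]) -> float:
    """Mann-Whitney estimate of the ROC AUC; ties count as 1/2."""
    wins = 0.0
    for a in scores_af:
        for n in scores_no_af:
            wins += 1.0 if a > n else 0.5 if a == n else 0.0
    return wins / (len(scores_af) * len(scores_no_af))


def youden_cutoff(
    scores: Sequence[float], has_af: Sequence[bool]
) -> Optional[Tuple[float, float, float]]:
    """(cutoff, sensitivity, specificity) maximizing sens + spec - 1,
    calling a participant AF-positive when score >= cutoff."""
    n_pos = sum(has_af)
    n_neg = len(has_af) - n_pos
    best = None
    for c in sorted(set(scores)):
        tp = sum(1 for s, y in zip(scores, has_af) if y and s >= c)
        tn = sum(1 for s, y in zip(scores, has_af) if not y and s < c)
        sens, spec = tp / n_pos, tn / n_neg
        if best is None or sens + spec > best[1] + best[2]:
            best = (c, sens, spec)
    return best
```

The same `auc` and `youden_cutoff` functions apply unchanged when the score is the percentage of positive readings instead of the count.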

A similar analysis will be conducted using the percentage of positive readings among all readings collected from each participant as the independent variable. The 95 % confidence interval (CI) of the AUC will be calculated using the formula given by Hanley and McNeil.40 We will then conduct exploratory analyses to examine whether participant characteristics affect watch performance over 14 days. For example, we will compare the areas under 2 independent ROC curves for female vs male participants (or vision impaired vs not) using the χ2 test.
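A sketch of the Hanley and McNeil standard error, the resulting Wald-type 95 % CI, and a 1-df χ2 comparison of two AUCs from independent subgroups (such as female vs male participants). The comparison statistic, (AUC_a - AUC_b)^2 / (SE_a^2 + SE_b^2), is the standard form for independent samples; the protocol does not spell out its exact test statistic, so treat this as an assumed form. Function names are hypothetical.

```python
import math
from typing import Tuple

Z_95 = 1.96  # two-sided 95 % standard normal quantile


def hanley_mcneil_se(auc: float, n_af: int, n_no_af: int) -> float:
    """Standard error of an AUC estimate (Hanley & McNeil).

    n_af: participants with AF on the gold-standard monitor (positives)
    n_no_af: participants without AF (negatives)
    """
    q1 = auc / (2.0 - auc)
    q2 = 2.0 * auc ** 2 / (1.0 + auc)
    variance = (
        auc * (1.0 - auc)
        + (n_af - 1) * (q1 - auc ** 2)
        + (n_no_af - 1) * (q2 - auc ** 2)
    ) / (n_af * n_no_af)
    return math.sqrt(variance)


def auc_ci95(auc: float, n_af: int, n_no_af: int) -> Tuple[float, float]:
    """Wald-type 95 % CI, AUC +/- 1.96 * SE (not truncated to [0, 1])."""
    se = hanley_mcneil_se(auc, n_af, n_no_af)
    return auc - Z_95 * se, auc + Z_95 * se


def compare_independent_aucs(
    auc_a: float, n_af_a: int, n_no_af_a: int,
    auc_b: float, n_af_b: int, n_no_af_b: int,
) -> Tuple[float, float]:
    """1-df chi-square test for AUCs from two independent groups.

    A 1-df chi-square is a squared standard normal, so
    p = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    """
    se_a = hanley_mcneil_se(auc_a, n_af_a, n_no_af_a)
    se_b = hanley_mcneil_se(auc_b, n_af_b, n_no_af_b)
    chi2 = (auc_a - auc_b) ** 2 / (se_a ** 2 + se_b ** 2)
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value
```

For AUCs close to 1, the upper Wald limit can exceed 1; reports often truncate it at 1 or use a transformed interval instead.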

Usability analysis

We also will examine whether participant characteristics (eg, demographics, level of technology use, cognitive impairment) affect usability of and adherence to the Pulsewatch system. Usability will be assessed by the System Usability Scale (SUS), a validated instrument for perceived system usability. Investigator-generated questions will have responses on a 5-point Likert-like scale, and ordinal regression will be used to evaluate participant characteristics that may be associated with more favorable responses regarding usability of the Pulsewatch system. The SUS score ranges from 0 to 100, and linear regression will be used to examine the participant-level factors that are associated with higher SUS scores. Logistic regression also will be used to examine whether certain participant factors are associated with a SUS score >68, the cutoff above which a system is deemed highly usable.
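The standard SUS scoring rule explains the 0 to 100 range. Each of the 10 items is answered on a 1 to 5 scale. Odd-numbered (positively worded) items contribute response - 1, and even-numbered (negatively worded) items contribute 5 - response. The 0 to 40 sum is multiplied by 2.5. The helper names are hypothetical; the >68 indicator is the binary outcome described above for the logistic regression.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Standard SUS score (0-100) from 10 responses on a 1-5 scale."""
    if len(responses) != 10 or any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("SUS needs 10 responses, each 1-5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


def sus_above_cutoff(score: float, cutoff: float = 68.0) -> bool:
    """Binary outcome for the logistic regression: SUS score > 68."""
    return score > cutoff
```

For example, answering 4 on every odd item and 2 on every even item gives 10 items x 3 points x 2.5 = 75, which is above the cutoff.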

Adherence analysis

Adherence will be operationalized as whether pulse recordings were present on each day of the 30-day adherence monitoring period after the second randomization. We will examine whether participant characteristics (eg, sex, cognitive impairment, or stroke-related quality of life) affect the likelihood of adherence over this 1-month period. We will use a mixed effects logistic regression model with a binary indicator of daily adherence as the dependent variable, participant characteristics as fixed effects, and participant as a random effect to capture the correlation among repeated measures from the same participant. Additionally, we will conduct secondary analyses that dichotomize adherence into high vs low, based on the number of days the smartwatch was worn and on the observed distribution, and examine participant-level factors that predict adherence to the Pulsewatch system. We also will examine the adherence time trend by including time (day) as a fixed effect in the model and participant as a random effect to estimate the slope of adherence over time. To examine whether the time trend varies by participant characteristics, we will include the interaction between characteristics and time in the model so that the slope of adherence over time can be estimated for each category of a participant characteristic and compared among the categories (eg, AF diagnosed vs no AF). We also will conduct secondary analyses using the number of hours worn (total and daily) as an outcome. Group-based trajectory modeling will be used to examine potentially nuanced patterns of watch wear over time, and mixed effects linear regressions will be used to explore associations between participant characteristics and daily watch wear, with participant as a random effect to account for correlation between repeated measures.
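The daily adherence indicator and the long-format table that a mixed effects model takes as input can be sketched as follows. This assumes each recording carries a calendar date; the function names and the high/low threshold are hypothetical, since the protocol sets the threshold from the observed distribution. The model itself would then be fit with a mixed-effects routine (for example, `glmer` from R's lme4 package).

```python
from datetime import date, timedelta
from typing import Dict, Iterable, List, Tuple


def daily_adherence(recording_dates: Iterable[date], start: date, n_days: int = 30) -> List[int]:
    """1 for each study day with >= 1 pulse recording, else 0.

    Recordings outside the n_days window are ignored.
    """
    days_with_data = set(recording_dates)
    return [1 if start + timedelta(days=d) in days_with_data else 0 for d in range(n_days)]


def to_long_format(adherence: Dict[str, List[int]]) -> List[Tuple[str, int, int]]:
    """(participant_id, day, adherent) rows with day numbered from 1.

    participant_id -> random effect; day -> fixed effect for the time trend;
    adherent -> binary dependent variable.
    """
    return [
        (pid, day, flag)
        for pid, flags in adherence.items()
        for day, flag in enumerate(flags, start=1)
    ]


def high_adherence(flags: List[int], threshold_days: int) -> bool:
    """Dichotomized adherence; threshold_days is a hypothetical, data-driven cutoff."""
    return sum(flags) >= threshold_days
```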

Study power

Preliminary data suggest that Pulsewatch will have a sensitivity, specificity, and AUC of at least .90 for detecting pAF.41 Assuming the proposed sample size of 90 and an estimated pAF prevalence of 20% (ie, 18 participants with pAF), we calculated the width of the 95% CI for AUC values ranging from .90 to .95. The resulting CI widths range from .20 (AUC .90) to .14 (AUC .95). Therefore, the proposed sample size will provide a precise estimate of Pulsewatch performance relative to the gold standard.
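The quoted CI widths can be reproduced as a consistency check, assuming the Hanley-McNeil standard-error approximation (reference 40), a normal-approximation 95% CI (AUC ± 1.96 × SE), and 18 of 90 participants with pAF. The protocol does not spell out its exact computation, so this sketch is illustrative rather than the authors' method; the function names are hypothetical.

```python
import math


def hanley_mcneil_se(auc: float, n_pos: int, n_neg: int) -> float:
    """Standard error of an AUC via the Hanley-McNeil (1982) approximation."""
    q1 = auc / (2 - auc)
    q2 = 2 * auc**2 / (1 + auc)
    variance = (
        auc * (1 - auc)
        + (n_pos - 1) * (q1 - auc**2)
        + (n_neg - 1) * (q2 - auc**2)
    ) / (n_pos * n_neg)
    return math.sqrt(variance)


def auc_ci_width(auc: float, n_total: int, prevalence: float, z: float = 1.96) -> float:
    """Width of a normal-approximation CI (auc +/- z * SE) for an assumed AUC."""
    n_pos = round(n_total * prevalence)  # participants with pAF
    n_neg = n_total - n_pos              # participants without pAF
    return 2 * z * hanley_mcneil_se(auc, n_pos, n_neg)


for auc in (0.90, 0.95):
    print(f"AUC {auc:.2f}: 95% CI width = {auc_ci_width(auc, 90, 0.20):.2f}")
# AUC 0.90: 95% CI width = 0.20
# AUC 0.95: 95% CI width = 0.14
```

Higher assumed AUCs give narrower intervals because the variance terms shrink as the AUC approaches 1, which is why the lower bound of the planned AUC range drives the worst-case precision.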

Results

Part I (app development) was completed in August 2019. In total, we held 6 patient focus groups, which enrolled 17 stroke/TIA survivors and 4 informal caregivers. Table 3 outlines the characteristics of the 17 patient participants.

Table 3.

Characteristics of 17 Part I participants

| Characteristic | Value |
|---|---|
| Age (y) | 68.8 ± 7.9 |
| Female sex | 5 (29) |
| Race | |
| White | 15 (88) |
| Hispanic or Latino | 1 (6) |
| CHA₂DS₂-VASc score | 4.2 ± 1.3 |
| Body mass index (kg/m²) | 29.2 ± 5.2 |
| Medical characteristics | |
| Atrial fibrillation | 4 (24) |
| Hypertension | 13 (76) |
| Diabetes mellitus | 1 (6) |
| Prior myocardial infarction | 1 (6) |
| Congestive heart failure | 2 (12) |
| Stroke | 14 (82) |
| Transient ischemic attack | 3 (18) |

Values are given as mean ± SD or n (%).

After completing these focus groups, we conducted qualitative analyses of the groups' aggregated preferences and recommendations regarding the interface and functions of the smartphone and smartwatch applications.

We also conducted 2 health care provider focus groups, one with 20 UMMMC Cardiology faculty members and one with 10 UMMMC Neurology faculty members. We solicited feedback on the use of smartwatches in various clinical settings, including arrhythmia detection.

On May 31, 2019, we invited 4 stroke/TIA survivors (age 64 ± 2.8 years; 50% female), 4 providers (1 cardiologist, 1 electrophysiologist, and 2 stroke neurologists), and our engineering team to work together at the Hack-a-thon to refine the user interface, design, and functions of the smartphone and smartwatch apps. Key screens from the smartphone and smartwatch applications deployed in the clinical trial are shown in Figure 3.

Figure 3.

Key Pulsewatch smartphone and smartwatch app screens. AF = atrial fibrillation; HR = heart rate.

Part II, the RCT portion of the study, began on September 3, 2019. To date, we have enrolled 84 stroke/TIA survivors into our trial. Enrollment was halted in spring 2020 due to COVID-19 and resumed in summer 2020. Recruitment is anticipated to be completed by September 2021. We expect study results to be available by the end of 2021.

Discussion

This protocol paper describes a multiphase investigation to assess the accuracy, usability, and adherence of a smartwatch application to detect AF in stroke and TIA survivors. Part I of Pulsewatch describes how we developed our user-centered system. Part II consists of an RCT designed to measure the Pulsewatch system's accuracy in AF detection, as well as various user-reported outcomes and health behaviors.

Currently, mobile health (mHealth) apps are not designed for maximal acceptability across diverse populations, much less for older patients, who tend to be slow adopters of technology, and mHealth interventions rarely apply health behavior theory effectively.42 Pulsewatch development identified app design features that resonate with older adults, a population with an enormous need for effective mHealth interventions. More specifically, there is a great need for mHealth interventions geared toward poststroke patients, particularly interventions that incorporate end-user input. Outpatient rhythm monitoring is critical to averting recurrent strokes, heart failure, and death, but current technologies have severe methodological shortcomings that result in low adherence and high cost, impeding long-term monitoring. The Pulsewatch system is not intended to replace implantable loop recorders (ILRs), the gold standard for long-term AF monitoring; instead, it may act as a bridge from poststroke AF monitoring to ILR implantation in the patients best suited for it. In addition, the Pulsewatch system could offer at least some form of AF monitoring to patients who face barriers to ILR use. Acceptable and accurate technologies for pAF monitoring are needed.43 Forty-five percent of stroke treatment costs are incurred from acute care and monitoring.7 Pulsewatch offers a lower-cost monitor that could accelerate the diagnosis and treatment of pAF in older stroke patients, preventing further strokes and thus decreasing the overall cost of treating stroke.

The aggregate national cost of stroke care is $26 billion annually.44 If Pulsewatch were used by the ∼200,000 adults in the United States who suffer a cryptogenic stroke to extend monitoring to 1 month (from the typical 7 days), we estimate this would result in ∼10,000 fewer strokes annually, at a cost savings of ∼$20 million in care.6,7 Pulsewatch's innovative approach to cardiac monitoring is patient-centric and noninvasive. By incorporating features that enhance its accuracy and acceptability among older patients, Pulsewatch aims to extend the time horizon over which stroke patients can be monitored for pAF, potentially resulting in faster interventions that lead to better outcomes and increased independence in a high-risk population.

Study limitations

The relative racial/ethnic homogeneity of the patients enrolled in Part I (app design) potentially limited our design process because of a lack of diverse input and perspectives; thus, the system will need to be evaluated by a more diverse set of patients in future studies. Another limitation is that 30 days of adherence monitoring might not be long enough to reveal nuanced patterns of adherence to our system, especially if we observe high rates of adherence across all users within this time frame. It is imperative for future studies to recruit from larger, more diverse populations to ensure equitable representation of perspectives in app design, and potentially to extend the duration of monitoring depending on the results of the current study.

Conclusion

We have described a protocol that uses the input of our targeted patient population to build a digital health system to alleviate the patient burden and high cost of poststroke care and monitoring. Furthermore, our protocol describes how to assess the accuracy, usability, and adherence of an AF monitoring system within the poststroke population.

Funding Sources

The Pulsewatch Study is funded by R01HL137734 from the National Heart, Lung, and Blood Institute. Eric Y. Ding’s time is supported by F30HL149335 from the National Heart, Lung, and Blood Institute. Dr McManus’s time is supported by R01HL126911, R01HL137734, R01HL137794, R01HL135219, R01HL136660, U54HL143541, and 1U01HL146382 from the National Heart, Lung, and Blood Institute.

Disclosures

Dr McManus has received honoraria, speaking/consulting fees, or grants from Flexcon, Rose Consulting, Bristol-Myers Squibb, Pfizer, Boston Biomedical Associates, Samsung, Philips, Mobile Sense, CareEvolution, Boehringer Ingelheim, Biotronik, Otsuka Pharmaceuticals, and Sanofi; declares financial support for serving on the Steering Committee for the GUARD-AF study (NCT04126486) and the Advisory Committee for the Fitbit Heart Study (NCT04176926); and is Editor-in-Chief of Cardiovascular Digital Health Journal. The other authors have no disclosures.

Authorship

All authors attest they meet the current ICMJE criteria for authorship.

Patient Consent

All patients provided written informed consent.

Disclaimer

Given his role as Editor-in-Chief, David McManus had no involvement in the peer review of this article and has no access to information regarding its peer review. Full responsibility for the editorial process for this article was delegated to Hamid Ghanbari.

Ethics Statement

The authors designed the study and gathered and analyzed the data in accordance with the Declaration of Helsinki guidelines on human research. The research protocol used in this study was reviewed and approved by the institutional review board.

Footnotes

ClinicalTrials.gov Identifier NCT03761394

References

1. Camm A.J., Kirchhof P., Lip G.Y., et al. ESC Committee for Practice Guidelines. Guidelines for the management of atrial fibrillation: the Task Force for the Management of Atrial Fibrillation of the European Society of Cardiology (ESC). Europace. 2010;12:1360–1420. doi: 10.1093/europace/euq350. Erratum in: Europace. 2011;13:1058.

2. January C.T., et al. 2014 AHA/ACC/HRS guideline for the management of patients with atrial fibrillation: a report of the American College of Cardiology/American Heart Association Task Force on practice guidelines and the Heart Rhythm Society. Circulation. 2014;130:e199–e267. doi: 10.1161/CIR.0000000000000041.

3. Skanes A.C., et al. Focused 2012 update of the Canadian Cardiovascular Society atrial fibrillation guidelines: recommendations for stroke prevention and rate/rhythm control. Can J Cardiol. 2012;28:125–136. doi: 10.1016/j.cjca.2012.01.021.

4. Wolf P.A., Abbott R.D., Kannel W.B. Atrial fibrillation as an independent risk factor for stroke: the Framingham Study. Stroke. 1991;22:983–988. doi: 10.1161/01.str.22.8.983.

5. Samsung. Samsung Simband Documentation. 2016. Available at: https://www.simband.io/documentation/simband-documentation. Accessed July 20, 2021.

6. Fak A.S., et al. Expert panel on cost analysis of atrial fibrillation. Anadolu Kardiyol Derg. 2013;13:26–38. doi: 10.5152/akd.2013.004.

7. Palmer A.J., et al. Overview of costs of stroke from published, incidence-based studies spanning 16 industrialized countries. Curr Med Res Opin. 2005;21:19–26. doi: 10.1185/030079904x17992.

8. Furie K.L., et al. American Heart Association Stroke Council, Council on Cardiovascular Nursing, Council on Clinical Cardiology, and Interdisciplinary Council on Quality of Care and Outcomes Research. Guidelines for the prevention of stroke in patients with stroke or transient ischemic attack: a guideline for healthcare professionals from the American Heart Association/American Stroke Association. Stroke. 2011;42:227–276. doi: 10.1161/STR.0b013e3181f7d043.

9. Lubitz S.A., et al. Atrial fibrillation patterns and risks of subsequent stroke, heart failure, or death in the community. J Am Heart Assoc. 2013;2. doi: 10.1161/JAHA.113.000126.

10. Reiffel J.A. Atrial fibrillation and stroke: epidemiology. Am J Med. 2014;127:e15–e16. doi: 10.1016/j.amjmed.2013.06.002.

11. Kishore A., et al. Detection of atrial fibrillation after ischemic stroke or transient ischemic attack: a systematic review and meta-analysis. Stroke. 2014;45:520–526. doi: 10.1161/STROKEAHA.113.003433.

12. Sanna T., et al. Cryptogenic stroke and underlying atrial fibrillation. N Engl J Med. 2014;370:2478–2486. doi: 10.1056/NEJMoa1313600.

13. Christensen L.M., et al. Paroxysmal atrial fibrillation occurs often in cryptogenic ischaemic stroke. Final results from the SURPRISE study. Eur J Neurol. 2014;21:884–889. doi: 10.1111/ene.12400.

14. Flint A.C., Banki N.M., Ren X., Rao V.A., Go A.S. Detection of paroxysmal atrial fibrillation by 30-day event monitoring in cryptogenic ischemic stroke: the Stroke and Monitoring for PAF in Real Time (SMART) Registry. Stroke. 2012;43:2788–2790. doi: 10.1161/STROKEAHA.112.665844.

15. Choe W.C., et al. A comparison of atrial fibrillation monitoring strategies after cryptogenic stroke (from the Cryptogenic Stroke and Underlying AF Trial). Am J Cardiol. 2015;116:889–893. doi: 10.1016/j.amjcard.2015.06.012.

16. Bernstein R.A., et al. Effect of long-term continuous cardiac monitoring vs usual care on detection of atrial fibrillation in patients with stroke attributed to large- or small-vessel disease: the STROKE-AF randomized clinical trial. JAMA. 2021;325:2169–2177. doi: 10.1001/jama.2021.6470.

17. Smith A. Older Adults and Technology Use. Pew Research Center. Available at: http://www.pewinternet.org/2014/04/03/older-adults-and-technology-use. Accessed April 15, 2021.

18. Mobile Technology Fact Sheet. Pew Research Center. Available at: https://www.pewresearch.org/internet/fact-sheet/mobile/. Accessed April 25, 2021.

19. Ding E.Y., Han D., Whitcomb C., et al. Accuracy and usability of a novel algorithm for detection of irregular pulse using a smartwatch among older adults: observational study. JMIR Cardio. 2019;3. doi: 10.2196/13850.

20. Dörr M., Nohturfft V., Brasier N., et al. The WATCH AF Trial: SmartWATCHes for detection of atrial fibrillation. JACC Clin Electrophysiol. 2018;5:199–208. doi: 10.1016/j.jacep.2018.10.006.

21. Guo Y., Wang H., Zhang H., et al. Mobile photoplethysmographic technology to detect atrial fibrillation. J Am Coll Cardiol. 2019;74:2365–2375. doi: 10.1016/j.jacc.2019.08.019.

22. Perez M.V., Mahaffey K.W., Hedlin H., et al. Apple Heart Study Investigators. Large-scale assessment of a smartwatch to identify atrial fibrillation. N Engl J Med. 2019;381:1909–1917. doi: 10.1056/NEJMoa1901183.

23. Davis F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989;13:319–340.

24. De Vito Dabbs A., et al. User-centered design and interactive health technologies for patients. Comput Inform Nurs. 2009;27:175–183. doi: 10.1097/NCN.0b013e31819f7c7c.

25. Spitzer R.L., Kroenke K., Williams J.B.W., Löwe B. A brief measure for assessing generalized anxiety disorder: the GAD-7. Arch Intern Med. 2006;166:1092–1097. doi: 10.1001/archinte.166.10.1092.

26. Spitzer R.L., Kroenke K., Williams J.B. Validation and utility of a self-report version of PRIME-MD: the PHQ primary care study. Primary Care Evaluation of Mental Disorders. Patient Health Questionnaire. JAMA. 1999;282:1737–1744. doi: 10.1001/jama.282.18.1737.

27. Hou T., et al. Assessing the reliability of the short-form 12 (SF-12) health survey in adults with mental health conditions: a report from the Wellness Incentive and Navigation (WIN) study. Health Qual Life Outcomes. 2018;16:34. doi: 10.1186/s12955-018-0858-2.

28. Wolf S., et al. Development and validation of the consumer health activation index. Med Decis Making. 2018;38:334–343. doi: 10.1177/0272989X17753392.

29. Nasreddine Z.S., et al. The Montreal Cognitive Assessment, MoCA: a brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53:695–699. doi: 10.1111/j.1532-5415.2005.53221.x.

30. Folstein M.F., Folstein S.E., McHugh P.R. ‘Mini-mental state’. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

31. McLennan S.N., Mathias J.L., Brennan L.C., Stewart S. Validity of the Montreal Cognitive Assessment (MoCA) as a screening test for mild cognitive impairment (MCI) in a cardiovascular population. J Geriatr Psychiatry Neurol. 2011;24:33–38. doi: 10.1177/0891988710390813.

32. Lubben J., et al. Performance of an abbreviated version of the Lubben Social Network Scale among three European community-dwelling older adult populations. Gerontologist. 2006;46:503–513. doi: 10.1093/geront/46.4.503.

33. Sherbourne C.D., Stewart A.L. The MOS social support survey. Soc Sci Med. 1991;32:705–714. doi: 10.1016/0277-9536(91)90150-b.

34. Katon W., Lin E.H.B., Kroenke K. The association of depression and anxiety with medical symptom burden in patients with chronic medical illness. Gen Hosp Psychiatry. 2007;29:147–155. doi: 10.1016/j.genhosppsych.2006.11.005.

35. Duncan P.W., et al. Development of a comprehensive assessment toolbox for stroke. Clin Geriatr Med. 1999;15:885–915.

36. Stafford L., Berk M., Jackson H.J. Validity of the Hospital Anxiety and Depression Scale and Patient Health Questionnaire-9 to screen for depression in patients with coronary artery disease. Gen Hosp Psychiatry. 2007;29:417–424. doi: 10.1016/j.genhosppsych.2007.06.005.

37. Sarkar U., Fisher L., Schillinger D. Is self-efficacy associated with diabetes self-management across race/ethnicity and health literacy? Diabetes Care. 2006;29:823–829. doi: 10.2337/diacare.29.04.06.dc05-1615.

38. Scherer Y., Bruce S. Knowledge, attitudes, and self-efficacy and compliance with medical regimen, number of emergency department visits, and hospitalizations in adults with asthma. Heart Lung. 2001;30:250–257. doi: 10.1067/mhl.2001.116013.

39. Kripalani S., Risser J., Gatti M.E., Jacobson T.A. Development and evaluation of the Adherence to Refills and Medications Scale (ARMS) among low-literacy patients with chronic disease. Value Health. 2009;12:118–123. doi: 10.1111/j.1524-4733.2008.00400.x.

40. Hanley J., McNeil B. The meaning and use of the area under a receiver operating characteristic (ROC) curve. Radiology. 1982;143:29–36. doi: 10.1148/radiology.143.1.7063747.

41. Lee J., et al. Atrial fibrillation detection using an iPhone 4S. IEEE Trans Biomed Eng. 2013;60:203–206. doi: 10.1109/TBME.2012.2208112.

42. Riley W.T., et al. Health behavior models in the age of mobile interventions: are our theories up to the task? Transl Behav Med. 2011;1:53–71. doi: 10.1007/s13142-011-0021-7.

43. Benjamin E.J., et al. Prevention of atrial fibrillation: report from a National Heart, Lung, and Blood Institute workshop. Circulation. 2009;119:606–618. doi: 10.1161/CIRCULATIONAHA.108.825380.

44. Kim M.H., Johnston S.S., Chu B.-C., Dalal M.R., Schulman K.L. Estimation of total incremental health care costs in patients with atrial fibrillation in the United States. Circ Cardiovasc Qual Outcomes. 2011;4:313–320. doi: 10.1161/CIRCOUTCOMES.110.958165.