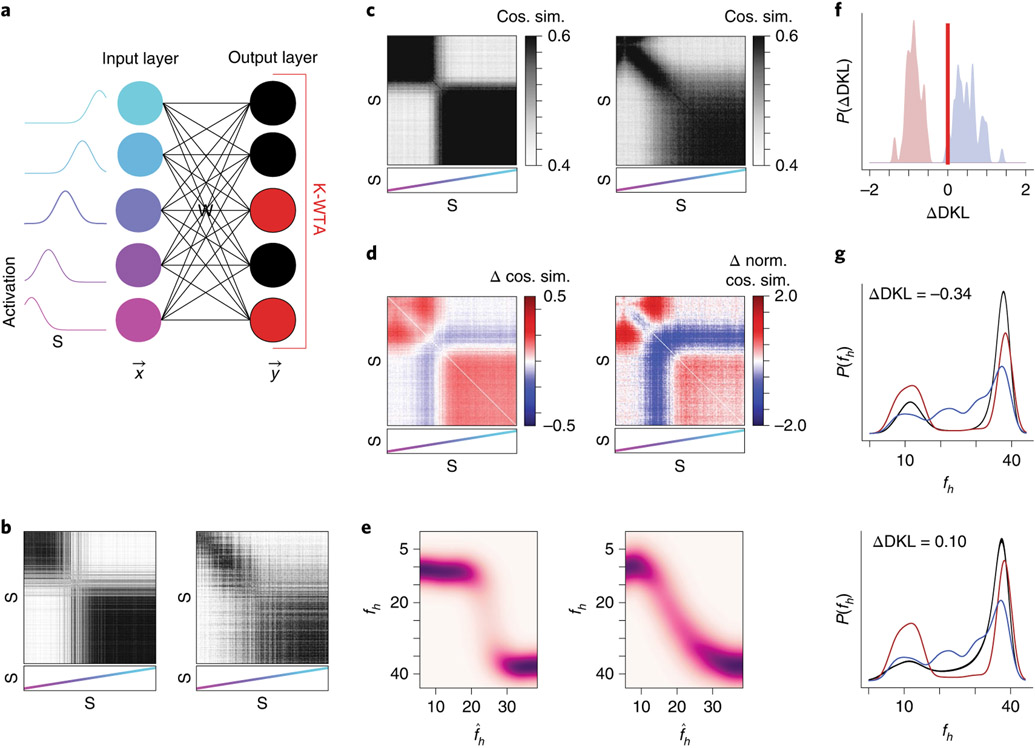

Fig. 7 | Neural context discrimination is well explained by associative-learning models.

a, Schematic of the computational model. The input layer contains neurons that form a basis for representing the stimulus using radial basis functions. Input activations are linearly combined via the weight matrix W, and the output layer is thresholded by a k-winners-take-all (KWTA) mechanism to obtain the output activation. W is updated after each stimulus presentation using Hebbian learning. Models are trained on trials drawn randomly from either the rare morph prior or the frequent morph prior. b, Left: trial-by-trial similarity matrix, as in Fig. 3a, for an example model trained under the rare morph condition. Right: same as the left, but for an example model trained under the frequent morph condition. c, Average trial-by-trial similarity matrices across model instantiations (n = 50) for models trained under the rare morph (left) and frequent morph (right) conditions. d, Left: the average rare morph similarity matrix minus the average frequent morph similarity matrix. Right: same as the left, but the similarity matrix for each model is z-scored before averaging. e, Q estimates for accumulated rare morph trained models (left) and frequent morph trained models (right). f, Smoothed histogram of ΔDKL values for rare morph (maroon) and frequent morph (blue) trained models. Red line indicates zero. g, Reconstructed priors for rare morph (top, black, mean ± s.e.m.) and frequent morph (bottom, black, mean ± s.e.m.) trained models. Note that these priors are recovered without supervision. Ideal rare morph (maroon) and frequent morph (blue) priors are shown for reference. ΔDKL is computed for the averaged reconstructed prior.
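
The model architecture described in panel a can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the layer sizes, basis-function width, learning rate, value of k, row normalization of W, and the use of cosine similarity for the trial-by-trial matrix are all placeholder choices, and the two samplers in `sample_morphs` are hypothetical stand-ins for the rare and frequent morph priors, whose actual shapes are not given in this caption.

```python
import numpy as np

def rbf_encode(stimulus, centers, width):
    """Input-layer activation: one Gaussian radial basis function per neuron."""
    return np.exp(-((stimulus - centers) ** 2) / (2.0 * width ** 2))

def kwta(drive, k):
    """k-winners-take-all: keep the k largest drives, set the rest to zero."""
    out = np.zeros_like(drive)
    winners = np.argpartition(drive, -k)[-k:]
    out[winners] = drive[winners]
    return out

def hebbian_step(W, x, y, lr):
    """Hebbian update (dW proportional to y x^T).

    Row normalization is an assumption added here to keep weights bounded.
    """
    W = W + lr * np.outer(y, x)
    norms = np.linalg.norm(W, axis=1, keepdims=True)
    return W / np.maximum(norms, 1e-12)

def sample_morphs(condition, n_trials, rng):
    """Hypothetical placeholder priors over morph values in [0, 1]."""
    if condition == "rare":
        # Assumed shape: mass concentrated near the two extremes.
        ends = rng.integers(0, 2, size=n_trials).astype(float)
        return np.clip(ends + rng.normal(0.0, 0.1, size=n_trials), 0.0, 1.0)
    # Assumed shape: intermediate morphs are as common as the extremes.
    return rng.uniform(0.0, 1.0, size=n_trials)

def train(stimuli, n_in=50, n_out=100, k=10, width=0.1, lr=0.05, seed=0):
    """Present stimuli one at a time, updating W after each presentation."""
    rng = np.random.default_rng(seed)
    centers = np.linspace(0.0, 1.0, n_in)
    W = rng.random((n_out, n_in))
    W /= np.linalg.norm(W, axis=1, keepdims=True)
    outputs = []
    for s in stimuli:
        x = rbf_encode(s, centers, width)   # input layer
        y = kwta(W @ x, k)                  # linear map, then KWTA threshold
        W = hebbian_step(W, x, y, lr)       # update after each presentation
        outputs.append(y)
    return W, np.array(outputs)

def similarity_matrix(outputs):
    """Trial-by-trial cosine similarity of output activations (assumed metric)."""
    norms = np.linalg.norm(outputs, axis=1, keepdims=True)
    unit = outputs / np.maximum(norms, 1e-12)
    return unit @ unit.T

rng = np.random.default_rng(1)
stimuli = sample_morphs("rare", 200, rng)
W, outputs = train(stimuli)
S = similarity_matrix(outputs[np.argsort(stimuli)])  # trials sorted by morph value
```

Sorting trials by morph value before computing `S` gives a matrix in which block structure along the diagonal would indicate discrete context representations; repeating the run over many seeds and averaging would correspond to the kind of across-model summary shown in panel c.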