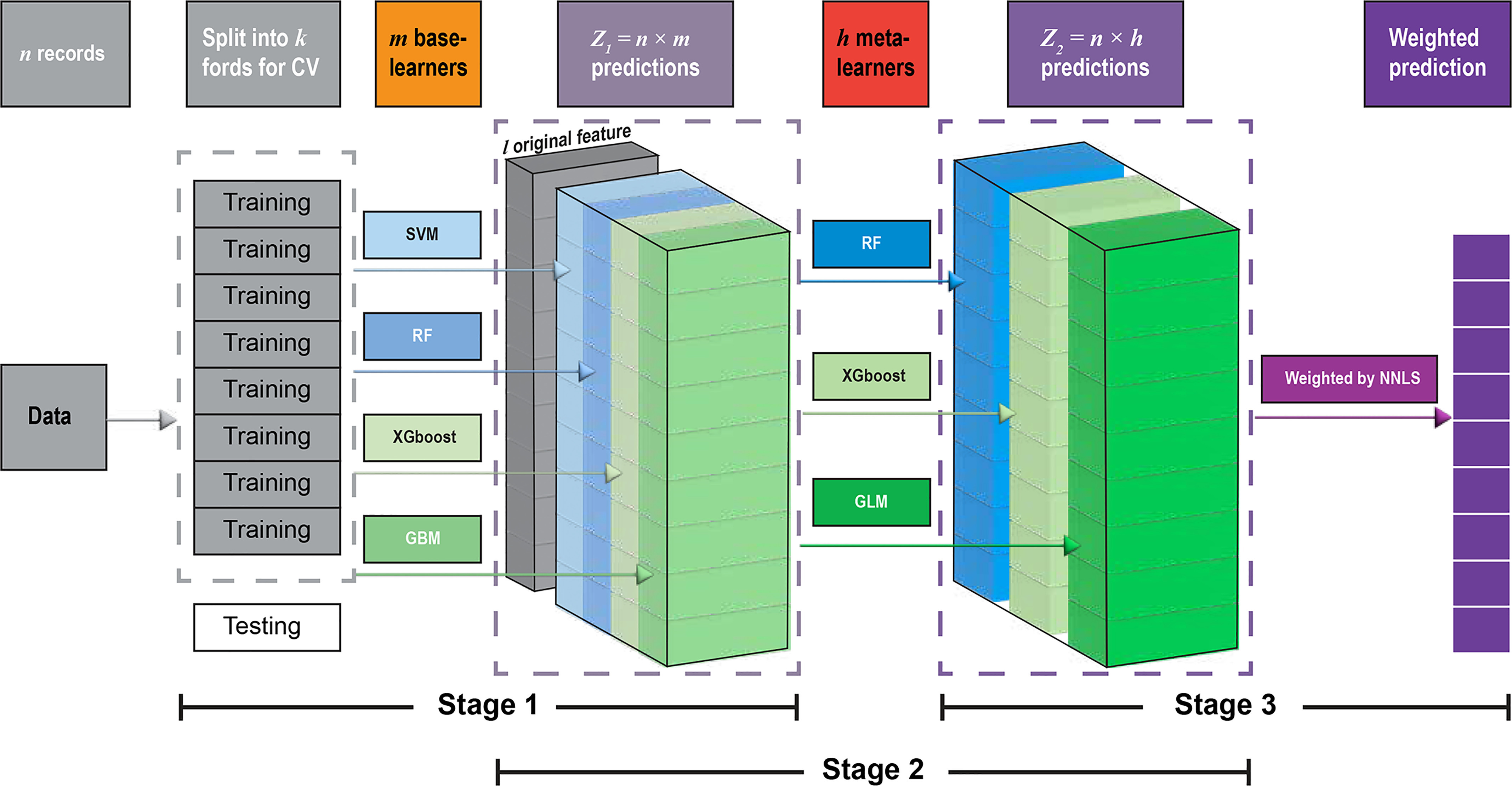

Figure 1.

The framework of the DEML algorithm. The predictions of the base models are combined into a matrix with one row per record in the full data set and one column per base model; l represents the original features; h denotes the number of meta-models. The predictions of the meta-models are combined into a second matrix with one row per record and h columns. This second matrix is the input to the NNLS algorithm, which yields the weights of the meta-models and, from them, the final prediction. k is the number of folds for CV, and the same validation rows are selected for the base and meta-models. Note: CV, cross-validation analysis; DEML, the three-stage stacked deep ensemble machine learning method; GBM, gradient boosting machine; GLM, generalized linear model; NNLS, nonnegative least squares algorithm; RF, random forest; SVM, support vector machine; XGBoost, extreme gradient boosting.
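The last stage in the caption, weighting the meta-model predictions by nonnegative least squares, can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `nnls_weights` and `ensemble_predict`, the toy matrix `Z_meta`, and the target `y` are hypothetical, and the solver is a plain cyclic coordinate descent standing in for a library NNLS routine such as `scipy.optimize.nnls`. With a fixed number of sweeps it only approximates the NNLS solution when the prediction columns are strongly correlated.

```python
def nnls_weights(Z, y, n_iter=500):
    """Approximately minimise ||Z w - y||^2 subject to w >= 0.

    Z is a list of rows (one per record, one column per meta-model).
    Uses cyclic coordinate descent, an assumed stand-in for a library solver.
    """
    n_rows, n_cols = len(Z), len(Z[0])
    w = [0.0] * n_cols
    residual = list(y)  # equals y - Z w, and w starts at zero
    col_sq = [sum(Z[i][j] ** 2 for i in range(n_rows)) for j in range(n_cols)]
    for _ in range(n_iter):
        for j in range(n_cols):
            if col_sq[j] == 0.0:
                continue  # an all-zero column cannot improve the fit
            # Best value of w[j] with the other weights fixed, clipped at zero.
            grad = sum(Z[i][j] * residual[i] for i in range(n_rows))
            new_wj = max(0.0, w[j] + grad / col_sq[j])
            delta = new_wj - w[j]
            if delta != 0.0:
                for i in range(n_rows):
                    residual[i] -= delta * Z[i][j]
                w[j] = new_wj
    return w


def ensemble_predict(Z, w):
    """Final prediction: weighted sum of the meta-model predictions."""
    return [sum(z * wj for z, wj in zip(row, w)) for row in Z]


# Hypothetical toy data: 5 records, h = 3 meta-model prediction columns.
Z_meta = [
    [1.0, 1.2, 0.5],
    [2.0, 1.9, 2.5],
    [3.0, 3.3, 2.0],
    [4.0, 3.8, 4.5],
    [5.0, 5.1, 4.0],
]
y = [1.1, 2.0, 3.1, 3.9, 5.0]

weights = nnls_weights(Z_meta, y)
final_prediction = ensemble_predict(Z_meta, weights)
```

In a full pipeline, the rows of `Z_meta` would be out-of-fold predictions from the k-fold CV rather than hand-written numbers, so that the weights are not fitted on predictions the meta-models made for their own training rows.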