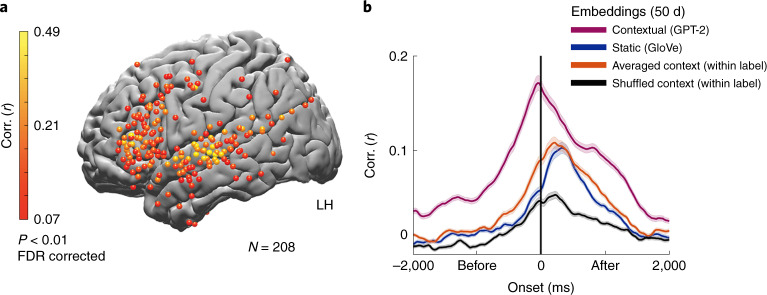

Fig. 6. Contextual (GPT-2) embeddings improve the modeling of neural responses before word onset.

a, Peak correlation between predicted and actual word responses for the contextual (GPT-2) embeddings. Using contextual embeddings significantly improved the encoding model’s ability to predict the neural signals for unseen words across many electrodes. b, Encoding model performance for contextual embeddings (GPT-2) aggregated across the 160 electrodes with significant encoding for GloVe (Fig. 3c): contextual embeddings (purple), static embeddings (GloVe, blue), contextual embeddings averaged across all occurrences of a given word (orange) and contextual embeddings shuffled across the context-specific occurrences of a given word (black). The shaded regions indicate the s.e. of the encoding models across electrodes.
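
The two control conditions in panel b (averaging and shuffling contextual embeddings within a word) can be illustrated with a minimal sketch. The caption does not give the exact procedure, so the code below is an assumption about how such controls might be built, not the authors' implementation. The function names `average_within_word` and `shuffle_within_word` and the toy data are hypothetical.

```python
import numpy as np

def average_within_word(words, embeddings):
    """Replace each token's embedding with the mean over all occurrences of that word.

    This removes context-specific variation, giving one vector per word type.
    """
    words = np.asarray(words)
    out = np.empty_like(embeddings, dtype=float)
    for w in np.unique(words):
        idx = np.flatnonzero(words == w)
        out[idx] = embeddings[idx].mean(axis=0)
    return out

def shuffle_within_word(words, embeddings, rng):
    """Permute embeddings among the occurrences of the same word.

    Each token keeps an embedding of the correct word, but from a
    randomly chosen (possibly different) context.
    """
    words = np.asarray(words)
    out = np.array(embeddings, dtype=float)
    for w in np.unique(words):
        idx = np.flatnonzero(words == w)
        out[idx] = embeddings[rng.permutation(idx)]
    return out

# Toy data (hypothetical): 5 tokens, 4-dimensional "contextual" embeddings.
words = ["the", "cat", "the", "dog", "the"]
rng = np.random.default_rng(0)
emb = rng.normal(size=(5, 4))

avg = average_within_word(words, emb)
shuf = shuffle_within_word(words, emb, rng)
```

In this sketch, words that occur only once are unchanged by both controls, so any difference in encoding performance would be driven by words with multiple occurrences.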