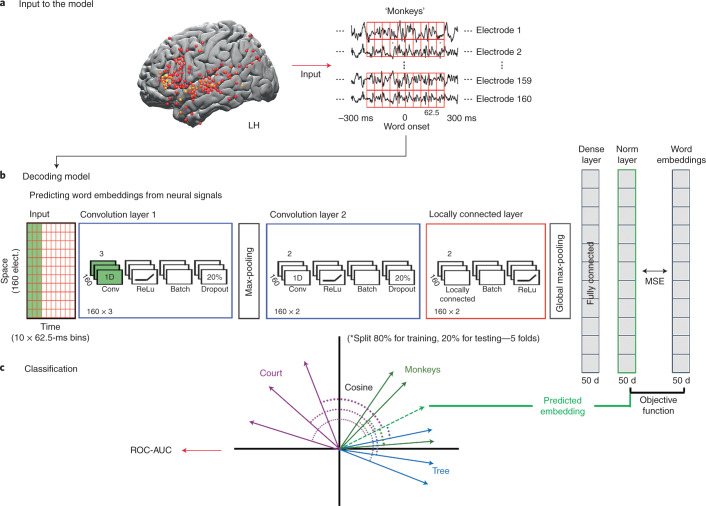

Fig. 7. Deep nonlinear decoding model used to predict words from neural responses before and after word onset.

a, Neural data from left-hemisphere electrodes with significant encoding model performance using GloVe embeddings were used as input to the decoding model. For each fold, electrode selection was performed on the 80% of the data not used for testing the model. The stimulus was segmented into individual words and aligned to the brain signal at each lag. b, Schematic of the feed-forward deep neural network model that learns to project the neural signals for each word into the arbitrary embedding, static semantic embedding (GloVe) or contextual embedding (GPT-2) space (Appendix I). The input (shown here as a 160 × 10 matrix) changes dimensions across the five folds, depending on the number of significant electrodes in each fold. The model was trained to minimize the mean squared error (MSE) when mapping the neural signals into the embedding space. c, The decoding model was evaluated using a word classification task. Classification quality was quantified with ROC-AUC scores computed in the embedding space used for decoding. This enabled us to assess how much information about specific words can be extracted from the neural activity via the linguistic embedding space.
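
The evaluation in panel c can be illustrated with a minimal sketch. The caption does not spell out the scoring procedure, so everything here is an assumption made for illustration: predicted embeddings are compared with each vocabulary word's embedding by cosine similarity, and a one-vs-rest ROC-AUC is computed per word from those similarities. The function names (`cosine_scores`, `roc_auc`, `word_classification_auc`) and the synthetic data are hypothetical and stand in for the decoder outputs and the GloVe or GPT-2 embeddings.

```python
import numpy as np

def cosine_scores(pred, vocab_emb):
    """Cosine similarity between each predicted vector and each word embedding."""
    p = pred / np.linalg.norm(pred, axis=1, keepdims=True)
    v = vocab_emb / np.linalg.norm(vocab_emb, axis=1, keepdims=True)
    return p @ v.T  # shape: (n_samples, n_words)

def roc_auc(scores, is_positive):
    """One-vs-rest ROC-AUC: chance that a positive outscores a negative (ties count 0.5)."""
    pos = scores[is_positive]
    neg = scores[~is_positive]
    if len(pos) == 0 or len(neg) == 0:
        return np.nan  # AUC is undefined without both classes
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def word_classification_auc(pred, vocab_emb, labels):
    """Per-word ROC-AUC from similarity of predictions to each word's embedding."""
    scores = cosine_scores(pred, vocab_emb)
    n_words = vocab_emb.shape[0]
    return np.array([roc_auc(scores[:, w], labels == w) for w in range(n_words)])

# Synthetic stand-ins (assumed sizes, not taken from the paper):
# 5 words, 50-dimensional embeddings, 200 test samples.
rng = np.random.default_rng(0)
vocab_emb = rng.normal(size=(5, 50))
labels = np.arange(200) % 5
# Pretend decoder output: the true embedding plus small noise.
pred = vocab_emb[labels] + 0.1 * rng.normal(size=(200, 50))

aucs = word_classification_auc(pred, vocab_emb, labels)
print(aucs.round(3))
```

Under this assumed scheme, an AUC near 0.5 for a word would mean the predicted embeddings carry no usable information about it, while values approaching 1 would mean the word is reliably separable from the rest of the vocabulary.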