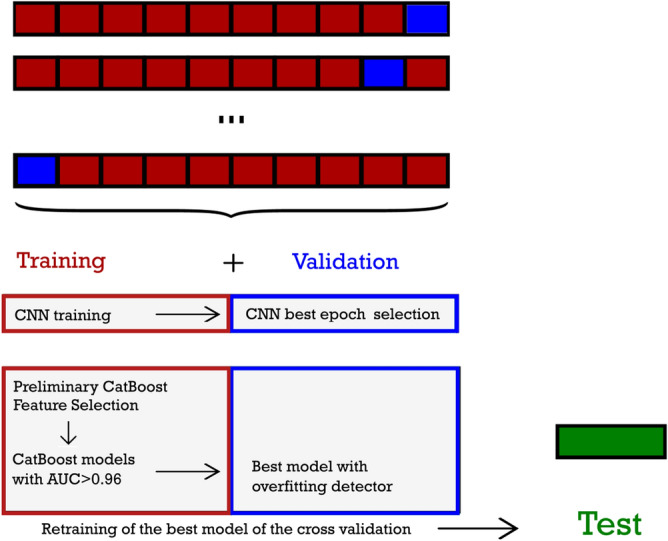

Figure 6.

A sketch of the cross-validation procedure with feature selection. The dataset is split into a test set, used only for final evaluation, and a training/validation set, used for CNN training and evaluation, deep-learned feature extraction, feature selection and hyperparameter tuning. Ten-fold cross-validation is applied to the training/validation set. The CNN is trained on the training set (upper left red box) and evaluated for hyperparameter selection on the validation set (upper right blue box). The extracted features are combined with non-imaging features and selected on the training set with a preliminary model (lower left red box: Preliminary CatBoost+Feature Selection). The hyperparameters of the preliminary model are chosen by Bayesian optimization with Optuna, and feature selection is performed with BorutaSHAP. CatBoost hyperparameter tuning on the selected feature set is performed in two steps: first with Bayesian optimization, to narrow the hyperparameter search space (lower left red box: CatBoost models with AUC), and then with the overfitting detector (lower right blue box: best model in validation set). The best model from cross-validation is retrained on the combined training/validation set and evaluated on the test set.
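The data flow in the figure can be illustrated with a minimal, standard-library-only sketch. It assumes that CatBoost, Optuna and BorutaSHAP are abstracted behind caller-supplied callbacks. The names `holdout_split`, `k_fold`, `cv_with_feature_selection`, `select_features`, `fit` and `score` are hypothetical and not taken from the original work, and any hyperparameter tuning is assumed to happen inside `fit`. The sketch shows two properties of the procedure: the test set is touched only once, at the end, and feature selection only ever sees the training fold. It also assumes that "best model" means the configuration (here, the selected feature set) of the fold with the highest validation score.

```python
import random
from typing import Callable, Iterator, List, Sequence, Tuple

Indices = List[int]


def holdout_split(n: int, test_fraction: float, seed: int = 0) -> Tuple[Indices, Indices]:
    """Shuffle sample indices and set aside a test set used only for final evaluation."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = int(round(n * test_fraction))
    return idx[n_test:], idx[:n_test]  # (training/validation, test)


def k_fold(indices: Sequence[int], k: int = 10) -> Iterator[Tuple[Indices, Indices]]:
    """Yield (training, validation) index pairs; every sample is validated exactly once."""
    folds = [list(indices[i::k]) for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


def cv_with_feature_selection(
    n: int,
    select_features: Callable[[Indices], List[str]],  # hypothetical stand-in for BorutaSHAP
    fit: Callable[[Indices, List[str]], object],  # hypothetical stand-in for tuned CatBoost
    score: Callable[[object, Indices, List[str]], float],  # e.g. AUC on the given indices
    k: int = 10,
    test_fraction: float = 0.2,
    seed: int = 0,
) -> Tuple[List[str], float]:
    trainval, test = holdout_split(n, test_fraction, seed)
    best = None  # (validation score, selected features)
    for train, val in k_fold(trainval, k):
        feats = select_features(train)  # selection uses the training fold only
        model = fit(train, feats)
        val_score = score(model, val, feats)
        if best is None or val_score > best[0]:
            best = (val_score, feats)
    best_feats = best[1]
    final_model = fit(trainval, best_feats)  # retrain on combined training/validation set
    test_score = score(final_model, test, best_feats)  # the only use of the test set
    return best_feats, test_score
```

In practice `fit` would wrap the two-step CatBoost tuning and `select_features` the preliminary model plus BorutaSHAP. Keeping them as callbacks makes the leakage boundaries of the procedure explicit without depending on those libraries.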