Abstract

We propose using an open data set of doctor-patient interactions to develop artificial empathy based on facial emotion recognition. Facial emotion recognition allows doctors to analyze patients' emotions so that they can reach out to their patients through empathic care. However, face recognition data sets are often difficult to acquire, and many researchers must work with small samples. Further, sharing medical images or videos has generally not been possible because it may violate patient privacy. Deepfake technology is a promising approach to deidentifying video recordings of patients’ clinical encounters. Such technology can revolutionize the implementation of facial emotion recognition by replacing a patient's face in an image or video with an unrecognizable face that has a facial expression similar to that of the original. This technology will further enhance the potential use of artificial empathy in helping doctors provide empathic care and build good doctor-patient therapeutic relationships, which may result in better patient satisfaction and adherence to treatment.

Keywords: artificial empathy, deepfakes, doctor-patient relationship, face emotion recognition, artificial intelligence, facial recognition, facial emotion recognition, medical images, patient, physician, therapy

Introduction

Good doctor-patient communication is one of the key requirements of building a successful, therapeutic doctor-patient relationship [1]. This type of communication enables physicians to provide better-quality care that may impact patients’ health. Studies on good doctor-patient communication have demonstrated a strong positive correlation between physician communication skills and patient satisfaction, which is likely associated with patients’ adherence to treatment; their experience of care; and, consequently, improved clinical outcomes [2-5].

Good doctor-patient communication requires doctors to understand patients’ perspectives through both verbal conversation and nonverbal behaviors (eg, posture, gesture, eye contact, and facial expression) [6,7]. Nonverbal communication is very important in building a good doctor-patient relationship because it conveys more expressive and meaningful messages than verbal conversation does [8]. One study indicates that nonverbal messages account for up to 90% of the messages delivered in human interactions [6]. Another study reports that more than half of outpatient clinic patients believe that positive nonverbal behaviors indicate that a doctor is more attentive toward them, which leads to better patient satisfaction and adherence to treatment [8].

Although several studies have reported that human nonverbal behaviors are significantly associated with patient satisfaction and compliance with a treatment plan, physicians are often unaware of nonverbal messages [6]. Doctors should be more aware of their own nonverbal behaviors because patients are cognizant of them. Doctors also need to recognize and evaluate both patients’ nonverbal behaviors and their own nonverbal behaviors toward patients.

Artificial intelligence (AI) offers great potential for exploring nonverbal communication in doctor-patient encounters [9]. For example, AI may help a doctor become more empathic by analyzing human facial expressions through emotion recognition. Once an emotionally intelligent AI identifies an emotion, it can guide a doctor to express more empathy based on each patient’s unique emotional needs [10].

Empathy refers to the ability to understand or feel what another person is experiencing, and showing empathy may lead to better behavioral outcomes [9]. Empathy can be learned, and the use of AI technology introduces a promising approach to incorporating artificial empathy in the doctor-patient therapeutic relationship [11]. However, human emotions are very complex. An emotionally intelligent AI should learn a range of emotions (ie, those that patients experience) from facial expressions, voices, and physiological signals to empathize with human emotions [12]. These emotions can be captured by using various modalities, such as video, audio, text, and physiological signals [13].

Among all human communication channels, facial expressions are recognized as the most essential and influential [14-16]. The human face can express various thoughts, emotions, and behaviors [15], and it conveys important aspects of interpersonal communication and nonverbal expression in social interactions [17,18]. In terms of the information available to emotion recognition technology, facial expressions convey 55% of the emotional expression transmitted in multimodal human interactions, whereas verbal information, text communication, and physiological signals convey only 20%, 15%, and 10%, respectively [19].

Many researchers have studied facial expressions by using automatic facial emotion recognition (FER) to gain a better understanding of the human emotions linked with empathy [20-24]. They have proposed various machine learning algorithms, such as support vector machines, Bayesian belief networks, and neural network models, for recognizing and describing emotions based on facial expressions recorded in images or videos [20-22]. Although a growing body of literature addresses machine learning and deep learning methods for automatically extracting emotions from the human face, developing a highly accurate FER system requires large amounts of training data and substantial computational resources [21]. In addition, the data set must include diverse facial views in terms of angles, frame rates, races, and genders, among other factors [21].

Many public data sets are available for FER [25]. However, most of them are not sufficient for supporting research on doctor-patient interactions. Creating our own medical data sets is also often impractical because the process is expensive and time consuming [26]. Moreover, researchers often struggle to acquire sufficient data for training a face recognition model due to privacy concerns, and data sharing and the pooling of medical images or videos are generally not possible because these approaches may violate patient privacy. Herein, we present our study on an emerging AI technology known as deepfakes, which enables face deidentification in recorded videos of patients’ clinical encounters. This technology can revolutionize FER by replacing patients’ faces in images or videos with unrecognizable faces, thereby anonymizing patients. This could protect patients’ privacy in clinical encounter videos and make medical video data sharing more feasible. Moreover, an open clinical encounter video data set can promote more in-depth research within the academic community. Thus, deepfake technology will further enhance the clinical application of artificial empathy.

Methods

Human FER

Human FER plays a significant role in understanding how people communicate nonverbally with others [19]. It has attracted the interest of researchers in various fields because of its advantages over other forms of emotion recognition [22]. Because it is not limited to human-computer or human-robot interactions, facial expression analysis has become a popular research topic in various health care areas, such as the diagnosis or assessment of cognitive impairment (eg, autism spectrum disorders in children), depression monitoring, pain monitoring in Parkinson disease, and clinical communication in doctor-patient consultations [27].

The main objective of FER is to accurately classify facial expressions according to a person’s emotional state [21]. The classical FER approach is usually divided into three major stages: (1) facial feature detection, (2) feature extraction, and (3) emotion recognition [21,28]. However, traditional FER has been reported to perform poorly at extracting facial expressions in uncontrolled environments with diverse facial views [21,28]. In contrast, recent studies using deep learning–based FER approaches have achieved higher accuracy than traditional FER [20-22].
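
To make the three classical stages concrete, the Python sketch below chains them together. It is a toy illustration under stated assumptions, not the system used in this study: stage 1 is a stub that assumes landmark coordinates are already available, stage 2 computes a few hypothetical geometric features normalized by inter-ocular distance, and stage 3 uses a nearest-centroid rule as a stand-in for the support vector machines or neural networks cited above. All landmark names and centroid values are illustrative.

```python
import math
from typing import Dict, List, Tuple

Point = Tuple[float, float]

def detect_landmarks(frame: Dict[str, Point]) -> Dict[str, Point]:
    """Stage 1 (stub): a real system would run a face and landmark detector here.
    In this sketch each frame is already a dict of named landmark coordinates."""
    return frame

def extract_features(lm: Dict[str, Point]) -> List[float]:
    """Stage 2: simple geometric features, normalized by inter-ocular distance
    so that they do not depend on the size of the face in the image."""
    iod = math.dist(lm["left_eye"], lm["right_eye"])
    return [
        math.dist(lm["mouth_left"], lm["mouth_right"]) / iod,  # mouth width
        math.dist(lm["upper_lip"], lm["lower_lip"]) / iod,     # mouth opening
        math.dist(lm["left_brow"], lm["left_eye"]) / iod,      # brow height
    ]

def classify(features: List[float], centroids: Dict[str, List[float]]) -> str:
    """Stage 3: nearest-centroid rule, a stand-in for an SVM or neural network."""
    return min(centroids, key=lambda label: math.dist(features, centroids[label]))

# Hypothetical class centroids: (mouth width, mouth opening, brow height).
CENTROIDS = {
    "neutral": [0.80, 0.05, 0.30],
    "happiness": [1.10, 0.15, 0.30],
    "surprise": [0.75, 0.60, 0.55],
}

# Hypothetical landmarks for one frame, in pixel coordinates.
frame = {
    "left_eye": (30.0, 40.0), "right_eye": (70.0, 40.0),
    "mouth_left": (28.0, 80.0), "mouth_right": (72.0, 80.0),
    "upper_lip": (50.0, 78.0), "lower_lip": (50.0, 84.0),
    "left_brow": (30.0, 28.0),
}

label = classify(extract_features(detect_landmarks(frame)), CENTROIDS)
print(label)  # happiness
```

Deep learning–based FER typically replaces stages 2 and 3 (and often stage 1) with a single network that learns features directly from pixels.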

Deepfake Technology

The rapid growth of computer vision and deep learning technology has driven the recent emergence of deepfakes (from "deep learning" and "fake"), which can automatically forge images and videos that humans cannot easily identify as fake [29-31]. Deepfake techniques can generate unrecognizable images of a person’s face and can alter or swap a person’s face in existing images and videos with another face that exhibits the same expressions as the original [29]. Deepfakes have been used for negative purposes, such as creating controversial content about celebrities, politicians, companies, and even private individuals to damage their reputations [30]. Although the harmful effects of deepfake technology have raised public concerns, the technology also has advantages. For example, it can provide privacy protection in some critical medical applications, such as face deidentification for patients [32]. Further, although deepfake technology can easily manipulate the low-level semantics of visual and audio features, a recent study suggested that it might be difficult for deepfake technology to forge the high-level semantic features of human emotions [31].

Deepfake technology is mainly developed by using deep learning, an AI-based method for training deep neural networks [29]. The most popular approach to implementing deepfake techniques is based on the generative adversarial network (GAN) model [33,34]. Deepfakes come in several types, including photo, audio, video, and audio-video deepfakes.
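
For orientation, the standard GAN training objective is a two-player minimax game between a generator $G$, which maps random noise $z$ to synthetic samples, and a discriminator $D$, which estimates the probability that a sample is real. The generic objective is shown below; specific deepfake tools may use different or additional loss terms, so this should be read as background rather than as the loss used in this study.

```latex
\min_{G}\max_{D}\; V(D,G)
  = \mathbb{E}_{x \sim p_{\text{data}}}\!\left[\log D(x)\right]
  + \mathbb{E}_{z \sim p_{z}}\!\left[\log\bigl(1 - D(G(z))\bigr)\right]
```

The discriminator is trained to increase $V$ by separating real from generated samples, while the generator is trained to decrease it by producing samples the discriminator accepts as real.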

Data Set

To simulate how deepfake technology enables face deidentification for recorded videos of doctor-patient clinical encounters, we recruited 348 adult patients and 4 doctors from Taipei Municipal Wanfang Hospital and Taipei Medical University Hospital between March and December 2019. After excluding video data from 21 patients due to video damage, we retained video data from 327 patients. The data set focused on interactions between doctors and patients in dermatology outpatient clinics. All subjects in the data set were from the Taiwanese population.

The FER System in the Deepfake Model Setup

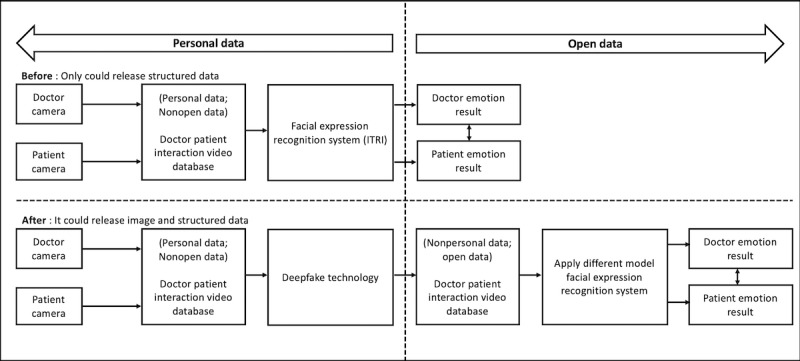

Figure 1 illustrates the workflow of the FER system before and after the introduction of deepfake technology. First, we created synchronized recordings by using 2 cameras to capture doctor-patient interactions in the dermatology outpatient clinic. We assumed that the face was the most relevant and accessible channel for nonverbal communication in health care [6]. Therefore, we used a facial expression recognition system developed by the Industrial Technology Research Institute to detect emotions and analyze the emotional changes of the doctors and patients over time. This facial expression recognition system was trained on 28,710 Asian face images and has a reported accuracy of 95% on the extended Cohn-Kanade data set [35].

Figure 1.

The facial emotion recognition system workflow. ITRI: Industrial Technology Research Institute.

We identified facial expressions by tracking the movement of the main points of each individual’s face (eg, eyes, eyebrows, tip of the nose, and lip corners). This allowed us to observe the emotional experiences of the doctors and patients when they expressed the following seven facial expressions: anger, disgust, fear, neutral, happiness, sadness, and surprise. The system then summarized the emotional changes of both the doctors and the patients with a temporal resolution of up to 1 second. Additionally, the system filtered out irrelevant face targets (ie, faces other than those of the doctors and patients). Finally, the summary results of the doctor and patient emotion analyses were used as a reference data set to develop artificial empathy, and the system generated recommendations so that doctors could respond immediately based on patients’ situations.
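
The per-second summary described above can be sketched as follows. The internals of the recognition system are not described here, so this is only a hypothetical illustration: it assumes a detector emits frame-level tuples of (timestamp in seconds, track identifier, emotion label), drops tracks other than the doctor and the patient, and takes a majority vote within each 1-second bin. The track names and the voting rule are assumptions.

```python
from collections import Counter, defaultdict
from typing import DefaultDict, Dict, Iterable, List, Tuple

# Hypothetical frame-level detector output: (timestamp_s, track_id, emotion).
Detection = Tuple[float, str, str]

def summarize_per_second(
    detections: Iterable[Detection],
    tracks_of_interest: Tuple[str, ...] = ("doctor", "patient"),
) -> Dict[str, List[Tuple[int, str]]]:
    """Reduce frame-level labels to one label per person per 1-second bin."""
    bins: DefaultDict[str, DefaultDict[int, Counter]] = defaultdict(
        lambda: defaultdict(Counter)
    )
    for t, track, emotion in detections:
        if track not in tracks_of_interest:
            continue  # ignore faces other than the doctor's and the patient's
        bins[track][int(t)][emotion] += 1
    # Majority vote in each bin; ties go to the label seen first in that bin.
    return {
        track: [(sec, counts.most_common(1)[0][0]) for sec, counts in sorted(per_sec.items())]
        for track, per_sec in bins.items()
    }

detections = [
    (0.1, "doctor", "neutral"), (0.5, "doctor", "happiness"), (0.9, "doctor", "happiness"),
    (0.2, "patient", "sadness"), (0.4, "visitor", "anger"), (1.3, "patient", "surprise"),
]
summary = summarize_per_second(detections)
print(summary)
# {'doctor': [(0, 'happiness')], 'patient': [(0, 'sadness'), (1, 'surprise')]}
```

A majority vote is one simple way to smooth frame-to-frame flicker; averaging class probabilities per bin would be a natural alternative if the detector exposes them.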

It should be noted, however, that our reference training data set for artificial empathy was built from limited face recognition data. Therefore, we propose improving the model by using open data from clinical encounter videos manipulated by deepfake technology, which can enable medical data sharing without violating patient privacy. Furthermore, such open data would allow us to connect with real-world clinical encounter video data sets, so that we could use different facial expression recognition models to analyze patients’ and doctors’ emotional experiences (Figure 1).

Ethics Approval

Our study was approved by Taipei Medical University (TMU)-Joint Institutional Review Board (TMU-JIRB No: N201810020).

Results

The clinical encounter videos, the source of our face recognition data set, consisted of video data from 327 patients (208 female and 119 male; age: mean 51, SD 19.06 years). The mean consultation time in the recorded videos was 4.61 (SD 3.04) minutes; the longest consultation lasted 25.55 minutes, and the shortest lasted 0.33 minutes. Our artificial empathy algorithm was developed by using FER algorithms. It learned a range of patient emotions by analyzing facial expressions, so that doctors could respond immediately based on patients’ emotional experiences. Overall, this FER system achieved a mean detection rate of >80% on real-world data.

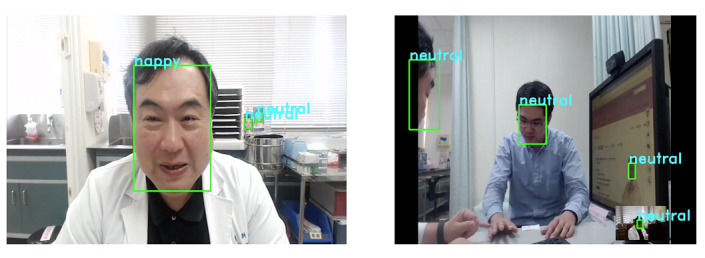

Our face recognition data set for artificial empathy was based solely on basic emotions. According to the system evaluation, doctors were more likely than patients to express anger, happiness, disgust, and sadness (P<.001), whereas patients more commonly expressed neutral emotions and surprise than doctors did (P<.001). The doctors’ overall emotions were dominated by sadness (expressions: 8580/17,397, 49.3%), happiness (expressions: 7541/17,397, 43.3%), anger (expressions: 629/17,397, 3.6%), surprise (expressions: 436/17,397, 2.5%), and disgust (expressions: 201/17,397, 1.2%), whereas the patients’ emotions consisted of sadness (expressions: 5773/12,606, 45.8%), happiness (expressions: 5766/12,606, 45.7%), surprise (expressions: 890/12,606, 7.1%), and anger (expressions: 126/12,606, 1.0%). Figure 2 illustrates the emotional expressions of both doctors and patients. The system used the results of the emotion analysis to remind doctors to adjust their behaviors according to patients’ situations, so that patients would feel that their doctors understood their emotions and situations.
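
The percentages above can be recomputed directly from the reported counts. The short Python check below uses only the counts and totals stated in this section; it also shows how many expressions fall into categories that are not itemized in the text (for example, fear or neutral).

```python
# Counts and totals as reported in this section.
doctor_counts = {"sadness": 8580, "happiness": 7541, "anger": 629, "surprise": 436, "disgust": 201}
patient_counts = {"sadness": 5773, "happiness": 5766, "surprise": 890, "anger": 126}
DOCTOR_TOTAL = 17_397
PATIENT_TOTAL = 12_606

def shares(counts, total):
    """Percentage of all expressions for each emotion, rounded to 1 decimal place."""
    return {emotion: round(100 * n / total, 1) for emotion, n in counts.items()}

def unitemized(counts, total):
    """Expressions in categories not listed above (eg, fear or neutral)."""
    return total - sum(counts.values())

print(shares(doctor_counts, DOCTOR_TOTAL))
print(shares(patient_counts, PATIENT_TOTAL))
print(unitemized(doctor_counts, DOCTOR_TOTAL), unitemized(patient_counts, PATIENT_TOTAL))  # 10 51
```

With these counts, 126/12,606 rounds to 1.0%, and 10 doctor expressions and 51 patient expressions fall outside the itemized categories.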

Figure 2.

Screenshots of the recorded video simulation of the doctor-patient relationship in the dermatology outpatient clinic.

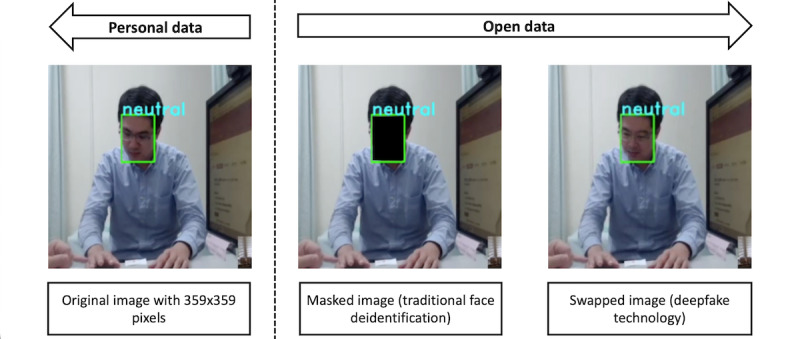

Because the original face recognition data set contains personal data (ie, patients’ faces), we can release only the results of the emotional expression analysis as a reference for the development of artificial empathy. As noted previously, our approach involved only a small amount of training data (Asian face images only). Improving model performance therefore requires more shared data, which in turn requires anonymizing the clinical interaction videos through face deidentification. Face deidentification would allow us to share our face recognition data set as open data for clinical research. To enable face image data sharing, a researcher can apply traditional face deidentification techniques, such as masking an image by covering a patient’s face region with a colored box (Figure 3).
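
As an illustration of the traditional masking approach just described, the Python sketch below fills a rectangular face region with a solid color. It assumes the face bounding box has already been obtained from some face detector (not shown) and represents the image as rows of RGB tuples to stay dependency-free; a real pipeline would typically operate on image arrays instead. The masked pixels no longer carry any expression information.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]

def mask_region(image: Image, box: Tuple[int, int, int, int],
                color: Pixel = (0, 0, 0)) -> Image:
    """Return a copy of `image` with box (x0, y0, x1, y1) filled by `color`.
    x1 and y1 are exclusive; the box is clamped to the image bounds."""
    height = len(image)
    width = len(image[0]) if height else 0
    x0, y0, x1, y1 = box
    x0, x1 = max(0, x0), min(width, x1)
    y0, y1 = max(0, y0), min(height, y1)
    masked = [row[:] for row in image]  # copy rows so the input stays untouched
    for y in range(y0, y1):
        for x in range(x0, x1):
            masked[y][x] = color
    return masked

# Usage: a 4x4 white image with a hypothetical face box from a detector.
white = (255, 255, 255)
img = [[white] * 4 for _ in range(4)]
out = mask_region(img, (1, 1, 3, 3), color=(0, 128, 0))
```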

Figure 3.

Comparison between traditional face deidentification and face swapping by using deepfake technology on an image of a patient's face.

Of note, however, because our research aims to develop artificial empathy to support good doctor-patient relationships, masking is not suitable: a masked face no longer shows its expression, so masked images are very difficult to validate against the results of an emotion expression analysis. Deepfake technology instead offers a way to swap a patient's original face with another face from an open-source face data set, generating an unrecognizable image with expressions and attributes similar to those of the original face image. This face swapping method can be applied to the reference face recognition data set for our artificial empathy algorithm to protect patient privacy and address ethical concerns. We adopted video deepfake technology based on face swapping (Figure 3), using the first order motion model for image animation [36]. This model builds on Monkey-Net, an earlier deep learning framework for image animation, and modifies it by using a set of self-learned key points combined with local affine transformations [36]. The framework uses a dense motion network to generate a video in which the source image is animated according to a given driving video sequence with complex motions [36]. Unlike earlier image animation approaches, which relied on models pretrained with costly ground-truth annotations and often produced poor-quality image or video outputs, the first order motion model for image animation can handle high-resolution data sets with profile images and could thus serve as a reference benchmark model for our face recognition data set.
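
The local affine idea at the core of the first order motion model [36] can be illustrated in a few lines. Near a keypoint, the model approximates the motion from a driving frame D to the source image S with a first-order Taylor expansion: a driving-frame point z maps to approximately p_S + J (z - p_D), where p_S and p_D are the keypoint locations in the source and driving frames and J = J_S J_D^-1 combines the Jacobians estimated at that keypoint in each frame. The Python sketch below evaluates this for a single keypoint with hand-picked, hypothetical values. In the actual model, keypoints and Jacobians are predicted by a learned keypoint detector, and a dense motion network combines the local transformations of all keypoints into a dense motion field before a generator renders the output frame.

```python
from typing import Tuple

Vec = Tuple[float, float]
Mat = Tuple[Vec, Vec]  # row-major 2x2 matrix

def mat_inv(m: Mat) -> Mat:
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("Jacobian is singular")
    return ((d / det, -b / det), (-c / det, a / det))

def mat_mul(m: Mat, n: Mat) -> Mat:
    return tuple(
        tuple(sum(m[i][k] * n[k][j] for k in range(2)) for j in range(2))
        for i in range(2)
    )

def mat_vec(m: Mat, v: Vec) -> Vec:
    return (m[0][0] * v[0] + m[0][1] * v[1], m[1][0] * v[0] + m[1][1] * v[1])

def local_affine_warp(z: Vec, kp_src: Vec, kp_drv: Vec, jac_src: Mat, jac_drv: Mat) -> Vec:
    """First-order approximation near one keypoint:
    z_src ~= kp_src + J_src @ inv(J_drv) @ (z - kp_drv)."""
    j = mat_mul(jac_src, mat_inv(jac_drv))
    offset = mat_vec(j, (z[0] - kp_drv[0], z[1] - kp_drv[1]))
    return (kp_src[0] + offset[0], kp_src[1] + offset[1])

# Hypothetical values: same keypoint location in both frames, but the source
# neighborhood is locally scaled by 2 relative to the driving frame.
identity = ((1.0, 0.0), (0.0, 1.0))
scale2 = ((2.0, 0.0), (0.0, 2.0))
print(local_affine_warp((1.0, 1.0), (0.0, 0.0), (0.0, 0.0), scale2, identity))  # (2.0, 2.0)
```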

Discussion

Principal Findings

Our FER study revealed that doctors expressed anger, happiness, disgust, and sadness more commonly than patients did. Because nonverbal messages such as facial expressions contribute the most to the messages delivered in human interactions, doctors need to be careful about the emotions they express during clinical interactions. For example, doctors should avoid expressing anger, disgust, or other negative emotions that reflect poor communication skills, as this may undermine treatment goals and frustrate both patients and health care practitioners [6].

Positive emotions (eg, happiness) reflect good communication skills, as they may help people understand how another person feels and thinks and allow them to understand each other better [37]. Furthermore, positive emotions can help build patients' trust in their doctors [38]. From a patient’s perspective, trust refers to accepting a vulnerable situation in which the patient believes that the doctor will provide adequate and fair medical care based on their needs [39]. When patients trust their doctors, they are more likely to share valid and reliable information about their condition, acknowledge health problems more readily, comprehend medical information efficiently, and comply with treatment plans [39]. They also tend to seek preventive care earlier and return for follow-up care, which may prevent further disease complications [39].

In addition to physicians’ medical knowledge and clinical skills, patients’ perceptions of physicians’ ability to provide adequate information, listen actively, and empathize are believed to be associated with patient satisfaction and trust [3]. A physician's capability to communicate effectively and provide empathic care benefits patients by improving the doctor-patient relationship, and it benefits physicians themselves by increasing job performance satisfaction and lowering the risk of stress and physical burnout [40]. Empathic care may also reduce the rate of medical errors and help avoid conflict with patients [38].

We believe that our FER system and face recognition data set can serve as a decision support system that alerts doctors when a patient requires special attention to achieve therapeutic goals. For example, if a doctor shows a negative facial expression (eg, anger, disgust, or sadness), the system will remind the doctor to change their facial expression. Moreover, if a patient shows a negative facial expression, the system will suggest that the doctor use a different approach to accommodate the patient’s emotional situation. Based on our results, the major shortcoming we need to address is that FER technology depends on both the quality and the quantity of training data [26,32]. We believe that in the future, we can improve the system’s precision and accuracy by collecting more data from more subjects with various sociodemographic backgrounds. This is likely feasible only if we adopt deepfake technology (eg, GAN-based methods), which can learn the facial features of a human face in images and videos and replace it with another person's face [41]. Deepfake technology can thus replace a patient’s face and create fake face images with similar facial expressions in videos. With the use of deepfake technology, the recorded video database of outpatient doctor-patient interactions will become more accessible. Applying deepfakes to deidentify FER data sets may benefit the development of artificial empathy, as this approach can avoid compromising the privacy and security of interpersonal situations.
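
The reminder logic described above could be expressed as simple rules on top of the per-person emotion labels. The Python sketch below is hypothetical: the set of negative emotions follows the examples given in the text (anger, disgust, and sadness), and the message wording is illustrative rather than taken from the deployed system.

```python
from typing import List

# Hypothetical set, following the examples of negative expressions given in the text.
NEGATIVE_EMOTIONS = {"anger", "disgust", "sadness"}

def recommend(doctor_emotion: str, patient_emotion: str) -> List[str]:
    """Return reminder messages for one time step, given each person's current label."""
    messages: List[str] = []
    if doctor_emotion in NEGATIVE_EMOTIONS:
        messages.append("Reminder: your expression appears negative; consider adjusting it.")
    if patient_emotion in NEGATIVE_EMOTIONS:
        messages.append("Suggestion: the patient appears distressed; consider a different approach.")
    return messages

print(recommend("anger", "happiness"))
print(recommend("neutral", "sadness"))
```

Keeping the rules separate from the recognition model makes it easy to revise which emotions trigger a prompt without retraining anything.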

Similar to our study, a recent study reported using deepfake technology to deidentify subjects and generate open-source, high-quality medical video data sets of Parkinson disease examinations [32]. That study also applied the face swapping technique, together with a real-time multi-person system to detect human motion key points, based on open-source videos from the Deep Fake Detection data set [32]. In contrast, our approach used a self-supervised formulation consisting of self-learned key points combined with local affine transformations [36]. We believe that this self-learned model could preserve the emotional states represented in the original face recognition data set.

Our study has some limitations. First, our approach used only a single information modality (video deepfakes), which could have resulted in inaccurate emotion classification. In the future, we can combine video and audio deepfakes to better represent the emotional states of a target person. Second, moral and ethical concerns need to be considered when using deepfake technology to deidentify medical data sets. However, our study highlights a positive use of deepfakes: privacy protection for face recognition data sets in medical settings. Thus, rather than raising an ethical problem, we hope this study will encourage the use of deepfakes in medical applications instead of for malicious purposes.

Conclusion

We propose using an open data set of clinical encounter videos as a reference training data set to develop artificial empathy based on an FER system, given that FER technologies rely on extensive training data. However, due to privacy concerns, it has always been difficult for researchers to acquire face recognition data sets. Therefore, we suggest the adoption of deepfakes. Deepfake technology can deidentify faces in images or videos by manipulating them so that the original face becomes unrecognizable, thereby protecting patient privacy, while generating facial expressions similar to those in the original image or video. This technology might therefore promote medical video data sharing, improve the implementation of FER systems in clinical settings, and protect sensitive data. Furthermore, deepfake technology will further enhance the potential use of artificial empathy in helping doctors provide empathic care based on patients’ emotional experiences and achieve a good doctor-patient therapeutic relationship.

Acknowledgments

This research was funded by the Ministry of Science and Technology (grants MOST 110-2320-B-038-029-MY3, 110-2221-E-038-002-MY2, and 110-2622-E-038-003-CC1).

Abbreviations

- AI: artificial intelligence
- FER: facial emotion recognition
- GAN: generative adversarial network

Footnotes

Conflicts of Interest: None declared.

References

- 1. Ha JF, Longnecker N. Doctor-patient communication: a review. Ochsner J. 2010;10(1):38–43. http://europepmc.org/abstract/MED/21603354

- 2. Zachariae R, Pedersen CG, Jensen AB, Ehrnrooth E, Rossen PB, von der Maase H. Association of perceived physician communication style with patient satisfaction, distress, cancer-related self-efficacy, and perceived control over the disease. Br J Cancer. 2003 Mar 10;88(5):658–665. doi: 10.1038/sj.bjc.6600798. http://europepmc.org/abstract/MED/12618870

- 3. Sullivan LM, Stein MD, Savetsky JB, Samet JH. The doctor-patient relationship and HIV-infected patients' satisfaction with primary care physicians. J Gen Intern Med. 2000 Jul;15(7):462–469. doi: 10.1046/j.1525-1497.2000.03359.x. https://tinyurl.com/2p8dw9fk

- 4. Renzi C, Abeni D, Picardi A, Agostini E, Melchi CF, Pasquini P, Puddu P, Braga M. Factors associated with patient satisfaction with care among dermatological outpatients. Br J Dermatol. 2001 Oct;145(4):617–623. doi: 10.1046/j.1365-2133.2001.04445.x.

- 5. Cánovas L, Carrascosa AJ, García M, Fernández M, Calvo A, Monsalve V, Soriano JF, Empathy Study Group. Impact of empathy in the patient-doctor relationship on chronic pain relief and quality of life: A prospective study in Spanish pain clinics. Pain Med. 2018 Jul 01;19(7):1304–1314. doi: 10.1093/pm/pnx160.

- 6. Ranjan P, Kumari A, Chakrawarty A. How can doctors improve their communication skills? J Clin Diagn Res. 2015 Mar;9(3):JE01–JE04. doi: 10.7860/JCDR/2015/12072.5712. http://europepmc.org/abstract/MED/25954636

- 7. Butow P, Hoque E. Using artificial intelligence to analyse and teach communication in healthcare. Breast. 2020 Apr;50:49–55. doi: 10.1016/j.breast.2020.01.008. https://linkinghub.elsevier.com/retrieve/pii/S0960-9776(20)30009-6

- 8. Khan FH, Hanif R, Tabassum R, Qidwai W, Nanji K. Patient attitudes towards physician nonverbal behaviors during consultancy: Result from a developing country. ISRN Family Med. 2014 Feb 04;2014:473654. doi: 10.1155/2014/473654. http://europepmc.org/abstract/MED/24977140

- 9. Cuff BMP, Brown SJ, Taylor L, Howat DJ. Empathy: A review of the concept. Emot Rev. 2014 Dec 01;8(2):144–153. doi: 10.1177/1754073914558466.

- 10. Aminololama-Shakeri S, López JE. The doctor-patient relationship with artificial intelligence. AJR Am J Roentgenol. 2019 Feb;212(2):308–310. doi: 10.2214/AJR.18.20509.

- 11. Iqbal U, Celi LA, Li YCJ. How can artificial intelligence make medicine more preemptive? J Med Internet Res. 2020 Aug 11;22(8):e17211. doi: 10.2196/17211. https://www.jmir.org/2020/8/e17211/

- 12. Sebe N, Cohen I, Gevers T, Huang TS. Multimodal approaches for emotion recognition: A survey. Electronic Imaging 2005; January 16-20, 2005; San Jose, California, United States. 2005 Jan 17.

- 13. Bänziger T, Grandjean D, Scherer KR. Emotion recognition from expressions in face, voice, and body: the Multimodal Emotion Recognition Test (MERT). Emotion. 2009 Oct;9(5):691–704. doi: 10.1037/a0017088.

- 14. Lazzeri N, Mazzei D, Greco A, Rotesi A, Lanatà A, De Rossi DE. Can a humanoid face be expressive? A psychophysiological investigation. Front Bioeng Biotechnol. 2015 May 26;3:64. doi: 10.3389/fbioe.2015.00064.

- 15. Frank MG. Facial expressions. In: Smelser NJ, Baltes PB, editors. International Encyclopedia of the Social & Behavioral Sciences. Amsterdam, Netherlands: Elsevier; 2001. pp. 5230–5234.

- 16. Mancini G, Biolcati R, Agnoli S, Andrei F, Trombini E. Recognition of facial emotional expressions among Italian pre-adolescents, and their affective reactions. Front Psychol. 2018 Aug 03;9:1303. doi: 10.3389/fpsyg.2018.01303.

- 17. Jack RE, Schyns PG. The human face as a dynamic tool for social communication. Curr Biol. 2015 Jul 20;25(14):R621–R634. doi: 10.1016/j.cub.2015.05.052. https://linkinghub.elsevier.com/retrieve/pii/S0960-9822(15)00655-7

- 18. Frith C. Role of facial expressions in social interactions. Philos Trans R Soc Lond B Biol Sci. 2009 Dec 12;364(1535):3453–3458. doi: 10.1098/rstb.2009.0142. http://europepmc.org/abstract/MED/19884140

- 19. Saxena A, Khanna A, Gupta D. Emotion recognition and detection methods: A comprehensive survey. Journal of Artificial Intelligence and Systems. 2020;2:53–79. doi: 10.33969/ais.2020.21005. https://iecscience.org/uploads/jpapers/202003/dnQToaqdF8IRjhE62pfIovCkDJ2jXAcZdK6KHRzM.pdf

- 20. Mehendale N. Facial emotion recognition using convolutional neural networks (FERC). SN Appl Sci. 2020 Feb 18;2(446):1–8. doi: 10.1007/s42452-020-2234-1. https://link.springer.com/article/10.1007/s42452-020-2234-1

- 21. Akhand MAH, Roy S, Siddique N, Kamal MAS, Shimamura T. Facial emotion recognition using transfer learning in the deep CNN. Electronics (Basel). 2021 Apr 27;10(9):1036. doi: 10.3390/electronics10091036. https://mdpi-res.com/d_attachment/electronics/electronics-10-01036/article_deploy/electronics-10-01036-v2.pdf

- 22. Song Z. Facial expression emotion recognition model integrating philosophy and machine learning theory. Front Psychol. 2021 Sep 27;12:759485. doi: 10.3389/fpsyg.2021.759485.

- 23. Uddin MZ. Chapter 26 - A local feature-based facial expression recognition system from depth video. In: Deligiannidis L, Arabnia HR, editors. Emerging Trends in Image Processing, Computer Vision and Pattern Recognition. Burlington, Massachusetts: Morgan Kaufmann; 2015. pp. 407–419.

- 24. John A, Abhishek MC, Ajayan AS, Kumar V, Sanoop S, Kumar VR. Real-time facial emotion recognition system with improved preprocessing and feature extraction. 2020 Third International Conference on Smart Systems and Inventive Technology (ICSSIT); August 20-22, 2020; Tirunelveli, India. 2020 Oct 06.

- 25. Minaee S, Minaei M, Abdolrashidi A. Deep-emotion: Facial expression recognition using attentional convolutional network. Sensors (Basel). 2021 Apr 27;21(9):3046. doi: 10.3390/s21093046. https://www.mdpi.com/resolver?pii=s21093046

- 26. Ng HW, Nguyen VD, Vonikakis V, Winkler S. Deep learning for emotion recognition on small datasets using transfer learning. ICMI '15: International Conference on Multimodal Interaction; November 9-13, 2015; Seattle, Washington, USA. 2015 Nov. pp. 443–449.

- 27. Leo M, Carcagnì P, Mazzeo PL, Spagnolo P, Cazzato D, Distante C. Analysis of facial information for healthcare applications: A survey on computer vision-based approaches. Information (Basel). 2020 Feb 26;11(3):128. doi: 10.3390/info11030128. https://mdpi-res.com/d_attachment/information/information-11-00128/article_deploy/information-11-00128-v2.pdf

- 28. Surcinelli P, Andrei F, Montebarocci O, Grandi S. Emotion recognition of facial expressions presented in profile. Psychol Rep. 2021 May 26;:332941211018403. doi: 10.1177/00332941211018403.

- 29. Nguyen TT, Nguyen QVH, Nguyen DT, Nguyen DT, Huynh-The T, Nahavandi S, Nguyen TT, Pham QV, Nguyen CM. Deep learning for deepfakes creation and detection: A survey. arXiv. Preprint posted online on February 6, 2022. https://arxiv.org/pdf/1909.11573.pdf

- 30. Guarnera L, Giudice O, Battiato S. DeepFake detection by analyzing convolutional traces. 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); June 14-19, 2020; Seattle, Washington, USA. 2020 Jul 28.

- 31. Hosler B, Salvi D, Murray A, Antonacci F, Bestagini P, Tubaro S, Stamm MC. Do deepfakes feel emotions? A semantic approach to detecting deepfakes via emotional inconsistencies. 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW); June 19-25, 2021; Nashville, Tennessee, USA. 2021 Sep 01. pp. 1013–1022.

- 32. Zhu B, Fang H, Sui Y, Li L. Deepfakes for medical video de-identification: Privacy protection and diagnostic information preservation. AIES '20: AAAI/ACM Conference on AI, Ethics, and Society; February 7-9, 2020; New York, New York, USA. 2020 Feb. pp. 414–420.

- 33. Floridi L. Artificial intelligence, deepfakes and a future of ectypes. Philos Technol. 2018 Aug 1;31(3):317–321. doi: 10.1007/s13347-018-0325-3.

- 34. Kietzmann J, Lee LW, McCarthy IP, Kietzmann TC. Deepfakes: Trick or treat? Bus Horiz. 2020;63(2):135–146. doi: 10.1016/j.bushor.2019.11.006.

- 35. Zhizhong G, Meiying S, Yiyu S, Jiahua W, Ziyun P, Minghong Z, Yuxian X. Smart Monitoring Re-Upgraded--Introducing Facial Emotion Recognition [in Chinese]. Computer and Communication. 2018 Oct 25;(175):29–31. doi: 10.29917/CCLTJ.

- 36. Siarohin A, Lathuilière S, Tulyakov S, Ricci E, Sebe N. First order motion model for image animation. 33rd International Conference on Neural Information Processing Systems; December 8-14, 2019; Vancouver, Canada. 2019 Dec. pp. 7137–7147.

- 37. Markides M. The importance of good communication between patient and health professionals. J Pediatr Hematol Oncol. 2011 Oct;33 Suppl 2:S123–S125. doi: 10.1097/MPH.0b013e318230e1e5.

- 38. Kerasidou A. Artificial intelligence and the ongoing need for empathy, compassion and trust in healthcare. Bull World Health Organ. 2020 Apr 01;98(4):245–250. doi: 10.2471/BLT.19.237198. http://europepmc.org/abstract/MED/32284647

- 39. Thom DH, Hall MA, Pawlson LG. Measuring patients' trust in physicians when assessing quality of care. Health Aff (Millwood). 2004;23(4):124–132. doi: 10.1377/hlthaff.23.4.124.

- 40. Bogiatzaki V, Frengidou E, Savakis E, Trigoni M, Galanis P, Anagnostopoulos F. Empathy and burnout of healthcare professionals in public hospitals of Greece. Int J Caring Sci. 2019;12(2):611–626. http://www.internationaljournalofcaringsciences.org/docs/4_boyiatzaki_original_12_2.pdf

- 41. Jeong YU, Yoo S, Kim YH, Shim WH. De-identification of facial features in magnetic resonance images: Software development using deep learning technology. J Med Internet Res. 2020 Dec 10;22(12):e22739. doi: 10.2196/22739. https://www.jmir.org/2020/12/e22739/