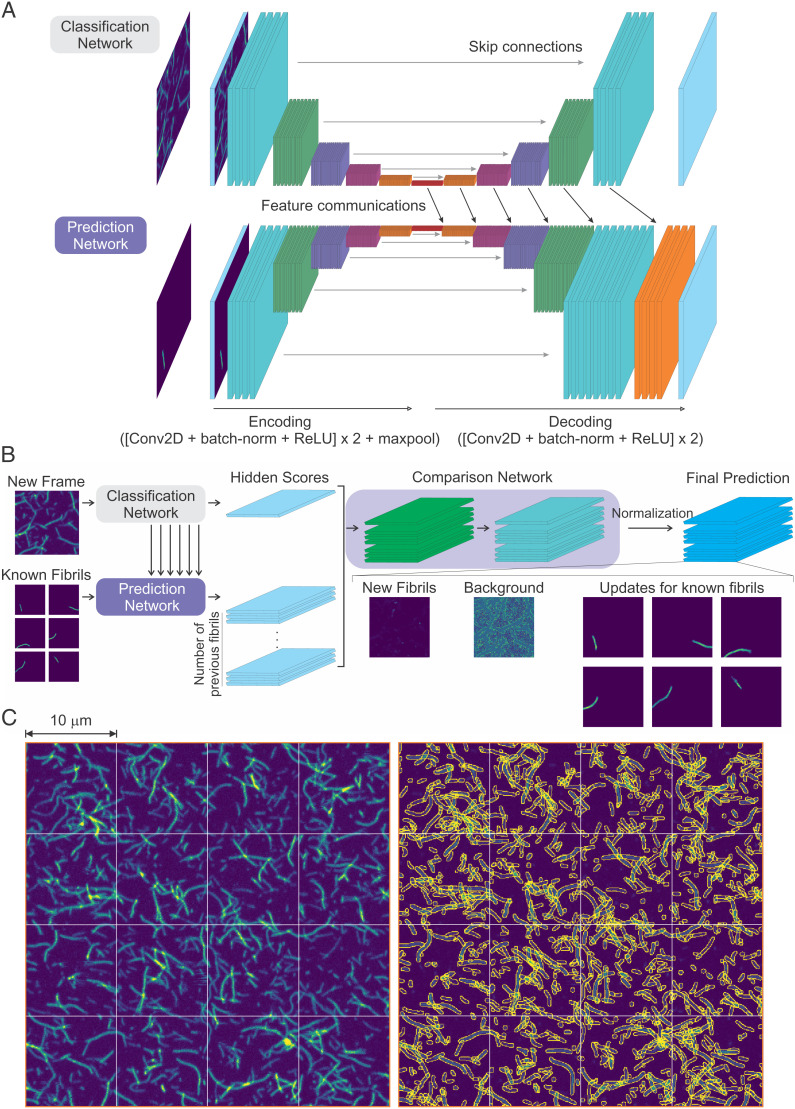

Fig. 2.

Deep neural network (FNet) architecture. (A) Classification network and prediction networks. Each network takes an image as input. The input of the classification network is the image of a new frame. The background prediction network takes the background prediction image of the previous frame as input, and the growth prediction network takes the prediction images of individual fibrils from the previous frame (known fibrils) as input. The prediction networks use the hidden features of the classification network to generate predictions (feature communications). (B) The outputs of the classification network and the prediction networks are compared to generate the final prediction. The comparison network consists of two successive blocks, each made up of a convolution, a batch normalization, and a ReLU activation layer, followed by a convolution and a PReLU activation layer. The output of the PReLU activation is normalized pixel by pixel so that the number of photons in each pixel of the summed output image equals that of the original input image of the new frame. This results in an image of new fibrils, a background image, and the updated images of the known fibrils from the previous frame (see Fig. 3A). (C, Left) Highly overlapping fibril images from Experiment 1. The 4 × 4 scanned images (each 10 × 10 μm²) are the last frames of the experiment (26-h incubation). (C, Right) Segmentation of fibrils in the same image data as on the left, obtained using FNet. Peripheries of individual fibrils are colored yellow. Fibrils touching the boundaries of the 10 × 10 μm² areas were merged with the continuing fibrils in the adjacent areas.
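The pixel-by-pixel photon normalization described in (B) can be illustrated with a minimal NumPy sketch. This is not the FNet implementation. The function name `normalize_to_photon_counts`, the `(K, H, W)` array layout, the clipping of negative PReLU outputs to zero, and the handling of pixels whose summed output is zero are all assumptions made for illustration.

```python
import numpy as np


def normalize_to_photon_counts(outputs, frame, eps=1e-12):
    """Rescale K component images so they sum to `frame` at every pixel.

    outputs: array of shape (K, H, W), e.g. new fibrils, background,
             and known fibrils (hypothetical layout).
    frame:   array of shape (H, W) with photon counts of the new frame.
    """
    # Assumption: negative values (possible after PReLU) are clipped to zero
    # so that each component can be read as a photon count.
    outputs = np.clip(np.asarray(outputs, dtype=float), 0.0, None)
    frame = np.asarray(frame, dtype=float)

    # Summed output image across all components.
    total = outputs.sum(axis=0)

    # Per-pixel scale factor. Assumption: where the summed output is zero,
    # all components are already zero and stay zero, so no photons are
    # assigned at that pixel.
    scale = frame / np.maximum(total, eps)

    # Broadcasting applies the same per-pixel scale to every component.
    return outputs * scale


# Toy example: two components on a 1 x 2 image.
raw = np.array([[[1.0, 2.0]],
                [[3.0, 2.0]]])
frame = np.array([[8.0, 2.0]])
parts = normalize_to_photon_counts(raw, frame)
```

After normalization, `parts.sum(axis=0)` matches `frame` wherever the raw summed output is nonzero, while the ratio between components at each pixel is unchanged.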