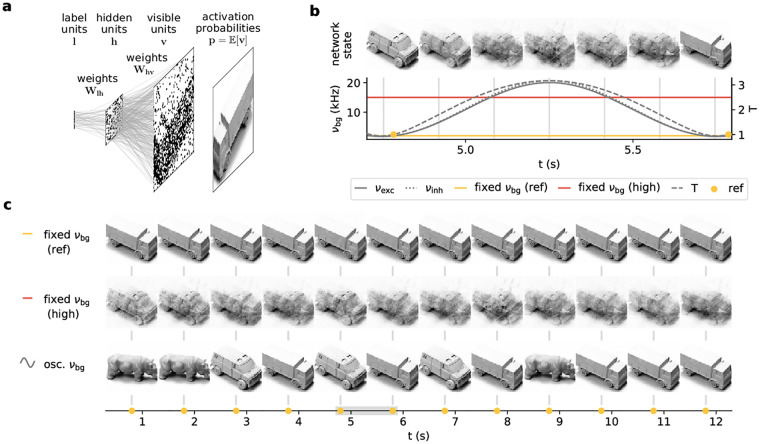

Fig 3. Background oscillations improve generative properties of spiking sampling networks.

(a) Architecture of a hierarchical three-layer network of LIF neurons (visible layer v, hidden layer h and label layer l) with example layerwise activity. To better represent the visible-layer statistics, we consider the neuronal activation probabilities p(v|h) rather than samples drawn from them; this also speeds up the calculation of averages over (conditional) visible-layer states. The network shown here was trained on images from the NORB dataset. (b) Evolution of the visible-layer activation probabilities (top) over one period of the background oscillation (bottom). (c) Evolution of the visible layer over multiple periods of the oscillation, compared with networks receiving constant background input at the reference rate (2 kHz, top) and at a high rate (10 kHz, middle); cf. also the yellow and red lines in (b). The activation probabilities are shown whenever the oscillating background rate reaches the reference rate (see panel b). The gray bar marks the period shown in (b).
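The choice to average p(v|h) instead of sampled visible states can be illustrated with a minimal sketch. It assumes that the sampled distribution is approximated by a Boltzmann machine, so that p(v_i = 1 | h) = σ(b_i + Σ_j W_ij h_j); the names `W`, `b`, the helper functions and all numbers are hypothetical and chosen only for illustration. Averaging the conditional probabilities removes the extra noise introduced by sampling v, because the variance of E[v|h] is never larger than the variance of v itself.

```python
import math
import random


def sigmoid(x: float) -> float:
    """Logistic function 1 / (1 + exp(-x))."""
    return 1.0 / (1.0 + math.exp(-x))


def visible_probs(h, W, b):
    """Return p(v_i = 1 | h) for every visible unit i.

    Assumes a Boltzmann-machine conditional:
    p(v_i = 1 | h) = sigmoid(b_i + sum_j W_ij h_j).
    W is a list of rows (one row per visible unit), b the visible biases.
    """
    return [sigmoid(b_i + sum(w * h_j for w, h_j in zip(row, h)))
            for row, b_i in zip(W, b)]


def mean_from_probs(hidden_samples, W, b):
    """Average p(v | h) over hidden-layer samples (no visible sampling noise)."""
    totals = [0.0] * len(b)
    for h in hidden_samples:
        for i, p in enumerate(visible_probs(h, W, b)):
            totals[i] += p
    return [t / len(hidden_samples) for t in totals]


def mean_from_samples(hidden_samples, W, b, rng):
    """Average binary visible samples v ~ p(v | h), one draw per hidden sample."""
    totals = [0.0] * len(b)
    for h in hidden_samples:
        for i, p in enumerate(visible_probs(h, W, b)):
            totals[i] += 1.0 if rng.random() < p else 0.0
    return [t / len(hidden_samples) for t in totals]


if __name__ == "__main__":
    rng = random.Random(0)
    W = [[2.0, -1.0], [-0.5, 1.5]]  # hypothetical weights: 2 visible x 2 hidden units
    b = [0.0, -0.5]                 # hypothetical visible biases
    # Hypothetical binary hidden-layer states, e.g. read out from spiking activity.
    hidden = [[rng.randint(0, 1), rng.randint(0, 1)] for _ in range(200)]
    print("average of p(v|h):", mean_from_probs(hidden, W, b))
    print("average of sampled v:", mean_from_samples(hidden, W, b, rng))
```

Both estimators target the same mean visible activity; the second one only adds sampling noise on top of the first, which is why it needs more hidden-layer states to reach the same precision.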