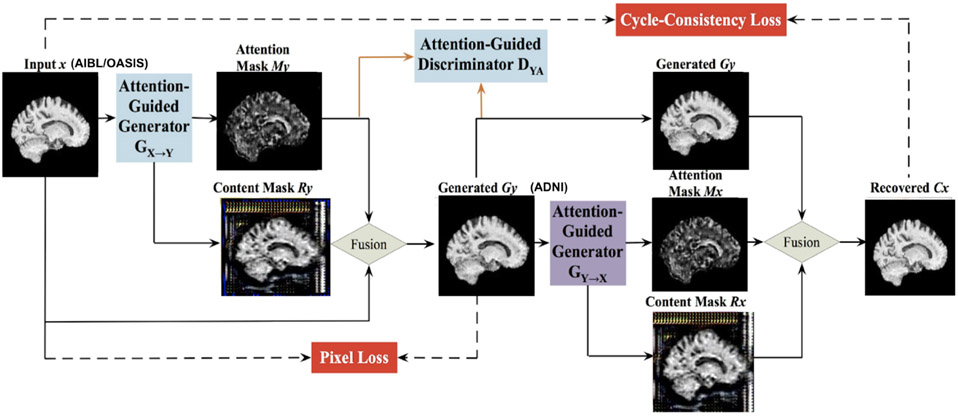

Figure 4.

Framework of the Attention-Guided Generative Adversarial Network (AG-GAN) model used here, showing the mapping that translates subject x into subject y. The generators contain built-in attention mechanisms that locate the image regions where the two subjects differ most. Architecture adapted from ref. 17; the component panels are taken from our neuroimaging application.