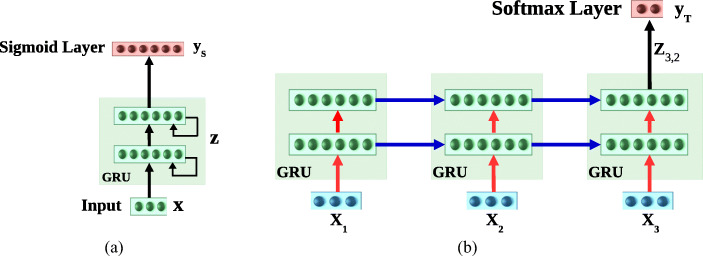

Fig. 6.

**a** HealthNet trained via supervised learning on multiple source tasks simultaneously, with a sigmoid final activation. **b** Fine-tuning HealthNet for a new target task, with a softmax final activation. Blue and red arrows correspond to the recurrent and feedforward weights of the recurrent layers, respectively; only the feedforward (red) weights are regularized while fine-tuning HealthNet. The RNN, with L = 2 hidden layers, is shown unrolled over τ = 3 time steps.
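The transfer scheme in the caption can be sketched in plain Python. This is a minimal sketch, not the authors' implementation. It makes several assumptions that the figure does not state: tanh (Elman) recurrent units, an output head applied to the final hidden state of the top layer, and a squared-L2 penalty toward zero as the regularizer on the feedforward weights. The names `ff_l2_penalty` and `fine_tune_loss`, and the task and class counts, are hypothetical. Each layer stores its feedforward (input-to-hidden, red) weights `W_ff` separately from its recurrent (hidden-to-hidden, blue) weights `W_rec`, so the penalty can cover only `W_ff`.

```python
import math
import random

def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def sigmoid(z):
    return [1.0 / (1.0 + math.exp(-zi)) for zi in z]

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(zi - m) for zi in z]
    s = sum(e)
    return [ei / s for ei in e]

def init_layer(n_in, n_hid, rng):
    """One recurrent layer: feedforward (red) and recurrent (blue) weights kept separate."""
    return {
        "W_ff": [[rng.gauss(0, 0.1) for _ in range(n_in)] for _ in range(n_hid)],
        "W_rec": [[rng.gauss(0, 0.1) for _ in range(n_hid)] for _ in range(n_hid)],
        "b": [0.0] * n_hid,
    }

def rnn_forward(xs, layers):
    """Run a stacked tanh RNN over the sequence xs; return the top layer's last hidden state."""
    hs = [[0.0] * len(layer["b"]) for layer in layers]
    for x in xs:  # one iteration per time step (tau steps)
        inp = x
        for l, layer in enumerate(layers):
            ff = matvec(layer["W_ff"], inp)    # input (or lower layer) -> hidden
            rec = matvec(layer["W_rec"], hs[l])  # previous hidden -> hidden
            hs[l] = [math.tanh(a + r + b) for a, r, b in zip(ff, rec, layer["b"])]
            inp = hs[l]
    return hs[-1]

def ff_l2_penalty(layers, lam):
    """Assumed squared-L2 penalty on feedforward weights only; W_rec is not penalized."""
    return lam * sum(w * w for layer in layers for row in layer["W_ff"] for w in row)

def fine_tune_loss(xs, label, layers, W_tgt, lam):
    """Panel b: cross-entropy on the softmax target head plus the feedforward-only penalty."""
    p = softmax(matvec(W_tgt, rnn_forward(xs, layers)))
    return -math.log(p[label]) + ff_l2_penalty(layers, lam)

rng = random.Random(0)
n_in, n_hid, tau = 4, 8, 3
layers = [init_layer(n_in, n_hid, rng), init_layer(n_hid, n_hid, rng)]  # L = 2
xs = [[rng.gauss(0, 1) for _ in range(n_in)] for _ in range(tau)]

h = rnn_forward(xs, layers)
W_src = [[rng.gauss(0, 0.1) for _ in range(n_hid)] for _ in range(5)]  # 5 hypothetical source tasks
W_tgt = [[rng.gauss(0, 0.1) for _ in range(n_hid)] for _ in range(3)]  # 3 hypothetical target classes

p_src = sigmoid(matvec(W_src, h))  # panel a: independent per-task probabilities
p_tgt = softmax(matvec(W_tgt, h))  # panel b: one distribution over target classes
loss = fine_tune_loss(xs, 1, layers, W_tgt, lam=1e-3)
```

The sigmoid head suits panel a because the source tasks are learned simultaneously and each output is scored independently. The softmax head suits panel b, where the target task assigns one label per sequence. Because the penalty reads only `W_ff`, changing any recurrent weight leaves it unchanged. The gradient step that would update the weights is omitted here.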