Abstract

Objective A learning health care system (LHS) uses routinely collected data to continuously monitor and improve health care outcomes. Little is reported on the challenges and methods used to implement the analytics underpinning an LHS. Our aim was to systematically review the literature for reports of real-time clinical analytics implementation in digital hospitals and to use these findings to synthesize a conceptual framework for LHS implementation.

Methods Embase, PubMed, and Web of Science databases were searched for clinical analytics derived from electronic health records in adult inpatient and emergency department settings between 2015 and 2021. From the final study selection, we coded evidence relating to (1) dashboard implementation challenges, (2) methods to overcome implementation challenges, and (3) dashboard assessment and impact. This evidence, together with evidence extracted from relevant prior reviews, was mapped to an existing digital health transformation model to derive a conceptual framework for LHS analytics implementation.

Results A total of 238 candidate articles were reviewed and 14 met inclusion criteria. From the selected studies, we extracted 37 implementation challenges and 64 methods employed to overcome them. We identified common approaches for evaluating the implementation of clinical dashboards. Six studies assessed clinical process outcomes, and only four evaluated patient health outcomes. A conceptual framework for implementing the analytics of an LHS was developed.

Conclusion Health care organizations face diverse challenges when trying to implement real-time data analytics. These challenges have shifted over the past decade. While prior reviews identified fundamental information problems, such as data size and complexity, our review uncovered more postpilot challenges, such as supporting diverse users, workflows, and user-interface screens. Our review identified practical methods to overcome these challenges, which have been incorporated into a conceptual framework. We hope this framework will support health care organizations deploying near-real-time clinical dashboards and progressing toward an LHS.

Keywords: learning health care system, electronic health records and systems, clinical decision support, hospital information systems, clinical data management, dashboard, digital hospital

Background and Significance

The use of electronic health records (EHRs) is now widespread across the United States and many other developed countries. 1 Because of this shift from paper to electronic data capture and storage, many new models of digitally enabled health care delivery are being explored to help static health care resources meet the ever-increasing demand for care.

The traditional “set and forget” paradigm, where services and providers establish models of delivery and evaluate their efficiency and outcomes at a later date, is being questioned. 2 These traditional models of monitoring hospital performance relied on paper-based or static data collections, as well as intermittent and delayed reporting of system outcomes and errors. 3 This entrenched lack of continuous and timely oversight of patient outcomes can have catastrophic consequences. This was demonstrated by the recent Bacchus Marsh/Djerriwarrh Health Service scandal, in which potentially avoidable child and perinatal deaths occurred as a result of a flawed system of care and a lack of timely monitoring of health care outcomes. 4

Digital health provides a potential solution for positively disrupting these traditional models of hospital quality and safety and shifting from incident detection to continuous and iterative improvement. 5

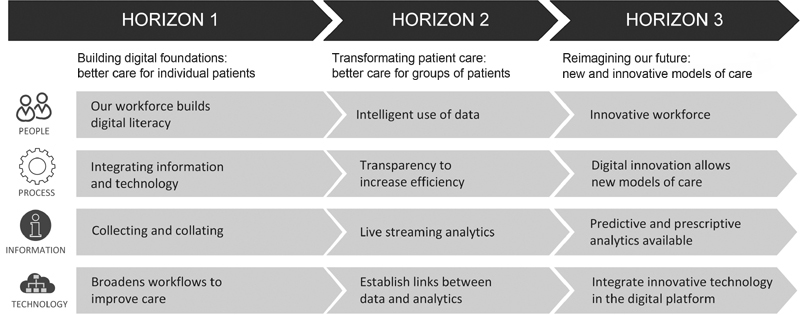

As health care organizations embark on a digital transformation journey ( Fig. 1 ), they can start to routinely collect large amounts of data digitally, in real time, for every consumer every time they interact with the system (horizon one); then leverage the real-time data collected during routine care to create analytics (horizon two); and finally develop new models of care using the data and digital technology (horizon three). 6 By reaching the third horizon of digital health transformation, health care organizations can use the near-real-time data to establish continuous learning cycles and care improvement, enabling a learning health care system (LHS). 7

Fig. 1.

Three horizons framework for digital health transformation. 6

An LHS is defined as a health care delivery system “that is designed to generate and apply the best evidence for the collaborative health care choices of each patient and provider; to drive the process of discovery as a natural outgrowth of patient care; and to ensure innovation, quality, safety, and value in health care.” 8 An LHS aims to gather health information from clinical practice and information systems to improve real-time clinical decision-making by clinicians, thereby enabling better quality and safety of patient care. 9

In many industries, particularly manufacturing, learning systems have been in place for decades. Early digitization of workflows allowed data to be collected at each step of the production process and a continuous monitoring system to be established to rapidly identify and resolve any blocks to efficient workflow and production. 10 Such a closed-loop learning model has not yet been widely adopted in health care, despite the known serious consequences of delays in health care processes and workflows. For example, the risk of death increases in direct proportion to patient wait times in emergency departments. 11

Research Questions and Objective

Within the context of the three-horizon model ( Fig. 1 ), EHRs provide the foundation for horizon one. Despite the widespread adoption of EHRs, many providers are struggling to transition to horizons two and three to enable an LHS. An essential step in this maturation is the transition from EHR data only being used at the point of care to the provision of real-time aggregated EHR data and analytics as dashboards to clinicians to enable better quality and safety of patient care. Therefore, in this paper, we examine the implementation of such dashboards to support organizations in their efforts to enable an LHS. We investigated the following research questions (RQs):

RQ-1. What challenges to clinical dashboard implementation are commonly identified?

RQ-2. What successful methods have been used by health care organizations to overcome these challenges?

RQ-3. How has clinical dashboard implementation been assessed and how effective has their implementation been for health care organizations?

In addressing these research questions, our overall aim is to:

Systematically identify and critically appraise research assessing the implementation of near real-time clinical analytics in digital hospitals designed to improve patient outcomes.

Develop a conceptual framework for implementing near real-time clinical analytics tools within an LHS.

Analysis of Prior Work

Seven reviews published between 2014 and 2018 were identified as relevant to the implementation of dashboards within health care organizations. 12 13 14 15 16 17 18 These reviews encompassed 148 individual studies published between 1996 and 2017. The main areas in which their scope was broader than ours, summarized in Table 1 , were the definition of included dashboards, the source of data, whether the data were real-time, whether the dashboard was implemented, and the targeted health care setting.

Across the prior reviews, many kinds of health care dashboards were investigated, including clinical, quality, performance (strategic, tactical, and operational), and visualization dashboards. Our study focused on clinical dashboards only. We drew on the work of Dowding et al 14 to define clinical dashboards as a visual display of data providing clinicians with access to relevant and timely information across patients that assists them in their decision-making and thus improves the safety and quality of patient care.

In contrast, performance dashboards tend to target nonclinical, managerial staff and provide information to summarize and track process and organizational key performance indicators. 13 Visualization dashboards can also be clinical dashboards but may contain more sophisticated or innovative data visualizations; we included these studies if they met our inclusion criteria.

Despite their broader scope, these prior reviews provided important insights into the implementation of health care dashboards. For this reason, evidence related to our research questions was manually extracted from these reviews and listed in Supplementary Tables S1 – S4 (available in the online version).

Table 3. Study characteristics of included articles.

| Study (year) and country | Study design | Participants and sample size | Duration | Target user and intervention(s) | Outcome measures (1) and implementation (2) |
|---|---|---|---|---|---|
| Mlaver et al (2017) 25 and the United States | CSS | Tertiary academic hospital; n = 793 (beds); n = 98 (patients); n = 6 (PAs) | Pre = 16 months; Post = 1 week | The rounding team: the PSLL patient safety dashboard provided real-time alerts/notifications, flagged in the dashboard with color coding. | 1. Health–Information Technology Usability Evaluation Scale (Health-ITUES) survey. 2. Patient-level and unit-level dashboards within the EHR were integrated into, and fostered, interdisciplinary bedside rounding. |
| Fletcher et al (2017) 23 and the United States | RMDS | Academic medical center; n = 413 (beds); n = 19,000 (annual adms); n = 6,736 (eligible adms) | Pre = 2 months; Post = 20 weeks | Rapid response team (RRT): provided at-a-glance visibility of timely and accurate critical patient safety indicator information for multiple patients. | 1. Incidence ratio of all RRT activations; measured the reduction in unexpected ICU transfers, unexpected cardiopulmonary arrests, and unexpected deaths. 2. User interface in the preexisting EHR to provide real-time information. |
| Cox et al (2017) 22 and the United States | RCS | Tertiary academic hospital; n = 366 (initial cohort); n = 150 (random cohort) | Pre = 6 months; Post = 3 months | Heart failure providers: the heart failure dashboard listed patients with heart failure and described their clinical profiles using a color-coded system. | 1. Automatically identified heart failure admissions and assessed the characteristics of the disease and medical therapy in real time. 2. Dashboard created within the EHR, linking directly to each patient's EHR. |
| Franklin et al (2017) 29 and the United States | CSS | Training and academic hospitals, community hospitals, private hospitals; n = 19 (PAs) | Pre = 400 hours (at least 75 hours of observation per facility) | Clinicians, medical directors, ED directors, charge nurses: the dashboard visualizations increased situation awareness and provided a real-time snapshot of the department and individual stages of care. | 1. Anecdotal evidence. 2. Adoption of machine learning algorithms into the EHR-based EWS; real-time data support clinical decision-making and enable rapid intervention in workflow. |
| Ye et al (2019) 28 and the United States | RPCS | Acute care, two Berkshire health; n = 54,246 (n = 42,484 retrospective cohort; n = 11,762 prospective cohort) | Pre = 2 years; Post = 10 months | Clinicians: the EWS provided real-time alerts/notifications when a patient's condition met predefined prediction thresholds and risk scores. The algorithms provided real-time early warning of mortality risk in a health system with a preexisting EHR. | 1. Evaluated the machine learning algorithms by identifying high-risk patients and alerting staff to patients at high risk of mortality. 2. Early warning system embedded in the existing EHR system. |
| Yoo et al (2018) 34 and Korea | CSS | Tertiary teaching hospital; n = 2,000 (beds); n = 79,000 (annual visits); n = 52 (PAs) | Pre = 5 years; Post = 41 days | Physicians, nurses: the dashboard visualized the geographical layout of the department and patient locations, provided patient-level alerts for workflow prioritization, and provided real-time summary data about ED performance/state. | 1. Survey questionnaire including: •System usability scale (SUS); •Situational awareness index (SAI), based on the situation awareness rating technique (SART). 2. A separate electronic dashboard outside the EHR was developed to visualize ED performance status on wall-mounted monitors and PCs. A separate dashboard for patients and families was implemented using wall-mounted monitors, kiosks, and tablets. |
| Schall et al (2017) 27 and the United States | CSS | Medical center, 11 inpatient units; n = 7 (PAs; n = 6 nurses, n = 1 physician) | N/A | Nurse and physician: provided at-a-glance visibility of timely and accurate critical patient safety indicator information. | 1. The dashboard reduced error rates on task-based evaluation as it avoids visual “clutter” compared with conventional EHR displays. 2. Dashboard embedded into the preexisting EHR. |
| Fuller et al (2020) 24 and the United States | CSS | Academic medical center, 30 inpatient units; n = 24 (PAs; attending physicians, residents, physician assistants) | Post = 12 months | Physicians, physician assistants: the dashboard, accessed directly within the EHR, obtained real-time information about opioid management and displayed it using color coding. The dashboard alerted clinicians to pain management issues and patient risks. | 1. Task-based usability evaluation using a standardized sheet to record tasks in the EHR and the dashboard separately, with audio comments and computer screen activity recorded using Morae; survey questionnaire using the NASA raw task load index (RTLX) to evaluate cognitive workload. 2. Dashboard application launched directly from a link within the EHR. |
| Merkel et al (2020) 32 and the United States | CS | Acute care and critical care, statewide; n = N/A | Pre = 19 days; Post = ongoing | Emergency operations committee (EOC) command center operators: allowed each individual health system to track hospital resources in near real time. | 1. No outcome measure was reported. 2. Near-real-time data populated a web application independent of the EHR. |
| Bersani et al (2020) 21 and the United States | SW | Academic, acute-care hospital; n = 413 unique logins; n = 53 survey participants | Post (random cohort) = 18 months | Prescribers, nurses, patients, caregivers: dashboard accessed directly via the EHR, displaying consolidated EHR information with color coding and giving access to critical patient safety indicator information for multiple patients. | 1. Dashboard usage (number of logins) and usability (Health-ITUES). 2. Real-time patient safety data dashboard integrated into an EHR, with a color grading system. |
| Ibrahim et al (2020) 30 and the United Arab Emirates | CS | Tertiary academic hospital; 8,000 admitted COVID-19 patients | Pre = 30 days; Post = 30 days | The rounding team (nurse, attending resident, resident, intern): dashboard created to show the clinical severity of COVID-19 patients and patient location using up-to-date, color-coded displays on a single screen. | 1. Percentage of patients requiring urgent intubation or cardiac resuscitation on the general medical ward. 2. A separate electronic dashboard external to the EHR. |
| Kurtzman et al (2017) 31 and the United States | MM | University-owned teaching hospital; n = 80 residents; n = 23 residents (focus group) | Post = 6 months | Internal medicine residents/trainees: dashboard visualizations increased resident-specific rates of routine laboratory orders in real time. | 1. Dashboard utilization using e-mail read receipts and web-based tracking. 2. A separate electronic dashboard external to the EHR was developed to visualize routine laboratory tests. |
| Paulson et al (2020) 26 and the United States | CSS | Twenty-one hospital sites (3,922 inpatient beds); n = unclear | Unclear | RRT, palliative care teams, virtual quality team (VQT) nurse: EWS and advance alert monitor (AAM) dashboard providing near-real-time notification when a patient meets predefined thresholds and risk scores. | 1. Number of alerts triggered and percentage activating a call from VQT RN to RRT RN; rates of nursing/physician documentation. 2. EWS and AAM dashboard embedded directly into the existing EHR. Deployed the AAM program in 19 more hospitals (two pilots). Developed a governance structure, clinical workflows, palliative care workflows, and documentation standards. |
| Staib et al (2017) 33 and Australia | CS | Tertiary hospital; n = N/A | N/A | Physicians, nurses: ED-inpatient interface (Edii) dashboard to manage patient transfers from the ED to inpatient hospital services. | 1. ED length of stay and mortality rates. 2. A separate electronic dashboard outside the EHR was developed and displayed on mounted monitors and PCs. |

Abbreviations: adm (s), admission (s); COVID-19, novel coronavirus disease 2019; CS, case study; CSS, cross-sectional study; ED, emergency department; EHR, electronic health record; EWS, early warning system; ICU, intensive care unit; MM, mixed methods; N/A, not available; PA (s), participant (s); PC, palliative care; PCS, prospective cohort study; PSLL, Patient Safety Learning Laboratory; RCS, retrospective cohort study; RMDS, repeated measures design study; RPCS, retrospective and prospective cohort study; SW, stepped wedge study.

The prior reviews identify 34 challenges to dashboard implementation (RQ-1), spread reasonably evenly across the three-horizon model categories of people (12), information (8), and technology (9), with a further five in the process category (refer to Supplementary Table S1 , available in the online version). This may reflect the very wide-ranging organizational impacts of dashboard implementation. The most common challenge identified was the high financial and human resource cost. 12 13 18 None of the reviews prioritized or indicated the scale of each challenge, making it difficult to ascertain where the major financial and resource burden lies. Most of the challenges (21) were associated with horizon two activities, that is, implementation activities related to the extraction of data from the EHR and their presentation to clinicians within a digital dashboard. By contrast, a little over half as many (12) were associated with horizon three activities, that is, implementation activities associated with reengineering clinical care models to align with LHS practices.

With respect to RQ-2, Supplementary Table S2 (available in the online version) lists eight approaches to overcoming some of the challenges identified. All of the approaches were sourced from Khairat et al, 15 and of the eight identified, five related directly to the design of the dashboard, leaving just three related to broader implementation processes. Given the large number of implementation challenges identified across the seven reviews (i.e., 34), it was surprising to find so little research reporting on best practice for clinical dashboard implementation. This analysis also highlights that most of the best practice approaches identified related to improving the design of the dashboard (five of eight), a horizon-two activity, and only a few related to the people-oriented issues of horizon three, for example, helping clinicians to create new models of care. These shortfalls present important research gaps, which our review examines further.

The consolidated findings from the prior reviews for RQ-3 are listed in Supplementary Tables S3 and S4 (available in the online version). With respect to the impact of dashboards on care, overall, Dowding et al found mixed evidence. They concluded that the implementation of clinical and quality dashboards “can improve adherence to quality guidelines and may help improve patient outcomes.” 14 Drawing on the review papers, we analyzed the evaluation of clinical dashboards and the assessment of outcomes in more detail.

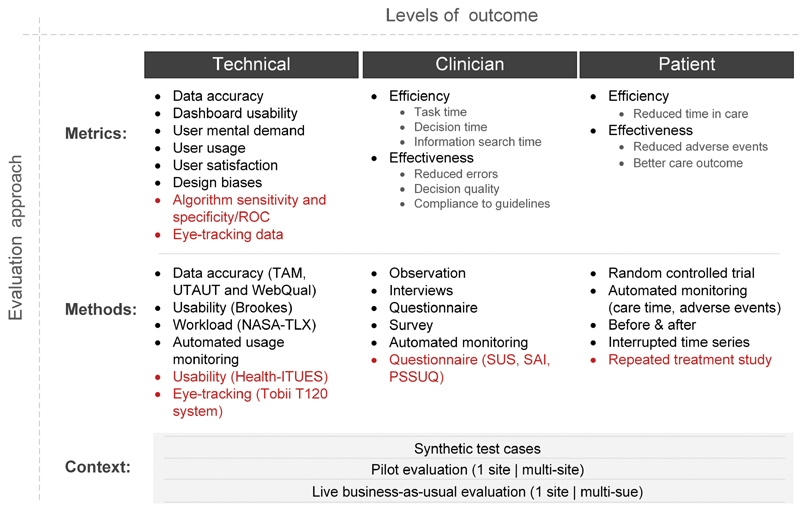

Four reviews identified 30 methods of assessing dashboard implementation, and three reviews identified 17 different types of impact. Taken together, we have grouped the wide range of evaluation metrics, methods, and contexts (together called an evaluation approach) by the level at which the outcome is seen, that is, the technical level, the clinician (user) level, or the patient level ( Fig. 2 ).

Fig. 2.

Framework to identify the different evaluation approaches (metrics, methods, and contexts) for each level of implementation outcome (technical, clinical, and patient outcomes). Health-ITUES, Health–Information Technology Usability Evaluation Scale; NASA-TLX, National Aeronautics and Space Administration-Task Load Index; PSSUQ, poststudy system usability questionnaire; ROC, receiver operating characteristics; SAI, situational awareness index; SUS, system usability scale; TAM, technology acceptance model; UTAUT, unified theory of acceptance and use of technology; WebQual, web site quality instrument.

Fig. 3.

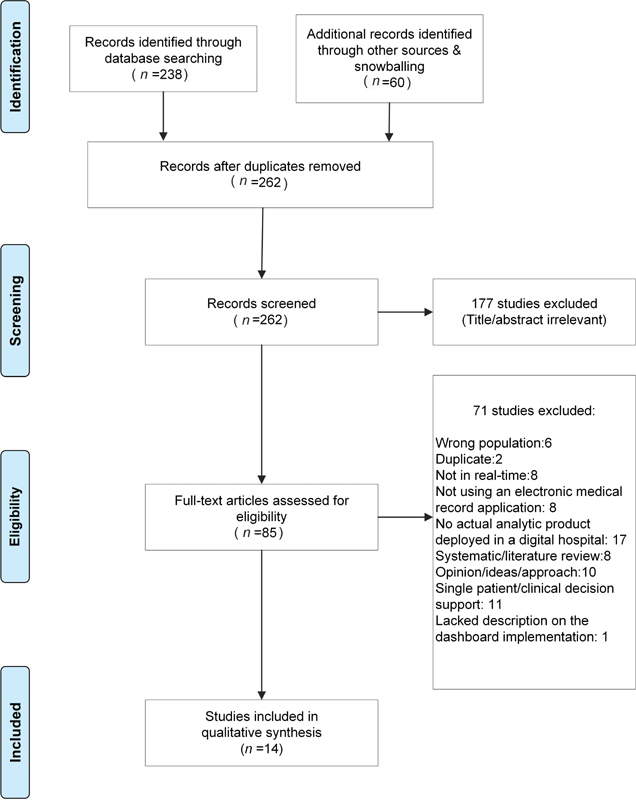

The Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) flowchart for study selection.

The framework highlights that upstream metrics at the technical level can affect clinical outcomes. For example, poor dashboard usability may degrade the quality of clinician decisions, which may in turn lead to more adverse events for patients.

The proposed framework serves two purposes. First, it provides a means of positioning each dashboard implementation study by its evaluation approach (i.e., metrics, methods, and context) at each level of outcome (i.e., technical, clinician, and patient). All of the reviews commented on the wide-ranging, heterogeneous nature of study assessment approaches and outcomes, so this framework helps to group studies along similar assessment lines. Second, it can help organizations setting out on a dashboard implementation journey to clarify the evaluation approach to consider for each targeted level of outcome.

Methods

Data Sources and Search Strategy

A systematic review compliant with the Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) 16 was conducted ( Supplementary Table S5 , available in the online version). Literature searches were performed in three databases (Embase, PubMed, and Web of Science). A search strategy comprising keywords and Medical Subject Headings (MeSH) terms related to clinical analytics tools (excluding clinical decision support systems for individual patient care) implemented at digital hospitals was developed and reviewed by a librarian ( Supplementary Table S6 , available in the online version). The search was performed during March 2021. Digital hospitals were defined as any hospital utilizing an EHR. Due to the rapid development of the technologies involved, the search period was limited to publications from the past 6 years (March 2015–March 2021). Backward citation searches and snowballing techniques were undertaken on included articles.

Study Selection: Eligibility Criteria

Studies were selected according to the criteria outlined in Table 2 .

Table 1. Comparison of the inclusion criteria between the current review and prior review studies.

| Meta study | Care setting | Data timeliness | Data source | Dashboard type | Implementation state |
|---|---|---|---|---|---|
| Wilbanks and Langford 18 | Acute | Any | EHR | Any | Any |
| Dowding et al 14 | Any | Any | Any | Clinical and quality | Implemented |
| West et al 17 | Any | Any | EHR | Visualization | Any |
| Maktoobi and Melchiori et al 16 | Any | Any | Any | Clinical | Any |
| Buttigieg et al 13 | Acute | Any | Any | Performance | Any |
| Khairat et al 15 | Any | Any | Any | Visualization | Any |
| Auliya et al 12 | Any | Any | Any | Any | Any |
| Our study | Acute | Real time | EHR | Clinical | Implemented |

Abbreviation: EHR, electronic health record.

Screening and Data Extraction

A two-stage screening system was utilized. During the first stage, two reviewers (J.D.P. and C.M.S.) screened all articles by title and abstract for relevance to the research questions.

In the second stage, two independent reviewers (H.C.L. and A.K.R.) performed full-text review. Data extraction was then performed by three reviewers (H.C.L., J.M., and A.V.D.V.) within Covidence systematic review software. 19 Each of the included articles was assessed independently against the inclusion criteria, with consensus obtained after deliberation between reviewers.

The following data were extracted from all final included studies: (1) study design, (2) country, (3) health care setting, (4) target population, (5) sample size, (6) duration/time, (7) target user, (8) interventions, (9) clinical care outcome measures, (10) clinical process outcome measures, (11) algorithm sensitivity/specificity, (12) anecdotal evidence, (13) implementation, (14) main findings, and (15) evidence for research questions RQ-1 to RQ-3.
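Item (11) covers algorithm sensitivity and specificity, which studies of early warning systems can report by comparing alerts against observed patient outcomes. As a reminder of how the two figures are derived, here is a minimal Python sketch that assumes alert status and outcome status have already been cross-tabulated into a 2 × 2 confusion matrix. The `ConfusionCounts` type and the counts below are hypothetical and are not drawn from any included study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Alert-versus-outcome counts (hypothetical illustration)."""
    true_pos: int   # alert raised and the adverse event occurred
    false_pos: int  # alert raised but no event occurred
    true_neg: int   # no alert and no event
    false_neg: int  # no alert but the event occurred


def sensitivity(c: ConfusionCounts) -> float:
    """Share of actual events that triggered an alert: TP / (TP + FN)."""
    return c.true_pos / (c.true_pos + c.false_neg)


def specificity(c: ConfusionCounts) -> float:
    """Share of non-events that did not trigger an alert: TN / (TN + FP)."""
    return c.true_neg / (c.true_neg + c.false_pos)


# Hypothetical counts for illustration only.
counts = ConfusionCounts(true_pos=40, false_pos=60, true_neg=890, false_neg=10)
print(f"sensitivity={sensitivity(counts):.2f}, specificity={specificity(counts):.3f}")
# prints "sensitivity=0.80, specificity=0.937"
```

In this made-up example, 60 of the 100 alerts are false positives even though specificity is high, which is one reason the included studies paid attention to alert fatigue.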

Quality Assessment

Quality assessment was undertaken independently by two reviewers (J.A.A. and H.C.L.) utilizing the Quality Assessment for Studies with Diverse Designs (QATSDD) tool 20 ( Supplementary Table S7 , available in the online version). Consensus was obtained after deliberation between reviewers.

Development of a Conceptual Framework for Implementation of Near-Real-Time Clinical Analytics in Digital Hospitals

We synthesized the research question findings from the prior reviews and this review and mapped these across the three horizons of digital health transformation 6 to construct a conceptual framework designed to support health care organizations in planning the implementation of new clinical dashboards.

Results

Study Selection

The search strategy retrieved 238 studies from three databases (Embase, PubMed, and Web of Science; Supplementary Table S6 [available in the online version]), and the snowballing citation search retrieved 60 studies. Fig. 3 outlines the screening process, which resulted in 14 articles.

Study Demographics

The characteristics of the study samples and outcomes are summarized in Table 3 .

Table 2. Inclusion criteria for the present review.

| Criterion | Inclusion criteria |
|---|---|
| Population | •Adult population (≥18 years of age) •Emergency department or inpatient digital hospital setting |
| Intervention of interest | •Implementation of a real-time/near-real-time analytics product based upon aggregated data within a hospital using an EHR. Excludes single-patient-view-only dashboards |
| Study design | •All study designs |
| Publication date | •March 2015–March 2021 |
| Language | •English |

Abbreviation: EHR, electronic health record.

With respect to dashboard positioning, eight studies described the implementation of real-time analytics tools within an existing EHR. 21 22 23 24 25 26 27 28 The remaining six studies 29 30 31 32 33 34 implemented the dashboard outside an existing EHR, including displaying it on a separate monitor 29 33 34 or hosting it on an independent web application. 32

Research Question Findings

RQ-1 Findings: Dashboard Implementation Challenges

Papers meeting our inclusion criteria identified 37 implementation challenges, which are listed in Supplementary Table S8 (available in the online version). All but two papers 23 28 contributed challenges (average = 3, range = 1–8), indicating that most projects experienced problems implementing digital dashboards. The most widely reported challenges were (1) lag times between data becoming available in the EHR and their loading into the dashboard 21 24 25 29 30 ; and (2) designing the dashboard to support the desired amount and complexity of data. 25 27 29
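Lag between the EHR and the dashboard is easier to manage once it is measured. The sketch below is a hypothetical illustration, not a method reported by the included studies: it assumes each dashboard view records the timestamp of the EHR extract it displays, and it flags the view as stale when the gap exceeds a tolerance. The 15-minute `MAX_LAG` value and the function names are assumptions chosen only for the example.

```python
from datetime import datetime, timedelta

# Hypothetical tolerance for how old displayed data may be before it is flagged.
MAX_LAG = timedelta(minutes=15)


def data_lag(extract_time: datetime, displayed_at: datetime) -> timedelta:
    """Age of the EHR extract at the moment the dashboard displays it."""
    return displayed_at - extract_time


def is_stale(extract_time: datetime, displayed_at: datetime,
             max_lag: timedelta = MAX_LAG) -> bool:
    """True when the dashboard shows data older than the tolerance allows."""
    return data_lag(extract_time, displayed_at) > max_lag


extract = datetime(2021, 3, 1, 8, 0)   # when the EHR data were extracted
shown = datetime(2021, 3, 1, 8, 40)    # when a clinician opened the dashboard
print(data_lag(extract, shown))        # 0:40:00
print(is_stale(extract, shown))        # True
```

Surfacing the lag on screen (for example, a "data as of" time) is one simple way to let users judge whether the information is current enough for the decision at hand.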

The challenges have been classified ( Supplementary Table S8 , available in the online version) into a matrix of the relevant digital health horizon 6 and category (people, process, and information and technology [IT]).

Forty-three percent of challenges (16/37) were related to technology problems, indicating that health organizations are still grappling with the technology required to implement dashboards. A further 30% (11/37) were people related, including clinician resistance, 25 26 lack of clinician time to use the dashboard, 31 and concern over IT resources. 32 Most of the remaining challenges (22%, 8/37) were process related, including the wide and diverse array of implementation environments 21 29 and disagreement over clinical ownership of dashboard elements. 21 Few information-related challenges arose (5%, 2/37) in the reviewed studies.
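The percentages above follow directly from the category counts. A minimal Python sketch of that arithmetic is shown below. The `category_shares` helper is our own illustration; applying it to the prior reviews' counts (people 12, information 8, technology 9, process 5, as reported in the analysis of prior work) yields shares that the prior reviews did not themselves report.

```python
from collections import Counter


def category_shares(counts: Counter) -> dict[str, int]:
    """Percentage of all challenges falling in each category, rounded to whole percent."""
    total = sum(counts.values())
    return {category: round(100 * n / total) for category, n in counts.items()}


# Category counts as reported in this review and in the prior reviews.
this_review = Counter(technology=16, people=11, process=8, information=2)
prior_reviews = Counter(people=12, information=8, technology=9, process=5)

print(sum(this_review.values()), category_shares(this_review))
print(sum(prior_reviews.values()), category_shares(prior_reviews))
```

Set side by side, the two distributions show the shift discussed later: information-related challenges fall from roughly a quarter of the prior reviews' total (8 of 34) to 2 of 37 in this review.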

RQ-2 Findings: Methods to Overcome Challenges

Our review identified 64 methods that were used or proposed by health organizations to overcome dashboard implementation issues.

In relation to dashboard design and development, the following methods were commonly used: (1) prototyping, including interactive prototyping, 25 29 34 (2) human-centered design, 21 32 (3) multidisciplinary design panels, 22 27 30 and (4) designing for future change. 30 32 33 In relation to implementation, the most common practice was to utilize a multidisciplinary team. 21 30 33 34 Many of these same authors recommended a staged or iterative dashboard release process. 21 29 30 33 Pilot implementations were also utilized, 21 22 as was addressing cultural and workflow issues early in the project. 21 31 33

Many studies reported on the desired content of the dashboard. Due to known concerns around alert fatigue, 35 alert functionality and management were popular topics, 21 23 26 especially among papers that reported on patient warning systems. Proposed dashboard content, 22 27 functionality, 27 29 and color considerations 21 27 34 were also discussed. For example, color-coded systems were used to aid visual display (e.g., to indicate risk severity), 21 22 23 24 25 27 29 32 34 and symbols were often used to flag patient dispositions. 27 29 34 Multilevel displays were common, for example, department- and patient-level views, 21 24 25 29 while one study expanded further to incorporate geographical location, health system, and hospital unit level views for dashboard implementation at a statewide level. 32 Importance was placed on customizable views 23 27 29 and on filters to limit the results displayed, for example, by patient characteristics, physical location, attending physician, or bed type. 29 32 Sorting features (e.g., by level of risk) 23 and the ability to hover over an item to display additional clinical detail 22 29 were also discussed. Others built interactive check boxes enabling users to indicate when an item had been actioned. 21 25
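Several of the features described above (color-coded risk, filters, risk-ordered sorting, and check boxes for actioned items) can be combined in a single list view. The Python sketch below is a hypothetical illustration only: the `PatientRow` fields, the 0–1 risk score, and the 0.4/0.7 color cut-offs are assumptions and do not reproduce any included study's dashboard.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PatientRow:
    """One row of a hypothetical unit-level dashboard; field names are illustrative."""
    mrn: str
    unit: str
    attending: str
    bed_type: str
    risk_score: float       # hypothetical 0-1 score from an upstream risk model
    actioned: bool = False  # check box marking that the item has been addressed


def risk_color(score: float) -> str:
    """Map a risk score to a traffic-light band using hypothetical cut-offs."""
    if score >= 0.7:
        return "red"
    if score >= 0.4:
        return "amber"
    return "green"


def dashboard_view(rows: list[PatientRow], unit: Optional[str] = None,
                   attending: Optional[str] = None,
                   bed_type: Optional[str] = None) -> list[PatientRow]:
    """Keep rows matching every selected filter, then sort by descending risk."""
    selected = [
        r for r in rows
        if (unit is None or r.unit == unit)
        and (attending is None or r.attending == attending)
        and (bed_type is None or r.bed_type == bed_type)
    ]
    return sorted(selected, key=lambda r: r.risk_score, reverse=True)


rows = [
    PatientRow("A1", "4West", "Dr Lee", "telemetry", 0.82),
    PatientRow("A2", "4West", "Dr Shah", "standard", 0.35),
    PatientRow("A3", "5East", "Dr Lee", "standard", 0.55),
    PatientRow("A4", "4West", "Dr Lee", "standard", 0.61, actioned=True),
]

# Unit-level view: highest-risk patients first, each with its color band.
for r in dashboard_view(rows, unit="4West"):
    box = "[x]" if r.actioned else "[ ]"
    print(box, r.mrn, r.attending, r.risk_score, risk_color(r.risk_score))
```

Keeping filtering, sorting, and color mapping as separate functions makes it easier to offer the customizable views the studies called for without changing the underlying data.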

We considered the findings in the context of the three-horizon model, but because many of the methods identified did not sit naturally within a single horizon or category (people, process, and IT), we did not categorize them. For example, utilizing a multidisciplinary team for implementation will affect challenges in all horizons and most categories.

RQ-3 Findings: Dashboard Evaluation Methods and Dashboard Impact

Dashboards were evaluated using methods summarized in Fig. 2 (red font indicates additional methods identified within our review).
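The System Usability Scale (SUS), one of the instruments listed in Fig. 2 and used by Yoo et al, 34 produces a 0–100 score from ten 1–5 Likert items using a standard scoring rule. A minimal Python sketch of that rule follows; the example responses are hypothetical.

```python
def sus_score(responses: list[int]) -> float:
    """System Usability Scale score (0-100) from ten responses on a 1-5 scale.

    Odd-numbered items are positively worded and contribute (response - 1);
    even-numbered items are negatively worded and contribute (5 - response).
    The summed contributions (0-40) are multiplied by 2.5.
    """
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly ten responses on a 1-5 scale")
    total = 0
    for item_number, r in enumerate(responses, start=1):
        total += (r - 1) if item_number % 2 == 1 else (5 - r)
    return total * 2.5


# Hypothetical single-respondent answers, not data from any included study.
print(sus_score([4, 2, 5, 1, 4, 2, 5, 2, 4, 1]))  # 85.0
```

A study would typically average such scores across respondents; the per-respondent calculation is the same regardless of the dashboard being evaluated.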

Dashboard impacts are outlined in Supplementary Table S9 (available in the online version). Only four included studies assessed patient health outcomes. 23 27 30 33 Six studies measured clinical process outcomes. 21 24 25 26 31 34

Discussion and Proposed Dashboard Implementation Conceptual Framework

In this section, we discuss how the current review compares with the prior reviews analyzed in “Analysis of Prior Work.” Then, we propose a conceptual framework for health care organizations planning to implement a real-time digital dashboard.

Integrating Prior Work with this Review

RQ-1: Challenges to Dashboard Implementation

Challenges identified in the prior work and in this review were grouped into challenge areas by horizon and category (people, process, and IT), as shown in Table 4. The table reveals similarities and differences between the challenges identified in the prior work and those identified in our study.

Overall, the prior research and this review each identified challenges within 26 challenge areas, of which 11 (42%) were common to both. This suggests that the challenges may have changed or shifted over time. The difference could reflect the age of the studies included in the reviews, differences in review purpose, or simply what has been reported. The prior research covered studies from 1996 to 2017, whereas our review covered studies from 2015 onward. One of the greatest differences between our study and the prior work lies in the challenges identified under the Information category in horizon two (C9–C17). Prior reviews identified seven distinct areas of information challenges, of which only one (C14) overlaps with our review. Conversely, our review identified just three challenge areas in the same Information category.

This may reflect that, in older projects, many organizations were still implementing EHR systems and were therefore struggling with more rudimentary challenges related to the size and complexity of EHR data. Now that most health care organizations have EHR systems, 36 much more expertise exists for handling EHR data. The challenges of recent years may therefore have shifted toward the wider-scale implementation of dashboards and the problems that arise beyond the pilot stage. Evidence for this includes the challenges of implementing within different environments (C6), supporting diverse users, workflows, and user-interface screens (C28), and resolving new clinical model responsibilities (C39). Finally, our work reports many more challenges related to the design of the dashboard (C18–C19) and of alerts (C40–C41).

Although our review focused on real-time EHR data, which was not a prerequisite for the prior reviews, we did not see an increase in challenges related to real-time data. The common real-time problem of information lagging between the EHR and the dashboard was present in both bodies of work (C21).

RQ-2: Methods Identified for Overcoming Challenges

The prior work yielded very few methods (eight) for overcoming the challenges identified in the same review papers. This was a limitation of the prior work that this review sought to address. It may also be posited that, in recent years, more best-practice methods have emerged and been reported in the literature. In this review, over 60 methods were identified. All of the methods, both prior and current, have been grouped into method areas and assigned to one of six implementation facets, as shown in Table 5.

Table 4. Consolidated view of RQ-1: challenges to implementation.

| RQ-1 Consolidation of challenges | Review source | ||

|---|---|---|---|

| Horizon 2: digital dashboard delivery | Prior | Current | |

| People | C1: Training & training time | [7,8] | [7] |

| C2: Resourcing arrangements | [12] | [8] | |

| Process | C3: Financial and resource costs | [14] | [8] |

| C4: Organizational culture | [16] | ||

| C5: Lack of clinical guidelines/benchmarks | [17] | ||

| C6: Changing implementation environment | [15,28,29] | ||

| C7: Implementation time constraints | [17] | ||

| C8: Difficult to assess | [35] | ||

| Information | C9: Quantity of data | [18,19] | |

| C10: Complexity of data | [19] | ||

| C11: Uncertainty of data | [20] | ||

| C12: Quality of data | [21] | ||

| C13: Missing required data | [36] | ||

| C14: Normalization/regularization of data | [22,25] | [20] | |

| C15: Additional manual data entry | [23] | ||

| C16: Lack of nomenclature standardization | [24] | ||

| C17: Need for bioinformatician to extensively code | [21] | ||

| Technology | C18: Getting and presenting temporal data | [26] | [25,26,2] |

| C19: Presenting so much data and different types | [27] | [6,23,27,32,34] | |

| C20: Linking dashboard to EHR data | [28] | ||

| C21: Making data real-time | [29,32] | [22,37] | |

| C22: Dashboard reliability/connectivity | [30] | ||

| C23: Integration of heterogeneous data | [31] | [20,24] | |

| C24: Sourcing patient outcome information | [33] | ||

| C25: Handling rare events/small data sets | [34] | ||

| C26: Clinicians having enough info on dashboard | [5] | ||

| C27: Tech teething problems turn off users | [13] | ||

| C28: Support diverse users/workflows/screens | [28,29,31,33] | ||

| C29: Support change to environment | [30] | ||

| Horizon 3: clinical model | |||

| People | C30: Negative impact of dashboard on clinician | [1-5] | [4] |

| C31: Negative impact of dashboard on patient | [1-5] | ||

| C32: Clinician resistance | [6,9] | [1,11] | |

| C33: Resource concerns | [12] | ||

| C34: Integrating clinician thinking with dashboard | [3] | ||

| C35: Lack of clinician time | [9] | ||

| C36: Understanding variability of data | [26] | ||

| Process | C37: Ethical concerns over data usage | [13] | |

| C38: Different needs in different clinical settings | [15] | [2,28] | |

| C39: Clinical responsibility/disagreement problems | [12,14,16] | ||

| C40: Patient rescue/alert trade off | [18] | ||

| C41: Earlier alert is good, but clinicians see no benefit | [19] | ||

Abbreviations: EHR, electronic health record; RQ, research question.

Note: Challenges derived from prior reviews and this review were grouped into challenge areas. The source raw challenges are identified within square brackets and relate to the numbered challenges listed in Supplementary Table S1 (prior work) and Supplementary Table S8 (this review).

Table 5. Consolidated view of RQ-2: methods to overcome implementation challenges.

| Review source | |||

|---|---|---|---|

| Implementation facet | RQ-2: Summary of solution methods | Prior | Current |

| Dashboard design method | M1: Human centered design | [1] | [32,38] |

| M2: (Interactive) prototyping | [3] | [1,15,16,21] | |

| M3: Multidisciplinary/panel design team | [7,24,58] | ||

| M4: Design for change and re-use | [58,63,36] | ||

| M5: Other design method | [14,37,59,61] | ||

| Implementation method | M6: Interdisciplinary implementation approach | [2] | [19,20,45,57,62] |

| M7: Pilot implementation | [6] | [5,48] | |

| M8: Stakeholder engagement | [43,44,46] | ||

| M9: Staged (iterative) release of dashboard | [17,48,58,60] | ||

| M10: Address workflow/cultural issues upfront | [49,51,64] | ||

| M11: Assess feedback/barriers early and rectify | [41] | ||

| M12: Design for early wins for users | [47] | ||

| M13: Usage feedback (competitive) reports | [42,58,59] | ||

| M14: Other specific implementation methods | [3,9,30,34,35,52,56,62] | ||

| M15: Evaluation methods | [13,16] | ||

| Other dashboard considerations | M16: Suggested dashboard content | [5-8] | [8,11,29] |

| M17: Suggested dashboard functionality | [12,26,28] | ||

| M18: Alert considerations | [2,4,39,53,54,56] | ||

| M19: Color considerations | [22,29,39] | ||

| M20: Metrics considered | [25,27] | ||

| M21: Dashboard access | [31] | ||

| Training | M22: Live training (at-the-elbow) | [18,40] | |

| M23: Other training approach | [40,50] | ||

| Resources and costs | M24: Personnel considerations | [4,10,35,53] | |

| M25: Method to reduce cost | [4] | ||

| Technology | M26: Technology | [33] | |

Abbreviation: RQ, research question.

Note: Methods from the prior research and this review were manually grouped into method areas. The source raw methods are identified within square brackets and relate to the numbered methods listed in Supplementary Table S2 (prior work) and Supplementary Table S10 (this review).

Most of the method areas (81%, 21/26) fall within just three implementation facets: (1) dashboard design methods, (2) implementation methods, and (3) other dashboard considerations. Except for a single method to reduce cost (M25), all of the methods mentioned in the prior work are also reported by studies in this review. In addition, a further 20 method areas were created to capture the wide variety of positive implementation methods identified in this review.

It is striking that none of the methods identified in Table 5 are specific to the health care sector. Well-accepted software implementation methodologies, such as prototyping, user-centered design, and iterative deployment, also apply to the health care domain. What is not explicit within the methods of Table 5 is the heightened risk that the health care domain presents: the introduction of a clinical dashboard can dramatically change the care outcomes of consumers. This risk is mitigated through robust testing and evaluation regimes.

It was unclear whether studies included in our review used implementation science principles to guide the translation of their clinical analytics tools into practice. Pragmatic implementation strategies underpinned by evidence-based implementation science are needed. Relevant examples include the integrated Promoting Action on Research Implementation in Health Services (i-PARIHS) framework 37 and the Consolidated Framework for Implementation Research (CFIR); 38 both have had numerous successful real-world applications in translating technological innovations into routine health care. 39 40

RQ-3: Methods Identified for Evaluating Dashboards and Their Impact on Patient Care

Compared with previous reviews, our review identified similar evaluation metrics and methods across the three categorized levels of dashboard impact (technical, clinician, and patient). The additional evaluations we noted were most commonly technical, for example, an emphasis on the sensitivity and specificity of algorithms embedded in dashboards. 22 28 Although such evaluations do not directly assess clinician and patient care outcomes, they can offer insight into factors that may affect widespread dashboard adoption. For example, missing provider-generated EHR data were cited as an issue for algorithm sensitivity, 22 which in turn affects the number of patients correctly identified for further care.
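The link between missing data and sensitivity can be illustrated with a short worked example. The Python below computes sensitivity, TP / (TP + FN), and specificity, TN / (TN + FP), for a hypothetical threshold rule on a single lab value, first with complete data and then with two values missing. The rule, the threshold of 400, and the patient values are invented for illustration and do not reproduce the algorithm validated in the cited study.

```python
from typing import Optional


def confusion_counts(truth: list[bool], flagged: list[bool]) -> tuple[int, int, int, int]:
    """Return (TP, FN, TN, FP) comparing algorithm flags with the reference standard."""
    pairs = list(zip(truth, flagged))
    tp = sum(1 for t, f in pairs if t and f)
    fn = sum(1 for t, f in pairs if t and not f)
    tn = sum(1 for t, f in pairs if not t and not f)
    fp = sum(1 for t, f in pairs if not t and f)
    return tp, fn, tn, fp


def sensitivity(tp: int, fn: int) -> float:
    # Share of true cases that the algorithm flags: TP / (TP + FN).
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    # Share of non-cases the algorithm correctly leaves unflagged: TN / (TN + FP).
    return tn / (tn + fp)


def threshold_rule(values: list[Optional[float]], threshold: float) -> list[bool]:
    # Hypothetical rule: flag when a lab value exceeds the threshold.
    # A missing value (None) can never trigger the flag.
    return [v is not None and v > threshold for v in values]


truth = [True, True, True, True, False, False]        # reference standard
complete = [510.0, 620.0, 410.0, 700.0, 80.0, 90.0]   # all values recorded
missing = [510.0, None, 410.0, None, 80.0, 90.0]      # two true cases lack the value

for label, values in (("complete", complete), ("missing", missing)):
    tp, fn, tn, fp = confusion_counts(truth, threshold_rule(values, 400.0))
    print(label, sensitivity(tp, fn), specificity(tn, fp))
```

With complete data both measures are 1.0; when two of the four true cases lack the value, sensitivity falls to 0.5 while specificity is unchanged, so fewer patients are identified for further care.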

No new clinician- or patient-focused metrics were identified, and only a limited number of new methodologies were discussed. This is likely because standard quantitative and qualitative research methods were employed, and because research focused on patient care outcomes is distinctly lacking. Given that the primary focus of health care centers is patient care, it is surprising to observe a continued lack of research evaluating clinical care outcomes in relation to dashboard implementation. As a result, our review was unable to extend the conclusion drawn by Dowding et al in 2015 that clinical and quality dashboards “may help improve patient outcomes.” 14 It is unclear why this gap persists in the literature. Questions about clinical outcomes need to be addressed to guide future dashboard design and implementation in a safe and judicious manner. Notably, certain studies in our review that assessed clinical process outcomes alluded to future work focused on measuring clinical outcomes. 25 26 28 29

Real-Time Clinical Dashboard Implementation Conceptual Framework

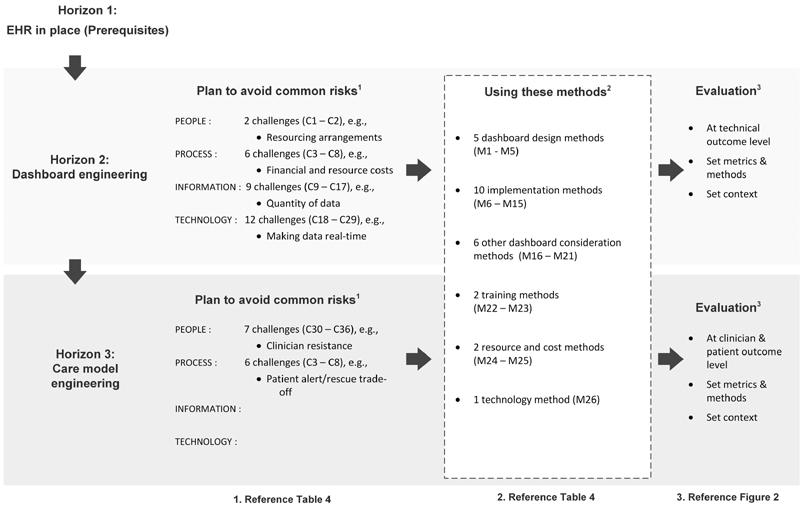

In this review, we have drawn together evidence from prior reviews and from our own review of the recent literature on health care dashboard implementation. In this section, we synthesize this evidence into a conceptual framework (Fig. 4).

Fig. 4.

Proposed real-time clinical dashboard implementation conceptual framework. EHR, electronic health record.

The framework utilizes the three-horizon model 6 to propose an iterative evolution toward an LHS. For each horizon, the framework user can identify pertinent challenges that their organization may face, the methods they may use to overcome challenges, and the evaluation approach that is relevant to their implementation.

Implications for Practice

The purpose of the framework is to provide health care organizations with a step-wise approach to planning for the implementation risks of a new clinical dashboard. Specifically, it helps an organization identify key risks and decide which implementation and evaluation methods to use to mitigate them. The framework is not definitive, but it captures practical experience from health care organizations that can augment more formal, generic software implementation methodologies, as discussed in Section “RQ-2: Methods Identified for Overcoming Challenges.” In this section, we highlight how the framework can be used in practice.

Horizon One: Building Digital Foundations

The EHR system must be operational and EHR data must be accessible for reuse. Some of the information problems appearing in horizon two (Table 4) may stem from EHR data issues, for example, clinical workflows, the quality of data (C12), missing data (C13), and a lack of nomenclature standardization (C16). In particular, clinical coding practices and the need for clinical bioinformaticians to extensively code data (C17) may impede new dashboard projects.

Horizon Two: Data and Analytics Products

For horizon-two work, the focus is on extracting data and presenting the digital dashboard to clinicians.

The key to clinical dashboard usability and usage is data recency. Some studies quoted a 5-minute data refresh, 23 25 32 but others indicated that the lag time in the initial load or refresh of data on the dashboard was a key challenge to uptake. 24 25 29 Additionally, studies found that issues occurring early on can hinder longer-term uptake of the dashboard, 21 so resolving these issues quickly is essential. Iterative design and implementation techniques can mitigate these risks. 21 25 29 30 34
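One way to make data recency visible to users is to compare each data feed's last successful refresh against a target interval. The Python below is a minimal sketch under that assumption: it uses the 5-minute refresh quoted above as the target, while the feed names, the banner wording, and the idea of surfacing lag in a status message are hypothetical choices rather than practices reported by the included studies.

```python
from datetime import datetime, timedelta

# Target taken from the 5-minute refresh quoted by some included studies.
REFRESH_TARGET = timedelta(minutes=5)


def stale_feeds(last_refresh: dict[str, datetime], now: datetime,
                target: timedelta = REFRESH_TARGET) -> dict[str, timedelta]:
    """Return each feed whose lag (now minus last successful refresh) exceeds the target."""
    return {feed: now - ts for feed, ts in last_refresh.items() if now - ts > target}


def freshness_banner(last_refresh: dict[str, datetime], now: datetime,
                     target: timedelta = REFRESH_TARGET) -> str:
    """Build a short status message telling users how old the displayed data is."""
    stale = stale_feeds(last_refresh, now, target)
    if not stale:
        return "All data current"
    worst_minutes = int(max(stale.values()).total_seconds() // 60)
    return f"Data delayed: {', '.join(sorted(stale))} (up to {worst_minutes} min old)"


now = datetime(2021, 6, 1, 12, 0)
last_refresh = {  # hypothetical feed names
    "vitals": datetime(2021, 6, 1, 11, 58),      # 2 min old
    "labs": datetime(2021, 6, 1, 11, 50),        # 10 min old
    "bed_status": datetime(2021, 6, 1, 11, 55),  # exactly 5 min old: not stale
}
print(freshness_banner(last_refresh, now))
```

Reporting which feed is late, rather than only that the dashboard is slow, may help implementation teams locate and resolve early lag problems quickly.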

Although this horizon emphasizes the technical aspect of presenting a new digital dashboard to clinicians, the evidence suggests that the implementation team needs to include clinicians, as well as technical staff, to design, 22 30 34 implement, and validate the dashboard. 21 26 29 33 34 This, combined with live training, such as at-the-elbow training, can reduce the risk of clinician resistance and poor usage. 21 29

Tactical methods, such as designing the dashboard for early wins 21 and attending to dashboard content, functionality, and alert considerations, 21 22 23 26 27 may alleviate concerns over dashboard design and alert fatigue. Promotion, clinician engagement, and review of work domain ontologies during the developmental stages may help to overcome issues surrounding user adoption as clinicians attempt to integrate these systems into clinical practice. 29 41 Provider education on the potential benefits of such tools may also help. For example, near-real-time analytics tools can gather and summarize information automatically from health information systems without requiring additional data input from clinicians.

Horizon Three: New Models of Care

For horizon-three work, the focus is on reengineering the clinical model to provide new levels of patient care. The framework user can review the key challenges associated with process and people risks (Table 4). The evaluation in this horizon should focus on clinician and patient outcomes (Fig. 2).

In horizon three, risk mitigation revolves around clinicians (people) and processes. Organizations can expect less supporting methodology in this horizon, as far fewer experiences have been reported.

Resourcing is an important concern in this horizon, in particular, having sufficient resources with the right skill mix. This begins with the clinicians: an important challenge to overcome early in clinical workflow design (e.g., within rounds) is providing sufficient time for clinicians to access and interact with the dashboard. 30 31 New resources may also be required. In one study, bioinformaticians were required to extensively code the desired data from the EHR and other databases. 22 Another resourcing challenge is the mix of IT and analytical skills and the ability to interpret data. 18 29 32 As organizations progress toward an LHS model, consideration needs to be given to adequately staffing and training people with the skills needed to extract, model, and analyze data effectively and to act on the insights created.

Although pilot studies may prove successful, 21 22 wider implementation of dashboards across different health care settings, sites, and environments can raise new risks and generate new challenges. 18 29 In particular, attention needs to be paid to the responsibilities of different clinical staff for dashboard indicators, notifications, and alerts. 21 Addressing workflow and cultural issues early in each care setting is one suggested method to mitigate these risks. 21 31 33

Many risks of dashboards have been identified, but many remain unclear, for example, the unintended consequences of introducing dashboards for both clinicians and patients. Many of these risks were raised by Dowding et al 14 and include increased clinician workload and cognitive load, as well as dashboard biases such as tunnel vision and measurement fixation. Such biases may lead to care prioritization issues or poor clinical decisions that could have a detrimental impact on patient care. Our review did not identify solutions to these challenges; however, the concerns are valid and require further research to help health care organizations design safe and effective dashboards. Thorough evaluation of clinician and patient outcomes is essential to catch these kinds of problems.

As health care organizations move toward an LHS model, more predictive dashboard data may be used to inform clinical decision-making. Deciding early on the alert strategy, that is, the trade-off between patient rescue and alert frequency, is a key decision. Paulson et al 26 describe a thorough approach to the use of alerts, snoozing techniques, and escalation procedures that was employed across 21 hospitals. Paulson et al also identified new problems that arise with successful predictive system implementations. As predictions of patient problems extend further ahead, clinicians may find themselves taking actions that mitigate patient problems before the problems arise. This is good news for the patient but can lead clinicians to question whether to act on the prediction. These kinds of LHS problems will arise more frequently as more organizations move to new predictive models of care. 42
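The trade-off between patient rescue and alert frequency can be sketched as a small alert policy. The Python below is a hypothetical illustration, not the approach described by Paulson et al: a lower alert threshold flags more patients (more potential rescues, more alerts), a clinician-initiated snooze suppresses repeat alerts for a fixed period, and a higher escalation threshold bypasses the snooze so that a deteriorating patient is not silenced. All threshold values and the 6-hour snooze period are assumptions.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class AlertPolicy:
    # Hypothetical values; each organization would tune these locally.
    alert_threshold: float = 0.5       # lower -> more rescues, but more alerts
    escalation_threshold: float = 0.8  # scores this high bypass any snooze
    snooze: timedelta = timedelta(hours=6)


@dataclass
class AlertManager:
    policy: AlertPolicy = field(default_factory=AlertPolicy)
    snoozed_until: dict[str, datetime] = field(default_factory=dict)

    def snooze_patient(self, patient_id: str, now: datetime) -> None:
        """Record that a clinician has acknowledged and snoozed this patient's alert."""
        self.snoozed_until[patient_id] = now + self.policy.snooze

    def evaluate(self, patient_id: str, score: float, now: datetime) -> str:
        """Classify a new risk score as 'none', 'alert', or 'escalate'."""
        if score >= self.policy.escalation_threshold:
            return "escalate"  # escalation overrides a snooze
        if score < self.policy.alert_threshold:
            return "none"
        until = self.snoozed_until.get(patient_id)
        if until is not None and now < until:
            return "none"  # suppressed while snoozed, to limit alert frequency
        return "alert"


manager = AlertManager()
t0 = datetime(2021, 6, 1, 8, 0)
print(manager.evaluate("p1", 0.6, t0))                       # alert
manager.snooze_patient("p1", t0)
print(manager.evaluate("p1", 0.6, t0 + timedelta(hours=1)))  # none (snoozed)
print(manager.evaluate("p1", 0.9, t0 + timedelta(hours=1)))  # escalate
```

Keeping the thresholds and snooze period in a separate policy object makes the alert strategy an explicit, reviewable decision rather than a value buried in code, which suits the early decision the text recommends.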

Finally, perhaps hidden within the mass of challenges and potential solutions are basic problems concerning the maintenance of accurate data within dashboards. In a true LHS, regular reviews and validations are necessary to ensure the data accuracy of near-real-time clinical analytics tools. The data, data types, and algorithms configured in such tools may evolve or change over time, depending on the needs of the organization, patients, and providers. Current manual review and validation processes are time-consuming and not sustainable in the long term. Research into the early automated detection of expected data changes is needed to proactively validate data integrity and update data views seamlessly.
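As a minimal sketch of what automated change detection might look like, assuming dashboard extracts arrive as rows of column-value pairs and that a previously validated extract serves as the baseline, the Python below profiles each column (presence, null rate, distinct text codes) and lists differences for human review. The column names, the 10% null-rate tolerance, and the choice of profile statistics are illustrative assumptions, not a method reported by the included studies.

```python
from typing import Any

Row = dict[str, Any]


def profile(rows: list[Row]) -> dict[str, dict[str, Any]]:
    """Summarize each column of an extract: null rate and distinct text codes."""
    columns: set[str] = set().union(*(r.keys() for r in rows))
    summary: dict[str, dict[str, Any]] = {}
    for col in columns:
        values = [r.get(col) for r in rows]
        summary[col] = {
            "null_rate": sum(v is None for v in values) / len(rows),
            "codes": {v for v in values if isinstance(v, str)},
        }
    return summary


def detect_changes(baseline: dict[str, dict[str, Any]],
                   current: dict[str, dict[str, Any]],
                   null_tolerance: float = 0.1) -> list[str]:
    """Compare a current profile with a validated baseline and list differences."""
    findings: list[str] = []
    for col in sorted(baseline.keys() - current.keys()):
        findings.append(f"column removed: {col}")
    for col in sorted(current.keys() - baseline.keys()):
        findings.append(f"column added: {col}")
    for col in sorted(baseline.keys() & current.keys()):
        before, after = baseline[col], current[col]
        if after["null_rate"] - before["null_rate"] > null_tolerance:
            findings.append(
                f"null rate rose in {col}: {before['null_rate']:.2f} -> {after['null_rate']:.2f}"
            )
        new_codes = after["codes"] - before["codes"]
        if new_codes:
            findings.append(f"new codes in {col}: {sorted(new_codes)}")
    return findings


# Hypothetical extracts: a new field appears, a lab value stops flowing,
# and a previously unseen ward code is introduced.
baseline_rows = [{"ward": "4A", "lab_value": 1.2}, {"ward": "4B", "lab_value": 2.0}]
current_rows = [
    {"ward": "4A", "lab_value": None, "new_field": 5},
    {"ward": "ICU", "lab_value": None, "new_field": 3},
]
for finding in detect_changes(profile(baseline_rows), profile(current_rows)):
    print(finding)
```

A check like this does not decide whether a change is correct; it only narrows manual review to the columns that differ from the last validated state.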

Limitations

Our search strategy yielded only 14 studies eligible for inclusion. Additionally, 11 of these studies originated from the United States, limiting the diversity and potential applicability of the conceptual framework to international health systems. Because of the low study numbers, no exclusion criteria were placed on study design. As a result, not all included studies were assessed as high quality, and readers should be mindful of those that lacked a robust study design (Supplementary Table S7, available in the online version). Given that only four studies analyzed the impact of their near-real-time clinical analytics tools on patient care outcomes, our conceptual framework offers insight into the methods and interventions employed by others to date but requires elaboration as further research is undertaken to understand the consequences of these key themes for clinical care.

Conclusion

In this study, we analyzed prior reviews and conducted our own systematic review of literature related to the implementation of near-real-time clinical analytic tools, usually referred to as digital dashboards. We focused the review on literature from the past 5 years and extracted key information relating to the implementation challenges faced by organizations, the methods they used to overcome these challenges, and the methods used to evaluate the dashboards and their impact. This information was compiled in the context of the three-horizon model, which outlines a pathway for organizations to move to an LHS.

From the prior research and our review, we identified 71 implementation challenges and 72 methods to overcome these challenges. We also identified a range of metrics and approaches used to evaluate dashboards and their impact on clinicians and patients. Overall, very few studies evaluated their dashboards using patient outcomes; the benefit of such dashboards therefore remains unclear and is an important direction for future research.

Using the evidence extracted from the studies, we formulated a framework to identify the challenges, methods, and evaluation approaches relevant to a health care organization introducing a new clinical dashboard. Although not a formal implementation methodology, this framework is specific to the health care domain and draws on the experiences and evidence of other health care providers who have walked the clinical dashboard implementation path. We suggest this framework can support health care organizations in their efforts to become an LHS.

Clinical Relevance Statement

A learning health care system uses routinely collected data to continuously monitor and improve health care outcomes, yet little is known about how to implement the extraction of electronic health record (EHR) data for continuous quality improvement. This systematic review identified the scarcity of clinical outcome assessment associated with real-time clinical analytics tools, warranting further research to determine fundamental design features that enhance usability and to measure their impact on patient outcomes. A conceptual framework has been created, identifying key considerations during the design and implementation of real-time clinical analytics tools to guide decision-making for health care systems contemplating or pursuing a digitally enabled LHS.

Multiple Choice Questions

A learning health care system is:

a. designed to gather intermittent health information at discrete moments in time from health information systems to analyze and extrapolate on potential trends in patient care

b. designed to continuously gather and monitor aggregated health information from clinical practice and information systems to improve real-time clinical decision-making to improve patient care

c. designed to continuously gather and monitor health information from clinical practice and information systems to inform clinical decision making on single episodes of care for individual patients

d. designed to continuously gather information from administrative databases only to inform financial performance of health care organizations

Correct Answer: The correct answer is option b. A learning health care system is the continuous process of gathering/reviewing aggregated data within a healthcare system and providing clinicians with near real-time clinical decision support to improve the safety and quality of patient care.

Which of the following should health care organizations consider when developing and implementing a near-real-time clinical analytics product?

a. a process for maintaining data integrity

b. access should be available from outside the existing electronic health record (EHR) framework

c. include as much information as possible within the visual display

d. development should be undertaken by the data analytics team, without involvement from clinicians

Correct Answer: The correct answer is option a. Near-real-time clinical analytics tools should constantly screen for disrupted data flow to verify the accuracy of the information populated. Data element mappings within the tools are configurable items within the EHR, so data platform teams and application owners need to collaborate to ensure data integrity.

Funding Statement

Funding This study was funded by the Digital Health CRC, grant no.: STARS 0034.

Conflict of Interest None declared.

Author Contributions

Conception and design: H.C.L. and C.M.S. Data collection: J.D.P. and C.M.S. Data extraction: H.C.L., A.K.R., J.M., and A.V.D.V. Quality assessment: J.A.A. and H.C.L. Data analysis and interpretation: H.C.L., J.A.A., A.V.D.V., and C.M.S. Drafting the manuscript: H.C.L., J.A.A., A.V.D.V., and C.M.S. This article has co–first authorship by three authors. H.C.L., J.A.A., and A.V.D.V. contributed equally and have the right to list their name first in their CVs. Critical revision of article: H.C.L., J.A.A., A.V.D.V., A.K.R., J.M., O.J.C., J.D.P., M.A.B., T.H., S.S., and C.M.S. All authors contributed to the article and approved the submitted version.

Protection of Human and Animal Subjects

Human and/or animal subjects were not involved in completing the present review.

Marked authors are co–first authors.

Supplementary Material

References

- 1.Charles D, Gabriel M, Furukawa M F.Adoption of electronic health record systems among U. S. non -federal acute care hospitals: 2008–2015Accessed October 7, 2021 at:https://www.healthit.gov/sites/default/files/briefs/2015_hospital_adoption_db_v17.pdf

- 2.Carroll J S, Quijada M A. Redirecting traditional professional values to support safety: changing organisational culture in health care. Qual Saf Health Care. 2004;13 02:ii16–ii21. doi: 10.1136/qshc.2003.009514. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Aggarwal A, Aeran H, Rathee M. Quality management in healthcare: The pivotal desideratum. J Oral Biol Craniofac Res. 2019;9(02):180–182. doi: 10.1016/j.jobcr.2018.06.006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Oaten J, Stayner G, Ballard J.Baby deaths: hospital failures: an independent investigation has found a series of failures may have contributed to the deaths of 7 babies at a regional Victorian hospitalAccessed July 21, 2021 at:https://search-informit-org.ezproxy.library.uq.edu.au/doi/10.3316/tvnews.tsm201510160050

- 5.Barnett A, Winning M, Canaris S, Cleary M, Staib A, Sullivan C. Digital transformation of hospital quality and safety: real-time data for real-time action. Aust Health Rev. 2019;43(06):656–661. doi: 10.1071/AH18125. [DOI] [PubMed] [Google Scholar]

- 6.Sullivan C, Staib A, McNeil K, Rosengren D, Johnson I. Queensland digital health clinical charter: a clinical consensus statement on priorities for digital health in hospitals. Aust Health Rev. 2020;44(05):661–665. doi: 10.1071/AH19067. [DOI] [PubMed] [Google Scholar]

- 7.Mandl K D, Kohane I S, McFadden D. Scalable Collaborative Infrastructure for a Learning Healthcare System (SCILHS): architecture. J Am Med Inform Assoc. 2014;21(04):615–620. doi: 10.1136/amiajnl-2014-002727. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Olsen L A, Aisner D, McGinnis J M. Washington, DC: National Academies Press (US); 2007. Institute of Medicine (US) Roundtable on Evidence-Based Medicine. The Learning Healthcare System: Workshop Summary. [PubMed] [Google Scholar]

- 9.Platt J E, Raj M, Wienroth M. An analysis of the learning health system in its first decade in practice: scoping review. J Med Internet Res. 2020;22(03):e17026. doi: 10.2196/17026. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Li X, Zhao X, Pu W, Chen P, Liu F, He Z. Optimal decisions for operations management of BDAR: a military industrial logistics data analytics perspective. Comput Ind Eng. 2019;137:106100. [Google Scholar]

- 11.Sullivan C, Staib A, Khanna S. The National Emergency Access Target (NEAT) and the 4-hour rule: time to review the target. Med J Aust. 2016;204(09):354. doi: 10.5694/mja15.01177. [DOI] [PubMed] [Google Scholar]

- 12.Auliya R Aknuranda I, Tolle H. A systematic literature review on healthcare dashboards development: trends, issues, methods, and frameworks. Adv Sci Lett. 2018;24(11):8632–8639. [Google Scholar]

- 13.Buttigieg S C, Pace A, Rathert C. Hospital performance dashboards: a literature review. J Health Organ Manag. 2017;31(03):385–406. doi: 10.1108/JHOM-04-2017-0088. [DOI] [PubMed] [Google Scholar]

- 14.Dowding D, Randell R, Gardner P. Dashboards for improving patient care: review of the literature. Int J Med Inform. 2015;84(02):87–100. doi: 10.1016/j.ijmedinf.2014.10.001. [DOI] [PubMed] [Google Scholar]

- 15.Khairat S SDA, Dukkipati A, Lauria H A, Bice T, Travers D, Carson S S. The impact of visualization dashboards on quality of care and clinician satisfaction: integrative literature review. JMIR Human Factors. 2018;5(02):e22. doi: 10.2196/humanfactors.9328. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Maktoobi S Melchiori M.A brief survey of recent clinical dashboardsAccessed January 25, 2022 at:http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.1073.1848&rep=rep1&type=pdf

- 17.West V L, Borland D, Hammond W E. Innovative information visualization of electronic health record data: a systematic review. J Am Med Inform Assoc. 2015;22(02):330–339. doi: 10.1136/amiajnl-2014-002955. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Wilbanks B ALP, Langford P A. A review of dashboards for data analytics in nursing. Comput Inform Nurs. 2014;32(11):545–549. doi: 10.1097/CIN.0000000000000106. [DOI] [PubMed] [Google Scholar]

- 19.Veritas Health Innovation Covidence: systematic reviewAccessed January 25, 2022 at:https://unimelb.libguides.com/sysrev/covidence

- 20.Sirriyeh R, Lawton R, Gardner P, Armitage G. Reviewing studies with diverse designs: the development and evaluation of a new tool. J Eval Clin Pract. 2012;18(04):746–752. doi: 10.1111/j.1365-2753.2011.01662.x. [DOI] [PubMed] [Google Scholar]

- 21.Bersani K, Fuller T E, Garabedian P. Use, perceived usability, and barriers to implementation of a patient safety dashboard integrated within a vendor EHR. Appl Clin Inform. 2020;11(01):34–45. doi: 10.1055/s-0039-3402756. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Cox Z LP, Lewis C MNPCC, Lai P, Lenihan D JMD. Validation of an automated electronic algorithm and “dashboard” to identify and characterize decompensated heart failure admissions across a medical center. Am Heart J. 2017;183:40–48. doi: 10.1016/j.ahj.2016.10.001. [DOI] [PubMed] [Google Scholar]

- 23.Fletcher G S, Aaronson B A, White A A, Julka R. Effect of a real-time electronic dashboard on a rapid response system. J Med Syst. 2017;42(01):5. doi: 10.1007/s10916-017-0858-5. [DOI] [PubMed] [Google Scholar]

- 24.Fuller T E, Garabedian P M, Lemonias D P. Assessing the cognitive and work load of an inpatient safety dashboard in the context of opioid management. Appl Ergon. 2020;85:103047. doi: 10.1016/j.apergo.2020.103047. [DOI] [PubMed] [Google Scholar]

- 25.Mlaver E, Schnipper J L, Boxer R B. User-centered collaborative design and development of an inpatient safety dashboard. Jt Comm J Qual Patient Saf. 2017;43(12):676–685. doi: 10.1016/j.jcjq.2017.05.010. [DOI] [PubMed] [Google Scholar]

- 26.Paulson S S, Dummett B A, Green J, Scruth E, Reyes V, Escobar G J. What do we do after the pilot is done? Implementation of a hospital early warning system at scale. Jt Comm J Qual Patient Saf. 2020;46(04):207–216. doi: 10.1016/j.jcjq.2020.01.003. [DOI] [PubMed] [Google Scholar]

- 27. Schall M C, Jr, Cullen L, Pennathur P, Chen H, Burrell K, Matthews G. Usability evaluation and implementation of a health information technology dashboard of Evidence-Based Quality Indicators. Comput Inform Nurs. 2017;35(06):281–288. doi: 10.1097/CIN.0000000000000325.

- 28. Ye C, Wang O, Liu M. A real-time early warning system for monitoring inpatient mortality risk: prospective study using electronic medical record data. J Med Internet Res. 2019;21(07):e13719. doi: 10.2196/13719.

- 29. Franklin A, Gantela S, Shifarraw S. Dashboard visualizations: supporting real-time throughput decision-making. J Biomed Inform. 2017;71:211–221. doi: 10.1016/j.jbi.2017.05.024.

- 30. Ibrahim H, Sorrell S, Nair S C, Al Romaithi A, Al Mazrouei S, Kamour A. Rapid development and utilization of a clinical intelligence dashboard for frontline clinicians to optimize critical resources during Covid-19. Acta Inform Med. 2020;28(03):209–213. doi: 10.5455/aim.2020.28.209-213.

- 31. Kurtzman G, Dine J, Epstein A. Internal medicine resident engagement with a laboratory utilization dashboard: mixed methods study. J Hosp Med. 2017;12(09):743–746. doi: 10.12788/jhm.2811.

- 32. Merkel M J, Edwards R, Ness J. Statewide real-time tracking of beds and ventilators during coronavirus disease 2019 and beyond. Crit Care Explor. 2020;2(06):e0142. doi: 10.1097/CCE.0000000000000142.

- 33. Staib A, Sullivan C, Jones M, Griffin B, Bell A, Scott I. The ED-inpatient dashboard: Uniting emergency and inpatient clinicians to improve the efficiency and quality of care for patients requiring emergency admission to hospital. Emerg Med Australas. 2017;29(03):363–366. doi: 10.1111/1742-6723.12661.

- 34. Yoo J, Jung K Y, Kim T. A real-time autonomous dashboard for the emergency department: 5-year case study. JMIR Mhealth Uhealth. 2018;6(11):e10666. doi: 10.2196/10666.

- 35. Kesselheim A S, Cresswell K, Phansalkar S, Bates D W, Sheikh A. Clinical decision support systems could be modified to reduce ‘alert fatigue’ while still minimizing the risk of litigation. Health Aff (Millwood). 2011;30(12):2310–2317. doi: 10.1377/hlthaff.2010.1111.

- 36. HealthIT.gov. 2020–2025 Federal Health IT Strategic Plan. 2020. Accessed October 5, 2021 at: https://www.healthit.gov/sites/default/files/page/2020-10/Federal%20Health%20IT%20Strategic%20Plan_2020_2025.pdf

- 37. Harvey G, Kitson A. PARIHS revisited: from heuristic to integrated framework for the successful implementation of knowledge into practice. Implement Sci. 2016;11(01):33. doi: 10.1186/s13012-016-0398-2.

- 38. CFIR Research Team-Center for Clinical Management Research. Consolidated Framework for Implementation Research. Accessed July 2, 2021 at: https://cfirguide.org/

- 39. Hunter S C, Kim B, Mudge A. Experiences of using the i-PARIHS framework: a co-designed case study of four multi-site implementation projects. BMC Health Serv Res. 2020;20(01):573. doi: 10.1186/s12913-020-05354-8.

- 40. Safaeinili N, Brown-Johnson C, Shaw J G, Mahoney M, Winget M. CFIR simplified: pragmatic application of and adaptations to the Consolidated Framework for Implementation Research (CFIR) for evaluation of a patient-centered care transformation within a learning health system. Learn Health Syst. 2019;4(01):e10201. doi: 10.1002/lrh2.10201.

- 41. Waitman L R, Phillips I E, McCoy A B. Adopting real-time surveillance dashboards as a component of an enterprisewide medication safety strategy. Jt Comm J Qual Patient Saf. 2011;37(07):326–332. doi: 10.1016/s1553-7250(11)37041-9.

- 42. Moorman L P. Principles for real-world implementation of bedside predictive analytics monitoring. Appl Clin Inform. 2021;12(04):888–896. doi: 10.1055/s-0041-1735183.
