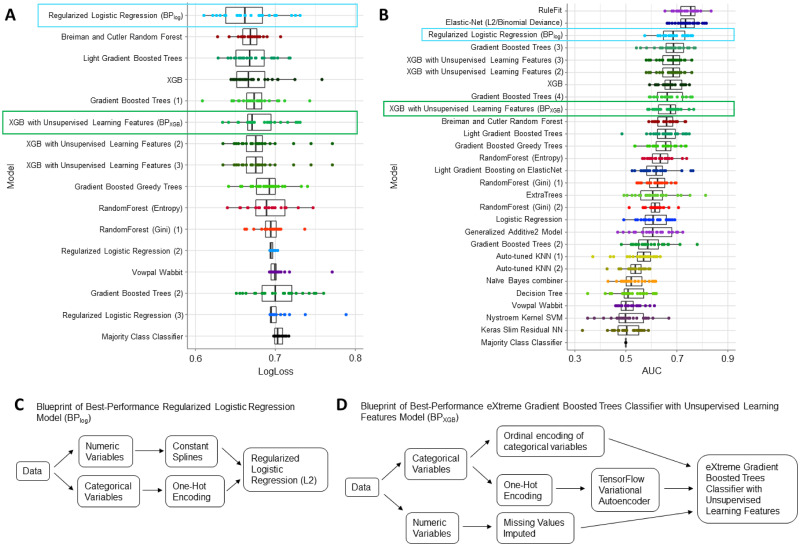

Fig 2. AutoML generated 15 models that performed better than the Majority Class Classifier model.

Each model consisted of automatically implemented preprocessing steps and an algorithm. Models were named according to their algorithm and each was assigned a unique color. Blueprints of the same algorithm class are numbered so that they can be identified across both the (A) LogLoss and (B) Area Under Curve (AUC) plots. Two models were selected for additional analysis: BPlog (blue box) and BPXGB (green box). Aggregated across 25 projects (unique partitioning arrangements of the dataset), BPlog had an average performance of 0.67 ± 0.01 LogLoss and 0.68 ± 0.02 AUC; BPXGB had an average performance of 0.68 ± 0.01 LogLoss and 0.67 ± 0.02 AUC. (C) BPlog consisted of an L2-regularized logistic regression algorithm with a notable preprocessing step for numeric variables, a quintile spline transformation. (D) BPXGB implemented an eXtreme Gradient Boosted (XGB) trees classifier with unsupervised learning features; here, "unsupervised learning features" refers to the TensorFlow Variational Autoencoder preprocessing step for categorical variables.
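For readers less familiar with the two metrics in panels (A) and (B), the following is a minimal sketch of how LogLoss and AUC can be computed and compared against a constant-prediction baseline. It is not the AutoML platform's implementation. The labels and scores are hypothetical toy values, not data from this study, and the baseline is assumed to output the class prior as the predicted probability for every row, which may differ from how the Majority Class Classifier is actually implemented.

```python
import math

def log_loss(y_true, p_pred, eps=1e-15):
    """Mean binary cross-entropy; lower is better."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)

def auc(y_true, p_pred):
    """Fraction of positive/negative pairs ranked correctly (ties count 0.5)."""
    pos = [p for y, p in zip(y_true, p_pred) if y == 1]
    neg = [p for y, p in zip(y_true, p_pred) if y == 0]
    wins = sum(1.0 if a > b else 0.5 if a == b else 0.0
               for a in pos for b in neg)
    return wins / (len(pos) * len(neg))

# Hypothetical toy labels (assumption): 6 negatives followed by 4 positives.
y = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1]

# Assumed baseline: the same probability (the class prior) for every row.
prior = sum(y) / len(y)
baseline = [prior] * len(y)
baseline_logloss = log_loss(y, baseline)
baseline_auc = auc(y, baseline)

# Hypothetical model scores (assumption) that separate the classes imperfectly.
scores = [0.2, 0.3, 0.1, 0.4, 0.6, 0.3, 0.7, 0.5, 0.8, 0.35]
model_logloss = log_loss(y, scores)
model_auc = auc(y, scores)

print(f"baseline: LogLoss={baseline_logloss:.3f}, AUC={baseline_auc:.3f}")
print(f"model:    LogLoss={model_logloss:.3f}, AUC={model_auc:.3f}")
```

A constant prediction cannot rank any positive above any negative, so its AUC is 0.5, and its LogLoss equals the entropy of the class prior. A model "performs better" than such a baseline in the sense used here when its LogLoss is lower and its AUC is higher.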