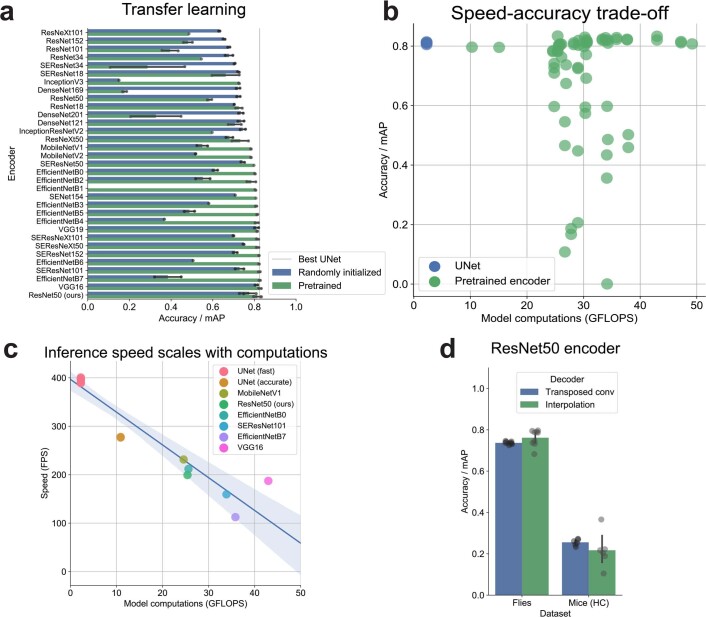

Extended Data Fig. 5. Pretrained encoder backbone models.

a, Transfer learning performance across all tested pretrained encoder model architectures. Accuracy was evaluated on the held-out test set of the flies dataset using the top-down approach (n = 2-5 models per architecture and condition; 125 total models). b, Speed versus accuracy trade-off across all tested pretrained encoder model architectures compared with the optimal UNet. Accuracy was evaluated on the held-out test set of the flies dataset using the top-down approach. Model floating-point operations (GFLOPS) were derived directly from the configured architectures (n = 2-5 models per architecture and condition; 68 total models). c, Relationship between inference speed and computations. Points correspond to the speed of the best model replicate for each architecture. The line and shaded area denote the linear fit and 95% confidence interval. d, Accuracy of our implementation of DLC ResNet50 with different decoder architectures. Points denote model training replicates (n = 3-5 models per condition; 30 total models).