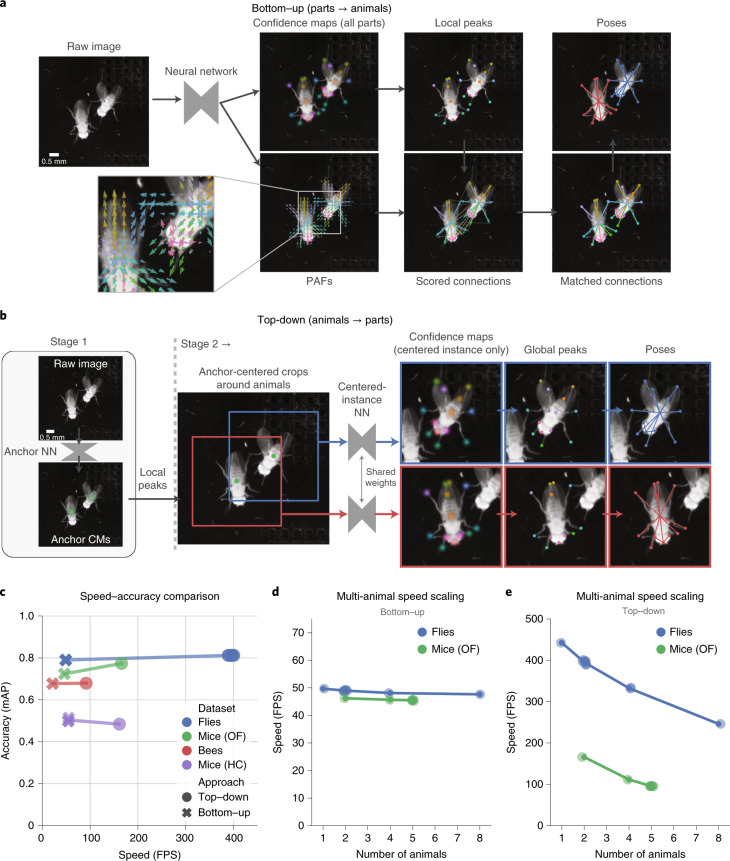

Fig. 3. Multi-animal pose-estimation approaches in SLEAP.

a, Workflow for the bottom–up approach. From left to right: a neural network (NN) takes an uncropped image as input and outputs confidence maps (CMs) and part affinity fields (PAFs); body parts are detected as local peaks in the confidence maps, and all potential connections between them are scored using the PAFs; on the basis of these connection scores, a matching algorithm assigns the connections to distinct animal instances. b, Workflow for the top–down approach. From left to right: the first-stage neural network takes an uncropped image as input and outputs confidence maps for an anchor point on each animal; the anchors are detected as local peaks in these confidence maps; a centered crop is taken around each anchor point, and the crops are provided as parallel inputs to the second-stage neural network; this network outputs confidence maps for all body parts of the centered instance only, and each body part is then detected as the global peak of its map. c, Speed versus accuracy of models trained using the two approaches across datasets. Points denote individual model replicates, with accuracy evaluated on held-out test sets. Top–down models were evaluated here without TensorRT optimization for direct comparison to the bottom–up models. HC, home cage. d, Inference speed scaling with the number of animals in the frame for bottom–up models. Points correspond to sampled measurements of batch-processing speed (batch size of 16) over 1,280 images with the highest-accuracy model for each dataset. e, Inference speed scaling with the number of animals in the frame for top–down models. Points correspond to sampled measurements of batch-processing speed (batch size of 16) over 1,280 images with the highest-accuracy model for each dataset. Top–down models were evaluated here without TensorRT optimization for direct comparison to the bottom–up models.
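
The post-network steps of the top–down workflow in panel b (local-peak detection of anchors, centered cropping, global-peak detection of a body part) can be illustrated with a minimal pure-Python sketch. This is not SLEAP's implementation: the function names, the threshold of 0.2, the strict-maximum rule over 8 neighbours and the synthetic confidence maps are all assumptions made for illustration. Both neural networks are omitted, and cropping a full-frame part map stands in for the second-stage network's output.

```python
def find_local_peaks(cm, threshold=0.2):
    """Return (row, col) of pixels at or above `threshold` that are strictly
    greater than all of their (up to 8) neighbours. Threshold is hypothetical."""
    rows, cols = len(cm), len(cm[0])
    peaks = []
    for r in range(rows):
        for c in range(cols):
            v = cm[r][c]
            if v < threshold:
                continue
            neighbours = [
                cm[rr][cc]
                for rr in range(max(r - 1, 0), min(r + 2, rows))
                for cc in range(max(c - 1, 0), min(c + 2, cols))
                if (rr, cc) != (r, c)
            ]
            if all(v > n for n in neighbours):
                peaks.append((r, c))
    return peaks


def centered_crop(image, center, size):
    """Crop a size x size window centred on `center`, zero-padding outside the image."""
    rows, cols = len(image), len(image[0])
    r0, c0 = center[0] - size // 2, center[1] - size // 2
    return [
        [image[r][c] if 0 <= r < rows and 0 <= c < cols else 0.0
         for c in range(c0, c0 + size)]
        for r in range(r0, r0 + size)
    ]


def find_global_peak(cm):
    """Return (row, col) of the single highest value in the map."""
    coords = ((r, c) for r in range(len(cm)) for c in range(len(cm[0])))
    return max(coords, key=lambda rc: cm[rc[0]][rc[1]])


def crop_to_image_coords(peak, center, size):
    """Translate a peak found inside a crop back to full-image coordinates."""
    return (peak[0] + center[0] - size // 2, peak[1] + center[1] - size // 2)


# Synthetic stand-in for the first-stage output: two animals, one anchor each.
anchor_cm = [[0.0] * 8 for _ in range(8)]
anchor_cm[2][2] = 0.9
anchor_cm[5][6] = 0.8
anchor_cm[5][5] = 0.4  # shoulder of the second peak, not a local maximum

# Synthetic stand-in for one body-part map (in full-image coordinates).
part_cm = [[0.0] * 8 for _ in range(8)]
part_cm[3][2] = 0.7  # body part of the first animal
part_cm[5][7] = 0.6  # body part of the second animal

SIZE = 3  # hypothetical crop size
anchors = find_local_peaks(anchor_cm)

detections = []
for anchor in anchors:
    crop = centered_crop(part_cm, anchor, SIZE)   # one crop per animal
    peak = find_global_peak(crop)                 # one peak per crop
    detections.append(crop_to_image_coords(peak, anchor, SIZE))

print(anchors)     # [(2, 2), (5, 6)]
print(detections)  # [(3, 2), (5, 7)]
```

Because each crop is assumed to contain exactly one centered animal, the second stage needs only a global maximum per body part and no grouping step. The bottom–up workflow in panel a instead has to score and match candidate connections across the whole frame.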