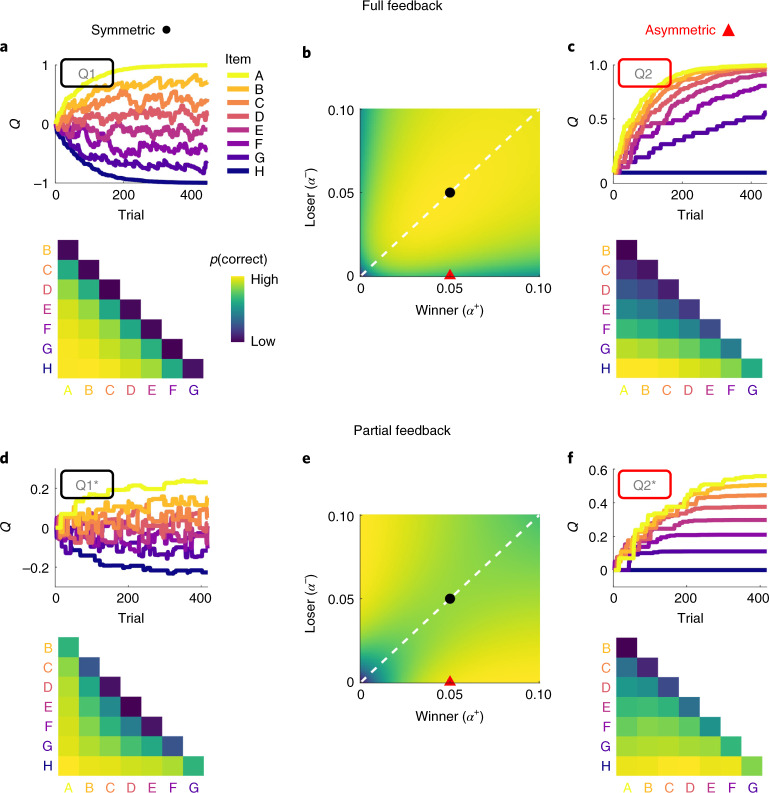

Fig. 2. Model simulations under full and partial feedback.

a, Item-level learning under full feedback (Exp. 1) simulated with symmetric Model Q1. Top, example evolution of item values Q (a.u.) over trials. Bottom, simulated probability of making a correct choice for each item pairing (aggregated across all trials in the top panel). b, Simulated task performance (mean proportion of correct choices in the second half of the trials) of asymmetric Model Q2 across different learning rates α+ (winning items) and α− (losing items). For values on the diagonal (dashed white line), Q2 is equivalent to Q1. The black dot indicates the parameters used for the simulation of symmetric learning in a. The red triangle indicates the parameters used for the simulation of asymmetric learning in c. c, Same as a, but using Model Q2 with asymmetric learning rates. d, Same as a, but for Model Q1* in a partial-feedback scenario (Exps. 2–4). e,f, Same as b and c, but using Model Q2* under partial feedback. Note that asymmetric learning leads to lower performance under full feedback (b) but improves performance under partial feedback (e). Asymmetric learning results in a compressed value structure that is asymptotically stable under partial feedback (f) but not under full feedback (c).