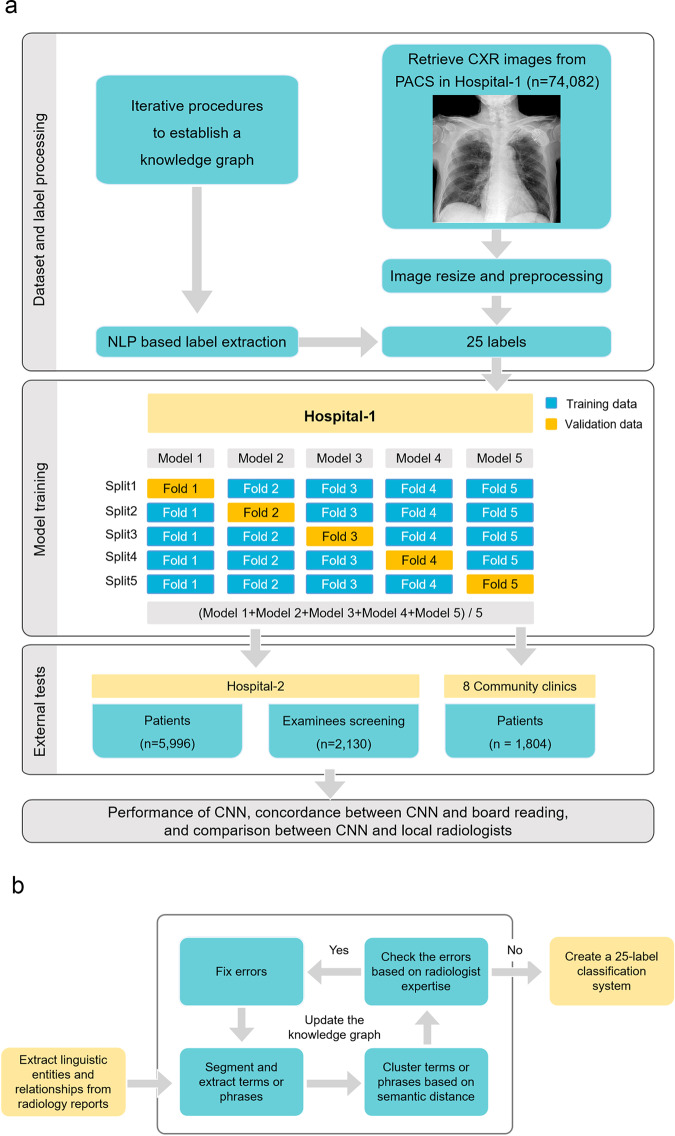

Fig. 1. Diagram of research steps.

a Workflow of dataset preparation, model training, and external testing. The training dataset consists of chest X-ray radiographs (CXR) and their corresponding diagnostic reports. Using the bidirectional encoder representations from transformers (BERT) model to identify language entities in the reports, we iteratively built a knowledge graph of the semantic relationships between language entities and finally established 25 labels representing 25 abnormal signs in CXR. After training the convolutional neural networks (CNNs) with fivefold stratified cross-validation and weakly supervised labeling, we conducted external tests in another hospital and eight community clinics. The tests covered the performance of the CNNs, the concordance between the CNNs and board reading, and the comparison between the CNNs and local radiologists.

b Workflow of image labeling based on the BERT natural language processing model with expert amendment. We used the BERT model to recognize linguistic entities, entity spans, semantic types of entities, and semantic relationships between entities. In the iterative process of establishing the knowledge graph of semantic relationships between language entities, two radiologists examined the graph, amended the extracted linguistic entities, and clarified linguistic relationships based on their clinical experience. Finally, 25 labels representing 25 abnormal signs were established.

CXR chest X-ray radiography, BERT bidirectional encoder representations from transformers, CNN convolutional neural network, PACS picture archiving and communication system, NLP natural language processing.
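The labeling step in panel b can be illustrated with a minimal sketch of how entities extracted from a report might be mapped to image-level weak labels through synonym edges of a knowledge graph. Everything here is an assumption made for illustration: the three label names, the synonym table, the negation flag, and the function `report_to_labels` are hypothetical and are not taken from the study, which uses 25 labels and a BERT model for entity recognition.

```python
from typing import Dict, List, Tuple

# Hypothetical label set for illustration only; the study defines 25 labels
# that are not listed in this caption.
LABELS: List[str] = ["consolidation", "pleural_effusion", "pneumothorax"]

# Hypothetical synonym edges of a knowledge graph: entity text -> label.
SYNONYM_TO_LABEL: Dict[str, str] = {
    "consolidation": "consolidation",
    "airspace opacity": "consolidation",
    "pleural effusion": "pleural_effusion",
    "pleural fluid": "pleural_effusion",
    "pneumothorax": "pneumothorax",
}


def report_to_labels(entities: List[Tuple[str, bool]]) -> List[int]:
    """Turn (entity text, negated?) pairs into a multi-hot label vector.

    The pairs are assumed to come from an upstream entity-recognition model;
    the negation flag is an assumption of this sketch.
    """
    present = set()
    for text, negated in entities:
        label = SYNONYM_TO_LABEL.get(text.lower().strip())
        # Skip entities that are unknown to the graph or explicitly negated.
        if label is not None and not negated:
            present.add(label)
    return [1 if name in present else 0 for name in LABELS]


# One report: a positive finding, a negated finding, and an unmapped entity.
example = [("Pleural fluid", False), ("pneumothorax", True), ("cardiomegaly", False)]
print(report_to_labels(example))
```

In this sketch, an entity that is missing from the synonym table is silently ignored; in an expert-amended workflow such as the one described above, unmapped entities would be natural candidates for radiologist review.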