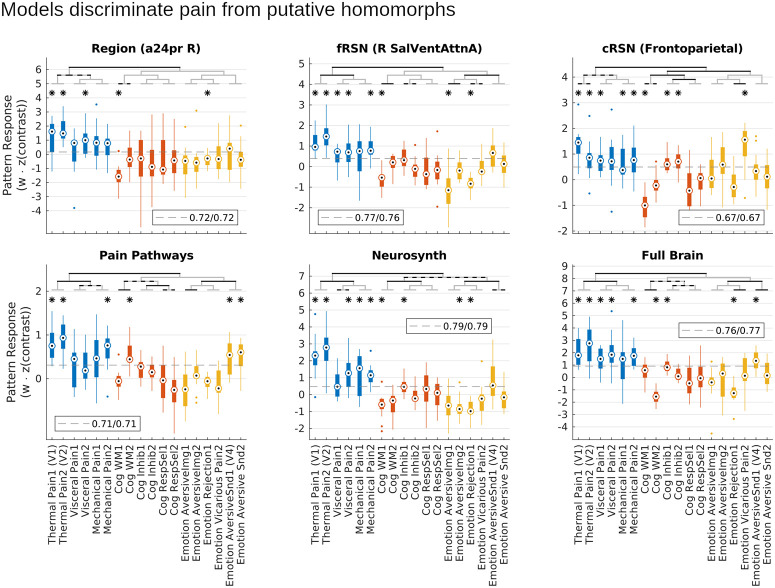

Fig 8. Distributed models were more pain-specific than local models.

In a set of 18 studies that estimated participant mean responses to 1 of 6 multimodal pain tasks or 1 of 12 nonpain tasks, models significantly discriminated pain from nonpain tasks on average (p < 10⁻⁶), even though every nonpain task shared aversive characteristics or task demands with pain. Planned contrasts showed that this discrimination was statistically significant for each of the 6 models tested. Notably, all models were trained exclusively on painful stimuli, so their ability to discriminate pain from nonpain was an emergent property. Most importantly, discrimination of pain from nonpain was greater for distributed than for local models (p = 2.2 × 10⁻⁵), indicating that the performance advantage of distributed models was due to improved pain-specific representations. Bars are grouped into pain tasks (blue), cognitive demand control tasks (red), and nonpainful aversive tasks (yellow). Planned contrasts: black brackets, p < 0.003 (Šidák threshold for 17 comparisons); gray/black brackets, p < 0.05. Post hoc t test: *p < 0.003. Dashed gray line: optimal cut point for discrimination (balancing sensitivity and specificity). Legend indicates sensitivity/specificity at the optimal threshold. Underlying data: https://github.com/canlab/petre_scope_of_pain_representation/tree/main/figure8. cRSN, coarse resting-state network; fRSN, fine resting-state network.
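The caption relies on two computed quantities: a Šidák-corrected per-comparison threshold and an "optimal cut point" that balances sensitivity and specificity. The sketch below shows one way to compute each. It rests on two assumptions. First, the Šidák formula assumes independent comparisons. Second, the caption does not name the balancing criterion, so maximizing Youden's J (sensitivity + specificity − 1) is used here as a stand-in. The function names and the toy scores are hypothetical and are not taken from the figure 8 data repository.

```python
from typing import Optional, Sequence, Tuple


def sidak_threshold(alpha: float, m: int) -> float:
    """Per-comparison threshold keeping the family-wise error rate at `alpha`
    across `m` independent comparisons (Šidák correction)."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)


def optimal_cut_point(
    scores: Sequence[float], is_pain: Sequence[bool]
) -> Tuple[float, float, float]:
    """Return (threshold, sensitivity, specificity) maximizing Youden's J.

    Assumption: Youden's J stands in for the unspecified criterion that
    "balances sensitivity and specificity". A score >= threshold is called
    'pain'. The observed scores serve as the candidate thresholds.
    """
    n_pos = sum(1 for y in is_pain if y)
    n_neg = len(is_pain) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need at least one pain and one nonpain observation")

    best: Optional[Tuple[float, float, float, float]] = None  # (J, t, sens, spec)
    for t in sorted(set(scores)):
        # True positives: pain observations at or above the threshold.
        tp = sum(1 for s, y in zip(scores, is_pain) if y and s >= t)
        # True negatives: nonpain observations below the threshold.
        tn = sum(1 for s, y in zip(scores, is_pain) if not y and s < t)
        sens, spec = tp / n_pos, tn / n_neg
        j = sens + spec - 1.0
        if best is None or j > best[0]:  # ties keep the lowest threshold
            best = (j, t, sens, spec)

    _, t, sens, spec = best
    return t, sens, spec


if __name__ == "__main__":
    # 17 comparisons at alpha = 0.05 gives roughly the 0.003 cutoff in the caption.
    print(sidak_threshold(0.05, 17))
    # Toy model responses (hypothetical): first three pain, last three nonpain.
    print(optimal_cut_point([0.9, 0.6, 0.35, 0.5, 0.3, 0.1],
                            [True, True, True, False, False, False]))
```

For 17 comparisons, 1 − 0.95^(1/17) ≈ 0.0030, which is consistent with the p < 0.003 bracket threshold above. Scanning the observed scores as candidate thresholds is the simplest exact search. A library ROC routine would give the same operating points more efficiently on large data.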